Can everyone shut up about those fabled 4TF.

You have to have insane expectations in order to rant about how underpowered the system is when it is revealed.


well there's also power to the cpu and to ram that's missing

If we are going to try to equate or rationalize flops or whatever at x TDP or what have you, the 8nm MX570 at 4.7 TFLOPs (2048 CC @ 1155MHz) is probably at the 25W TGP that Nvidia listed for it.

If that were on 5nm, then it would probably be lower.

I’m using probably because I feel like something is amiss with the information about the TGP of the MX570 and those clock speeds.
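For anyone who wants to check the 4.7 TFLOPs figure, here is a minimal sketch. It assumes the usual FP32 peak estimate of 2 operations (one fused multiply-add) per CUDA core per clock; the function name `fp32_tflops` is made up for illustration and says nothing about power draw.

```python
def fp32_tflops(cuda_cores: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPs.

    Assumption: each CUDA core retires one fused multiply-add
    (counted as 2 floating-point operations) per clock.
    """
    flops = 2 * cuda_cores * clock_mhz * 1e6
    return flops / 1e12


# MX570 numbers quoted in the post: 2048 CUDA cores at 1155 MHz
print(round(fp32_tflops(2048, 1155), 2))
```

With those inputs the estimate comes out at roughly 4.73, which lines up with the quoted 4.7 TFLOPs.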

I fixed my post.

well there's also power to the cpu and to ram that's missing

Some perspective is necessary. 1.3GHz GPU clock speed isn't "extreme optimism", certainly not for the lithography process in question. Bear in mind that Steam Deck hits up to 1.6GHz, and the 1.3 would be for a docked mode, where there would be more room for better cooling, etc. Also, on a much poorer lithography process with more heating issues, the 2017 Switch still achieved 90% of the XB1's GPU clock speed in docked mode, and 96% of the PS4's. A boost mode of 1.267GHz was also reported at one point on the Codename Mariko models. So, a relatively marginal increase on that for a much improved process is neither "extreme optimism" nor unthinkable. 1.3GHz is probably the highest estimation, in my view. I think 1-1.3GHz is likely for docked, and 500-600MHz for portable. The docked clock speed estimations would be about 60-85% of XSS, 55-70% of XSX, and 46-60% of PS5, meaning significantly lower percentages under better conditions... However, I believe the CPU will be clocked higher instead, as that's been an important upgrade on the other systems. There's enough power for graphics.

In what sense is a clock speed of 1.3GHz for the GPU in docked mode completely beyond the laws of thermodynamics? Not talking about the credibility of the leak here, but if it really is on 5nm it seems within the realm of possibility with a sub-30W power draw. It also happens to perfectly match the ratio between handheld and docked mode with handheld being "a handheld PS4".

It pushes everything into the realm of "extreme optimism" but it doesn't seem to break the laws of physics? Please, genuinely, correct me if I am wrong here.

Can you pass the links?

Yeah, I understand nothing in the page xd

Some perspective is necessary. 1.3GHz GPU clock speed isn't "extreme optimism", certainly not for the lithography process in question. Bear in mind that Steam Deck hits up to 1.6GHz, and the 1.3 would be for a docked mode, where there would be more room for better cooling, etc. Also, on a much poorer lithography process with more heating issues, the 2017 Switch still achieved 90% of the XB1's GPU clock speed in docked mode, and 96% of the PS4's. A boost mode of 1.267GHz was also reported at one point on the Codename Mariko models. So, a relatively marginal increase on that for a much improved process is neither "extreme optimism" nor unthinkable.

Exactly. That's what I mean by extreme optimism - you are the most optimistic person here, and 1.3GHz is your highest estimate - 1.3GHz is the most optimistic thing possible.

1.3GHz is probably the highest estimation, in my view.

I think 1-1.3GHz is likely for docked, and 500-600MHz for portable. The docked clock speed estimations would be about 60-85% of XSS, 55-70% of XSX, and 46-60% of PS5, meaning significantly lower percentages under better conditions...
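The percentages in that post can be roughly reproduced with a few divisions. This is only a sketch: the reference clocks below are the commonly published GPU clocks (853 MHz XB1, 800 MHz PS4, 768 MHz Switch docked, 1565 MHz XSS, 1825 MHz XSX, up to 2230 MHz PS5), not numbers taken from this thread, and `clock_ratio_pct` is a made-up helper name.

```python
# Published peak GPU clocks in MHz (assumed reference values;
# the PS5 figure is its maximum variable clock).
CURRENT_GEN_MHZ = {"XSS": 1565, "XSX": 1825, "PS5": 2230}


def clock_ratio_pct(clock_mhz: float, reference_mhz: float) -> float:
    """Return clock_mhz as a percentage of reference_mhz."""
    return 100 * clock_mhz / reference_mhz


# Speculated docked range of 1.0-1.3 GHz against each current-gen console
for name, ref in CURRENT_GEN_MHZ.items():
    low = clock_ratio_pct(1000, ref)
    high = clock_ratio_pct(1300, ref)
    print(f"{name}: {low:.0f}%-{high:.0f}%")

# 2017 Switch docked (768 MHz) against XB1 (853 MHz) and PS4 (800 MHz)
print(f"vs XB1: {clock_ratio_pct(768, 853):.0f}%")
print(f"vs PS4: {clock_ratio_pct(768, 800):.0f}%")
```

Under those assumed clocks the Switch comparison gives about 90% and 96%, and the 1-1.3GHz range lands close to the quoted 60-85%, 55-70% and 46-60% brackets, give or take a few points of rounding.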

For home consoles yeah. Although for handheld, 3ds to switch was huge..

This hardware will be the first time we’ve seen a large leap in graphic tech from Nintendo since 2012 when we went from Wii level graphics to PS360 level with the Wii U. The Switch was a step up from the Wii U no doubt but nowhere near enough for it to be PS4 level.

Honestly, the idea of playing Nintendo games with PS4 (Pro?) level graphics is amazing to me. I’ve not got a PS5 yet but whenever I boot up my Pro I still to this day think ‘damn, those graphics look great’. I can only be excited about the future possibilities.

I can’t help but feel happy that games like Breath of the Wild 2 and Metroid Prime 4 are going to benefit from this new technology as well. They’re going to look so damn nice on this thing with the increased resolutions and smooth frame rates, and maybe with some extra bells and whistles too.

Honestly, this is the most I’ve been excited about a new console for a long long time.

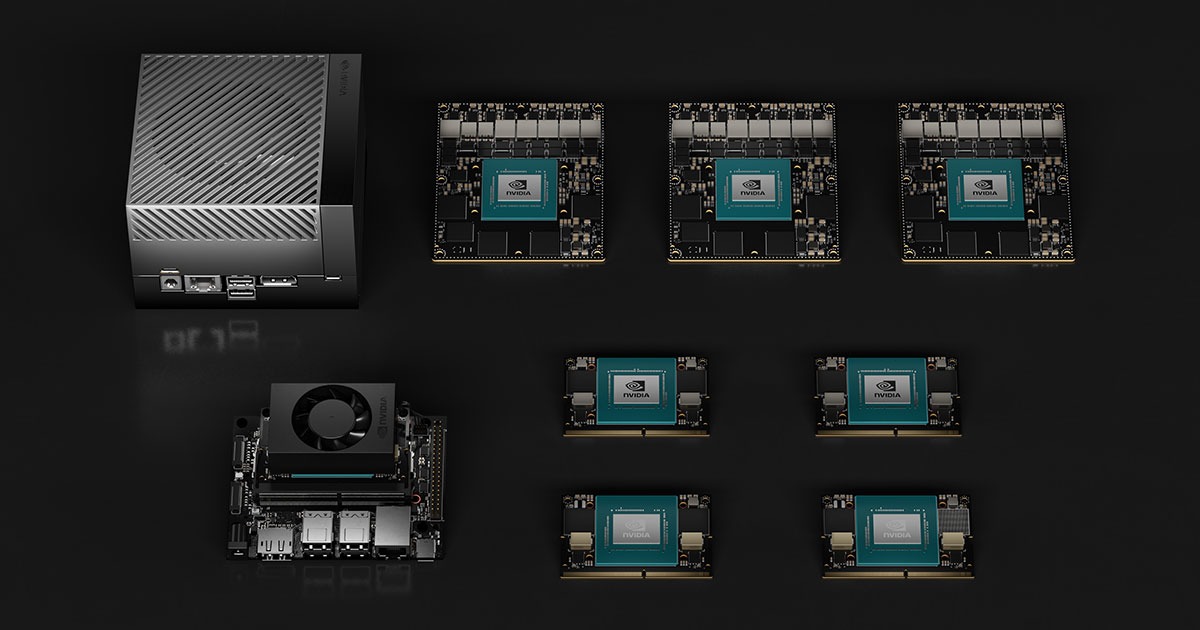

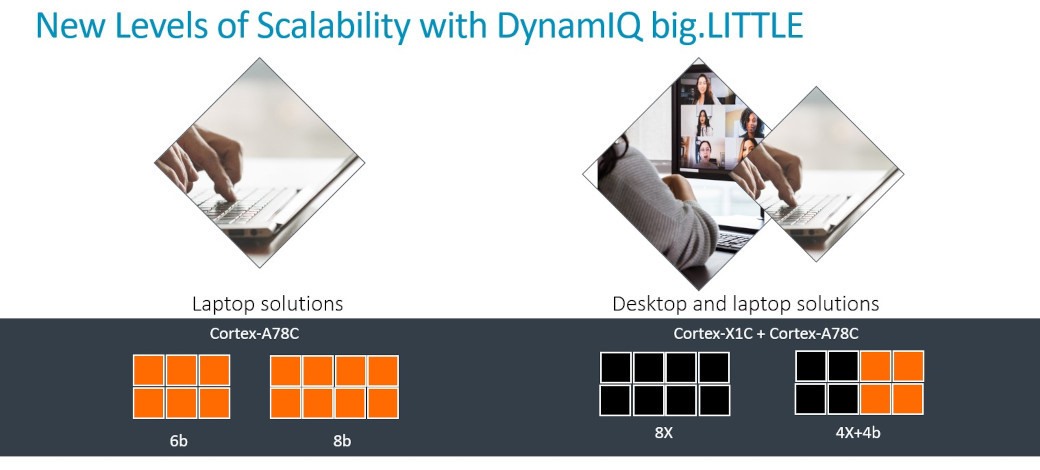

Where did you get 8MB of L3 cache from? For reference, the AGX modules with 12 A78s have 6MB of L3 cache, while the highest Orin NX module with 8 A78s has 4MB of L3. I wouldn't be surprised if we get 4MB. I do think the cache was discussed shortly after the breach, and 8 or 6MB was brought up, but some were thinking it was more likely to be on the lower end, like 4MB realistically, IIRC.

I'm not gonna definitively rule out 1.3 GHz for the GPU when docked, but my confidence in that is pretty low when combined with some default assumptions.

Said default assumptions being: 128-bit of normal LPDDR5, no more than 8 MB of L3 cache for the CPU cluster.

The ~102.4 GB/s bandwidth means that I'm not exactly confident in going above 1 GHz when docked, when assuming a similar balance of bandwidth to SM_count*clock as desktop Ampere + allocating a chunk for the CPU. I'm also assuming that 8 MB or less of L3 cache for the CPU cluster will predominantly be for the CPU's usage, despite Tegras allowing the GPU to access it (or so I've heard from this thread?).

There is that outside shot though, of LPDDR5X. Be it either 7500 MT/s (120 GB/s bandwidth) or 8533 MT/s (~136.5 GB/s bandwidth), climbing above 1 GHz does start to sound more plausible. And there is that other long shot of more L3 cache, to either reduce the CPU's share of bandwidth usage and/or be of assistance to the GPU.
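The bandwidth figures above follow from bus width times transfer rate. A minimal sketch, assuming the 102.4 GB/s number corresponds to LPDDR5 at 6400 MT/s (the post does not state the rate; it is simply the one that yields that figure on a 128-bit bus). The helper name `peak_bandwidth_gbs` is hypothetical.

```python
def peak_bandwidth_gbs(bus_width_bits: int, rate_mts: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bytes per transfer = bus width / 8
    bandwidth (MB/s)   = bytes per transfer * mega-transfers per second
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * rate_mts / 1000


# 128-bit bus at the three transfer rates discussed
for label, rate in [("LPDDR5 6400", 6400), ("LPDDR5X 7500", 7500), ("LPDDR5X 8533", 8533)]:
    print(f"{label} MT/s: {peak_bandwidth_gbs(128, rate):.1f} GB/s")
```

These are theoretical peaks only; how much of that the CPU takes, and how much the L3 cache offsets, is exactly the open question in the post.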

I knew I’d get a smart arse response from at least one comic book guy.

no


Look over there is making an assumption Nintendo and Nvidia could use the Cortex-A78C for the CPU on Drake, which allows up to 8 MB for the L3 cache.

Where did you get 8MB of L3 cache from?

You didn't count the handheld side

I knew I’d get a smart arse response from at least one comic book guy.

Oh I see.

Look over there is making an assumption Nintendo and Nvidia could use the Cortex-A78C for the CPU on Drake, which allows up to 8 MB for the L3 cache.

I expect the A78C, if they're going so far to customize the chip already. We know they're doing wild stuff like backporting Lovelace features and forward porting power management stuff. If you're going to keep your clocks and power consumption flat, then A78 doesn't have anything to offer over A78C.

It's really gonna be interesting to see how custom Drake ends up being from the Orin modules. Yeah, I remember the discussions about the A78C.

Of course, 20nm TX1 was what happened, so…

I'm skeptical of the full 1.3 GHz clock too in favor of balancing CPU speeds and the power draw at 8nm, but 5nm would be far more likely.

8nm feels like 20nm TX1 all over again.

We know they're doing wild stuff like backporting Lovelace features and forward porting power management stuff.

Well, Drake is a custom variation of Orin (here and here). And so far, Orin has one feature not present in consumer Ampere GPUs, AV1 encode support.

This is new, any sources of Drake having some Lovelace features? And what are they?

No one really knows what these Lovelace features are supposed to entail, even though Kopite7kimi mentioned that there are Lovelace features in Orin, which is apparently what Drake is based on, even though Drake is much closer to the desktop implementation than the Orin implementation of the Ampere architecture.

This is new, any sources of Drake having some Lovelace features? And what are they?

If it were announced you would see it all over the news.

Has it been announced yet?

You are correct, my brain had lodged "backported lovelace" into my brain before the NVN2 hack came out. Thank you for correcting me that we're not actually explicit about that.

No one really knows what these Lovelace features are supposed to entail, even though Kopite7kimi mentioned that there are Lovelace features in Orin, which is apparently what Drake is based on, even though Drake is much closer to the desktop implementation than the Orin implementation of the Ampere architecture.

The Rumor Mill(tm) suggests that Lovelace started out as an optimized Ampere built on TSMC 5nm before a larger rethink occurred. This is part of why I expected TSMC 7nm for Drake, simply because that and Samsung 8nm are the processes Ampere is built for.

Ampere is SM 8_6, the Orin version of Ampere is SM 8_7, and Lovelace is SM 8_9. However, Drake is SM 8_8, and it follows the desktop implementation but isn’t categorized as such.

Lovelace, for all intents and purposes, seems to me like it’s just the furthest optimized version of Ampere, and Drake is just further optimizations done to it (Ampere 8_6) before Lovelace had its further optimization from there. Which is why it’s between Orin and Lovelace.

So what's expectations like these days, will I be regretting buying an OLED in November?

He didn't.

@oldpuck Why do you say in tweet that Drake will be presented at the TGS when we all believe that it is impossible for it to be presented there?


If I could post this without tagging him I would; he's already explained that he never made such a suggestion and it's merely conjecture from that Twitter account that posted that "rumour".

Why do you say in tweet that Drake will be presented at the TGS when we all believe that it is impossible for it to be presented there?

This is me. I am the source. I am not joking.

I followed the links in the screenshots, ran some google translation, and found a table of leaked "Drake" specs, which matches my speculated table of specs from last week. It adds a few little bits of data (a row saying "ray tracing" with "High" as the value), but the other values are identical. It includes my random assumptions (that handheld Drake clocks would match TX1 docked clocks) but also my dumb mistakes, like where I scaled CPU clocks instead of leaving them stable across modes (necessary if you want your game logic to run the same in both modes).

This is a mashup of stuff we've either discovered on this thread or our random speculation to make things look "technical". This is the snake eating its own tail till it reaches its eyeballs.

I’d say it’s a stretch to call it proto-Lovelace in a sense rather than just a further optimized Ampere.

You are correct, my brain had lodged "backported lovelace" into my brain before the NVN2 hack came out. Thank you for correcting me that we're not actually explicit about that.

The Rumor Mill(tm) suggests that Lovelace started out as an optimized Ampere built on TSMC 5nm before a larger rethink occurred. This is part of why I expected TSMC 7nm for Drake, simply because that and Samsung 8nm are the processes Ampere is built for.

But perhaps Drake is proto-Lovelace in much the same way that PS5 was proto-RDNA2 - but we're very much in the "speculating" part of the speculation thread.

Soon

That is, if we knew what Lovelace even had.

He did not. He explained that someone took what he said, which was just speculation, and attributed it to Nate, and that was somehow found by this Twitter user. Nintendo isn’t part of TGS anyway, so they do not have that holding them back in case they were to do a reveal around then.

@oldpuck Why do you say in tweet that Drake will be presented at the TGS when we all believe that it is impossible for it to be presented there?

Manufacturing leaks is a youngpuck's game after all.

To be fair I did say stupid stuff in my original post which is how I know the reposted Twitter stuff came from me, but the TGS is its own stupid, not mine.

But honestly, to have my words repackaged as an Internet hoax and credited to Nate? That’s very good. To have that posted here? I’ve arrived.

All I need now is for someone to make a YouTube video about it and I can retire from the thread

This sounds petty, but I want them to announce it this year just to end the posts about how it cannot happen or would be a moronic decision. Is it likely? No idea, but there are probably plenty of ways to rationalize it.

We’re 5 years into the Switch life, it’s done incredibly well already. As another poster has pointed out above, the success of this device is likely a much higher priority than the risk (not guarantee) of cannibalizing holiday sales materially. Also Switch is going to have a stellar holiday with its current lineup - Splatoon 3, Pokémon, inevitable unannounced first party titles and a wave of quality third party goodness. New hardware on the horizon that plays (mostly?) the same games and costs a chunk more isn’t going to change that.

If I were to bet, at this stage I’m assuming we don’t hear until Jan/Feb, but I’m just tired of people acting like something is just impossible.

Anyway y’all should play Splatoon 3.

YoungPuck, Straight Outta Leaksville

Manufacturing leaks is a youngpuck's game after all.

I'm mostly worried in the case of something coming in months time, but anything closer to a year bothers me less. The OLED is easy to justify for me because it means I can upgrade my sister into my Mariko Switch.

For what it's worth, when GraffitiMAX (the moderator of the Chinese forum that is considered semi-reliable by users on here) was asked this very question after the Splatoon OLED edition was revealed, they replied with, "Go ahead and buy it".

If you're primarily a handheld player and you see enough value in the OLED model, I'd recommend the purchase. You can always trade it in/sell it if the new model comes soon after. After all, nobody really knows when the new model will launch

You can buy an OLED just fine; it’ll be obsolete in 8-14 months’ time anyway.

I'm mostly worried in the case of something coming in months time, but anything closer to a year bothers me less. The OLED is easy to justify for me because it means I can upgrade my sister into my Mariko Switch.

Nvidia mentioned BlueField-3's fabricated using TSMC's 7N process node at GTC 2021 (autumn 2021). And BlueField-3 seems to use two octa-core Cortex-A78C clusters for a total of 16 CPU cores.

Hmm, wonder what's an example of A78 on 7 nm... probably Qualcomm's Snapdragon 888.

To add to this, a reason to believe that this is the A78C is that it mentions this architecture of the ARM version in it:

Nvidia mentioned BlueField-3's fabricated using TSMC's 7N process node at GTC 2021 (autumn 2021). And BlueField-3 seems to use two octa-core Cortex-A78C clusters for a total of 16 CPU cores.

(I'm aware this is a very late reply. But I've recently remembered that Look over there was asking a question of an example of the Cortex-A78 being used on a 7 nm** chip. And I think BlueField-3 being fabricated using TSMC's 7N process node could make the possibility of Drake being fabricated using TSMC's N6 process node more likely.)

** → a marketing nomenclature used by all foundry companies

Up to 16 Armv8.2+ A78 Hercules cores (64-bit)

8MB L2 cache

16MB LLC system cache

The Cortex-A78C core is also a little different from the standard Cortex-A78. It implements instructions from newer Armv8.X architecture revisions, such as Armv8.3’s Pointer Authentication and other security-focused features. As a result, the CPU can’t be paired up with existing Armv8.2 CPUs, such as the Cortex-A55 for a big.LITTLE arrangement. We’re looking at six or eight big core only configurations. This wouldn’t be a good fit for mobile, but small core power efficiency is not so important in the laptop market.

There's mention of 6 GB of RAM in the iPhone 14 Pro's Geekbench 5 score. So the iPhone 14 Pro having 6 GB of RAM is not a rumour.

The 6GB 64 bit modules are less common, but do exist in at least a couple of phones, namely the Pixel 6a and, according to rumours, the iPhone 14 Pro (although we'll have to wait for a teardown for confirmation on that one).

Got more from another person and I wasn't expecting it. What I'm posting is what I know. I won't get more from this person; it was a random event to meet them. I'm not interested in people with 30 posts that don't believe me. Take it or don't. I don't care. If I did I'd go for twitter insider fame or sell this info to a site which would generate thousands in clicks.

Ram was increased from 6gb to 12gb during H1 2022. Clocks also much higher than expected in newer dev kits. Devs go from thinking 'pro/x' to 'wow next gen console' and not only next gen but as I said before 'this feels like the graphical bump we wanted in 2005 from ninty' type comments from more than 1 but they don't believe it will be marketed as next gen but rather as another option in current line up. I personally think they want the PS2's crown of best selling console ever.

The Wild West runs like it does on SD but at higher resolutions due to dlss and at 60fps 'crazy to see on a handheld'... 'lots of ps4/xbo ports will come' and xbss/ps5 ports more than possible due to cpu and dlss.

Developers have asked for 16gb ram... rofl again 'never happy' when it comes to ram so that says to me that the CPU and GPU are more than enough.

Again, Q1 2023 is the launch window, I think due to Fairy Sky Boy 2.

Fuck my head hurts lol. That's all for now x

Steam Deck

What is SD?

Developers: Nintendo, please put 32 GB of RAM on the devkits. Microsoft put 40 GB of RAM for the Xbox Series X devkits.

Developers have asked for 16gb ram... rofl again 'never happy' when it comes to ram so that says to me that the CPU and GPU are more than enough.

Oh thanks!

Steam Deck

I don't think 64-bit 24 Gb (3 GB) LPDDR5 modules exist. So 128-bit 6 GB of LPDDR5 of RAM seems rather suspect.

Ram was increased from 6gb to 12gb during H1 2022.

I assume he's talking about ram available to games

I don't think 64-bit 24 Gb (3 GB) LPDDR5 modules exist. So 128-bit 6 GB of LPDDR5 of RAM seems rather suspect.
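The module arithmetic behind that objection, as a small sketch. It assumes the 128-bit bus is populated with 64-bit modules of equal density, which is the configuration the posts above are discussing; `module_density_gbit` is a hypothetical helper name.

```python
def module_density_gbit(total_gb: float, bus_width_bits: int,
                        module_width_bits: int = 64) -> float:
    """Density (in gigabits) each module needs to reach total_gb.

    Assumes the bus is filled with identical modules, each
    module_width_bits wide.
    """
    modules = bus_width_bits // module_width_bits
    return total_gb * 8 / modules


# 6 GB on a 128-bit bus: two 64-bit modules of 24 Gb (3 GB) each
print(module_density_gbit(6, 128))
# 12 GB on a 128-bit bus: two 64-bit modules of 48 Gb (6 GB) each
print(module_density_gbit(12, 128))
```

So 6 GB total would need the 24 Gb modules questioned above, while 12 GB maps onto the 6 GB 64-bit modules mentioned earlier in the thread.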