oldpuck

> Doesn't Nvidia have some fancy upscaling tech that makes this discussion somewhat moot? Wouldn't all the OG Switch games potentially get upscaled to 1080p by the GPU?

No, unfortunately.

"Fancy upscaling tech" works by finding detail that is lost in the low resolution image and adding it back into the picture. Think of aliasing - aliasing happens when the underlying art assets support a higher resolution than the one you've got, and the mismatch at the edges shows up as a stair-step. Nvidia's various AI upscaling tech finds that lost data and restores it, giving you a smooth edge.

But in some images there just isn't more detail to find. Pixel art is the best example. That art was drawn exactly to the pixel grid. Upscaling the NES Super Mario Bros can't find a separate mouth and mustache for Mario because there isn't one. If you try to stick that game's art on a screen whose own grid doesn't line up with the art's original grid, you get smearing.

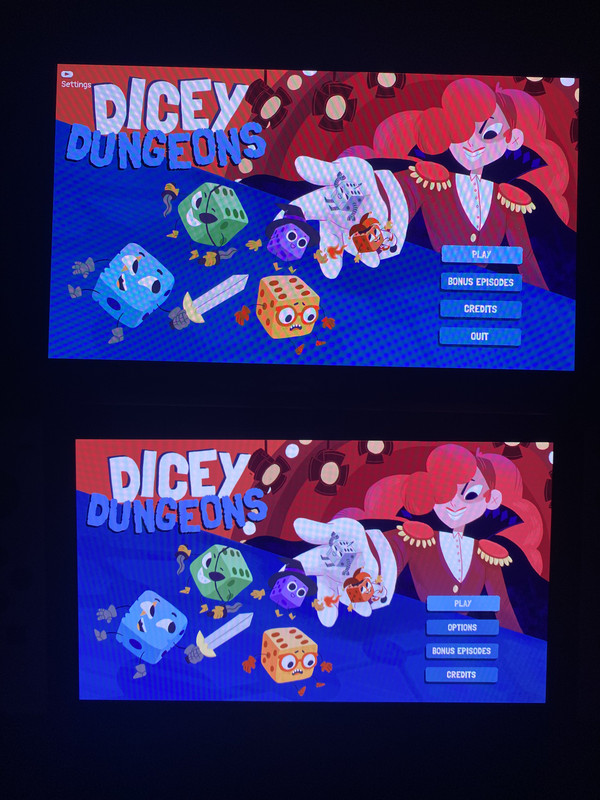
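To put rough numbers on the grid mismatch, here is a minimal Python sketch. It is not how any console or GPU scaler actually works; both function names are made up for illustration, and it only looks at a single row of pixels. The first function counts how many screen pixels each source pixel lands on with nearest-neighbor scaling, and the second does a plain linear blend, which is the "smearing" case.

```python
def nearest_neighbor_widths(src_pixels: int, dst_pixels: int) -> list[int]:
    """Count how many destination pixels each source pixel covers
    when one row is scaled with nearest-neighbor sampling."""
    widths = [0] * src_pixels
    for x in range(dst_pixels):
        # Map the center of destination pixel x back to a source index
        # (integer math, equivalent to floor((x + 0.5) * src / dst)).
        src = ((2 * x + 1) * src_pixels) // (2 * dst_pixels)
        widths[src] += 1
    return widths


def linear_resample(row: list[float], dst_len: int) -> list[float]:
    """Resample one row of brightness values with linear interpolation."""
    src_len = len(row)
    out = []
    for x in range(dst_len):
        # Position of this destination pixel's center in source coordinates.
        pos = (x + 0.5) * src_len / dst_len - 0.5
        pos = min(max(pos, 0.0), src_len - 1)
        left = int(pos)
        right = min(left + 1, src_len - 1)
        t = pos - left
        out.append(row[left] * (1 - t) + row[right] * t)
    return out


# 1.5x (the 720p -> 1080p ratio): source pixels alternate between 1 and 2 wide.
print(nearest_neighbor_widths(8, 12))   # -> [1, 2, 1, 2, 1, 2, 1, 2]
# 2x: every source pixel is exactly 2 wide, so the grid lines up.
print(nearest_neighbor_widths(8, 16))   # -> [2, 2, 2, 2, 2, 2, 2, 2]
# Blending instead: a hard black/white pattern picks up in-between grays.
print(linear_resample([0, 255, 0, 255], 6))
```

At 1.5x you get to pick your poison: uneven pixel widths if you keep things sharp, or grays that weren't in the art if you blend. At an integer scale like 2x the first problem goes away entirely.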

This isn't just a pixel art problem. Even in 3D games, if you have a texture, DLSS can't invent a higher resolution version. And if the art was carefully created for a specific screen resolution, uprezzing it to something that doesn't match will introduce artifacts. It's just less of an issue in most 3D games because the camera moving around means that you're rarely in that position.

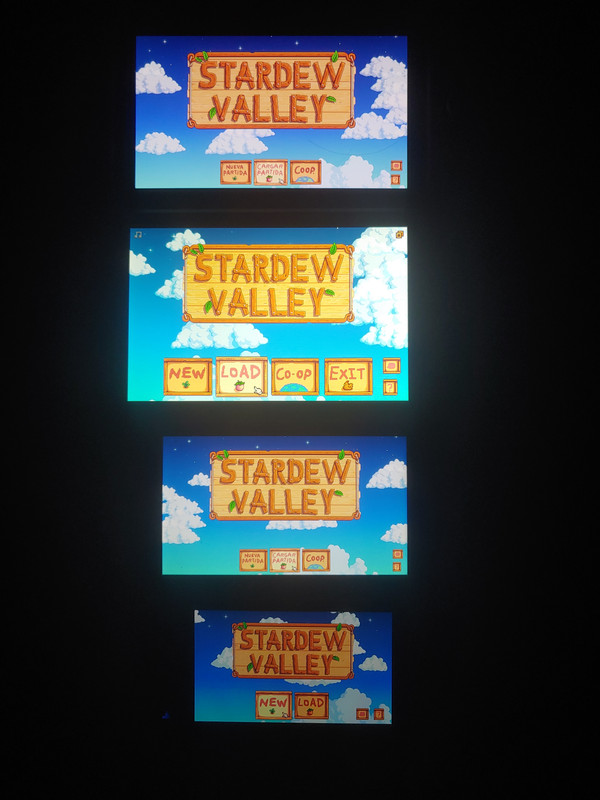

Individual users have different tolerances for these artifacts, but they are artifacts. Individual users may have different preferences for trading off these artifacts for other advantages - like aging eyes not having to fight a small screen. Individual games have different levels of artifacting, depending on the nature of the assets, the engine, and the visual style.

Switch is a console with a strong retro-oriented subset of fans. Those fans are going to be most hit by the 720p->1080p shift. I think offering a "pixel perfect" BC mode, off by default, would be a pretty reasonable move. But I can imagine Nintendo being afraid of folks turning it on by accident and wondering why all of their games only take up two thirds of the screen.
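For the arithmetic behind "two thirds of the screen", here is a small Python sketch of what a "pixel perfect" mode would have to do. This is purely hypothetical, not anything Nintendo has described; `pixel_perfect_layout` and its return fields are names I made up. It picks the largest whole-number scale that fits and centers the result.

```python
from fractions import Fraction


def pixel_perfect_layout(src_w: int, src_h: int, dst_w: int, dst_h: int) -> dict:
    """Largest integer scale of the source image that fits the screen,
    plus the borders needed to center it. Hypothetical helper."""
    scale = min(dst_w // src_w, dst_h // src_h)
    if scale < 1:
        raise ValueError("source image is larger than the screen")
    out_w, out_h = src_w * scale, src_h * scale
    return {
        "scale": scale,
        "width": out_w,
        "height": out_h,
        "border_x": (dst_w - out_w) // 2,  # empty columns on each side
        "border_y": (dst_h - out_h) // 2,  # empty rows top and bottom
        "area_fraction": Fraction(out_w * out_h, dst_w * dst_h),
    }


# A 720p image on a 1080p screen: only 1x fits.
print(pixel_perfect_layout(1280, 720, 1920, 1080))
# A 720p image on a 4K screen: 3x fits exactly, no borders.
print(pixel_perfect_layout(1280, 720, 3840, 2160))
```

On a 1080p screen the 720p image stays at 1x with 320 pixel borders left and right and 180 pixel borders top and bottom. That is two thirds of the screen in each direction, which works out to only 4/9 of the actual screen area.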

I worked phone tech support for years, and the most common calls were "I accidentally turned on a useful accessibility feature, and now I think your service is broken." Well, second most, this was the early 2000s so "grandma has a thousand viruses from porn her grandson looked at" ranked higher, but the accessibility thing was a decent second.