StarTopic Future Nintendo Hardware & Technology Speculation & Discussion |ST| (Read the staff posts before commenting!)

- Thread starter Dakhil

Piranha Plant

Tomorrow.

Is another day.

Smash Kirby

Bob-omb

People thought they getting a soft wlw wholesome lesbian anime.I mean, it is Gundam, why are people surprised?

lemonfresh

#Team2024

- Pronouns

- He/Him

Reset the clockTomorrow.

ziggyrivers

thunder

- Pronouns

- He/him/his

Daylight Saving Times?Reset the clock

Supreme Overlord

Look on my Works, ye Mighty, and despair!

- Pronouns

- he/him

Reset the clock

SiG

Chain Chomp

This isn't ResetEra. There's nothing to reset.Reset the clock

Tentacle-tropes

Kremling

Bdays in this one were especially cursedThat looks like a cute looking anim---TRAUMATISED

Dark Cloud

Warpstar Knight

My head tells me it’s releasing H2 2024, but my heart wants H1 2024.

- Pronouns

- He/Him

My heart has been saying six months out since 2021My head tells me it’s releasing H2 2024, but my heart wants H1 2024.

ZachyCatGames

Cappy

You'd think, but nahNot to mention, you’d better hope the Firmware for the OS is pretty much ready to go before mass production.

~August 2016: Switch begins retail MP

October 2016: Switch is publicly revealed

November 2016: Switch fw v1.0.0 is compiled

XRTerra

Peter. Welcome to fortnite👍

Is there a consensus on what the NG Switch(or NX 2, or Switch 2) hardware will be? I personally have my own guess involving the Tegra T239 that was leaked last year. For example, wikipedia lists the T239 as having 12 SMs, or 1536 CUDA/Shader cores. Then, looking at the closest in terms of gpu performance, the AGX Orin 32 GB, it'll have 12 RT cores and 44 tensor cores as well(good on nvidia for making very easily scalable SoCs). TFlops wise, despite not being the best indicator of real world performance, will be anywhere from 2.5 to 2.9. Most likely just above 2, or even on par with the PS4 in handheld mode. Ram wise, I think it'll be 8-12 gigabytes. CPU wise, it'll be on par, but most likely better, than the CPU found in the Snapdragon 888. Probably better because it has active cooling. I have no idea on what the screen size or storage will be but I don't want to make any unreasonable estimates other than maybe a 900p OLED screen with 128/256 gigs of storage.

Feature wise, I'll bet that DLSS will be HEAVILY abused by developers to make games run on par with, if not better than, their Xbox Series S versions. Fortnite at 1080p with Nanite and Lumen at 60 fps in docked with no other graphical options isn't unreasonable, seeing as Nintendo probably wouldn't add 120Hz support (that's not really a Nintendo thing to do despite the hardware definitely supporting it). Speaking of raytracing, Nvidia is really good at it. So it also wouldn't be unreasonable to assume that the NG Switch's raytracing is potentially on par with, or slightly worse than, the Series S in docked mode. I don't think many games will support raytracing in portable mode without locking the framerate to 30 fps. VRR would also be an amazing feature to have, as games that normally run at 30 max could then go to 40 or even 50 at times, though I don't think Nintendo really cares too much despite how much of an improvement it is to games running on the PS5 (Spider-Man Miles Morales runs at 40-50 fps in Fidelity mode with VRR on!) or XSX. But it could be beneficial, as it means that for open world games developers can make the game look as nice as they can, and as long as it runs at LEAST at 30 fps, VRR takes care of the rest.

One final note about DLSS, it was stated back in August of 2022 that, in handheld mode, the Switch 2 is PS4 level without DLSS, and PS4 Pro level WITH DLSS. If that means "slightly better than ps4 in handheld, slightly better than ps4 pro with dlss" or "4k 30/60 in handheld mode" is up to interpretation, especially considering that there's no way Nintendo would put a 4k screen on a 7-8 inch device.

NSO will probably carry over, though I'd imagine it would just be called "Nintendo Online"

MadMarvainy

Moblin

- Pronouns

- He/Him

Assuming that Microsoft and Activision plan to make Call of Duty a cross-gen game for the Nintendo Switch and Nintendo's new hardware, I do wonder if Microsoft and Activision plan to develop a Nintendo Switch specific Call of Duty.

Big news and huge implications regarding CoD on Drake.

I have no doubt in my mind that Diablo IV will be arriving on Drake at some point as well. Blizzard seems really good about getting certain games on Switch (Overwatch and Diablo III). Also, doesn't MS still have a hurdle with the CMA in the UK?

I've been there since the direct was announced. Became clear to me Nintendo was going to treat this year like normal(for the most part) so I moved completely to 2024. Then the direct itself pretty much confirmed my feelings.My head tells me it’s releasing H2 2024, but my heart wants H1 2024.

Smile

Piranha Plant

That's cutting it much closer than I expected.

D

Deleted member 645

Guest

SO ready to play call of duty in bed on my Nintendo gaming device.

Hartmann

Piranha Plant

Samsung Electronics’ Foundry Yield Above 75% for 4-Nanometer Process

Recently, Samsung Electronics has improved its process yield (the ratio of good products) to over 75% for its 4-nm process, leading to speculation that it coul

Add: I found an interesting site, not related to Switch NG. This site leaks new SoC/AP from various manufacturers.

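Fabless and foundry news briefs. (2023.07.09. Samsung, AMD)

- If you repost this, please credit the secondary source. - Samsung: Samsung 4nm, 3nm (3GAP) process mobile/wearable AP. A 3nm mobile AP seems certain to come out; the question is whether the wearable AP process is 3nm, 4nm, or both. Samsung's wearable AP release cycle is longer than that of typical mobile APs (product characteristics are probably part of the reason), so wearable APs adopt new processes quickly, right after the flagship. Considering that there is still no 4nm product, it looks like it will come out on the 3GAP process. Harman's Samsung AP work history. 5nm mobile AP ...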

Kazuyamishima

Moblin

- Pronouns

- He

You must be new here lol

XRTerra

Peter. Welcome to fortnite👍

Yeah, did somebody already say everything I just said but better?You must be new here lol

We have been talking about the T239 since kopite7kimi leaked its existence in 2021; he said that Nintendo would use it.

Otherwise everything from your post is basically what this thread has been talking about for the last year and half.

Nvidia in talks to be an anchor investor in Arm IPO

World’s most valuable semiconductor group discusses acquiring stake in SoftBank-owned chip designer ahead of New York listing

www.ft.com

Chip designer Arm is in talks to bring in Nvidia as an anchor investor, while the SoftBank-owned company presses ahead with plans for a New York listing as soon as September, several people briefed on the talks said.

Nvidia, the world’s most valuable semiconductor company, was forced last year to abandon its planned $66bn acquisition of Arm after the deal was challenged by regulators.

The Silicon Valley-based chipmaker is one of several existing Arm partners, including Intel, that the UK-based company is hoping will take a long-term stake at the initial public offering stage, according to the people.

The prospective investors are still negotiating with Arm over its valuation. One person familiar with the discussions said Nvidia wanted to come in at a share price that would put Arm’s total value at $35bn to $40bn, while Arm wants to be closer to $80bn.

The aim of bringing in large anchor investors as Arm launches an IPO in New York would be to help to support the stock as SoftBank, which bought Arm for £24bn ($32bn) in 2016, sells down its stake.

Many private tech companies and their advisers are watching closely to see if Arm can succeed in launching its IPO in 2023 after a year-long slump in new listings.

Securing the advance support of a few anchor investors is a popular tactic during difficult IPO markets, serving as a way to ensure demand and reassure other potential investors.

Arm and Nvidia declined to comment. A person close to the situation said the talks had not been concluded and might not lead to an investment.

Arm is expected to be the most valuable company to go public in the US since automaker Rivian, which listed with an initial market capitalisation of $70bn in late 2021.

It is widely considered to be a less risky prospect than many IPO candidates given its previous record as a public company.

People close to SoftBank said its founder Masayoshi Son has been personally involved in seeking anchor investors for Arm. Son has been focusing on expanding the chip designer’s revenue ahead of its IPO. SoftBank declined to comment.

Arm and Nvidia have contacted regulators in the US to smooth over any potential concerns about what is likely to be a small minority investment, in the low hundreds of millions of dollars, according to people close to the discussions.

When Nvidia first announced plans to take over Arm in September 2020, competition authorities in the US and Europe objected. They said the deal might restrict rivals' access to Arm's intellectual property, which can be found in the vast majority of smartphones and a growing portion of the automotive market, as well as give Nvidia access to competitively sensitive information.

Nvidia is already an Arm customer but its talks to invest in the company point to bigger ambitions to expand from its core business in "graphics processing units" — dedicated chips for accelerating specialised tasks, including 3D rendering and training artificial intelligence — into the "central processing units" that handle most other computing functions.

One person familiar with the plans said Arm was keen to bring in Nvidia in its bid as a way to position AI as central to the UK group's growth plans. "AI will be every third word in the offering document," the person said. "Nvidia is so important as its involvement implies AI."

The move would bring Nvidia into closer competition with Intel, whose CPUs have long dominated the PC and data centre markets.

Nvidia, which became the first chipmaker to hit a $1tn valuation in May, recently produced its first CPU using Arm's designs as part of a so-called superchip, dubbed Grace Hopper and designed for artificial intelligence and high-performance computing.

Global listing volumes plummeted last year as investors were put off by falling stock prices, rising market volatility and the uncertain economic outlook. However, activity has begun to pick up in recent weeks, thanks in part to a broader upswing in stock prices led by Nvidia.

Additional reporting by Richard Waters

KeijiKG

Moblin

I believe they meant 50 year anniversary, not 50+10 years from now.See you in 2083 then.

pelusilla6

Bob-omb

Yeah, did somebody already say everything I just said but better?

What you have read has probably come from users of this thread

Kazuyamishima

Moblin

- Pronouns

- He

Oldpuck, concert and multiple other users have done extensive write-ups and theorizing on this topic, with beautiful wording and usually fairly easy-to-understand terms. You should be able to find them in this thread.

Pour yourself a drink and sit down in a good chair to read this excellent long argument for what clocks it will run at.

My guess is currently 1.7 Tflops in portable mode (12 SMs @ 550MHz) and 3.4 Tflops docked (12 SMs @ 1.1GHz).
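For anyone wanting to check where those two figures come from, here's a minimal sketch of the usual FP32 throughput arithmetic. It assumes 128 CUDA cores per SM (the 1536 cores / 12 SMs figure quoted earlier in the thread) and counts a fused multiply-add as two operations per core per clock; the function name is just for illustration.

```python
# Assumption: 128 FP32 cores per SM (1536 cores / 12 SMs, as quoted in the thread).
CORES_PER_SM = 128
# Assumption: one fused multiply-add per core per clock, counted as 2 flops.
FLOPS_PER_CORE_PER_CLOCK = 2

def fp32_tflops(sm_count, clock_ghz):
    """Theoretical peak FP32 throughput in Tflops."""
    gflops = sm_count * CORES_PER_SM * FLOPS_PER_CORE_PER_CLOCK * clock_ghz
    return gflops / 1000.0

portable = fp32_tflops(12, 0.55)  # 12 SMs @ 550MHz
docked = fp32_tflops(12, 1.1)     # 12 SMs @ 1.1GHz

print(f"portable: {portable:.2f} Tflops, docked: {docked:.2f} Tflops")
```

Rounded to one decimal place, these come out at the 1.7 and 3.4 Tflops guessed above.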

To explain my reasoning, let's play a game of Why does Thraktor think a TSMC 4N manufacturing process is likely for T239?

The short answer is that a 12 SM GPU is far too large for Samsung 8nm, and likely too large for any intermediate process like TSMC's 6nm or Samsung's 5nm/4nm processes. There's a popular conception that Nintendo will go with a "cheap" process like 8nm and clock down to oblivion in portable mode, but that ignores both the economic and physical realities of microprocessor design.

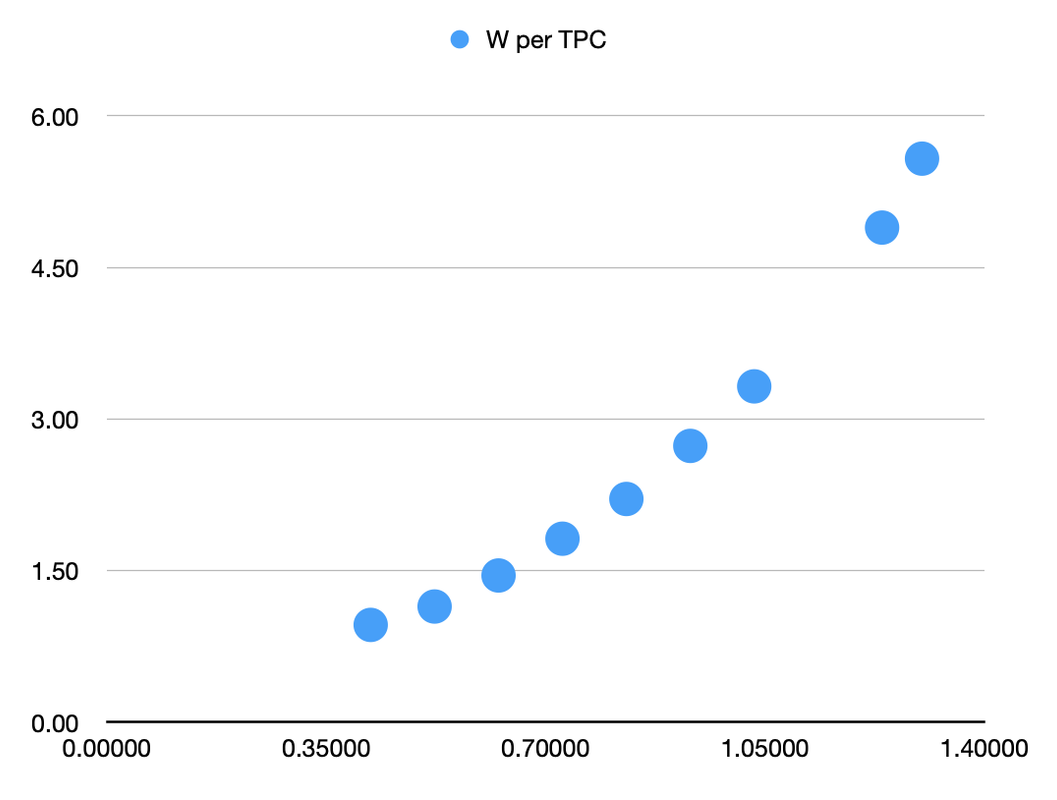

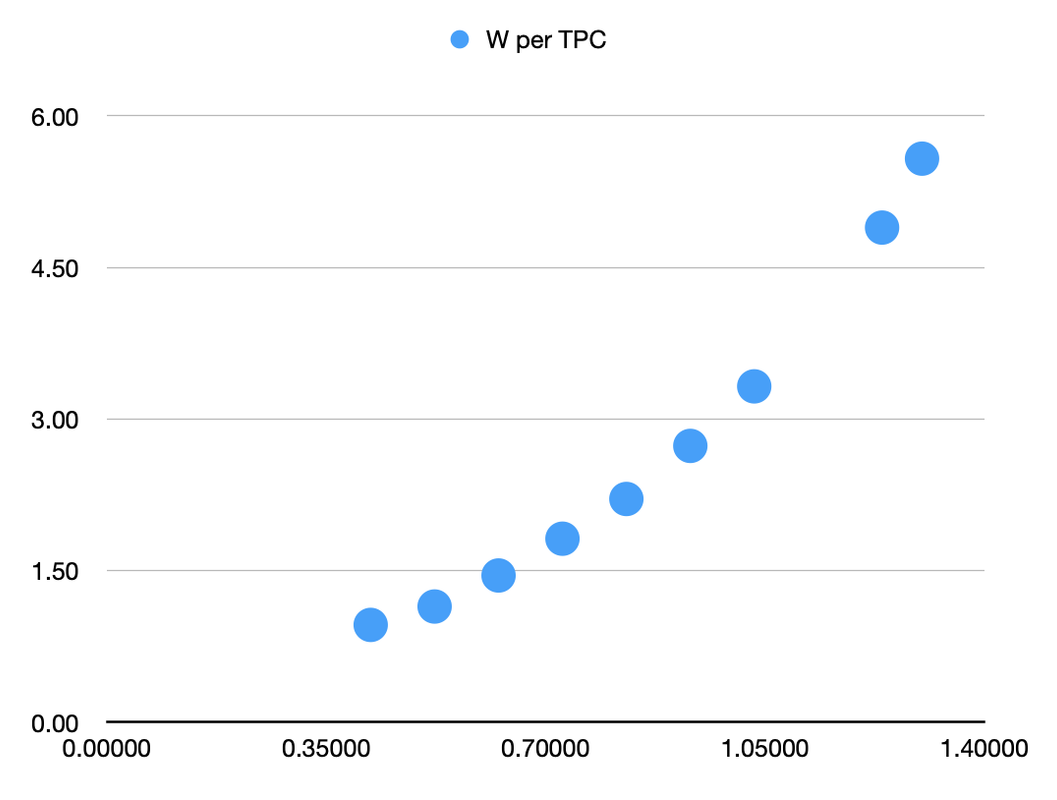

To start, let's quickly talk about power curves. A power curve for a chip, whether a CPU or GPU or something else, is a plot of the amount of power the chip consumes against the clock speed of the chip. A while ago I extracted the power curve for Orin's 8nm Ampere GPU from a Nvidia power estimator tool. There are more in-depth details here, here and here, but for now let's focus on the actual power curve data:

Code:
Clock (GHz)   W per TPC
0.42075       0.96
0.52275       1.14
0.62475       1.45
0.72675       1.82
0.82875       2.21
0.93075       2.73
1.03275       3.32
1.23675       4.89
1.30050       5.58

The first column is the clock speed in GHz, and the second is the Watts consumed per TPC (which is a pair of SMs). Let's create a chart for this power curve:

We can see that the power consumption curves upwards as clock speeds increase. The reason for this is that to increase clock speed you need to increase voltage, and power consumption is proportional to voltage squared. As a result, higher clock speeds are typically less efficient than lower ones.

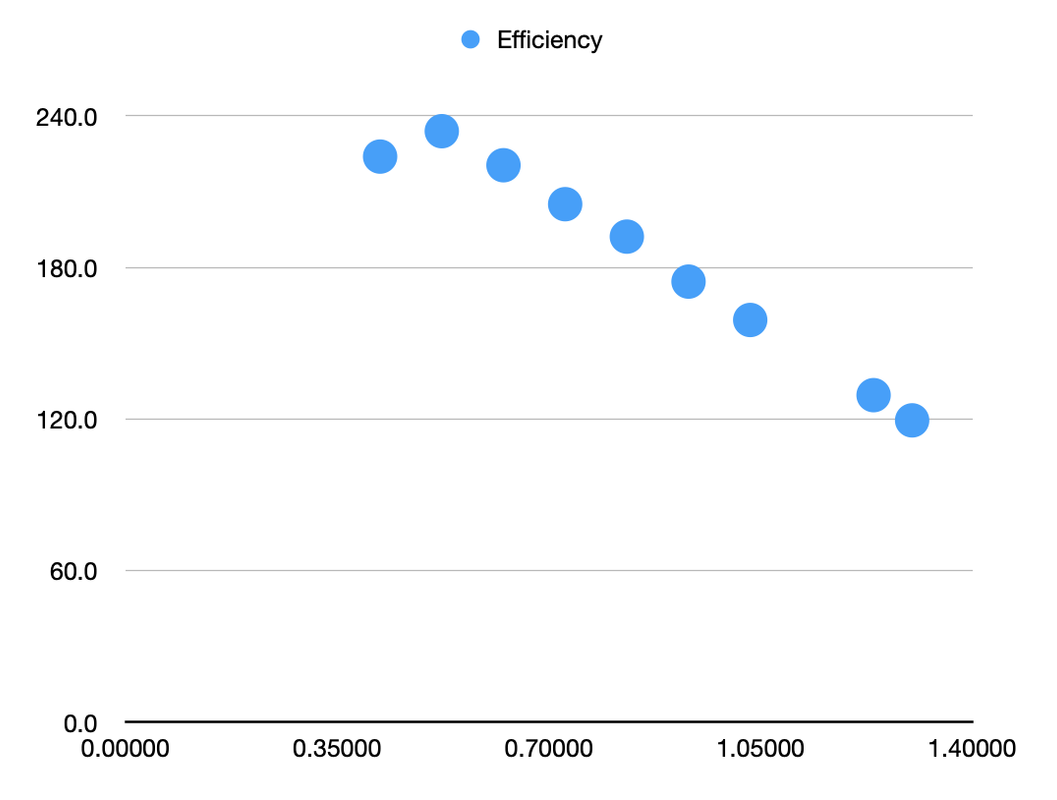

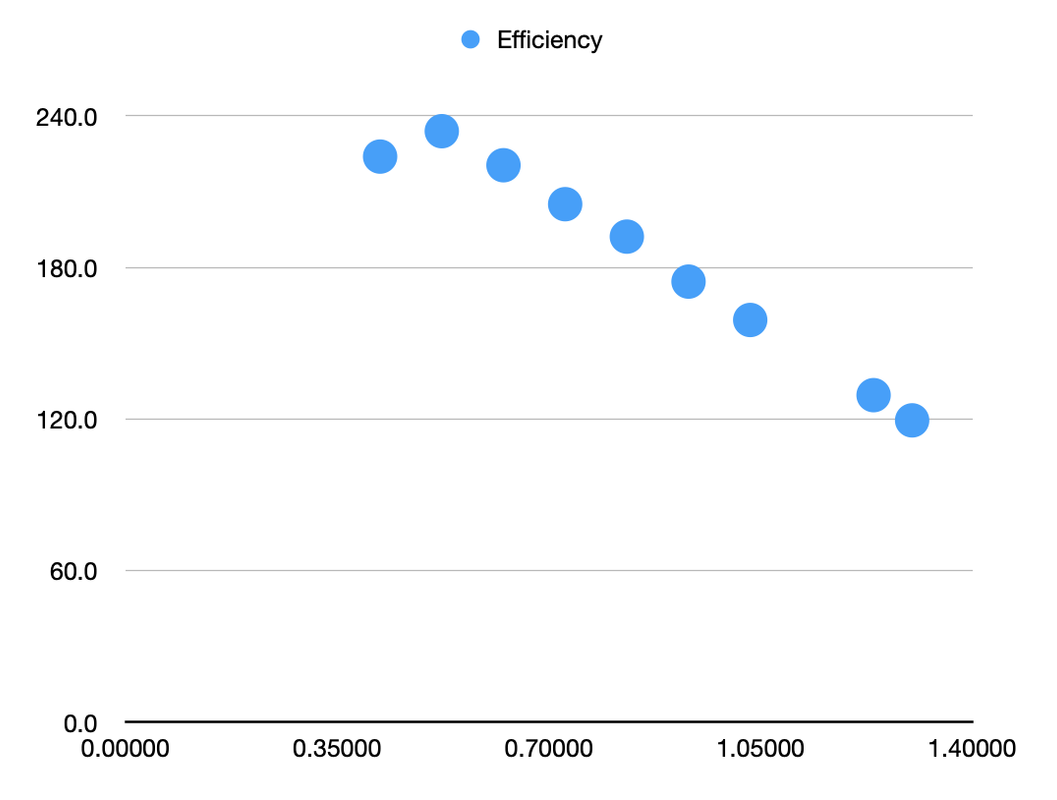

So, if higher clock speeds are typically less efficient, doesn't that mean you can always reduce clocks to gain efficiency? Not quite. While the chart above might look like a smooth curve, it's actually hiding something; at that lowest clock speed of 420MHz the curve breaks down completely. To illustrate, let's look at the same data, but chart power efficiency (measured in Gflops per Watt) rather than outright power consumption:

There are two things going on in this chart. For all the data points from 522 MHz onwards, we see what you would usually expect, which is that efficiency drops as clock speeds increase. The relationship is exceptionally clear here, as it's a pretty much perfect straight line. But then there's that point on the left. The GPU at 420MHz is less efficient than it is at 522MHz, why is that?
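The efficiency chart can be roughly reproduced from the power curve data with a few lines of Python. This is only a sketch: it assumes 128 cores per SM and 2 flops per core per clock (so 512 Gflops per TPC per GHz), which is the usual Ampere arithmetic but isn't stated in the table itself.

```python
# Orin 8nm Ampere power curve from the post: (clock in GHz, Watts per TPC).
POWER_CURVE = [
    (0.42075, 0.96), (0.52275, 1.14), (0.62475, 1.45),
    (0.72675, 1.82), (0.82875, 2.21), (0.93075, 2.73),
    (1.03275, 3.32), (1.23675, 4.89), (1.30050, 5.58),
]

# Assumption: a TPC is 2 SMs x 128 cores x 2 flops per clock.
GFLOPS_PER_TPC_PER_GHZ = 2 * 128 * 2

def gflops_per_watt(clock_ghz, watts_per_tpc):
    """Power efficiency of one TPC at a given clock."""
    return GFLOPS_PER_TPC_PER_GHZ * clock_ghz / watts_per_tpc

efficiency = [(clock, gflops_per_watt(clock, watts)) for clock, watts in POWER_CURVE]
best_clock, best_efficiency = max(efficiency, key=lambda point: point[1])

for clock, eff in efficiency:
    print(f"{clock * 1000:7.1f} MHz  {eff:6.1f} Gflops/W")
print(f"most efficient measured clock: {best_clock * 1000:.0f} MHz")
```

Of the measured points, the 522MHz one comes out on top, with 420MHz below it and every higher clock falling away steadily.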

The answer is relatively straight-forward if we consider one important point: there is a minimum voltage that the chip can operate at. Voltage going up as clock speeds increase means efficiency gets worse, and voltage going down as clock speeds decrease means efficiency gets better. But what happens when you want to reduce clocks but can't reduce voltage any more? Not only do you stop improving power efficiency, but it actually starts to go pretty sharply in the opposite direction.

Because power consumption is mostly related to voltage, not clock speed, when you reduce clocks but keep the voltage the same, you don't really save much power. A large part of the power consumption called "static power" stays exactly the same, while the other part, "dynamic power", does fall off a bit. What you end up with is much less performance, but only slightly less power consumption. That is, power efficiency gets worse.

So that kink in the efficiency graph, between 420MHz and 522MHz, is the point at which you can't reduce the voltage any more. Any clocks below that point will all operate at the same voltage, and without being able to reduce the voltage, power efficiency gets worse instead of better below that point. The clock speed at that point can be called the "peak efficiency clock", as it offers higher power efficiency than any other clock speed.
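To see why a voltage floor produces that kink, here's a toy model, not real silicon data. Every constant in it is hypothetical and picked only to show the shape: voltage is flat below a knee clock and rises linearly above it, dynamic power scales with voltage squared times clock, and static power scales with voltage squared.

```python
# Toy model only: all constants are hypothetical, chosen to illustrate the
# shape of the curve. They are NOT measured values for Orin or T239.
V_MIN = 0.60          # minimum operating voltage in volts (made up)
KNEE_GHZ = 0.47       # clock at which the voltage floor is reached (made up)
VOLTS_PER_GHZ = 0.50  # voltage increase per GHz above the knee (made up)
C_DYNAMIC = 4.0       # dynamic power coefficient (made up)
C_STATIC = 1.0        # static power coefficient (made up)

def voltage(clock_ghz):
    """Voltage needed for a clock; it cannot drop below V_MIN."""
    return max(V_MIN, V_MIN + VOLTS_PER_GHZ * (clock_ghz - KNEE_GHZ))

def power_w(clock_ghz):
    """Dynamic power (scales with clock) plus static power (does not)."""
    v = voltage(clock_ghz)
    dynamic = C_DYNAMIC * v ** 2 * clock_ghz
    static = C_STATIC * v ** 2
    return dynamic + static

def efficiency(clock_ghz):
    """Performance per Watt, taking performance as proportional to clock."""
    return clock_ghz / power_w(clock_ghz)

clocks_mhz = range(300, 1001, 10)
peak_mhz = max(clocks_mhz, key=lambda mhz: efficiency(mhz / 1000))
print(f"peak efficiency clock in this toy model: {peak_mhz} MHz")
```

In this sketch the peak lands exactly on the knee: below it the static term is spread over less work, and above it the rising voltage dominates.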

How does this impact how chips are designed?

There are two things to take from the above. First, as a general point, every chip on a given manufacturing process has a peak efficiency clock, below which you lose power efficiency by reducing clocks. Secondly, we have the data from Orin to know pretty well where this point is for a GPU very similar to T239's on a Samsung 8nm process, which is around 470MHz.

Now let's talk designing chips. Nvidia and Nintendo are in a room deciding what GPU to put in their new SoC for Nintendo's new console. Nintendo has a financial budget of how much they want to spend on the chip, but they also have a power budget, which is how much power the chip can use up to keep battery life and cooling in check. Nvidia and Nintendo's job in that room is to figure out the best GPU they can fit within those two budgets.

GPUs are convenient in that you can make them basically as wide as you want (that is use as many SMs as you want) and developers will be able to make use of all the performance available. The design space is basically a line between a high number of SMs at a low clock, and a low number of SMs at a high clock. Because there's a fixed power budget, the theoretically ideal place on that line is the one where the clock is the peak efficiency clock, so you can get the most performance from that power.

That is, if the power budget is 3W for the GPU, and the peak efficiency clock is 470MHz, and the power consumption per SM at 470MHz is 0.5W, then the best possible GPU they could include would be a 6 SM GPU running at 470MHz. Using a smaller GPU would mean higher clocks, and efficiency would drop, but using a larger GPU with lower clocks would also mean efficiency would drop, because we're already at the peak efficiency clock.

In reality, it's rare to see a chip designed to run at exactly that peak efficiency clock, because there's always a financial budget as well as the power budget. Running a smaller GPU at higher clocks means you save money, so the design is going to be a tradeoff between a desire to get as close as possible to the peak efficiency clock, which maximises performance within a fixed power budget, and as small a GPU as possible, which minimises cost. Taking the same example, another option would be to use 4 SMs and clock them at around 640MHz. This would also consume 3W, but would provide around 10% less performance. It would, however, result in a cheaper chip, and many people would view 10% performance as a worthwhile trade-off when reducing the number of SMs by 33%.
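The 6 SM versus 4 SM trade-off is easy to check by hand. A minimal sketch, using only the example figures from the post (3W budget, 0.5W per SM at 470MHz) and assuming performance scales with SM count times clock:

```python
POWER_BUDGET_W = 3.0          # example GPU power budget from the post
PEAK_EFFICIENCY_MHZ = 470     # example peak efficiency clock from the post
WATTS_PER_SM_AT_PEAK = 0.5    # example per-SM power at that clock

# Widest GPU that fits the budget when clocked at the peak efficiency clock.
ideal_sm_count = int(POWER_BUDGET_W / WATTS_PER_SM_AT_PEAK)

def relative_throughput(sm_count, clock_mhz):
    """Assumption: performance is proportional to SMs x clock."""
    return sm_count * clock_mhz

wide = relative_throughput(ideal_sm_count, PEAK_EFFICIENCY_MHZ)  # 6 SMs @ 470MHz
narrow = relative_throughput(4, 640)                             # 4 SMs @ 640MHz

performance_ratio = narrow / wide
sm_reduction = 1 - 4 / ideal_sm_count

print(f"ideal SM count: {ideal_sm_count}")
print(f"4 SM design delivers {performance_ratio:.0%} of the 6 SM design")
print(f"with {sm_reduction:.0%} fewer SMs")
```

That gives roughly 91% of the performance for a third fewer SMs, which lines up with the "around 10% less" figure above.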

However, while it's reasonable to design a chip with intent to clock it at the peak efficiency clock, or to clock it above the peak efficiency clock, what you're not going to see is a chip that's intentionally designed to run at a fixed clock speed that's below the peak efficiency clock. The reason for this is pretty straight-forward; if you have a design with a large number of SMs that's intended to run at a clock below the peak efficiency clock, you could just remove some SMs and increase the clock speed and you would get both better performance within your power budget and it would cost less.

How does this relate to Nintendo and T239's manufacturing process?

The above section wasn't theoretical. Nvidia and Nintendo did sit in a room (or have a series of calls) to design a chip for a new Nintendo console, and what they came out with is T239. We know that the result of those discussions was to use a 12 SM Ampere GPU. We also know the power curve, and peak efficiency clock for a very similar Ampere GPU on 8nm.

The GPU in the TX1 used in the original Switch units consumed around 3W in portable mode, as far as I can tell. In later models with the die-shrunk Mariko chip, it would have been lower still. Therefore, I would expect 3W to be a reasonable upper limit to the power budget Nintendo would allocate for the GPU in portable mode when designing the T239.

With a 3W power budget and a peak efficiency clock of 470MHz, then the (again, not theoretical) numbers above tell us the best possible performance would be achieved by a 6 SM GPU operating at 470MHz, and that you'd be able to get 90% of that performance with a 4 SM GPU operating at 640MHz. Note that neither of these say 12 SMs. A 12 SM GPU on Samsung 8nm would be an awful design for a 3W power budget. It would be twice the size and cost of a 6 SM GPU while offering much less performance, if it's even possible to run within 3W at any clock.

There's no world where Nintendo and Nvidia went into that room with an 8nm SoC in mind and a 3W power budget for the GPU in handheld mode, and came out with a 12 SM GPU. That means either the manufacturing process, or the power consumption must be wrong (or both). I'm basing my power consumption estimates on the assumption that this is a device around the same size as the Switch and with battery life that falls somewhere between TX1 and Mariko units. This seems to be the same assumption almost everyone here is making, and while it could be wrong, I think them sticking with the Switch form-factor and battery life is a pretty safe bet, which leaves the manufacturing process.

So, if it's not Samsung 8nm, what is it?

Well, from the Orin data we know that a 12 SM Ampere GPU on Samsung 8nm at the peak efficiency clocks of 470MHz would consume a bit over 6W, which means we need something twice as power efficient as Samsung 8nm. There are a couple of small differences between T239 and Orin's GPUs, like smaller tensor cores and improved clock-gating, but they are likely to have only marginal impact on power consumption, nowhere near the 2x we need, which will have to come from a better manufacturing process.

One note to add here is that we actually need a bit more than a 2x efficiency improvement over 8nm, because as the manufacturing process changes, so does the peak efficiency clock. The peak efficiency clock will typically increase as an architecture is moved to a more efficient manufacturing process, as the improved process allows higher clocks at given voltages. From DVFS tables in Linux, we know that Mariko's peak efficiency clock on 16nm/12nm is likely 384MHz. That's increased to around 470MHz for Ampere on 8nm, and will increase further as it's migrated to more advanced processes.

I'd expect peak efficiency clocks of around 500-600MHz on improved processes, which means that instead of running at 470MHz the chip would need to run at 500-600MHz within 3W to make sense. A clock of 550MHz would consume around 7.5W on 8nm, so we would need a 2.5x improvement in efficiency instead.
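The "bit over 6W" and "around 7.5W" figures can be approximated by interpolating the Orin power curve. This is a sketch under a loud assumption: it interpolates linearly between the measured points, which is a simplification (particularly between 420MHz and 522MHz, where the curve has its kink), so treat the outputs as ballpark numbers only.

```python
# Orin 8nm Ampere power curve from the post: (clock in GHz, Watts per TPC).
POWER_CURVE = [
    (0.42075, 0.96), (0.52275, 1.14), (0.62475, 1.45),
    (0.72675, 1.82), (0.82875, 2.21), (0.93075, 2.73),
    (1.03275, 3.32), (1.23675, 4.89), (1.30050, 5.58),
]

def watts_per_tpc(clock_ghz):
    """Linearly interpolate W per TPC (assumption: linear between points)."""
    for (c0, w0), (c1, w1) in zip(POWER_CURVE, POWER_CURVE[1:]):
        if c0 <= clock_ghz <= c1:
            t = (clock_ghz - c0) / (c1 - c0)
            return w0 + t * (w1 - w0)
    raise ValueError("clock outside the measured range")

def gpu_watts(sm_count, clock_ghz):
    """Total GPU power; a TPC is a pair of SMs."""
    return (sm_count / 2) * watts_per_tpc(clock_ghz)

PORTABLE_BUDGET_W = 3.0  # the post's assumed portable GPU budget

watts_470 = gpu_watts(12, 0.470)
watts_550 = gpu_watts(12, 0.550)
required_gain = watts_550 / PORTABLE_BUDGET_W

print(f"12 SMs @ 470MHz on 8nm: {watts_470:.1f} W")
print(f"12 SMs @ 550MHz on 8nm: {watts_550:.1f} W")
print(f"efficiency gain needed to hit 3W at 550MHz: {required_gain:.2f}x")
```

With this interpolation the 550MHz estimate lands a little under the 7.5W quoted above, and the required gain still rounds to about 2.5x.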

So, what manufacturing process can give a 2.5x improvement in efficiency over Samsung 8nm? The only reasonable answer I can think of is TSMC's 5nm/4nm processes, including 4N, which just happens to be the process Nvidia is using for every other product (outside of acquired Mellanox products) from this point onwards. In Nvidia's Ada white paper (an architecture very similar to Ampere), they claim a 2x improvement in performance per Watt, which appears to come almost exclusively from the move to TSMC's 4N process, plus some memory changes.

They don't provide any hard numbers for similarly sized GPUs at the same clock speed, with only a vague unlabelled marketing graph here, but they recently announced the Ada based RTX 4000 SFF workstation GPU, which has 48 SMs clocked at 1,565MHz and a 70W TDP. The older Ampere RTX A4000 also had 48 SMs clocked at 1,560MHz and had a TDP of 140W. There are differences in the memory setup, and TDPs don't necessarily reflect real world power consumption, but the indication is that the move from Ampere on Samsung 8nm to an Ampere-derived architecture on TSMC 4N reduces power consumption by about a factor of 2.

What about the other options? TSMC 6nm or Samsung 5nm/4nm?

Honestly the more I think about it the less I think these other possibilities are viable. Even aside from the issue that these aren't processes Nvidia is using for anything else, I just don't think a 12 SM GPU would make sense on either of them. Even on TSMC 4N it's a stretch. Evidence suggests that it would achieve a 2x efficiency improvement, but we would be looking for 2.5x in reality. There's enough wiggle room there, in terms of Ada having some additional features not in T239 and not having hard data on Ada's power consumption, so the actual improvement in T239's case may be 2.5x, but even that would mean that Nintendo have gone for the largest GPU possible within the power limit.

With 4N just about stretching to the 2.5x improvement in efficiency required for a 12 SM GPU to make sense, I don't think the chances for any other process are good. We don't have direct examples for other processes like we have for Ada, but from everything we know, TSMC's 5nm class processes are significantly more efficient than either their 6nm or Samsung's 5nm/4nm processes. If it's a squeeze for 12 SMs to work on 4N, then I can't see how it would make sense on anything less efficient than 4N.

But what about cost, isn't 4nm really expensive?

Actually, no. TSMC's 4N wafers are expensive, but they're also much higher density, which means you fit many more chips on a wafer. This SemiAnalysis article from September claimed that Nvidia pays 2.2x as much for a TSMC 4N wafer as they do for a Samsung 8nm wafer. However, Nvidia is achieving 2.7x higher transistor density on 4N, which means that a chip with the same transistor count would actually be cheaper if manufactured on 4N than 8nm (even more so when you factor yields into account).
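The cost claim is simple division. A minimal sketch, using only the two ratios cited above and ignoring yields, packaging, and design costs:

```python
WAFER_COST_RATIO = 2.2  # 4N wafer price relative to Samsung 8nm (figure cited above)
DENSITY_RATIO = 2.7     # transistor density on 4N relative to 8nm (figure cited above)

# For a fixed transistor count, die area shrinks by the density ratio, so the
# number of dies per wafer grows by roughly the same factor.
relative_chip_cost = WAFER_COST_RATIO / DENSITY_RATIO

print(f"same chip on 4N costs about {relative_chip_cost:.0%} of the 8nm version")
```

So, before yields are considered, the same design would cost a little over 80% as much on 4N.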

Are there any caveats?

Yes, the major one being the power consumption of the chip. I'm assuming that Nintendo's next device is going to be roughly the same size and form-factor as the Switch, and they will want a similar battery life. If it's a much larger device (like Steam Deck sized) or they're ok with half an hour of battery life, then that changes the equations, but I don't think either of those are realistic. Ditto if it turned out to be a stationary home console for some reason (again, I'm not expecting that).

The other one is that I'm assuming that Nintendo will use all 12 SMs in portable mode. It's theoretically possible that they would disable half of them in portable mode, and only run the full 12 in docked mode. This would allow them to stick within 3W even on 8nm. However, it's a pain from the software point of view, and it assumes that Nintendo is much more focussed on docked performance than handheld, including likely running much higher power draw docked. I feel it's more likely that Nintendo would build around handheld first, as that's the baseline of performance all games will have to operate on, and then use the same setup at higher clocks for docked.

That's a lot of words. Is this just all confirmation bias or copium or hopium or whatever the kids call it?

I don't think so. Obviously everyone should be careful of their biases, but I actually made the exact same argument over a year ago back before the Nvidia hack, when we thought T239 would be manufactured on Samsung 8nm but didn't know how big the GPU was. At the time a lot of people thought I was too pessimistic because I thought 8 SMs was unrealistic on 8nm and a 4 SM GPU was more likely. I was wrong about T239 using a 4 SM GPU, but the Orin power figures we got later backed up my argument, and 8 SMs is indeed unrealistic on 8nm. The 12 SM GPU we got is even more unrealistic on 8nm, so by the same logic we must be looking at a much more efficient manufacturing process. What looked pessimistic back then is optimistic now only because the data has changed.

Z0m3le

Bob-omb

They're Nintendo's primary (and at this point, I believe exclusive) manufacturing partner. Foxconn assembles the Switch and manufactures the backplate.

Not that I'm Nate, but even assuming the report is correct, it's a little ambiguous about "launch". From a manufacturing perspective, the "launch" is when they first get orders for devices, and the article cites December as the first indication of increased demand for Foxconn products (including iPhones).

Beginning manufacturing in Q1 matches solidly with all of that.

Here is the relevant quote from the article: "Nintendo plans to launch the Switch (successor) in Q1 next year. Based on this estimate, Hongzhun’s game console assembly business can expand It is hoped that we will start to see the momentum of pulling goods in December this year."

This. If Q1 is when they are expecting/forecasting initial supply to arrive for manufacturing, then the article would be accurate in the sense of "launch" being when they begin to do test-runs & such.

Production for Switch started in October 2016 for a launch 5 months later at the very beginning of March. Production starting in Q1 would lead to a Spring release in April or May. Production for PS4 started around May for a November launch, and the same was true of PS5. OLED Switch models were much closer from production to release, with a June production start and a September release.

Holiday 2024 is possible with a delay, but there is no evidence of it in this article.

The Foxconn leak from October 2016 said that production had begun that month. It is the one with all the crazy detailed info, and it was proven correct. Production to release was ~5 months for the Switch.

You'd think, but nah

~August 2016: Switch begins retail MP

October 2016: Switch is publicly revealed

November 2016: Switch fw v1.0.0 is compiled

Since this post was brought back up, I think LiC's find of that DLSS test lines up with your docked clock really well, with 1.125GHz giving 3.456 TFLOPs. The DLSS test using three clocks named after power consumption really does make a lot of sense here. It tests a 660MHz clock called 4.2W, which could be a boost mode similar to the 460MHz clock the Switch uses for its boost mode. That would offer 2 TFLOPs in portable and 3.456 TFLOPs docked. We are talking about a ~9x raw performance increase here, and with everything else the chip offers (DLSS, ray tracing, much more modern GPU features), it should feel closer to current gen consoles than the Switch did to last gen consoles.

My guess is currently 1.7 Tflops in portable mode (12 SMs @ 550MHz) and 3.4 Tflops docked (12 SMs @ 1.1GHz).
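For anyone who wants to check the TFLOPs figures being thrown around, here is a rough sketch of the arithmetic. It assumes 128 CUDA cores per SM (1536 cores across 12 SMs, as listed for T239) and counts one fused multiply-add as 2 FLOPs per core per clock; the function name is just made up for illustration.

```python
# Rough FP32 throughput estimate for an Ampere-style GPU.
# Assumptions: 128 CUDA cores per SM, 2 FLOPs per core per clock (one FMA).
CUDA_CORES_PER_SM = 128
FLOPS_PER_CORE_PER_CLOCK = 2


def tflops(sm_count, clock_ghz):
    """Return theoretical FP32 TFLOPs for a given SM count and clock in GHz."""
    gflops = sm_count * CUDA_CORES_PER_SM * FLOPS_PER_CORE_PER_CLOCK * clock_ghz
    return gflops / 1000.0


# Clocks mentioned in the thread, all for a 12 SM GPU.
for label, clock_ghz in [
    ("portable guess (550MHz)", 0.550),
    ("DLSS test '4.2W' clock (660MHz)", 0.660),
    ("docked guess (1.1GHz)", 1.100),
    ("DLSS test docked clock (1.125GHz)", 1.125),
]:
    print(f"{label}: {tflops(12, clock_ghz):.3f} TFLOPs")
```

These are theoretical peak numbers only, so they say nothing about real world performance.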

To explain my reasoning, let's play a game of Why does Thraktor think a TSMC 4N manufacturing process is likely for T239?

The short answer is that a 12 SM GPU is far too large for Samsung 8nm, and likely too large for any intermediate process like TSMC's 6nm or Samsung's 5nm/4nm processes. There's a popular conception that Nintendo will go with a "cheap" process like 8nm and clock down to oblivion in portable mode, but that ignores both the economic and physical realities of microprocessor design.

To start, let's quickly talk about power curves. A power curve for a chip, whether a CPU or GPU or something else, is a plot of the amount of power the chip consumes against the clock speed of the chip. A while ago I extracted the power curve for Orin's 8nm Ampere GPU from a Nvidia power estimator tool. There are more in-depth details here, here and here, but for now let's focus on the actual power curve data:

Code:
Clock      W per TPC
0.42075    0.96
0.52275    1.14
0.62475    1.45
0.72675    1.82
0.82875    2.21
0.93075    2.73
1.03275    3.32
1.23675    4.89
1.30050    5.58

The first column is the clock speed in GHz, and the second is the Watts consumed per TPC (which is a pair of SMs). Let's create a chart for this power curve:

We can see that the power consumption curves upwards as clock speeds increase. The reason for this is that to increase clock speed you need to increase voltage, and power consumption is proportional to voltage squared. As a result, higher clock speeds are typically less efficient than lower ones.

So, if higher clock speeds are typically less efficient, doesn't that mean you can always reduce clocks to gain efficiency? Not quite. While the chart above might look like a smooth curve, it's actually hiding something; at that lowest clock speed of 420MHz the curve breaks down completely. To illustrate, let's look at the same data, but chart power efficiency (measured in Gflops per Watt) rather than outright power consumption:

There are two things going on in this chart. For all the data points from 522 MHz onwards, we see what you would usually expect, which is that efficiency drops as clock speeds increase. The relationship is exceptionally clear here, as it's a pretty much perfect straight line. But then there's that point on the left. The GPU at 420MHz is less efficient than it is at 522MHz, why is that?

The answer is relatively straight-forward if we consider one important point: there is a minimum voltage that the chip can operate at. Voltage going up with clock speed means efficiency gets worse, and voltage going down as clock speeds decrease means efficiency gets better. But what happens when you want to reduce clocks but can't reduce voltage any more? Not only do you stop improving power efficiency, but it actually starts to go pretty sharply in the opposite direction.

Because power consumption is mostly related to voltage, not clock speed, when you reduce clocks but keep the voltage the same, you don't really save much power. A large part of the power consumption called "static power" stays exactly the same, while the other part, "dynamic power", does fall off a bit. What you end up with is much less performance, but only slightly less power consumption. That is, power efficiency gets worse.

So that kink in the efficiency graph, between 420MHz and 522MHz, is the point at which you can't reduce the voltage any more. Any clocks below that point will all operate at the same voltage, and without being able to reduce the voltage, power efficiency gets worse instead of better below that point. The clock speed at that point can be called the "peak efficiency clock", as it offers higher power efficiency than any other clock speed.
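The efficiency chart can be roughly reproduced from the power curve table. This is only a sketch: it assumes 128 CUDA cores per SM and 2 FLOPs per core per clock, so a TPC (two SMs) delivers 512 Gflops per GHz. Note that the table only has samples at about 420MHz and 522MHz, so the code can only show that the 522MHz sample is the most efficient one; the actual kink sits somewhere between the two.

```python
# Orin 8nm Ampere power curve from the post: (clock in GHz, Watts per TPC).
POWER_CURVE = [
    (0.42075, 0.96),
    (0.52275, 1.14),
    (0.62475, 1.45),
    (0.72675, 1.82),
    (0.82875, 2.21),
    (0.93075, 2.73),
    (1.03275, 3.32),
    (1.23675, 4.89),
    (1.30050, 5.58),
]

# Assumption: 2 SMs per TPC x 128 cores per SM x 2 FLOPs per core per clock.
GFLOPS_PER_TPC_PER_GHZ = 2 * 128 * 2


def gflops_per_watt(clock_ghz, watts_per_tpc):
    """Power efficiency of one TPC at a given clock."""
    return GFLOPS_PER_TPC_PER_GHZ * clock_ghz / watts_per_tpc


efficiency = [(clock, gflops_per_watt(clock, watts)) for clock, watts in POWER_CURVE]
best_clock, best_eff = max(efficiency, key=lambda point: point[1])

for clock, eff in efficiency:
    marker = "  <- most efficient sample" if clock == best_clock else ""
    print(f"{clock * 1000:7.1f} MHz: {eff:6.1f} Gflops/W{marker}")
```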

How does this impact how chips are designed?

There are two things to take from the above. First, as a general point, every chip on a given manufacturing process has a peak efficiency clock, below which you lose power efficiency by reducing clocks. Secondly, we have the data from Orin to know pretty well where this point is for a GPU very similar to T239's on a Samsung 8nm process, which is around 470MHz.

Now let's talk designing chips. Nvidia and Nintendo are in a room deciding what GPU to put in their new SoC for Nintendo's new console. Nintendo has a financial budget of how much they want to spend on the chip, but they also have a power budget, which is how much power the chip can use up to keep battery life and cooling in check. Nvidia and Nintendo's job in that room is to figure out the best GPU they can fit within those two budgets.

GPUs are convenient in that you can make them basically as wide as you want (that is use as many SMs as you want) and developers will be able to make use of all the performance available. The design space is basically a line between a high number of SMs at a low clock, and a low number of SMs at a high clock. Because there's a fixed power budget, the theoretically ideal place on that line is the one where the clock is the peak efficiency clock, so you can get the most performance from that power.

That is, if the power budget is 3W for the GPU, and the peak efficiency clock is 470MHz, and the power consumption per SM at 470MHz is 0.5W, then the best possible GPU they could include would be a 6 SM GPU running at 470MHz. Using a smaller GPU would mean higher clocks, and efficiency would drop, but using a larger GPU with lower clocks would also mean efficiency would drop, because we're already at the peak efficiency clock.

In reality, it's rare to see a chip designed to run at exactly that peak efficiency clock, because there's always a financial budget as well as the power budget. Running a smaller GPU at higher clocks means you save money, so the design is going to be a tradeoff between a desire to get as close as possible to the peak efficiency clock, which maximises performance within a fixed power budget, and as small a GPU as possible, which minimises cost. Taking the same example, another option would be to use 4 SMs and clock them at around 640MHz. This would also consume 3W, but would provide around 10% less performance. It would, however, result in a cheaper chip, and many people would view 10% performance as a worthwhile trade-off when reducing the number of SMs by 33%.
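To put numbers on that trade-off, here is a small sketch comparing the 6 SM and 4 SM options. It linearly interpolates the Orin power curve between table entries (an assumption on my part, the real curve is not piecewise linear) and treats raw performance as SM count times clock. The helper names are made up for illustration.

```python
# Orin 8nm Ampere power curve from the post: (clock in GHz, Watts per TPC).
POWER_CURVE = [
    (0.42075, 0.96), (0.52275, 1.14), (0.62475, 1.45),
    (0.72675, 1.82), (0.82875, 2.21), (0.93075, 2.73),
    (1.03275, 3.32), (1.23675, 4.89), (1.30050, 5.58),
]
SMS_PER_TPC = 2


def watts_per_sm(clock_ghz):
    """Linearly interpolate the table (an assumption) and return Watts per SM."""
    for (c0, w0), (c1, w1) in zip(POWER_CURVE, POWER_CURVE[1:]):
        if c0 <= clock_ghz <= c1:
            t = (clock_ghz - c0) / (c1 - c0)
            return (w0 + t * (w1 - w0)) / SMS_PER_TPC
    raise ValueError("clock outside the tabulated range")


def relative_performance(sm_count, clock_ghz):
    """Raw throughput scales with SM count times clock speed."""
    return sm_count * clock_ghz


wide = (6, 0.470)    # 6 SMs at the peak efficiency clock
narrow = (4, 0.640)  # 4 SMs clocked higher to use the same power budget

for sm_count, clock_ghz in (wide, narrow):
    power = sm_count * watts_per_sm(clock_ghz)
    print(f"{sm_count} SMs @ {clock_ghz * 1000:.0f}MHz: about {power:.2f} W")

ratio = relative_performance(*narrow) / relative_performance(*wide)
print(f"4 SM design delivers {ratio:.0%} of the 6 SM design's throughput")
```

Both configurations land close to the 3W budget, with the smaller GPU giving up roughly a tenth of the performance.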

However, while it's reasonable to design a chip with intent to clock it at the peak efficiency clock, or to clock it above the peak efficiency clock, what you're not going to see is a chip that's intentionally designed to run at a fixed clock speed that's below the peak efficiency clock. The reason for this is pretty straight-forward; if you have a design with a large number of SMs that's intended to run at a clock below the peak efficiency clock, you could just remove some SMs and increase the clock speed and you would get both better performance within your power budget and it would cost less.

How does this relate to Nintendo and T239's manufacturing process?

The above section wasn't theoretical. Nvidia and Nintendo did sit in a room (or have a series of calls) to design a chip for a new Nintendo console, and what they came out with is T239. We know that the result of those discussions was to use a 12 SM Ampere GPU. We also know the power curve, and peak efficiency clock for a very similar Ampere GPU on 8nm.

The GPU in the TX1 used in the original Switch units consumed around 3W in portable mode, as far as I can tell. In later models with the die-shrunk Mariko chip, it would have been lower still. Therefore, I would expect 3W to be a reasonable upper limit to the power budget Nintendo would allocate for the GPU in portable mode when designing the T239.

With a 3W power budget and a peak efficiency clock of 470MHz, then the (again, not theoretical) numbers above tell us the best possible performance would be achieved by a 6 SM GPU operating at 470MHz, and that you'd be able to get 90% of that performance with a 4 SM GPU operating at 640MHz. Note that neither of these say 12 SMs. A 12 SM GPU on Samsung 8nm would be an awful design for a 3W power budget. It would be twice the size and cost of a 6 SM GPU while offering much less performance, if it's even possible to run within 3W at any clock.

There's no world where Nintendo and Nvidia went into that room with an 8nm SoC in mind and a 3W power budget for the GPU in handheld mode, and came out with a 12 SM GPU. That means either the manufacturing process, or the power consumption must be wrong (or both). I'm basing my power consumption estimates on the assumption that this is a device around the same size as the Switch and with battery life that falls somewhere between TX1 and Mariko units. This seems to be the same assumption almost everyone here is making, and while it could be wrong, I think them sticking with the Switch form-factor and battery life is a pretty safe bet, which leaves the manufacturing process.

So, if it's not Samsung 8nm, what is it?

Well, from the Orin data we know that a 12 SM Ampere GPU on Samsung 8nm at the peak efficiency clocks of 470MHz would consume a bit over 6W, which means we need something twice as power efficient as Samsung 8nm. There are a couple of small differences between T239 and Orin's GPUs, like smaller tensor cores and improved clock-gating, but they are likely to have only marginal impact on power consumption, nowhere near the 2x we need, which will have to come from a better manufacturing process.

One note to add here is that we actually need a bit more than a 2x efficiency improvement over 8nm, because as the manufacturing process changes, so does the peak efficiency clock. The peak efficiency clock will typically increase as an architecture is moved to a more efficient manufacturing process, as the improved process allows higher clocks at given voltages. From DVFS tables in Linux, we know that Mariko's peak efficiency clock on 16nm/12nm is likely 384MHz. That's increased to around 470MHz for Ampere on 8nm, and will increase further as it's migrated to more advanced processes.

I'd expect peak efficiency clocks of around 500-600MHz on improved processes, which means that instead of running at 470MHz the chip would need to run at 500-600MHz within 3W to make sense. A clock of 550MHz would consume around 7.5W on 8nm, so we would need a 2.5x improvement in efficiency instead.
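The same Orin table gives a rough check on those 12 SM figures. This sketch again assumes linear interpolation between table entries, which is my simplification, so it lands a little under the rounded numbers above: a little over 6W at 470MHz and about 7.3W at 550MHz, which against a 3W budget works out to something in the region of 2.4x to 2.5x.

```python
# Orin 8nm Ampere power curve from the post: (clock in GHz, Watts per TPC).
POWER_CURVE = [
    (0.42075, 0.96), (0.52275, 1.14), (0.62475, 1.45),
    (0.72675, 1.82), (0.82875, 2.21), (0.93075, 2.73),
    (1.03275, 3.32), (1.23675, 4.89), (1.30050, 5.58),
]
SMS_PER_TPC = 2
POWER_BUDGET_W = 3.0  # assumed portable-mode GPU budget from the post


def watts_per_tpc(clock_ghz):
    """Linearly interpolate the table (an assumption, not measured data)."""
    for (c0, w0), (c1, w1) in zip(POWER_CURVE, POWER_CURVE[1:]):
        if c0 <= clock_ghz <= c1:
            t = (clock_ghz - c0) / (c1 - c0)
            return w0 + t * (w1 - w0)
    raise ValueError("clock outside the tabulated range")


def gpu_power_8nm(sm_count, clock_ghz):
    """Estimated GPU power on Samsung 8nm for a given SM count and clock."""
    return (sm_count / SMS_PER_TPC) * watts_per_tpc(clock_ghz)


def required_efficiency_gain(sm_count, clock_ghz, budget_w=POWER_BUDGET_W):
    """How much more efficient than 8nm a process must be to fit the budget."""
    return gpu_power_8nm(sm_count, clock_ghz) / budget_w


for clock_ghz in (0.470, 0.550):
    power = gpu_power_8nm(12, clock_ghz)
    gain = required_efficiency_gain(12, clock_ghz)
    print(f"12 SMs @ {clock_ghz * 1000:.0f}MHz: {power:.2f} W on 8nm, needs {gain:.2f}x")
```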

So, what manufacturing process can give a 2.5x improvement in efficiency over Samsung 8nm? The only reasonable answer I can think of is TSMC's 5nm/4nm processes, including 4N, which just happens to be the process Nvidia is using for every other product (outside of acquired Mellanox products) from this point onwards. In Nvidia's Ada white paper (an architecture very similar to Ampere), they claim a 2x improvement in performance per Watt, which appears to come almost exclusively from the move to TSMC's 4N process, plus some memory changes.

They don't provide any hard numbers for similarly sized GPUs at the same clock speed, with only a vague unlabelled marketing graph here, but they recently announced the Ada based RTX 4000 SFF workstation GPU, which has 48 SMs clocked at 1,565MHz and a 70W TDP. The older Ampere RTX A4000 also had 48 SMs clocked at 1,560MHz and had a TDP of 140W. There are differences in the memory setup, and TDPs don't necessarily reflect real world power consumption, but the indication is that the move from Ampere on Samsung 8nm to an Ampere-derived architecture on TSMC 4N reduces power consumption by about a factor of 2.

What about the other options? TSMC 6nm or Samsung 5nm/4nm?

Honestly the more I think about it the less I think these other possibilities are viable. Even aside from the issue that these aren't processes Nvidia is using for anything else, I just don't think a 12 SM GPU would make sense on either of them. Even on TSMC 4N it's a stretch. Evidence suggests that it would achieve a 2x efficiency improvement, but we would be looking for 2.5x in reality. There's enough wiggle room there, in terms of Ada having some additional features not in T239 and not having hard data on Ada's power consumption, so the actual improvement in T239's case may be 2.5x, but even that would mean that Nintendo have gone for the largest GPU possible within the power limit.

With 4N just about stretching to the 2.5x improvement in efficiency required for a 12 SM GPU to make sense, I don't think the chances for any other process are good. We don't have direct examples for other processes like we have for Ada, but from everything we know, TSMC's 5nm class processes are significantly more efficient than either their 6nm or Samsung's 5nm/4nm processes. If it's a squeeze for 12 SMs to work on 4N, then I can't see how it would make sense on anything less efficient than 4N.

But what about cost, isn't 4nm really expensive?

Actually, no. TSMC's 4N wafers are expensive, but they're also much higher density, which means you fit many more chips on a wafer. This SemiAnalysis article from September claimed that Nvidia pays 2.2x as much for a TSMC 4N wafer as they do for a Samsung 8nm wafer. However, Nvidia is achieving 2.7x higher transistor density on 4N, which means that a chip with the same transistor count would actually be cheaper if manufactured on 4N than 8nm (even more so when you factor yields into account).
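As a back-of-the-envelope check on the cost claim, the two ratios quoted above can simply be divided. This sketch ignores yields, wafer edge losses, and anything else not mentioned in the post, so treat it as illustrative only.

```python
# Ratios quoted in the post, both relative to Samsung 8nm.
WAFER_COST_RATIO = 2.2  # price of a TSMC 4N wafer vs a Samsung 8nm wafer
DENSITY_RATIO = 2.7     # transistor density Nvidia achieves on 4N vs 8nm


def relative_cost_per_transistor(wafer_cost_ratio, density_ratio):
    """Cost of the same transistor count on the new process, relative to the old.

    Simplification: die count per wafer is assumed to scale directly with
    density, and yields are ignored.
    """
    return wafer_cost_ratio / density_ratio


cost = relative_cost_per_transistor(WAFER_COST_RATIO, DENSITY_RATIO)
print(f"Same chip on 4N costs about {cost:.0%} of what it would on 8nm")
```

In other words, under these assumptions the 4N version comes out somewhere around a fifth cheaper before yields are even considered.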

Are there any caveats?

Yes, the major one being the power consumption of the chip. I'm assuming that Nintendo's next device is going to be roughly the same size and form-factor as the Switch, and they will want a similar battery life. If it's a much larger device (like Steam Deck sized) or they're ok with half an hour of battery life, then that changes the equations, but I don't think either of those are realistic. Ditto if it turned out to be a stationary home console for some reason (again, I'm not expecting that).

The other one is that I'm assuming that Nintendo will use all 12 SMs in portable mode. It's theoretically possible that they would disable half of them in portable mode, and only run the full 12 in docked mode. This would allow them to stick within 3W even on 8nm. However, it's a pain from the software point of view, and it assumes that Nintendo is much more focussed on docked performance than handheld, including likely running much higher power draw docked. I feel it's more likely that Nintendo would build around handheld first, as that's the baseline of performance all games will have to operate on, and then use the same setup at higher clocks for docked.

That's a lot of words. Is this just all confirmation bias or copium or hopium or whatever the kids call it?

I don't think so. Obviously everyone should be careful of their biases, but I actually made the exact same argument over a year ago back before the Nvidia hack, when we thought T239 would be manufactured on Samsung 8nm but didn't know how big the GPU was. At the time a lot of people thought I was too pessimistic because I thought 8 SMs was unrealistic on 8nm and a 4 SM GPU was more likely. I was wrong about T239 using a 4 SM GPU, but the Orin power figures we got later backed up my argument, and 8 SMs is indeed unrealistic on 8nm. The 12 SM GPU we got is even more unrealistic on 8nm, so by the same logic we must be looking at a much more efficient manufacturing process. What looked pessimistic back then is optimistic now only because the data has changed.

I do think a 3W mode is perfectly reasonable. I'd also expect a much lower mode for just rendering Switch games, as I don't expect it to try to match exact performance for BC, not when modded Switch units can be overclocked with little to no issues. CPU wise, I think 1.8GHz-2GHz makes sense. I'm hoping they run one core at a much lower clock during gaming for the OS, so they can hit the 2GHz clock. Compared to the Ryzen CPU cores in current consoles, A78C should leave a surprisingly smaller performance gap than many are expecting.

EdFiftyNine

Switch 2 hype recharged

- Pronouns

- He/Him

Big news and huge implications regarding CoD on Drake.

I have no doubt in my mind that Diablo IV will be arriving on Drake at some point as well. Blizzard seems really good about getting certain games on Switch (Overwatch and Diablo III). Also, doesn't MS still have a hurdle with the CMA in the UK?

Shortly after the verdict the CMA, Microsoft and ABK all agreed to go back to the table and paused the legal battle. It’s been reported Microsoft have offered something that the CMA may find acceptable.

Microsoft-Activision deal moves closer as judge denies FTC injunction request

The U.S. government can now bring the judge's decision to the U.S. Court of Appeals for the 9th Circuit.

It's not over until it’s over, but I think the CMA will take it and then posture it as a win for regulators while also not standing in the way of the deal.

FernandoRocker

Piranha Plant

Did I just travel back in time?

Is there a consensus on what the NG Switch (or NX 2, or Switch 2) hardware will be? I personally have my own guess involving the Tegra T239 that was leaked last year. For example, Wikipedia lists the T239 as having 12 SMs, or 1536 CUDA/shader cores. Then, looking at the closest in terms of GPU performance, the AGX Orin 32 GB, it'll have 12 RT cores and 44 tensor cores as well (good on Nvidia for making very easily scalable SoCs). TFlops wise, despite not being the best indicator of real world performance, it will be anywhere from 2.5 to 2.9. Most likely just above 2, or even on par with the PS4 in handheld mode. RAM wise, I think it'll be 8-12 gigabytes. CPU wise, it'll be on par with, but most likely better than, the CPU found in the Snapdragon 888. Probably better because it has active cooling. I have no idea what the screen size or storage will be, and I don't want to make any unreasonable estimates other than maybe a 900p OLED screen with 128/256 gigs of storage.

Feature wise, I'll bet that DLSS will be HEAVILY abused by developers to make games run on par with, if not better than, their Xbox Series S versions. Fortnite at 1080p with Nanite and Lumen at 60 fps in docked with no other graphical options isn't unreasonable, seeing as Nintendo probably wouldn't add 120Hz support (that's not really a Nintendo thing to do despite the hardware definitely supporting it). Speaking of raytracing, Nvidia is really good at raytracing. So it also wouldn't be unreasonable to assume that the NG Switch's raytracing is potentially on par with, or slightly worse than, the Series S in docked mode. I don't think many games will support raytracing in portable mode without locking the framerate to 30 fps. VRR would also be an amazing feature to have, as then games that normally run at 30 max could go to 40 or even 50 at times, though I don't think Nintendo really cares too much despite how much of an improvement it is to games running on the PS5 (Spider-Man Miles Morales runs at 40-50 fps in Fidelity mode with VRR on!) or XSX. But it could be beneficial, as it means that for open world games, developers can make the game look as nice as they can, and as long as it runs at at LEAST 30 fps, VRR takes care of the rest.

One final note about DLSS, it was stated back in August of 2022 that, in handheld mode, the Switch 2 is PS4 level without DLSS, and PS4 Pro level WITH DLSS. If that means "slightly better than ps4 in handheld, slightly better than ps4 pro with dlss" or "4k 30/60 in handheld mode" is up to interpretation, especially considering that there's no way Nintendo would put a 4k screen on a 7-8 inch device.

NSO will probably carry over, though I'd imagine it would just be called "Nintendo Online"

TLZ

Like Like

Did I just travel back in time?

We always do.

This thread is like Groundhog Day.

RennanNT

Bob-omb

Also, doesn't MS still have a hurdle with the CMA in the UK?

Quick summary to anyone curious about this:

The FTC and CMA wanted to block the merger, but found no basis in law for this, so they coordinated to make things harder and longer, in the hope that either side gives up.

MS executives went public saying the CMA was acting in bad faith and that the "UK is closed for business". Then they had private meetings with the UK government and the CMA. Right after that, the FTC brought them to court in a hurry to prevent MS from closing before their investigation ends.

Since the CMA didn't change their position, people started thinking MS had found a way to work around the CMA block. In hindsight, the CMA gave in but held onto the hope that the FTC would be able to stall them.

At this point, MS and the CMA are most likely still discussing the remedies in detail. And the FTC isn't necessarily done yet. Even after losing the PI, and even if MS merges, they can still investigate and force MS to divest ABK if they can prove it's unlawful in the end.

So it will only be 100% done when both say they have accepted MS's remedies. But they don't have the law on their side, nor can they stall things further, so it's probably happening soon.

lattjeful

Like Like

- Pronouns

- he/him

Tomorrow.

And tomorrow... and tomorrow... creeps in this petty pace from day to day...

I believe they meant 50 year anniversary, not 50+10 years from now.

I said "See you in 2083, then" for the 100th anniversary, since the 40th wasn't significant enough.

Semi Lazy Gamer

Like Like

LoneRanger

Chain Chomp

- Pronouns

- He/Him

The big question is if they can manage to get COD2024 day 1 as a launch title/window title. That + Assassin's Creed Red (which is set in Japan, so I think a SKU for a Nintendo system would be a good addition) would be a very good start for Switch NG regarding (western) third party support.

Imagine if the first acknowledgement of Drake comes from a talking heads video of Phil Spencer with Doug Bowser right after the deal completion, proudly announcing that the next Call of Duty will launch on the "next generation Nintendo device"

Like a (fall) launch with (only considering NG exclusive games):

1) 3D Mario (Nintendo)

2) 2024COD (Activision-Blizzard)

3) Assassin's Creed Red (Ubisoft)

4) Avatar: Frontiers of Pandora (Ubisoft)

5) Madden/FIFA/NHL/UFC (EA)

6) NBA 2k (Take-2)

7) Something from Capcom (DD2?)

8) Something from Konami (MGS3D?)

9) Something from Square-Enix (FFXV?)

10) Something from Bandai-Namco (Tekken 8?)

11) Something from Rockstar (RDR2?)

+ updates to existing Switch 1 titles.

So I think that Nintendo will make a “soft” launch rather than releasing every major AAA PS4/5 game on day 1.

Blue Monty

Inkling

- Pronouns

- he/him

From non-Western publishers, keep the expectations you would have of games arriving on the Switch: just because it is more powerful doesn't mean games (especially new PS5/XSX-only games) will arrive day 1. From SE's internal teams expect little outside remasters/ports of old games, from BNS nothing (unless Nintendo pays for it, of course), and Capcom will just port the most popular games of their PS4/XOne catalog. Western pubs will probably do quite a few trial runs, having missed the Switch launch but increased their support (as much as possible) late into the gen. EA was the only big one missing, and in recent times it has changed its position on Nintendo consoles.

The big question is if they can manage to get COD2024 day 1 as a launch title/window title. That + Assassin's Creed Red (which is set in Japan, so I think a SKU for a Nintendo system would be a good addition) would be a very good start for Switch NG regarding (western) third party support.

Like a (fall) launch with (only considering NG exclusive games):

1) 3D Mario (Nintendo)

2) 2024COD (Activision-Blizzard)

3) Assassin's Creed Red (Ubisoft)

4) Avatar: Frontiers of Pandora (Ubisoft)

5) Madden/FIFA/NHL/UFC (EA)

6) NBA 2k (Take-2)

7) Something from Capcom (DD2?)

8) Something from Konami (MGS3D?)

9) Something from Square-Enix (FFXV?)

10) Something from Bandai-Namco (Tekken 8?)

11) Something from Rockstar (RDR2?)

+ updates to existing Switch 1 titles.

So I think that Nintendo will make a “soft” launch rather than releasing every major AAA PS4/5 game on day 1.

Z0m3le

Bob-omb

Folks I think Nintendo is making a new video game console

Hey everyone, look at the insider. Next he is going to tell us that Microsoft is going to buy Call of Duty and put it on the Switch.

ziggyrivers

thunder

- Pronouns

- He/him/his

And tomorrow... and tomorrow... creeps in this petty pace from day to day...

lattjeful

Like Like

- Pronouns

- he/him

Folks I think Nintendo is making a new video game console

No way in hell they do that. Look at the Switch's numbers. Only 125 million? Pathetic. They should just go third party. Let Sony buy them up.

TSR3

Pilotwings

I recommend the OP (page 1) and the Threadmarks to catch up on significant news and commentsIs there a consensus on what the NG Switch(or NX 2, or Switch 2) hardware will be? I personally have my own guess involving the Tegra T239 that was leaked last year. For example, wikipedia lists the T239 as having 12 SMs, or 1536 CUDA/Shader cores. Then, looking at the closest in terms of gpu performance, the AGX Orin 32 GB, it'll have 12 RT cores and 44 tensor cores as well(good on nvidia for making very easily scalable SoCs). TFlops wise, despite not being the best indicator of real world performance, will be anywhere from 2.5 to 2.9. Most likely just above 2, or even on par with the PS4 in handheld mode. Ram wise, I think it'll be 8-12 gigabytes. CPU wise, it'll be on par, but most likely better, than the CPU found in the Snapdragon 888. Probably better because it has active cooling. I have no idea on what the screen size or storage will be but I don't want to make any unreasonable estimates other than maybe a 900p OLED screen with 128/256 gigs of storage.

Feature wise, I'll bet that DLSS will be HEAVILY abused by developers to make games run on par with, if not better than their Xbox Series S versions. Fortnite at 1080p with Nanite and Lumen at 60 fps in docked with no other graphical options isn't unreasonable, seeing as Nintendo probably wouldn't add 120hz support(that's not really a Nintendo thing to do despite the hardware definitely supporting it). Speaking of Raytracing, Nvidia is really good at raytracing. So it also wouldn't be unreasonable to assume that the NG Switch's raytracing is potentially on par with, or slightly worse than, the Series S in docked mode. I don't think many games will support raytracing in portable mode without locking the framerate to 30 fps. VRR would also be an amazing feature to have as then games that normally run at 30 max could go to 40 or even 50 at times, though I don't think Nintendo really cares too much despite how much of an improvement it is to games running on the PS5(Spider-Man Miles Morales runs at 40-50 fps in Fidelity mode with VRR on!) or XSX. But it could be beneficial as it means that for open world games, developers can make the game look as nice as they can, and as long as it runs at at LEAST 30 fps, VRR takes care of the rest.

One final note about DLSS, it was stated back in August of 2022 that, in handheld mode, the Switch 2 is PS4 level without DLSS, and PS4 Pro level WITH DLSS. If that means "slightly better than ps4 in handheld, slightly better than ps4 pro with dlss" or "4k 30/60 in handheld mode" is up to interpretation, especially considering that there's no way Nintendo would put a 4k screen on a 7-8 inch device.

NSO will probably carry over, though I'd imagine it would just be called "Nintendo Online"

- Pronouns

- He/Him

Today is the day?

Yes.

No!

Maybe?

I don't know.

Can you repeat the question?

- Pronouns

- He/Him

Folks I think Nintendo is making a new video game console

I'm pretty sure Nintendo stopped doing such a thing.

Since Furukawa took over, they have never released a single new video game console, only iterations of the last one.

I don't know why there's a lot of doubt about Square Enix. Damn near anything that doesn't have an exclusivity contract tied to it comes to Switch. SE even talked about trying to bring FF15 and KH3 to Switch but wasn't satisfied with the performance. Drake solves that. They'll be big supporters.

From non-Western publishers, keep the expectations you would have of games arriving on the Switch: just because it is more powerful doesn't mean games (especially new PS5/XSX-only games) will arrive day 1. From SE's internal teams expect little outside remasters/ports of old games, from BNS nothing (unless Nintendo pays for it, of course), and Capcom will just port the most popular games of their PS4/XOne catalog. Western pubs will probably do quite a few trial runs, having missed the Switch launch but increased their support (as much as possible) late into the gen. EA was the only big one missing, and in recent times it has changed its position on Nintendo consoles.

kimbo99

Spirit Detective

- Pronouns

- He/him

Yes.

No!

Maybe?

I don't know.

Can you repeat the question?

You're not the boss of me now.

TSR3

Pilotwings

This rings true, but do you have links, especially for the FTC/CMA collaboration claim?

Quick summary for anyone curious about this:

The FTC and CMA wanted to block the merger, but found no basis in law for this, so they coordinated to make things harder and longer, in the hope that either side gives up.

MS executives went public saying the CMA was acting in bad faith and that the "UK is closed for business". Then they had private meetings with the UK government and the CMA. Right after that, the FTC hurriedly took them to court to prevent MS from closing before its investigation ends.

Since the CMA didn't change its position, people started thinking MS had found a way to work around the CMA block. In hindsight, the CMA gave in but held onto the hope that the FTC would be able to stall them.

At this point, MS and the CMA are most likely still discussing the remedies in detail. And the FTC isn't necessarily done yet. Even after losing the PI, and even if MS merges, they can still investigate and force MS to divest ABK if they can prove it's unlawful in the end.

So it will only be 100% done when both say they have accepted MS's remedies. But they don't have the law on their side, nor can they stall things further, so it's probably happening soon.

- Pronouns

- He/Him

You're not the boss of me now.

And you're not so big.

- Pronouns

- Him

Pages haven’t moved. Nothing new… Sits patiently, and refreshes!

Please read this staff post before posting.

Furthermore, according to this follow-up post, all off-topic chat will be moderated.
