- Pronouns

- He/Him


Hey everyone, staff have documented a list of banned content and subject matter that we feel are not consistent with site values, and don't make sense to host discussion of on Famiboards. This list (and the relevant reasoning per item) is viewable here.


StarTopic Future Nintendo Hardware & Technology Speculation & Discussion |ST| (New Staff Post, Please read)

- Thread starter Dakhil

- Start date

Threadmarks

View all 18 threadmarks


Recent threadmarks

- Poll #3: When do you think is the launch window for Nintendo's new hardware?
- Announcement regarding links to news and rumours from 2022 and 2021
- Rough summary of the 2 August 2023 episode of Nate the Hate
- Rough summary of the 11 September 2023 episode of Nate the Hate
- Anatole's deep dive into a convolutional autoencoder
- Differences between T234 and T239
- Rough summary of the 19 October 2023 episode of Nate the Hate
- necrolipe's Twitter (X) post on why a 2025 launch doesn't automatically make T239 outdated based on one of the leaked slides from Microsoft

gonanzir

Nintendo doing Nintendo.

When I searched through a lot of information, I found that I can only expect a 1+7 cluster, where one A78 core can adjust its frequency and voltage individually while the others adjust collectively. Most chips only offer a 1+3+4 configuration, with one big core running at high frequency, three big cores running at medium frequency, and the remaining four being small cores. The difference in the 1+7 configuration is that one big core runs at low frequency while the other seven run at high frequency.

LiC

Member

Edit: Actually, all the 4 GB (32 Gb) LPDDR5X modules from Micron and Samsung turn out to be 32-bit only. So yeah, 128-bit 8 GB LPDDR5X with 4 GB (32 Gb) modules is never a realistic possibility.

I don't think that's the case. There are multiple, but here's one Micron part with the max LPDDR5X speed, 32 Gb density, and x64 configuration. Micron just organizes things in a confusing way so everything is under the LPDDR5 "family" even if it's LPDDR5X.

It wouldn't change my point. If Apple gets 12 GB cheaper than 8 GB, so probably would Nintendo. Hence 8 GB was never a real possibility.

That doesn't mean Apple gets 12 GB cheaper than 8 GB; it more likely means it's cheaper for them to produce using only a single RAM module and then do whatever dumb software thing they're doing to constrain it. If they were choosing between 12 GB and 8 GB as Nintendo did, then 8 GB would assuredly be cheaper.

On top of 8 GB of LPDDR5X being possible and cheaper, there is also the possibility of Nintendo using LPDDR5-speed parts which was commonly discussed here in the past. So "8 GB was never a technical possibility" is a very strange conclusion.
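The capacity arithmetic behind this exchange can be sketched roughly as follows. This is only an illustration, not a statement about any real part: the 128-bit total bus width is taken from the quoted post, and the function name and its parameters are made up for the example.

```python
def package_config(bus_width_bits, module_width_bits, module_density_gbit):
    """Return (module_count, total_capacity_gb) for a bus filled with identical modules."""
    if bus_width_bits % module_width_bits != 0:
        raise ValueError("bus width must be a multiple of the module width")
    module_count = bus_width_bits // module_width_bits
    # 8 gigabits (Gb) = 1 gigabyte (GB), so a 32 Gb module holds 4 GB.
    total_capacity_gb = module_count * module_density_gbit / 8
    return module_count, total_capacity_gb

x32 = package_config(128, 32, 32)  # 32-bit wide, 32 Gb modules
x64 = package_config(128, 64, 32)  # 64-bit wide, 32 Gb modules
print(x32, x64)
```

Under these assumptions, 32-bit 4 GB modules fill a 128-bit bus with 16 GB, while 8 GB needs either x64 4 GB modules or four lower-density (16 Gb) 32-bit modules.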

RennanNT

Bob-omb

Yes, but in those phones, the X1 core is the only X1 core. The DynamIQ documentation implies that all cores of the same type are in a "shared unit" which have the same clock speed. The A78C documentation is unclear about how it can be broken up in DSUs, as it contradicts itself several times, possibly because the documentation is not entirely updated from the base A78 variant.

The SD 768G has 2x A76 clocked differently from each other.

Rather than DSUs needing to have different types, it's more likely that cores are split into DSUs based on their purpose. And ARM offers 3 types of core, tailored for phone needs, so each DSU ends with their own type as a result.

Nintendo won't fund a core tailored for their needs and likely it just so happens that out of the 3 types available, the best fit for the OS was the same as the cores for games.

Of course, the savings from lowering clocks on 1 core are rather small, so who knows if they will actually go this route. But they can. And if latency between units isn't a problem, they could be flexible about turning off some cores to boost the remaining ones for games which are bad at parallelism.

EDIT: I forgot to read other posts before pressing post and missed gonanzir already posted an example earlier.


Dekuman

Starman

- Pronouns

- He/Him/His

So few games or game engines this gen tax the CPU that I don't know if it matters very much.

It's like Dragon's Dogma 2 and Baldur's Gate 3 in terms of AAA games 3.5 years in.

If this is like 40-50% of the PS5's CPU, that's great and good enough. Third-party games and ambitious first-party games CPU-wise will run at 30 FPS regardless.

What estimated clock speed would give 40-50% of the PS5's CPU's performance roughly?

Referring to Thraktor's post, we get a single-core score for the A78 at 3 GHz being roughly the same as the PS5's Zen 2 core at 4 GHz. Depending on your expectation (let's say the expected range is 1.5 GHz-2.0 GHz), it would be about 55%-75% of the PS5 CPU core-for-core.

With the necessary caveat that this is a general purpose benchmark, so for specific tasks the ratio can be different.
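As a rough worked version of that estimate: if an A78 at 3 GHz scores about the same as a Zen 2 core at 4 GHz, each A78 clock is worth roughly 4/3 of a Zen 2 clock in this benchmark. The sketch below assumes performance scales linearly with clock speed and that the PS5 CPU runs at its 3.5 GHz maximum; both are simplifying assumptions, and the function name is made up for illustration.

```python
A78_REF_GHZ = 3.0    # A78 clock from the comparison above
ZEN2_REF_GHZ = 4.0   # Zen 2 clock with roughly the same single-core score
PS5_CPU_GHZ = 3.5    # assumed: PS5 CPU at its maximum clock

def fraction_of_ps5_core(a78_clock_ghz):
    """Estimate one A78 core's performance as a fraction of one PS5 core."""
    zen2_equivalent_ghz = a78_clock_ghz * ZEN2_REF_GHZ / A78_REF_GHZ
    return zen2_equivalent_ghz / PS5_CPU_GHZ

for clock in (1.5, 2.0):
    print(f"{clock} GHz -> {fraction_of_ps5_core(clock):.0%} of a PS5 core")
```

This lands at roughly 57% and 76%, in line with the 55%-75% range quoted above.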

I think Switch 2 is in a good position if it also has 8 cores; it would match the PS5/Xbox Series core for core.

Switch's biggest issue was its disadvantage in core count: 3 available to games (out of 4) vs. 6 available to games (out of 8).

The A57's cores weren't a slouch compared to the processors in the PS4/Xbox and, IIRC, could match the weak cores in the PS4/Xbox One if clocked at 1.5 GHz, versus the 1 GHz retail clock.

So if Switch 2 can hit around 55% of PS5 on the very low end, it would be in a much better position than Switch ever was. It all depends on final clocks, and knowing Nintendo tends to compensate for the previous console's weaknesses, I strongly believe that, core count parity aside, they will aim to get maximum clocks out of the CPU to close the gap. CPU power isn't something they can fix with DLSS, whereas on the GPU side there's the DLSS crutch to lean on as the generation progresses.


- Pronouns

- She/Her

Having a full sized, full speed A78C core dedicated to the system software seems like it could open some interesting possibilities. No matter what's actually running, from the smallest 2D puzzler to the biggest and best open world game with mesh shaders, the system will have access to 1-2GB of (also full speed) memory and a full core. That core alone is something like a Wii U's total CPU capabilities. Why slow it down? Make use of it! The things the system could do. They could have a new class of application for media or third party voice chat, though as much as I doubt that, it should mean a more feature rich, even faster console UI.

Gerald

Bob-omb

- Pronouns

- He/Him

I’m personally expecting 2.0-2.5 GHz for the CPU; even 2 GHz would already be really good.

Maybe reaching here, but I would say there's a non zero chance.

I don't think using an LCD display for a devkit and then using an OLED display for retail hardware makes sense, especially since I imagine colour calibrating for an LCD display is different from colour calibrating for an OLED display, especially where handheld mode is concerned.

brainchild

Moblin

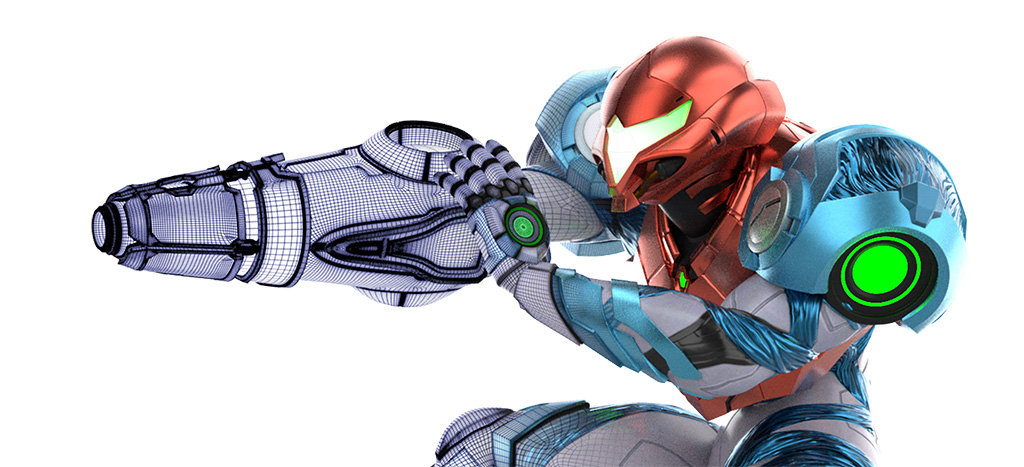

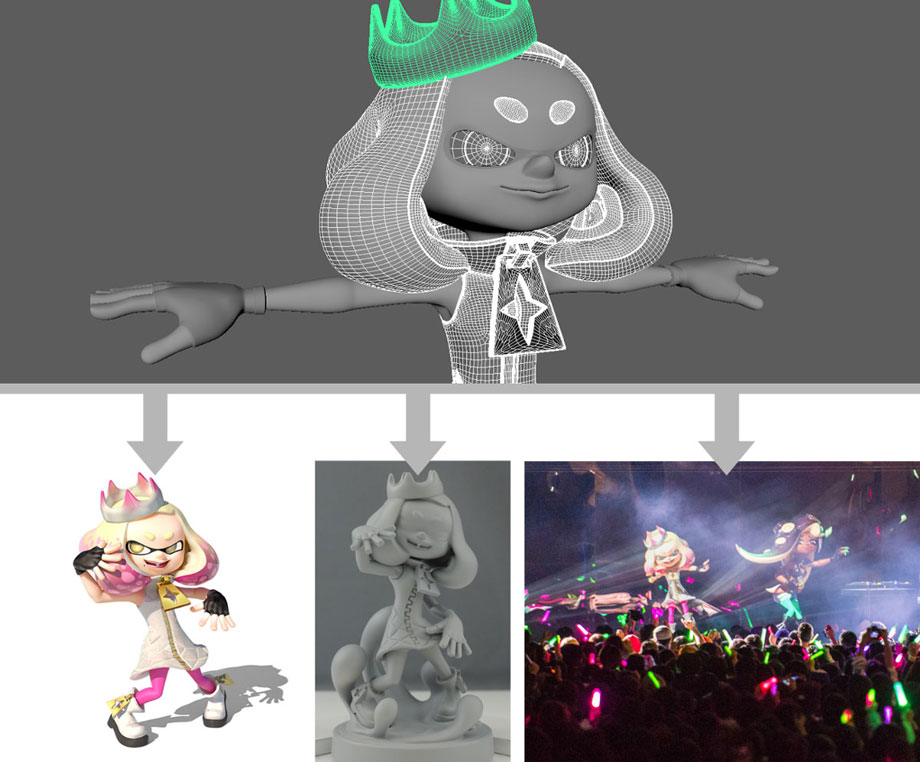

It's someone who works at Nintendo, using a pseudonym online, so don't direct-link people here; they probably want to keep a low profile. It had nothing to do with Switch 2 power, it's just an example of what a Nintendo artist can do with more power.

I think your point was clear from the beginning and is valid. A lot of people believe Nintendo artists will have growing pains through this next era of development due to inexperience authoring higher fidelity assets, but the reality is that they've been authoring higher fidelity assets for many years due to the nature of their job. Source assets != in-game assets. They are certainly capable of authoring high fidelity assets. The challenge will come in optimizing them for a specific platform.

As far as I know (which very possibly is wrong), no Switch games got a color calibration patch after swoled released?

I stand corrected. I just assumed that since it doesn't make sense for Apple to use 4 GB modules, it wouldn't make sense for Nintendo either.

- Pronouns

- She/Her

We know T239 has an 8-core, single-cluster configuration of A78C. Assuming one core is reserved for the system software, which seems like a reasonable assumption given how Nintendo has handled the system software on Wii U, 3DS and Nintendo Switch, that leaves 7 whole cores for games to access. This is technically half a core more than PS5, which retains three threads (one and a half cores) for system software.

That threading is part of the kicker though, PS5 is multithreaded, games have access to 13 threads vs. 7 on the Switch successor.

Honestly that's pretty well equipped. On PS5, multithreading certainly doesn't give you double the performance, and doing it on all cores simultaneously kicks the maximum speed down a notch.

I wouldn't call the CPU configuration of the Nintendo Switch successor the same performance category, but being realistic, it still seems like as good a CPU as you could get in a slim enough power budget for a handheld.
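The thread counts in this comparison can be checked with a small sketch. The reservation figures (one core on the Switch successor, three threads on PS5) are the assumptions stated in this post, not confirmed specifications, and the helper name is hypothetical.

```python
def game_threads(cores, threads_per_core, reserved_threads):
    """Hardware threads left for games after the system software's share."""
    return cores * threads_per_core - reserved_threads

# PS5: 8 cores with 2 threads each, 3 threads held back for the system.
ps5 = game_threads(cores=8, threads_per_core=2, reserved_threads=3)
# Switch successor (assumed): 8 single-threaded cores, 1 held back.
successor = game_threads(cores=8, threads_per_core=1, reserved_threads=1)

# In whole-core terms the PS5 figure is ps5 / 2 cores.
print(ps5, ps5 / 2, successor)
```

So the "half a core more" claim is about physical cores (7 versus 6.5), while the thread count still favours PS5 (13 versus 7).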

TamazonX

Former Wii U Evangelist

- Pronouns

- she/her

I'm mostly a lurker. This makes my 58th post. lol

- Pronouns

- She/Her

The system settings on OLED Model have a colour setting. The "vivid" mode is pretty much the uncalibrated setting, the other setting is calibrated to appear like the original system.

If you were planning to launch a system with an OLED panel, you'd almost certainly want to provide developers with an equivalent panel as soon as possible so they can calibrate their games for the release model. This actually is something Nintendo provided for OLED Model, with the ADEV unit.

Given we have no evidence of an ADEV equivalent, it seems reasonable to assume development kits are using LCD panels because the release model is expected to, too.

Eclissi

Moblin

Supposedly the placeholder for the real code name Oz or Ounce. Call it whatever the hell you want at this point.

I understood that oldpuck was aware of the console's codename but that he preferred not to reveal it. Have there been any developments in this regard?

Still, if OLED-specific color calibration makes such a difference, you would expect at least Nintendo's own games to get patched.

rinku

Cappy

- Pronouns

- He/Him

As far as I understand it, SMT has little impact on most gaming performance; most games don’t use more than 6 threads anyway.

Gerald

Bob-omb

- Pronouns

- He/Him

It all depends on the A78's IPC. Some data say it is between Zen 2 and Zen 3 in IPC, and some people are even saying it has higher IPC than Zen 3. We must also remember that the A78C is better than the A78 because it is specialised for gaming purposes.

- Pronouns

- She/Her

They did - at the system level, with the vividness toggle.

But people LIKE vivid, even if one thinks one shouldn't, and that uncalibrated saturated image was part of the pitch for OLED Model. A calibrated picture is not necessarily an appealing one. Nintendo continued to produce games targeting the original panel, and OLED users could opt to use that original calibration target, or enjoy the less accurate but more vivid default calibration of the OLED display.

This is a little different to the start of a generation, where Nintendo would either be telling all the developers of the system that the retail panels will have totally different colour rendering, and that they'll have to scramble to fix it when final units are delivered, or they'd be launching with an OLED panel calibrated to LCD specifications, at which point, why?

What is the simpler answer, really? That Nintendo decided to give developers LCD panels before launch despite planning an OLED panel for the system, or that they provided what a developer kit should be: representative panels with comparable colour rendering, if not the same panels?

This would also presuppose Nintendo has spun cost cutting measures on their head; development kits are essentially cost-no-object devices, production consoles are not. Why would the development kits get the cheaper panels?

The Nintendo Switch successor almost certainly has HDR to lean on, new games and patched games can dazzle far beyond the OLED Model's relatively paltry colour rendering.


The name Oldpuck heard was Muji. It has since been discovered that it was probably a placeholder for Oz (Ounce).


- Pronouns

- She/Her

It doesn't impact games that aren't heavily CPU reliant, but is unequivocally a consideration for those that are.

However, your point is good news for the Nintendo Switch successor: most games aren't pushing the CPU of the PS5 all that hard and should fit neatly into the constraints of 7 A78C cores.

MetalLord

Koopa

Yeah, even if they won't use it at work, most artists are always researching new technology in their free time; they aren't learning it just now.

Some even have experience with publicity renders, which are really high poly.


About the cost cutting measures: if Nintendo knew this was a temporary kit while waiting for final hardware, and devs would have ample time to correct color calibration, why not just go with a cheap one?

Also, I still think that if it was such a big deal, they would patch their games for optimal colors on the "vivid" mode. And who's to say they won't have system level settings on the new display as well?

I mostly agree with you, just trying to play devil's advocate at this point.


- Pronouns

- She/Her

I'm glad we agree. While I do entertain the idea of OLED as possible for the launch model, based on what we know I do think it's very unlikely.

As for your points:

Development kits cost an absolute bomb, so there's really no sense in trying to cost cut them beyond the bare minimum. Having a screen with totally different colour rendering would be pretty severe, and would only save a few dozen USD per unit, when units probably cost thousands.

Calibrating games for the "vivid" mode essentially nullifies the vividness, and makes for less accurate colour rendering on the non-vivid setting, which system settings define as more accurate. It would also require games to read at least the hardware configuration, and ideally system settings, to do so. That's a lot of work for something that could cause problems and users can fix themselves with a two second toggle, right?

Why patch it when it's in the system settings?

My point earlier about the importance of this being a new generation is hard to explain I feel... Basically, the launch system sets the standard for the generation, any games have to work and look acceptable on that unit. You don't really want to be messing around with differences in colour rendering between development kits and consumer hardware when you're doing a generational transition, certainly not when the system is set to support HDR, where these things are especially important. Changing things mid-way through a generation, with a built in setting to use the original model's calibrated colours is one thing, changing them between launch development kits and launch units is another.


Then why did Nintendo provide Aula devkits, if it wasn't for devs to make OLED-specific calibrations?

- Pronouns

- She/Her

Lots of reasons! One reason is the same reason they provided development kits for the Nintendo Switch Lite - a different sized screen affects gameplay and UI in small ways, and technical changes might have to be accounted for, especially with the Lite. Meanwhile, for screen calibration, if you're calibrating for OLED Model you should calibrate for the "Standard" mode - not the Vivid mode. So that goes back to where the patch was provided: the system settings.

Also, ADEV provided more upgrades than just the screen, like additional RAM. It wasn't really for screen calibration so much as "this is the latest development kit". To calibrate for colour across all models, well, there's a screen colour setting provided for that!

- Pronouns

- She/Her

On the subject of "why is it so unlikely development kits use fundamentally different displays from the production model", maybe it would be helpful to consider an exaggerated analogy. Imagine we go back a few decades, with a Brazilian developer making a console for the North American market. The development kit outputs PAL at 60 Hz, but the production unit is NTSC60! That's a problem for colour rendering - the problem we're discussing. If you're making games for the North American market in the analogue era, you should work with development kits capable of NTSC. Same idea, basically, just a bit less problematic, when you develop on an HDR LCD for a console with an HDR OLED panel. Wouldn't Nintendo have considered that problem, and made sure everyone was working with the 'right equipment' from the get-go?

I've spilt an awful lot of ink on this subject, despite the chance I could be very much wrong, but the core point is, they could, but why?


Thanks for the explanation, but it's the first time I've heard of a Lite-specific devkit. Not sure you are right about that detail.

Shoulder

Koopa

What DLSS and FSR frame generation are currently doing are very much the same. Take frame 1, take frame 2, take into account data about motion of objects in those frames, and from that produce an in-between frame.

What the video you shared is about (only skimmed it but have seen another covering the same demo) is something quite different, and basically involves slightly modifying frame 1 in extremely cheap ways to simulate the movement of the game camera though the objects within the frame aren't moving. I feel like this would fall apart the more active a game is, though. When we look at demos that are basically an environment and some models sitting there, yeah, just making fake camera movements can be OK. But what if you're shifting the image to the left (simulating movement to the right), while an object on screen is also supposed to be moving right? For each "real" frame it would be correctly moved to a new position further to the right, while in all the in-between frames it would be sliding left along with the environment. Probably there are more tricks one could use to work around this... like save the environment and moving objects separately, and change the way they layer together based on individual movement properties. But that seems like a more involved thing a game engine would need to really be built for, whereas DLSS/FSR frame gen are "just do things as you've always been, make sure you provide the information you've probably already been providing for upscaling, and we'll take care of the rest". Relatively easy for PC games to support.
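A toy one-dimensional sketch of the difference described above, assuming screen positions in pixels and made-up function names. Interpolation places an object between its positions in two real frames, while the cheap camera-shift approach only slides frame 1 by the camera's movement and ignores the object's own motion. Real DLSS/FSR frame generation is far more involved than a linear blend; this only illustrates the failure case.

```python
def interpolated_x(x_frame1, x_frame2, t=0.5):
    """In-between position from two real frames (linear motion assumed)."""
    return x_frame1 + (x_frame2 - x_frame1) * t

def camera_shifted_x(x_frame1, camera_dx, t=0.5):
    """In-between position from frame 1 alone: the whole image slides
    opposite to the camera's movement, moving objects included."""
    return x_frame1 - camera_dx * t

# Camera pans right by 4 px per frame; the object moves right by 8 px per frame,
# so on screen it goes from x=100 to x=104 between the two real frames.
x1, camera_dx, object_dx = 100, 4, 8
x2 = x1 + object_dx - camera_dx

print(interpolated_x(x1, x2))           # between the two real positions
print(camera_shifted_x(x1, camera_dx))  # left of where the object started
```

With these numbers the interpolated frame puts the object at 102, while the camera-shifted frame puts it at 98, so it slides left with the environment before snapping right on the next real frame.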

I know in many games today, different assets are rendered at different frame rates depending on how far they are relative to the player.

So say a game plays at 60fps, all NPCs will do so as well. But as you get further away from the player, and lower quality assets are loaded, the models of the NPCs might be rendered at half the rate, so 30fps.

I was curious if this principle could work for static objects in order to reduce rendering requirements, but maybe it’s not as simple as that? I’d like to hear folks smarter than I go into details of variable rendered assets besides just shaders for example.
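The idea of updating distant characters less often can be sketched like this. The distance threshold and the names are invented for the example and are not taken from any particular engine.

```python
FAR_DISTANCE = 50.0  # hypothetical threshold, in arbitrary world units

def update_interval(distance):
    """Frames between animation updates: every frame up close, every other frame far away."""
    return 1 if distance < FAR_DISTANCE else 2

def updates_per_second(distance, frame_rate=60):
    """Count how many frames in one second actually update the character."""
    interval = update_interval(distance)
    return sum(1 for frame in range(frame_rate) if frame % interval == 0)

print(updates_per_second(10.0))   # near NPC
print(updates_per_second(120.0))  # far NPC
```

In a 60fps game this gives 60 updates per second for the nearby NPC and 30 for the distant one, matching the half-rate example above.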

It looks like I need to retract my previous statement.

Do M4 iPad Pros with 8GB of RAM actually have 12GB?

Several people have already torn down the new iPad Pro models and photographed their M4 chips and the RAM alongside them. Most teardown I could find were of the 256GB model, but one website (TechInsights) did the 1TB model as well. The one 1TB teardown I could find showed 2 RAM chips alongside...forums.macrumors.com

The new iPad Pro with 8GB of RAM has the same performance as the Switch 2, but Apple has restricted 4GB of it. (What?)

Edit: It looks like almost the same memory chip as Switch 2.

That is such an Apple thing to do if that turns out to be true. Plus, if it’s purely a software lock, someone along the way will find a way to unlock that remaining 4 gigs. I’ve owned Apple products of varying types for over two decades now, and these days, I find myself less and less impressed. About the only device I tend to be ok with nowadays is iPhone, and that's honestly mostly because of iMessage. The 2017 iPad Pro I’m using right now to type this post is really nothing more than a content consumption device, and since Apple refuses to put full blown MacOS on iPad to begin with, I’m getting sick of them these days.

And before someone says, “But-but-but MacOS won't work on an iPad!“ Yes, it fucking can. Apple just refuses to make it work, and it could work, and work well. Guess they really just want folks to get a MacBook, which given how expensive iPads have gotten, especially the pro models, it’s a joke IMO.

Ok, that's my rant about Apple, so apologies for going off topic.

If you look at it positively, it‘s a goal to work towards. You could maybe even reach it before Switch 2 launch.

The only goal I have right now is to get over this goddamn stomach bug from earlier in the week, and let my ass heal from all the discharge. And I’m not going to be able to post another thousand posts between now, and the Switch 2 launching…maybe.


Just for your current message count, blink and you might miss it

* Hidden text: cannot be quoted. *

This is my post #936. We'll be nearing Switch 3 by the time I hit 2000.

- Pronouns

- She/Her

HDEV

LuigiBlood

Mage Robot

Please stop saying Oz lol

Nintendo has never used that word like this; it's either Muji (through the Nintendo Package Manager for devs as previously revealed) or Ounce (Switch OS leftover SSL cert package file).

I know Oz sounds enticing as shortened Ounce and how it's close to NX but we're not even certain Nintendo actually thinks that way especially with how the PCB names previously uncovered clearly aren't named as usual either.

The whole U-King-O name is definitely one thing where I might be leaning more towards Ounce, as well as how Fifty is possibly a codename for the new Joy Cons (oh yeah, didn't you know? the Switch OS has that apparently and there were updates about those), which for me is closer to the theme of using amounts as words, but seeing Oz just bothers me because it really comes from absolutely nothing lol

darthdiablo

+5 Death Stare

- Pronouns

- he/him

This is my post #936. We'll be nearing Switch 3 by the time I hit 2000.

Did it work? Were you able to see the hidden comment of mine? (Which will become hidden again once you have 937 posts lol - visible only when you have 936 posts exactly)

Pillydillsy

The greatest banana sherif in town

- Pronouns

- He/ him

You have posted 23584 times over the course of roughly 931 days, putting you at an average of 25.3 posts a day. Goodness

snoota

Rattata

- Pronouns

- He/Him

Nah I'll just continue to mostly lurk as usual

If you look at it positively, it’s a goal to work towards. You could maybe even reach it before Switch 2 launch.

Yes it did.

Did it work? Were you able to see the hidden comment of mine? (Which will become hidden again once you have 937 posts lol - visible only when you have 936 posts exactly)

WonderLuigi

eegee

We Wii U apologists learned the importance of silence and droughts of information.

I'm mostly a lurker. This makes my 58th post. lol

bernbern345

Rattata

- Pronouns

- He/him

So I figured I’d ask here as I’ve been browsing this thread for a while, and you guys are all super knowledgeable.

I don't have time to compile the details, but, from the shipment listings:

The console has 12 GB RAM, from two 6 GB 7500 MT/s LPDDR5 (LPDDR5X? it's unclear) modules. The internal storage is 256 GB of UFS 3.1.

Thank you to several other people who have been sharing in the research on these listings to determine this.

Edit: I put this in hide tags without thinking because it's shipment stuff, but this is going to get out no matter what, so I might as well remove them.

Does DLSS work as a setting that Nintendo could theoretically have in system settings and that you can use on every game, including Switch 1 titles, or is it something that needs to be coded into the game?

Also, do you all believe Switch 2 will let Switch 1 games perform better in handheld mode? For example, could Xenoblade 2 maybe get closer to docked performance?

WonderLuigi

eegee

It's not automatic. The game needs to be programmed around it.

So I figured I’d ask here as I’ve been browsing this thread for a while, and you guys are all super knowledgeable.

Does DLSS work as a setting that Nintendo could theoretically have in system settings and that you can use on every game, including Switch 1 titles, or is it something that needs to be coded into the game?

Also, do you all believe Switch 2 will let Switch 1 games perform better in handheld mode? For example, could Xenoblade 2 maybe get closer to docked performance?

carbvan

Chain Chomp

- Pronouns

- he/him

Games must be updated to include DLSS support. It's not a one-size-fits-all solution, and many games need some work to look good with the tech. As for your second question, hard to say. It really depends on the game, though with something like XBC2 it'll definitely run closer to 1080p than 144p lol

So I figured I’d ask here as I’ve been browsing this thread for a while, and you guys are all super knowledgeable.

Does DLSS work as a setting that Nintendo could theoretically have in system settings and that you can use on every game, including Switch 1 titles, or is it something that needs to be coded into the game?

Also, do you all believe Switch 2 will let Switch 1 games perform better in handheld mode? For example, could Xenoblade 2 maybe get closer to docked performance?

Again, I will say OZ and there's not a damn thing anyone can do about it. It's my preferred name for the thing.

Please stop saying Oz lol

Nintendo has never used that word like this it's either Muji (through the Nintendo Package Manager for devs as previously revealed) or Ounce (Switch OS leftover SSL cert package file).

I know Oz sounds enticing as shortened Ounce and how it's close to NX but we're not even certain Nintendo actually thinks that way especially with how the PCB names previously uncovered clearly aren't named as usual either.

SoldierDelta

Designated Xenoblade Loremaster

- Pronouns

- he/him

From how I understand it, DLSS is essentially software that has to be implemented game by game. It isn't external software that magically solves everything. If Nintendo lacks performance/fidelity modes, then it's very likely that each game will have a designated DLSS setting attached to it. For instance, if Alan Wake 2 were ported to the system, then alongside the texture quality, RT settings, models and other various settings, DLSS would likely be configured by the developers so the game runs how they want it to (for instance, if the game targets a locked 30fps at a specific graphics setting, they could set it to either Balanced or Performance to maintain that framerate). It has to be configured in the same way.

So I figured I’d ask here as I’ve been browsing this thread for a while, and you guys are all super knowledgeable.

Does DLSS work as a setting that Nintendo could theoretically have in system settings and that you can use on every game, including Switch 1 titles, or is it something that needs to be coded into the game?

Also, do you all believe Switch 2 will let Switch 1 games perform better in handheld mode? For example, could Xenoblade 2 maybe get closer to docked performance?

As for BC, I don't really see a reason why games like Xenoblade 2 wouldn't be running in SW1 docked mode when played portably on the Switch 2. The settings toggle is already there; it likely just needs to tell the software to run at the docked preset. This is the bare minimum expectation for what Nintendo is going to do with BC on Switch 2 tbh.
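To put rough numbers on the Balanced/Performance point above, here is a minimal sketch of how a DLSS mode maps an output resolution to an internal render resolution. The per-axis scale factors are NVIDIA's commonly documented defaults for DLSS Super Resolution on PC; whether any Switch 2 title would use these exact values is an assumption, and the names `DLSS_SCALE` and `internal_resolution` are made up for illustration.

```python
# Hypothetical illustration only: per-axis render scale for each DLSS mode.
# These are the commonly documented PC defaults; a game may override them.
DLSS_SCALE = {
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
    "ultra_performance": 1 / 3,
}


def internal_resolution(output_w, output_h, mode):
    """Return the (width, height) the game would render at before upscaling."""
    scale = DLSS_SCALE[mode]
    return round(output_w * scale), round(output_h * scale)


# Example: what a 1080p output would be rendered at internally in each mode.
for mode in DLSS_SCALE:
    print(mode, internal_resolution(1920, 1080, mode))
```

The developer picks the mode (and the rest of the graphics settings) per game, which is why it can't simply be a system-wide toggle.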

bernbern345

Rattata

- Pronouns

- He/him

Thank you, I wasn’t entirely sure how it worked but that makes sense.

It's not automatic. The game needs to be programmed around it.

Do you think Switch 1 games will play better in handheld mode if the new Switch is backwards compatible? I always hoped we could get close to docked performance. Theoretically handheld mode will be more capable on this system.

oldpuck

Like Like

- Pronouns

- he/they

Ah, neat!

Has to be coded into the game, as several folks have said.

So I figured I’d ask here as I’ve been browsing this thread for a while, and you guys are all super knowledgeable.

Does DLSS work as a setting that Nintendo could theoretically have in system settings and that you can use on every game, including Switch 1 titles, or is it something that needs to be coded into the game?

It's debatable, but I think that better performance will require patches.

Also, do you all believe Switch 2 will let Switch 1 games perform better in handheld mode? For example, could Xenoblade 2 maybe get closer to docked performance?

Threeman

Cappy

Yeah, obviously people missed the point: the codename actually reveals it will port Mac OS X features, since an ounce is a snow leopard.

Please stop saying Oz lol

Nintendo has never used that word like this it's either Muji (through the Nintendo Package Manager for devs as previously revealed) or Ounce (Switch OS leftover SSL cert package file).

I know Oz sounds enticing as shortened Ounce and how it's close to NX but we're not even certain Nintendo actually thinks that way especially with how the PCB names previously uncovered clearly aren't named as usual either.

The whole U-King-O name is definitely one thing where I might be leaning more towards Ounce, as well as how Fifty is possibly a codename for the new Joy Cons (oh yeah, didn't you know? the Switch OS has that apparently and there were updates about those), which for me is closer to the theme of using amounts as words, but seeing Oz just bothers me because it really comes from absolutely nothing lol

bernbern345

Rattata

- Pronouns

- He/him

Is there something with Switch handheld mode that locks games to a lower fps? I know CPU clock speeds get sliced in half, but if Switch 2 has a better CPU and clock speeds, shouldn’t handheld games perform better? I guess I’m asking: can we ever expect docked visuals?

Games must be updated to include DLSS support. It's not a one-size-fits-all solution, and many games need some work to look good with the tech. As for your second question, hard to say. It really depends on the game, though with something like XBC2 it'll definitely run closer to 1080p than 144p lol

Please read this new, consolidated staff post before posting.

Furthermore, according to this follow-up post, all off-topic chat will be moderated.
