Deleted member 887

Guest

This makes sense, but it's still hard to reconcile the idea that 'something' was cancelled in favor of something else in any short timeframe. Just how realistic is it that a device was planned to be released in 2023, then 'cancelled' to pivot to a different thing in 2024?

Project Indy is the Nintendo Switch. Project Indy went through several iterations before landing on what we got, and the name "Nintendo Switch" was given to a product that didn't yet have the TX1 inside it. But like I said, February 2014 was when Iwata made the comments about "adequately absorb the Wii U architecture", which we now know were about Project Indy.

Sure, they were simultaneously considering other options, but we know they hadn't 100% committed to Nvidia at that time. NX was announced in 2016 when the TX1 was finished.

There isn't enough data in the gigaleak to say what the hell was going on there or how that device evolved (and I have my own theories), but here is what is clear.

At the beginning of 2014, Nintendo was building Indy around a custom SOC from ST called Mont Blanc. Mont Blanc had two years of development, a custom GPU, and a complete spec sheet with pinouts (though this doesn't mean final hardware).

Mont Blanc was a hybrid 3DS/Wii U sorta deal, with 4 ARM cores and a "decaf" version of the GPU in the Wii U. We can't be certain, but the design seems unlikely to have been as powerful as the Wii U, so at least at the start of SOC development, the idea was still for a device that would continue the handheld line independently of the TV line.

By August of 2014, this device was called Nintendo Switch. By the end of 2014, the "New Switch" had replaced Mont Blanc with a TX1.

I do not believe there is any document in the gigaleak that indicates when this transition happened, but those who have reviewed it say that Nintendo's discussions did influence the TX1's design (IIRC over security concerns, not performance).

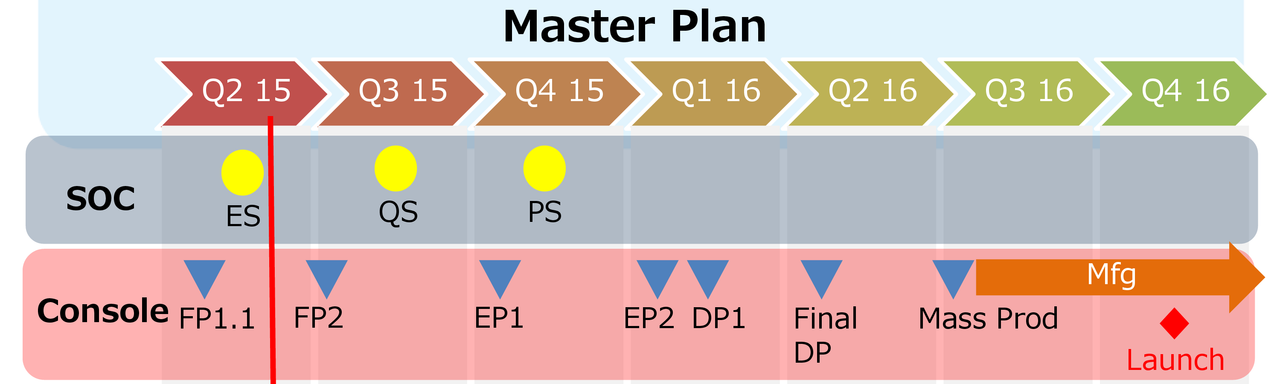

Sometime in Q1 2015, Nintendo builds functional prototype 1 for the Switch. In March, Iwata says the NX is coming during the DeNA press conference, and by June, they're planning to launch in holiday 2016.

So, yes, Nintendo had moved to a TX1-based design before the TX1 launched.

Yes, Nintendo can scrap an SOC with 2 years of custom development.

No, it's unlikely that the SOC manufacturer is going to put your custom chip in rando hardware when you do - Mont Blanc never showed up anywhere.

Some version of the Switch was in development for 5 years before launch, and yes, some of the hardware designed in that first year wound up in the final product (the DRM hardware on the cartridge reader in the Switch was designed in 2012 as part of Indy).

Going from pivot to final hardware took anywhere from 2 to 2.5 years, depending on how you look at it. So yeah, the idea that a device scrapped in mid-2022 would get a replacement out the door in 2024 has precedent.

Again, I'm trying to dodge the narrative a bit here. I suspect that none of the puzzle pieces seem to fit because not only do we not have all the pieces, we also don't know what the box looks like.