Eclissi

Moblin

... and General Direct was in March (2025)!

> The plot twist was that the Indie Showcase was in April....

Not sure why you're so confused. Everybody knows the alphabet goes A, B, C, E, D...

> Well that's intuitive. /s

Not in my alphabet

> Well that's intuitive. /s

I had some anxiety about seeing your reply because when I woke up this morning, I thought I'd been kind of a dick. Sorry about that.

> I mostly disagree about dual issue being useless also, which is why I only quoted that... It has only been useless for gaming because of the open-ended nature of PCs and the shader compiler not being able to leverage those instructions often enough to impact performance. With a closed platform, though? It's a completely different story, and Sony is actively betting on it for their Pro model as we speak.

I have seen shook Nintendo. We all have. Nothing about what's now happening behind the scenes or in plain sight is giving off fear and uncertainty. The only panic I see is from forum dwellers and people who are chronically online.

> It just seems to be about shifting the time window for transitioning to a new system ever further into the future, to keep the Switch alive as the main system ever longer. I mean, Nintendo is not even comfortable confirming that they are working on a successor to the Switch, and the president panicked when the gamescom rumours happened and went out of his way to deny the whole thing as fast as he could, and Nintendo were so frightened by those gamescom reports that afterwards they started boycotting gamescom. Thus their panic is even causing them to sabotage themselves by removing the whole aspect of building relations with third parties and showing off their hardware to more third parties, like they did last year at gamescom.
>
> Nintendo seems to be trying to follow their own version of the Xenoblade 3 plotline about the "Endless now": Nintendo wants to stay in their version of the "endless now" with the Switch.

Don't worry Oldpuck, it's okay. This is hardware speculation at the end of the day; people are gonna preach what they believe in and stick to it. You know how much I appreciate your posts, and I'm sure the others do as well!

> I had some anxiety about seeing your reply because when I woke up this morning, I thought I'd been kind of a dick. Sorry about that.

I actually misunderstood what you meant about FP4 dual issue. I thought you were referring to the transformer engine in the tensor cores - which can convert some types to fp4 for performance improvements during model training - not dual issue in the shader cores. That was my mistake.

I think it feels like Nintendo could have gotten more, because we're not looking at "what chip could Nvidia have delivered by 2025" and instead looking at "all the technology that's available if you started in 2025". But Orin came out a few months before Ada, it came out after the Cortex-A715, it came out a year after LPDDR5X, two years after Apple silicon hit 5nm.

Yet it is still an Ampere, A78, LPDDR5, 8nm chip. And it costs $2,000! This is not a price constrained device! That's because integrating each of these separate technologies into a single, stable chip, along with the custom hardware built for it, takes time and money. A state-of-the-art SoC will always lag behind the state-of-the-art individual components. There is a reason that the GPUs in Sony and Microsoft's consoles are secretly still RDNA1 under the hood despite releasing at the same time as RDNA2.

12 months of additional development time would not have changed the price considerations on the node, when you're talking about a device that Nintendo is planning to keep for 7 years anyway. 12 months of additional development time would not have changed the CPU choice, since that's maxed out by backwards compatibility. 12 months of additional development time might have baked in a minor upgrade to the memory, but it wouldn't have changed bandwidth targets, which would be based on the node (and its power consumption) and the needs of the GPU. The GPU size is dictated by the node and Nintendo's performance target.

The GPU architecture is a halfway design between Ampere and Ada. Perhaps it could have gone "full" Ada, but beyond what we've already got, that's only three updates. A minor, researcher targeted, update to the tensor cores. A minor update to the RT design. Frame gen, which is already not viable.

These things, to me, do not constitute a gap between "long lived device" and "woefully out of date"

Yeah, cache node shrinking has died so it’s starting to get stacked now and the same will happen with other CPU and GPU components.

I just wonder whether this will massively increase electricity consumption.

Thanks! I really appreciate your input as well.

> Don't worry Oldpuck, it's okay. This is hardware speculation at the end of the day; people are gonna preach what they believe in and stick to it. You know how much I appreciate your posts, and I'm sure the others do as well!

Basically. Nvidia technically is saying they'll have a Blackwell SoC around the same time that Blackwell comes out - but that is the Atlan/Thor project, which has been very troubled, and I would take what they're saying with a grain of salt.

> So, essentially... It feels like Nintendo could've gone for more because of its already delayed release date, and nearly three full years is quite a bit on a computer timescale for people. The technology might go all the way back to 2022 technically, but getting it into an SoC (also power constrained) in the first place still takes time, right?

I think Nvidia could offer something better, but I don't think they could have done it at the same cost. Potentially if they'd gone fully last minute, and shelled out the cash to really rush the project, they might have been able to offer a 3nm based chip. But it would have been really expensive, even before adding the "rush job" costs and consequences.

> I must admit, when you see it from this perspective... Maybe Nvidia really couldn't offer anything better than an Ampere+ device, and that's reassuring at least! I assume the posters reading had their concerns eased as well, if they had any.

I'm honestly not sure. I don't believe it can be backported, though I don't think it would be useful if it were. But I could be misunderstanding the tech. @Anatole is probably the person here

> Now, on a related note I suppose... As far as I know, dual issue shader cores are a full Ada feature, but is there really no chance for them to be backported in a hypothetical scenario? The compute left on the table for a closed system is pretty significant, and Nintendo clearly needs every single trick up its sleeve. Imo, it's the only reason full Ada might have been worth it, although I'm equally curious about the tidbits T239 already has (other than the potential node).

Lol but I am not getting upset. In fact, I find this place very informative and a bit humorous.

> Says the guy who posts daily in the hardware thread

I love how Nintendo not attending Gamescom has now been spun into an all-out "boycott" because Nintendo are "frightened", "panicking", and "self-sabotaging", and that Gamescom is no longer just a consumer trade show but also apparently the only possible communication method Nintendo has with 3rd parties, all of a sudden. Again - all of this on the basis of a single report that delayed Switch 2 from one side of Christmas to the other, which cites absolutely fucking nothing about Nintendo arbitrarily trying to hold back their own successor hardware.

> It just seems to be about shifting the time window for transitioning to a new system ever further into the future, to keep the Switch alive as the main system ever longer. I mean, Nintendo is not even comfortable confirming that they are working on a successor to the Switch, and the president panicked when the gamescom rumours happened and went out of his way to deny the whole thing as fast as he could, and Nintendo were so frightened by those gamescom reports that afterwards they started boycotting gamescom. Thus their panic is even causing them to sabotage themselves by removing the whole aspect of building relations with third parties and showing off their hardware to more third parties, like they did last year at gamescom.
>
> Nintendo seems to be trying to follow their own version of the Xenoblade 3 plotline about the "Endless now": Nintendo wants to stay in their version of the "endless now" with the Switch.

Isn't that kinda the primary benefit of real time raytraced lighting? It just so happens that it also looks really pretty.

> It’s gonna be a boring time if video games take longer and longer to develop. Focus should go from realism to R&D in simplifying development times

There's an innovation that consoles have barely focused on, which is VR/AR. The Nintendo Switch successor, or the successor to the successor (Switch 3), could focus on VR/AR as its main concept/gimmick.

> The issue is just that the leaked chip suggests an extremely powerful and power hungry system.
>
> Enough time has passed and we're still potentially so far away from the system launch that the leaked chip may no longer be the actual chip, but in that case, 4N is more likely, as 4N is the last not shit node ever made and will be a mature node by 2025/2026. Even low power systems would be cheaper on 4N for a release in 2026.
>
> (And again, the major issue is that there are no real alternatives for Nintendo other than standard power leaps. Outside of Kinect stuff, there have been no interesting innovations in the tech sector that could be applied to control inputs, and Kinect is a terrible fit with a hybrid system. The Switch 2 will probably just be a power leap with no meaningful innovation because that's the only option Nintendo has available. Any innovation from Nintendo would have to come from completely custom R&D, which is wildly expensive and likely to fail)

While realism has a higher ceiling, I think we've got to remember that the reason we always see realistic art styles when pushing technology is because our measuring stick is real life. How close we get to that is what was used to define progress, and all those tools feed other styles.

> It’s gonna be a boring time if video games take longer and longer to develop. Focus should go from realism to R&D in simplifying development times

Isn't it biased for DF to use native rendering performance to measure Switch 2? Especially since Switch 2 will have many extensions from Nvidia, I don't think getting results close to the XSS is a hard thing to do.

> Nobody needs SNG revealed more than the full time YouTubers. These channels can't help but create daily videos that focus on Nintendo's next gen hardware, even when we lack any new details. There just isn't much enthusiasm for the withered Switch platform at this point, and the complete lack of compelling high profile software on the release schedule makes it all the worse. Nothing wrong with a couple remakes, but that doesn't raise the hype meter in a meaningful way.

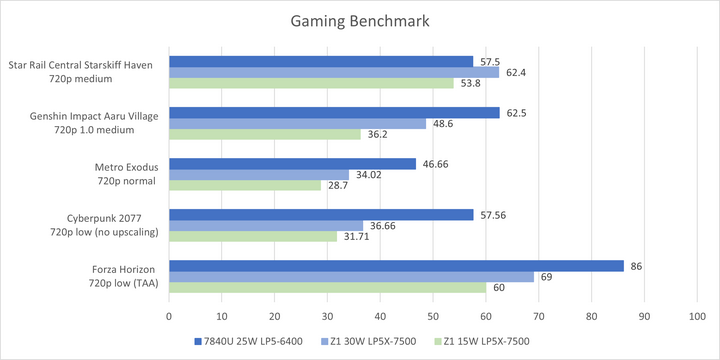

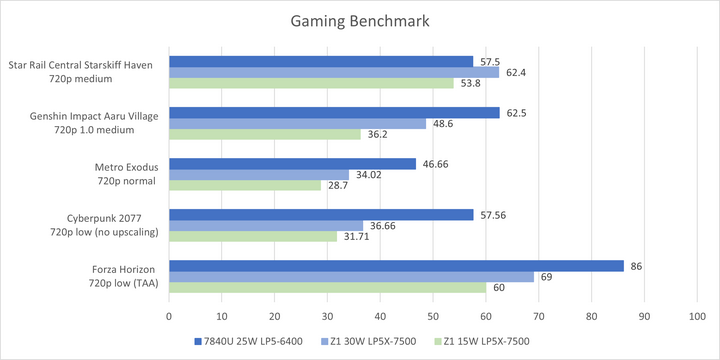

I was watching a clip from DF discussing a portable Series S handheld from Microsoft, and Rich pointed out that the Series S pulls around 80 watts. The Series S APU is on TSMC 7nm, and even with a couple of node shrinks, it would likely still be around 20 watts, best case scenario. They pointed out the ROG Ally, which already uses an advanced 4nm APU (Ryzen Z1), and that unit has a TDP of 15 W with an option for a 30 W Turbo mode. Even when pulling 15-30 watts, this APU is not on par with the Series S, still falling well short.

Nintendo is one of the few publishers in the world that has the full range of games, from smaller scale games like Kirby's Dream Buffet and F-Zero 99 to full AAA games like Zelda and 3D Mario. I believe this helped Nintendo A LOT this gen in filling the gaps in its schedule, unlike Sony, for instance, which doesn't have any games to release this year.

> It’s gonna be a boring time if video games take longer and longer to develop. Focus should go from realism to R&D in simplifying development times

I believe people forget that for Nintendo to be able to do some marketing at an event like this, prior preparation is needed, like billboards, standing material, and play booths, and all of this costs not just money but also time and outsourced material, which could lead to leaks. To attend an event like this, the marketing material should be done for games announced, idk, 2-3 months before? Even if we have a June Direct, they wouldn't have time to prepare the material for Gamescom for games announced in June, and bringing just evergreen games to this event wouldn't be worth the cost.

> It couldn't be, I don't know, that Nintendo doesn't particularly want to spend resources attending Gamescom and having to produce showfloor demos of the last handful of Switch 1 games, when at that point they will be going into the last half-year of development before Switch 2.

Imagine how crazy it would be if these YouTubers played and reviewed actual games?

> Nobody needs SNG revealed more than the full time YouTubers. These channels can't help but create daily videos that focus on Nintendo's next gen hardware, even when we lack any new details.

The Legend of Zelda production team is precisely the result of playing with gameplay ideas in a limited amount of time rather than cramming in as much stuff as possible, and this development model you're thinking of doesn't work for Nintendo, because the Legend of Zelda development team has no more time than a lot of top-tier AAA games, maybe even less, so they have to focus on what they're good at.

> I think we're approaching a threshold of the limits of how long someone wants to develop a game. Once you get past that 4-5 year mark, I think, similarly to how graphics get more real, it’s diminishing returns at that point. I think game development follows a similar pattern.
>
> At what point does a game developer, after working on the same fucking game for 8-10 years, just go, “Why the fuck am I doing this?” On the flip side, for those perfectionist types that are willing to work on it as long as it takes, where the game lives on forever as a symbol of how to finish a game (e.g. Zelda TOTK), or is revered for how good it is, there may be something to this.
>
> It used to be that the limits of technology were limiting developers' vision of what they wanted, and they had to adapt and be creative. Nowadays, they are not bound by those same limitations anymore, so I feel they try to cram in as much as they can.
>
> Replace the word “scientists” with “developers,” and you get a similar result:

Yes, I quite agree with this. The development of major North American big games after the 7th gen consoles has become a dilemma of going bigger and bigger, yet getting more and more mediocre. Whether it's Bethesda or Ubisoft or Sony, the development cycle will always be limited, and the pursuit of everything will only lead to more and more boring and uninspired games.

> I should clarify I wasn’t taking a dig at Nintendo specifically. It’s mostly all the other developers who want to cram as much as they can into an open world, not realizing the pieces may not be compatible with each other.
>
> I was using TOTK as an example of what developers SHOULD be doing given the kinds of development times we're seeing. There are games with longer development that don’t have the polish, nor the consistency, of what Tears of the Kingdom managed. Like you said, it’s about working within their constraints, and focusing on what they’re good at.
>
> Quite honestly, I think games like Ass Creed for example aren’t really good at anything anymore besides having a beautiful looking world.

> I'm honestly not sure. I don't believe it can be backported, though I don't think it would be useful if it were. But I could be misunderstanding the tech. @Anatole is probably the person here
>
> My limited understanding is that Hopper/Ada/Blackwell provide some lower precision datatypes, and a transformer engine - a way to use high precision datatypes for part of AI training and the faster, lower precision datatype later, when the loss of precision has little impact. This is primarily a training optimization.
>
> I've seen FP4 referred to as an acceleration for MoE models during inference, as in when the model is executing, not being trained. This is even further outside my wheelhouse, but these models are extremely large. This is about taking models so large their execution time is measured in seconds, or even minutes, and making them faster. Stuff like ChatGPT, large language models. I don't know that these models are ready for real time graphics operations, and if they are, I doubt they would ever run on something as small as Drake.

Realism isn't everything.

Games like Okami still look prettier than many others that strive to detail human pores.

FP8 is tricky and still fairly new; FP4 is even trickier. You need to allocate bits for 3 different things in floating point precision: the sign, the exponent, and the mantissa, which is the actual number you care about. For example, in base 10, 0.5578 * 10^-2, the sign would be +1, the mantissa is 0.5578, and the exponent is -2. In base 2, you can always assume that the leading digit is 1, so for example, in 1.011011 * 2^-4 (base 2), you only need to store the mantissa (.011011).
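To make the sign/exponent/mantissa split concrete, here is a minimal Python sketch that pulls the three fields out of an ordinary 32-bit float, using the base-2 example above (1.011011 * 2^-4). The helper name `float32_fields` is made up for illustration, and it assumes the usual IEEE 754 single-precision layout (1 sign bit, 8 exponent bits with a bias of 127, 23 stored mantissa bits).

```python
import struct


def float32_fields(x: float) -> tuple[int, int, str]:
    """Return (sign bit, unbiased exponent, stored mantissa bits) of x as float32.

    Hypothetical helper; only meaningful for normal numbers, where the
    leading 1 is implicit and therefore not stored.
    """
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    sign = bits >> 31
    exponent = ((bits >> 23) & 0xFF) - 127  # remove the float32 exponent bias
    mantissa = format(bits & 0x7FFFFF, "023b")  # the 23 stored fraction bits
    return sign, exponent, mantissa


# 1.011011 (base 2) * 2^-4
value = (1 + 0b011011 / 2**6) * 2.0**-4
sign, exponent, mantissa = float32_fields(value)
print(sign, exponent, mantissa[:6])
```

Only the `.011011` part shows up in the stored mantissa; the leading 1 is implied, which is the point made above.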

FP8: 8-bit floats

Depending on how you allocate the bits, you can emphasize precision or dynamic range. For example, if you allocate 4 bits for the exponent and 3 bits for the mantissa, the largest normal number you can represent up to the sign is 1110.111 (base 2), which is on the order of 2^8 (because of exponent bias, and allocating a few numbers for things like NaN and infinity), while the smallest normal value is 0001.000 (base 2), on the order of 2^-6 (any values with an exponent of 0000 are considered subnormal).

If you instead use 2 bits for the mantissa and 5 for the exponent, the largest and smallest normal numbers respectively become 11110.11 (base 2), on the order of 2^15, and 00001.00 (base 2), on the order of 2^-14. So increasing the number of bits for the exponent enhances the dynamic range, at the expense of precision. Lots more info here: arXiv
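The range figures above can be reproduced with a few lines of Python. This is only a sketch under the same IEEE-style assumptions the post uses (bias of 2^(e-1) - 1, all-zeros exponent for subnormals, all-ones exponent set aside for NaN/infinity); real hardware FP8 variants may reserve encodings differently, and `normal_range` is a made-up name.

```python
def normal_range(exp_bits: int, man_bits: int) -> tuple[float, float]:
    """Smallest and largest normal magnitudes of an IEEE-style minifloat.

    Assumptions (not taken from any vendor spec): the bias is
    2**(exp_bits - 1) - 1, the all-zeros exponent field means subnormal/zero,
    and the all-ones exponent field is reserved for infinity/NaN.
    """
    bias = 2 ** (exp_bits - 1) - 1
    smallest = 2.0 ** (1 - bias)                 # exponent field 0...01, mantissa 0
    max_exp_field = 2 ** exp_bits - 2            # exponent field 1...10
    largest = (2.0 - 2.0 ** -man_bits) * 2.0 ** (max_exp_field - bias)
    return smallest, largest


for name, e, m in [("4 exp / 3 mantissa", 4, 3), ("5 exp / 2 mantissa", 5, 2)]:
    lo, hi = normal_range(e, m)
    print(f"{name}: smallest normal = {lo}, largest normal = {hi}")
```

With 4 exponent bits this gives 2^-6 up to 240 (just under 2^8), and with 5 exponent bits 2^-14 up to 57344 (on the order of 2^15), matching the orders of magnitude quoted above.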

You are right about what the transformer engine does; generally you need more dynamic range during training and more precision during inference. It's also rescaling the loss function so that the gradients fall within a range that can be represented by the precision. Nvidia's blog post for FP8 explains how calculating the maximum value for each tensor just in time for scaling would be slow, so they instead save the max value history and use that history for scaling.
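A rough way to picture the "save the max value history" idea is below. This is a toy sketch in plain Python, not Nvidia's Transformer Engine API: the class name `DelayedScaler`, the history length, and the use of 240 as the format's largest magnitude (the IEEE-style 4-bit-exponent case above) are all assumptions for illustration.

```python
from collections import deque


class DelayedScaler:
    """Toy scaler: picks the scale from past maxima, not the current tensor."""

    def __init__(self, fmt_max: float, history_len: int = 16) -> None:
        self.fmt_max = fmt_max                    # largest magnitude the format holds
        self.history = deque(maxlen=history_len)  # recent per-tensor absolute maxima

    def current_scale(self) -> float:
        amax = max(self.history, default=0.0)
        return self.fmt_max / amax if amax > 0 else 1.0

    def step(self, tensor: list[float]) -> list[float]:
        scale = self.current_scale()  # uses history only, so no extra pass up front
        scaled = [max(-self.fmt_max, min(self.fmt_max, x * scale)) for x in tensor]
        # Record this tensor's maximum for use on later steps.
        self.history.append(max((abs(x) for x in tensor), default=0.0))
        return scaled


scaler = DelayedScaler(fmt_max=240.0, history_len=4)
first = scaler.step([1.0, -2.0])   # no history yet, so scale is 1.0
second = scaler.step([0.5, 4.0])   # scale is 240 / 2 = 120; 4.0 * 120 saturates
print(first, second)
```

The second call shows the trade-off: because the scale comes from history, a value larger than anything seen before gets clamped until the history catches up.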

FP4: 4-bit floats

As for FP4, I am extremely skeptical about it. All the issues of FP8 are pushed even further. I don't know if the details for Blackwell are public, but allocating 1 bit for the exponent essentially gives you an unevenly distributed version of INT4, so I assume they are using 2 bits for the exponent and 1 bit for the mantissa. The list of numbers you can represent is small and finite (with positive and negative versions of each of these, and an exponent bias of 1):

- 11.1 [1 + 1 * 2^(-1)] * 2^(3-1) = 1.5 * 2^2 = 6 (may also be reserved for NaN or infinity)

- 11.0 [1 + 0 * 2^(-1)] * 2^(3-1) = 1.0 * 2^2 = 4 (largest normal number)

- 10.1 [1 + 1 * 2^(-1)] * 2^(2-1) = 1.5 * 2^1 = 3

- 10.0 [1 + 0 * 2^(-1)] * 2^(2-1) = 1.0 * 2^1 = 2

- 01.1 [1 + 1 * 2^(-1)] * 2^(1-1) = 1.5 * 2^0 = 1.5

- 01.0 [1 + 0 * 2^(-1)] * 2^(1-1) = 1.0 * 2^0 = 1 (smallest normal number)

- 00.1 [0 + 1 * 2^(-1)] * 2^(1-1) = 0.5 * 2^0 = 0.5 (subnormal: no implicit leading 1, same exponent as the smallest normal)

- 00.0 (reserved for 0)

This is no better than INT4 in terms of dynamic range, which is the main reason to even use floating points; its main merit is that the values are distributed differently, but I wouldn't say that it's much better than just using the integers from 0-8, like INT4 would give you. I honestly think that Nvidia is probably only implementing FP4 to pump up their TFLOPS number for marketing reasons, making it seem like floating point inference has doubled. Maybe I'm wrong though; perhaps they assume all the numbers are non-negative (not a great assumption for neural network weights) or do some other shenanigans to make it work. Consider me a cynic on this one.
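The 2-exponent-bit, 1-mantissa-bit value list can also be enumerated mechanically. The sketch below assumes IEEE-style decoding with a bias of 1 and, as a further assumption, treats the all-ones exponent as an ordinary value rather than NaN/infinity; `decode_minifloat` is a hypothetical helper, not any library's API. Under the IEEE convention a subnormal drops the implicit leading 1, so the `00.1` pattern decodes to 0.5.

```python
def decode_minifloat(bits: int, exp_bits: int, man_bits: int, bias: int) -> float:
    """Decode a tiny sign/exponent/mantissa float, IEEE-style, without inf/NaN."""
    sign = -1.0 if (bits >> (exp_bits + man_bits)) & 1 else 1.0
    exp_field = (bits >> man_bits) & ((1 << exp_bits) - 1)
    frac = (bits & ((1 << man_bits) - 1)) / (1 << man_bits)
    if exp_field == 0:
        # Subnormal: no implicit leading 1, exponent fixed at (1 - bias).
        return sign * frac * 2.0 ** (1 - bias)
    return sign * (1.0 + frac) * 2.0 ** (exp_field - bias)


# All eight non-negative 4-bit patterns (sign bit = 0).
fp4_values = sorted(decode_minifloat(b, exp_bits=2, man_bits=1, bias=1) for b in range(8))
print(fp4_values)
```

Eight magnitudes in total, topping out at 6, which is the "no better than INT4 in dynamic range" point in numbers.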

Exponent bias is 0, at least, the way exponent bias is typically defined.

• 1.11 [1 + 1 * 2^(-1) + 1 * 2^(-2)] * 2^(1) = 1.75 * 2 = 3.5 (may also be reserved for NaN or infinity)

• 1.10 [1 + 1 * 2^(-1) + 0 * 2^(-2)] * 2^(1) = 1.50 * 2 = 3

• 1.01 [1 + 0 * 2^(-1) + 1 * 2^(-2)] * 2^(1) = 1.25 * 2 = 2.5

• 1.00 [1 + 0 * 2^(-1) + 0 * 2^(-2)] * 2^(1) = 2 (smallest normal number)

• 0.11 [1 + 1 * 2^(-1) + 1 * 2^(-2)] * 2^(0) = 1.75 (subnormal)

• 0.10 [1 + 1 * 2^(-1) + 0 * 2^(-2)] * 2^(0) = 1.50 (subnormal)

• 0.01 [1 + 0 * 2^(-1) + 1 * 2^(-2)] * 2^(0) = 1.25 (subnormal)

• 0.00 (reserved for 0)

DLSS and lower precisions (FP8/FP4/INT8/INT4)

If DLSS were hypothetically implemented with FP4 in the most optimal case, it would run at 4 times the speed of FP16. However! As with FP8, this ideal case will not work if the dynamic range of the tensor you are trying to represent is outside the inherent range of the precision you are working in. With FP4, I bet this criterion would fail more often than not. There's a reason that INT8/INT4 quantization usually happens after training, or with "quantization aware" training in FP16; INT8/INT4 simply do not have the dynamic range to represent the gradients needed to train a typical network.

For many ML image applications, the final output doesn't have any dynamic range or precision. For example, in classification ("tell me if this image is a cat or a dog"), the final few layers aren't usually even convolutional. In segmentation ("generate two binary maps, one labeling all dog pixels and one labeling all cat pixels"), the situation is similar. In this situation, it's possible that FP4 might work, but I don't imagine the results would be much better than INT4. FP8 is a far superior option.

However, for reconstruction like DLSS, the final output is not a label or a binary map; it's an image, so human perception matters. You need to be able to propagate the dynamic range and precision of your input images to your output image. If any of your internal layers loses precision or dynamic range, then your output layers are hallucinating (making up from scratch) all of the fine detail, which isn't something that Nvidia wants. Ideally, the actual pixel samples would be represented in the final, reconstructed image. It's for this reason that DLSS runs at FP16 for HDR inputs and INT8 for tonemapped inputs; if you want an output that looks good, you need the precision and dynamic range that FP16 nets you.
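As a toy illustration of why range and precision matter for image-like data (this is not how DLSS works internally, just rounding numbers), the sketch below snaps a few made-up HDR-style intensities to FP16 and to an assumed FP4 grid of eight magnitudes (2 exponent bits, 1 mantissa bit), with a single per-tensor scale. The sample values, grid, and function names are all assumptions.

```python
import math
import struct

# Assumed FP4 magnitudes (2 exponent bits, 1 mantissa bit, bias 1).
FP4_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def round_fp16(x: float) -> float:
    """Round-trip through IEEE half precision using the struct 'e' format."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def round_fp4(x: float, scale: float) -> float:
    """Snap x / scale to the nearest FP4 grid magnitude, then rescale."""
    mag = min(abs(x) / scale, FP4_GRID[-1])  # saturate at the top of the grid
    nearest = min(FP4_GRID, key=lambda g: abs(g - mag))
    return math.copysign(nearest * scale, x)


samples = [0.01, 0.02, 0.6, 12.0]       # made-up shadow and highlight intensities
scale = max(samples) / FP4_GRID[-1]     # map the brightest sample to the grid top
fp4 = [round_fp4(s, scale) for s in samples]
fp16 = [round_fp16(s) for s in samples]
print("FP4 :", fp4)
print("FP16:", fp16)
```

With one scale chosen to fit the highlight, both shadow samples collapse to zero in FP4, while FP16 keeps all four values to within a fraction of a percent. Any shadow detail in the output would then have to be invented by later layers.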

tl;dr

I don't think FP4 will ever be feasible for DLSS. Honestly, I doubt it has any real utility other than pumping up the FLOPS numbers in Nvidia's marketing. There is a chance that FP8 could be workable for DLSS with careful rescaling at each layer, and I'm curious to see if Nvidia tries it. But dynamic range and precision are both important for reconstruction, so you ultimately need to choose a floating point precision that captures both.

Hollow Knight looks like a flash game

> Hell, Hollow Knight is only a mere Indie title made by a team of less than a half dozen people, and yet looks, and plays better than 80% of the shit that’s come out since.

I do find it funny how most of the big publishers and developers left the AA industry behind for the big budget titles.

> Hell, Hollow Knight is only a mere Indie title made by a team of less than a half dozen people, and yet looks, and plays better than 80% of the shit that’s come out since.

I would say that's not true, but also, is it a bad thing?

> Hollow Knight looks like a flash game

It was Japanese games that were pushed out of the mainstream in the 2000s by the massively industrialized production model of big games in North America, but in retrospect one can see that this process quickly morphed into throwing good money after bad. With Nintendo's return to full force in the 8th generation, the general mediocrity of big games, and the slowing down of the development of visual effects, the mainstream market began to place a renewed emphasis on the strengths of Japanese games, namely gameplay, while North America also entered an era of highly segmented markets along with the general rise of indie games (mostly thanks to Steam).

> I do find it funny how most of the big publishers and developers left the AA industry behind for the big budget titles.
>
> Which left lots of room for indie developers to step right up and make their games fit into this space.
>
> During the 360/PS3/Wii era indie games were relegated to very small gimmick-like cell phone games, and now we have things like Hades or Hollow Knight, which can stand up there with the best of them...

This is fantastic, thank you! Nvidia seems to be pushing the FP4 support specifically for generative LLMs, so perhaps there is some super narrow use case there? Regardless, they're only talking about it in relation to Blackwell, and right now all their public Blackwell info is about the datacenter chips.

> tl;dr
>
> I don't think FP4 will ever be feasible for DLSS. Honestly, I doubt it has any real utility other than pumping up the FLOPS numbers in Nvidia's marketing. There is a chance that FP8 could be workable for DLSS with careful rescaling at each layer, and I'm curious to see if Nvidia tries it. But dynamic range and precision are both important for reconstruction, so you ultimately need to choose a floating point precision that captures both.

I wonder if anime helped out. I mean, we had a couple of big booms in anime. The overall trend in Japanese culture, I think, rose.

> Nintendo's return to full force in the 8th generation and the general mediocrity of big games and the slowing down of the development of visual effects, the mainstream market began to place a renewed emphasis on the strengths of Japanese games, namely gameplay, while North America also entered an era of highly segmented markets along with the general rise of indie games (mostly thanks to Steam).

But I need to see their pores, especially in Madden. Where I pay for the same crap every year? Who will take my money for pores?!

> Realism isn't everything.
>
> Games like Okami still look prettier than many others that strive to detail human pores.

The last data I saw was that Japanese anime in the streaming era is still mainly for the local market, with the US as the second largest market. But from my perspective, Japanese anime is not enough to explain the standing of Japanese games. Japan is the country that founded the rules of the modern gaming industry, while Japanese anime is very local in nature, with a fair amount of anime that is only popular in East Asia (China, Japan, South Korea), such as robot anime and slice-of-life anime, which get little attention in North America. Japanese games, even in their most downtrodden time, were still the world's second.

> I wonder if anime helped out. I mean, we had a couple of big booms in anime. The overall trend in Japanese culture, I think, rose.

The main application that FP4 is used for is in training super sparse data sets. No need for those extra bits when most of your data is all zeroes.

> This is fantastic, thank you! Nvidia seems to be pushing the FP4 support specifically for generative LLMs, so perhaps there is some super narrow use case there? Regardless, they're only talking about it in relation to Blackwell, and right now all their public Blackwell info is about the datacenter chips.

It'll be interesting to see if Nvidia continues to segment out some of these features for enterprise customers, where the margins are still high, or let them flow down into consumer products.

I see what you mean. What I mean is that if you only look at traditional buyout games, Japan's return to the mainstream after 2015 is an easily observed fact, and 2015's MGSV, Splatoon, and Bloodborne basically kicked off the full return of Japanese gaming. But as you said, on the PC and console side of the service-based gaming segment Japan is still lackluster, as Japanese online gaming was already beaten by South Korea and China in the 2000s lol, and Japan's service-based gaming revenue relies heavily on the many anime games on smartphones, which I'm afraid I've never even heard of in North America, but which have quite a bit of notoriety in China.

> Is any of this true, lol.
>
> Like, gaming is completely dominated by Fortnite and a bunch of really shitty titles (Roblox, EA FC, Madden, 2K, Call of Duty, mobile shovelware, porn garbage like Nikke, Genshin, etc).
>
> None of the dominating games that control most of the revenue of the game industry are from Japan, idk.

I have a classic case of a North American game producer discriminating against Japanese games: it was probably the producer of Fez (sorry, forgot his name) in 2011 who mocked Japanese games as "it's suck", and Jonathan Blow expressed a similar viewpoint euphemistically, and I think the target of his attack included Nintendo rather than just extremely backward makers like Falcom. This whole thing speaks volumes about how unpopular Japanese games had become with the mainstream market during the PS3/X360 era.

> Looking at North American charts, there's not much change here. The typical western fare still does exceptionally well, with speckles of Nintendo and more mainstream Asian games. Realism as an art style hasn't gone out of vogue.

I do kinda get it. Flash is vector art, hand keyed animation, and often flat colors because that's the core toolset and because it compressed well.

> Right? Even if it was one, it'd still be not only the best looking, but also the best flash game ever made. And at the same time, deserving of a full-fledged release on a console.
>
> Truth be told, I got out of flash games in the early 2000s, back when Newgrounds and ebaumsworld were still the shit. So I pay zero attention to the current state of flash games.

LPDDR6 and Blackwell's release schedules aren't out either, but that didn't stop you there

> But we do know that the 2025 ARM processor won't have 32-bit support. So if Nintendo waited for that, they'd have to give up on backwards compatibility with Switch 1 software.

Either they could make a custom chip with 32-bit support, build in a 32-bit-capable chip solely for BC, or create a translation layer.

> Does this mean that Switch 1 BC for Switch 3 is out of the question? Or would they be able to get around this issue somehow?
