Real Short Answer

DLSS BABY

Long, but hopefully ELI5, answer

There are actually dozens of feature differences between the PS4 Pro's 2013-era technology and Drake's 2022-era technology that allow games to produce results better than the raw numbers might suggest. But DLSS is the biggest, and the easiest to understand.

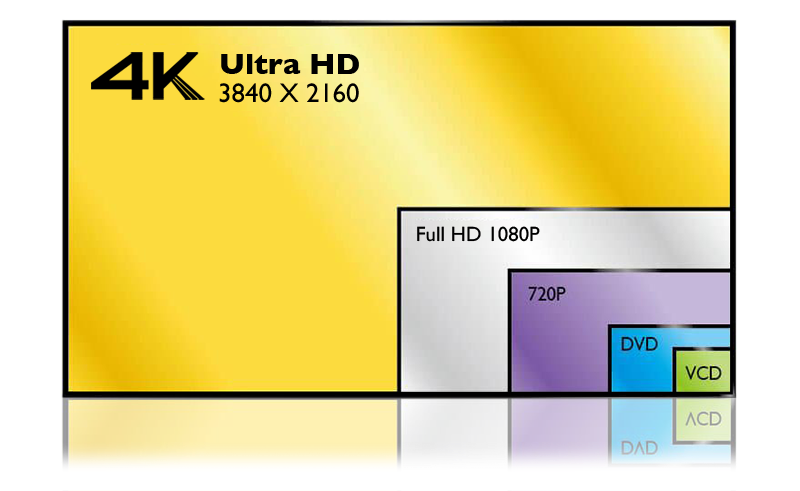

Let's talk about pixel counts for a second. A 1080p image is made up of about 2 million pixels. A 4K image is about 8 million pixels, 4 times as big.
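If you want to check the arithmetic yourself, here's a tiny sketch. It assumes "1080p" means 1920x1080 and "4K" means the 3840x2160 UHD resolution that consoles target:

```python
# Pixel counts for the two 16:9 resolutions discussed above.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),  # 4K UHD, the "4K" that consoles and TVs use
}

def pixel_count(width: int, height: int) -> int:
    """Number of pixels in a width x height image."""
    return width * height

p1080 = pixel_count(*RESOLUTIONS["1080p"])
p4k = pixel_count(*RESOLUTIONS["4K"])

print(f"1080p: {p1080:,} pixels")    # 1080p: 2,073,600 pixels
print(f"4K:    {p4k:,} pixels")      # 4K:    8,294,400 pixels
print(f"ratio: {p4k / p1080:.0f}x")  # ratio: 4x
```

Doubling both width and height is what makes it exactly 4x, not 2x.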

All else being equal, if you want to natively draw 4 times as many pixels, you need 4 times as much horsepower. It's pretty straightforward. One measure of GPU horsepower is FLOPS: floating-point operations per second. The PS4 runs at 1.8 TFLOPS (a teraflop is 1,000,000,000,000 FLOPS, a trillion).

But that leads to a curious question - how does the PS4 Pro work? The PS4 Pro runs at only 4.2 TFLOPS. How does the PS4 Pro make images that are 4x as big as the PS4's, with only about 2x as much power?
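To put numbers on that gap, here's a back-of-the-envelope sketch. It uses the commonly quoted 1.84 TFLOPS (PS4) and 4.2 TFLOPS (PS4 Pro) figures and assumes, as a simplification, that rendering cost scales linearly with pixel count and that TFLOPS alone sets the budget:

```python
# Rough comparison of GPU power vs. pixel count, PS4 -> PS4 Pro.
PS4_TFLOPS = 1.84      # commonly quoted figure (rounded to 1.8 in the text)
PS4_PRO_TFLOPS = 4.2   # commonly quoted figure
PIXEL_RATIO = 4.0      # a 4K frame has 4x the pixels of a 1080p frame

power_ratio = PS4_PRO_TFLOPS / PS4_TFLOPS

# If each pixel costs the same to draw as on the PS4, this is the share of
# a 4K frame the Pro could afford to render natively, every frame.
affordable_share = power_ratio / PIXEL_RATIO

print(f"power ratio: {power_ratio:.2f}x")                 # power ratio: 2.28x
print(f"affordable share of 4K: {affordable_share:.0%}")  # affordable share of 4K: 57%
```

So under those assumptions the Pro can only draw a bit over half of a native 4K frame.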

The answer is checkerboarding. Imagine a giant checkerboard with millions of squares - one square for every pixel in a 4K image. Instead of rendering every pixel every frame, the PS4 Pro only renders half of them, alternating between the "black" squares and the "red" squares from one frame to the next.

It doesn't blindly merge these pixels either - it uses a clever algorithm to combine the last frame's pixels with the new pixels, trying to preserve the detail of both frames without making a blurry mess. This class of technique is called temporal reconstruction.

Temporal because it uses data over time (i.e., previous frames), and reconstruction because it's not just upscaling the current frame but trying to reconstruct the high-res image underneath, like a paleontologist reconstructing a whole skeleton from a few bones.
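Here's a toy sketch of the checkerboard idea on a 4x4 "image". The function names are made up for illustration, and the "resolve" step is deliberately naive: it just reuses last frame's pixel wherever this frame skipped one, which only works if nothing on screen moves. The PS4 Pro's real resolver (the "clever algorithm") is much smarter than this.

```python
# Toy checkerboard rendering + naive temporal reconstruction.
# Assumes a static scene, so last frame's pixels are still correct.

def is_rendered(x: int, y: int, frame: int) -> bool:
    """True if pixel (x, y) is drawn this frame.
    Even frames draw the "black" squares, odd frames the "red" ones."""
    return (x + y + frame) % 2 == 0

def render_half(scene, frame):
    """Render only this frame's half of the checkerboard; None = skipped."""
    return [[scene[y][x] if is_rendered(x, y, frame) else None
             for x in range(len(scene[0]))]
            for y in range(len(scene))]

def resolve(current, previous):
    """Fill each skipped pixel with the value kept from the previous frame."""
    return [[c if c is not None else p for c, p in zip(crow, prow)]
            for crow, prow in zip(current, previous)]

# A tiny 4x4 "scene" where every pixel has a distinct value.
scene = [[10 * y + x for x in range(4)] for y in range(4)]

frame0 = render_half(scene, 0)   # draws 8 of the 16 pixels
frame1 = render_half(scene, 1)   # draws the other 8
full = resolve(frame1, frame0)   # frame 1 combined with frame 0's pixels

print(full == scene)  # True
```

Each frame only pays for half the pixels, but two consecutive frames together cover all of them. Once things move, the old pixels are in the wrong place, which is why real resolvers need extra information about motion.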

This is how the PS4 Pro was able to make a high-quality image with 4x the PS4's pixels, using only about 2x the power. And the basic concept is, I think, pretty easy to understand. But what if we had an even cleverer way of combining the images - could we get higher quality results? Or perhaps, could we use more frames to generate those results? And maybe, instead of half resolution, could we go as far as quarter resolution, or even 1/8th resolution, and still get 4K?

That's exactly what DLSS 2.0 does. It replaces the clever algorithm with an AI. That AI doesn't just look at 2 frames: it works from a running history built up over many previous frames, and combines that with extra information from the game engine - information like which way each pixel is moving (motion vectors) and how far away it is (depth) - to determine the final image.
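To make "history plus motion information" concrete, here's a hand-written toy version of temporal accumulation on a single row of pixels. This is NOT DLSS: DLSS's network is proprietary, and this fixed blending rule is the kind of hand-tuned logic it replaces. `ALPHA`, `reproject`, and `accumulate` are hypothetical names and values chosen for illustration.

```python
# Toy 1-D temporal accumulation with motion vectors (hand-written, not DLSS).
# Each frame: use motion vectors to find where each pixel's content was last
# frame, then blend the new, noisy sample into that history.

ALPHA = 0.1  # weight given to the newest sample (hypothetical tuning value)

def reproject(history, motion):
    """Fetch last frame's value for each pixel.
    motion[x] = how far the content now at x moved since last frame, so it
    used to be at x - motion[x]. None = no history available (e.g. it just
    came on screen)."""
    out = []
    for x in range(len(history)):
        prev_x = x - motion[x]
        out.append(history[prev_x] if 0 <= prev_x < len(history) else None)
    return out

def accumulate(history, sample, motion):
    """Blend the new sample into the reprojected history (exponential average)."""
    moved = reproject(history, motion)
    return [s if h is None else (1 - ALPHA) * h + ALPHA * s
            for h, s in zip(moved, sample)]

# Usage: a static row of pixels (all motion vectors zero) where each frame's
# sample is off by +/-1, standing in for the error of a single low-res frame.
truth = [float(x) for x in range(8)]
still = [0] * len(truth)
history = [t - 1 for t in truth]  # first frame's sample
for frame in range(1, 60):
    noise = 1 if frame % 2 else -1
    sample = [t + noise for t in truth]
    history = accumulate(history, sample, still)

error = max(abs(h - t) for h, t in zip(history, truth))
print(error)  # roughly 0.05, vs. 1.0 for any single frame
print(reproject([1, 2, 3, 4], [1, 1, 1, 1]))  # [None, 1, 2, 3]
```

Many noisy frames average out to something much closer to the truth than any one frame, and the motion vectors are what keep that history attached to the right objects when things move.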

DLSS 2.0 can make images that look as good as or better than checkerboarding, but from half as many natively rendered pixels. Half the pixels means roughly half the horsepower. However, it does need special hardware to work - it needs tensor cores, a kind of AI accelerator designed by Nvidia and included in its newer GPUs.
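What do "half", "quarter", and "1/8th" resolution actually look like for a 4K output? Here's a quick sketch, assuming the aspect ratio stays 16:9 (so each axis shrinks by the square root of the pixel fraction). The helper name is made up for illustration:

```python
import math

OUTPUT = (3840, 2160)  # 4K output resolution

def internal_resolution(output, pixel_fraction):
    """Resolution to render at so the frame has `pixel_fraction` of the
    output's pixels, keeping the aspect ratio (each axis scales by sqrt)."""
    scale = math.sqrt(pixel_fraction)
    w, h = output
    return round(w * scale), round(h * scale)

for name, frac in [("half", 1 / 2), ("quarter", 1 / 4), ("eighth", 1 / 8)]:
    w, h = internal_resolution(OUTPUT, frac)
    print(f"{name:>7}: {w}x{h} = {w * h:,} pixels")
```

The "quarter" case comes out to exactly 1920x1080, so "half of checkerboarding" means building a 4K image from roughly a 1080p frame's worth of rendered pixels. For reference, that's also what DLSS 2's Performance mode does (50% resolution on each axis). Checkerboarding itself doesn't lower the resolution; it skips every other pixel, but the pixel count works out the same as the "half" row.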

Which brings us to Drake. Drake's raw horsepower might be lower than the PS4 Pro's - I suspect by a significant amount - but because it includes these tensor cores, it can replace the older checkerboarding technique with DLSS 2.0. This is why I said low-effort ports might not look as good: DLSS 2.0 is pretty simple to use, but it does require some custom engine work.
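One example of that engine work, sketched under some assumptions: temporal upscalers, DLSS included, generally need the engine to nudge the camera by a different sub-pixel amount every frame ("jitter"), so that successive frames sample different spots inside each pixel, and to hand over those offsets along with motion vectors and depth. A Halton(2, 3) sequence is a common choice for the jitter pattern. The functions below are hypothetical helpers, not part of any Nvidia SDK:

```python
def halton(index: int, base: int) -> float:
    """index-th element (1-based) of the Halton low-discrepancy sequence, in [0, 1)."""
    result, f = 0.0, 1.0
    while index > 0:
        f /= base
        result += f * (index % base)
        index //= base
    return result

def jitter_offsets(count: int):
    """Per-frame sub-pixel camera offsets in [-0.5, 0.5), from Halton(2, 3)."""
    return [(halton(i, 2) - 0.5, halton(i, 3) - 0.5)
            for i in range(1, count + 1)]

for dx, dy in jitter_offsets(4):
    print(f"{dx:+.3f}, {dy:+.3f}")
```

Halton points spread out evenly instead of clumping, so after a handful of frames the samples cover each pixel fairly uniformly. Wiring this, plus motion vectors, into an existing renderer is the part a quick port might skip.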

Hope that answers your question!

But what about Zelda?

It's kinda hard to imagine what a Zelda game would look like with this tech, especially since Nintendo reboots Zelda's look so often. But Windbound is a cel-shaded game heavily inspired by Wind Waker, and it has both a Switch and a PS4 Pro version.

Here is a section of the opening cutscene on Switch. Watch for about 10 seconds.

Here is the same scene on PS4 Pro. The differences are night and day.

It's not just that the Pro is running at 4K60, while the Switch runs at 1080p30. This isn't a great example, because Zelda was designed to look good on Switch, and this clearly wasn't. Windbound uses multiple dynamic lights in each shot, and the Switch version either removes things that cast shadows (like the ocean in that first shot), making the scene look too bright and flat, or removes lights entirely (like the lamp in the next shot), making things look too dark.
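For a sense of scale on the 4K60 vs. 1080p30 point, here's the output pixel rate for each. This counts output pixels only; it doesn't claim how many pixels either version actually renders natively (the Pro version could be using checkerboarding or dynamic resolution, which I haven't checked):

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Output pixels delivered per second at a given resolution and frame rate."""
    return width * height * fps

switch = pixels_per_second(1920, 1080, 30)  # 1080p30
pro = pixels_per_second(3840, 2160, 60)     # 4K60

print(f"{switch:,} vs {pro:,} pixels/s")  # 62,208,000 vs 497,664,000 pixels/s
print(f"{pro / switch:.0f}x")             # 8x
```

4x the pixels per frame times 2x the frames per second makes 8x the pixels per second.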
