> Heard something sexy about the new switch. Sub to my OF and I will reveal more.

link?


StarTopic Future Nintendo Hardware & Technology Speculation & Discussion |ST| (New Staff Post, Please read)

- Thread starter Dakhil

necrolipe

Universo Nintendo Owner

- Pronouns

- he/him

> It's not the performance specs of S that are an issue. It's the RAM difference between X and S that has led to some challenges.
>
> Who is comparing Switch Successor to a Series S?

Just some estimates based on clock possibilities and whatnot

Series S isn't an impossible scenario if the RAM reaches 12GB + DLSS 3.5 + right CPU clocks, actually... it can leave the cheaper Xbox behind imo

GPKs3

Paratroopa

> Heard something sexy about the new switch. Sub to my OF and I will reveal more.

Pleasure-Cons?

- Pronouns

- he/him

> will it have cute feet?

how do I avatar quote someone with no avatar

Concernt

Optimism is non-negotiable

- Pronouns

- She/Her

> Who is comparing Switch Successor to a Series S?

Pretty much everyone, and with good reason. It's the lowest common denominator of next gen.

Concernt

Optimism is non-negotiable

- Pronouns

- She/Her

> Usually the opposite of what you say is what happens so thanks for the confirmation

Oh that's polite.

Sagitario

Like Like

NateDrake

Chain Chomp

> Just some estimates based on clock possibilities and wat not
>
> Series S isn't a impossible scenario if the RAM reaches 12GB + DLSS 3.5 + right CPU clocks, actually... it can leave the cheaper Xbox behind imo

That's a bit too much of a "best case" scenario.

I like Oldpuck's post of PS4+ more, which would make it inferior in raw numbers but modern technology will make it more than suitable to receive ports of Series S builds.


Onizuka

Great Player Onizuka

> That's a bit too much of a "best case" scenario.

And the best case, is it a real case?

Instro

Like Like

> If this doesn't reach better than PS4 Pro specs, going by the Series S cry from developers, should be interesting to see.

Tbf the particular issue with the Series S is that Microsoft has requirements on developers as far as matching features with the Series X, which in conjunction with the power and RAM difference, is causing headaches. Especially when they obviously know that a Series S version is probably going to be the worst selling version of their game anyway.

Nintendo won't really care, and will take whatever ports they can get.

> Be wary of posts proclaiming ‘better than Series S’. Just expect PS4 with some resolution boosts since anything else is based on extreme best case scenarios which are unlikely to come to fruition.

Personally, I agree. I think Nintendo's new hardware being comparable to the Xbox Series S, or the Xbox One X, GPU wise, after enabling DLSS, is the absolute best case scenario.

> I work in developing software but my hardware knowledge is terrible (I know this doesn't make sense lol), but from my understanding, the Switch 2 is very likely going to be less capable than the Ally but far more efficient, correct?

Keep in mind there's a big caveat to the performance claims for the AMD Z1 Extreme.

will it have cute feet?

That's a bit too much of a "best case" scenario.

VanVulpes

Rattata

> Heard something sexy about the new switch. Sub to my OF and I will reveal more.

Switch 2 will be waterproof and Nintendo will advertise it by selling Switch bath water!!

TomNookYankees

Koopa

- Pronouns

- He/Him

> It's not the performance specs of S that are an issue. It's the RAM difference between X and S that has led to some challenges.
>
> Who is comparing Switch Successor to a Series S?

I guess it wasn't quite a direct comparison, but back in the January episode of your podcast you brought up how "It's almost as though Microsoft did Nintendo a favor in this case with the Series S" when it came to Nintendo getting downports of current gen (X|S and PS5) games onto the Switch Successor.

Dude_lookinatleak

Rattata

- Pronouns

- He/Him

> Personally, I agree. I think Nintendo's new hardware being comparable to the Xbox Series S, or the Xbox One X, GPU wise, after enabling DLSS, is the absolute best case scenario.
>
> Keep in mind there's a big caveat to the performance claims for the AMD Z1 Extreme.

NVIDIA DLSS 2.5.1 Review - Significant Image Quality Improvements

NVIDIA has released an update for their Super Resolution Technology (DLSS 2.5) and it contains improved fine detail stability, reduced ghosting, improved edge smoothness in high contrast scenarios and overall image quality improvements. In this mini-review we take a look, comparing the image...

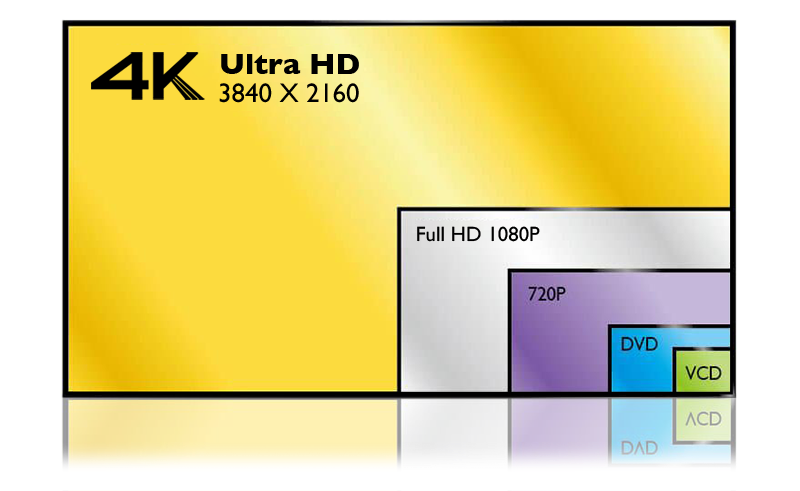

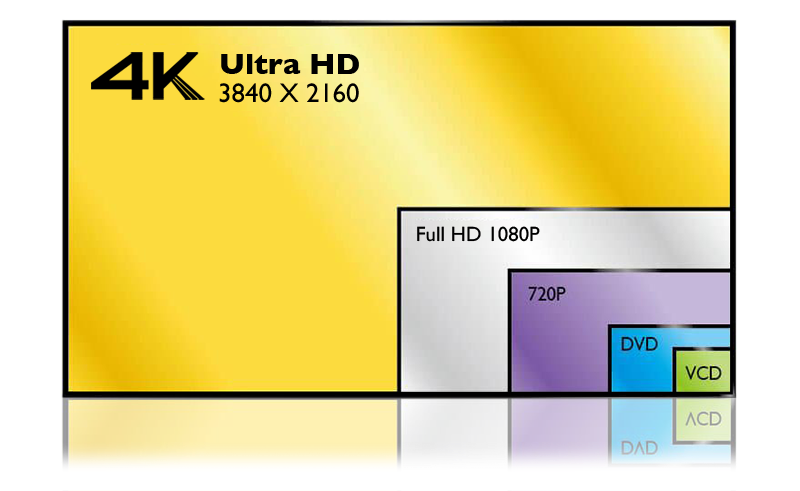

Being fair, DLSS Ultra Performance mode for both 1080p and 4K is a lot better than it was a few years ago. An upscale from 360p (Ultra Performance mode) to 1080p in handheld mode would do it a lot of justice.

Also let's say the device is about 3.5TF docked. It can do a better upscale that is less aliased and more detailed from 720p to 4K than the Series X/S can from 1080p to 4K (with FSR 2.X). There is also less of a performance cost from DLSS than there is FSR, which is crucial.

Then you stack DLSS 3.5 Ray Reconstruction on top for RT 30fps modes and it will definitely beat the Series S due to it not having very good RT performance.

And that's not including if Nintendo and NVIDIA decide to include a compatible OFA for DLSS 3 which could mean developers make a "60fps" mode players could choose which interpolates >=30fps to around 60fps in both docked and portable. It might give you only about 4 hours of battery life for the DLSS 3 mode, but it would be so worth it

I don't like to use DLSS to say it'll compare with any other system. if a Drake game uses DLSS Performance Mode to hit 1440p, so could Series S. since we see a lot of Series S games hit 1080p, I think that should be the expected output for Drake, just with more cuts to the settings to fit

TheRealWario

Piranha Plant

- Pronouns

- He/him

> Will the Switch 2 have better RT performance than a PS5 or Series X with DLSS 3.5?
>
> AMD is like 10 years behind at this point.

Cautiously optimistic for FSR 3. Might make all the difference for AMD devices like the Steam Deck.

NateDrake

Chain Chomp

> I guess it wasn't quite a direct comparison, but back in the January episode of your podcast you brought up how "It's almost as though Microsoft did Nintendo a favor in this case with the Series S" when it came to Nintendo getting downports of current gen (X|S and PS5) games onto the Switch Successor.

Having a Series S version to down port from is a favor for Nintendo, as it means there will be a variety of titles from third-party partners with potential of release -- depending what the quality of the Series S release was.

It doesn't mean the two are directly comparable in terms of raw power, however.

> And that's not including if Nintendo and NVIDIA decide to include a compatible OFA for DLSS 3 which could mean developers make a "60fps" mode players could choose which interpolates >=30fps to around 60fps in both docked and portable. It might give you only about 4 hours of battery life for the DLSS 3 mode, but it would be so worth it

Just to be clear, nobody knows anything about the Optical Flow Accelerator (OFA) on Drake's GPU outside the fact that Drake's GPU does inherit the OFA from Orin's GPU. (And although the OFA on Orin's GPU being faster than the OFA on consumer Ampere GPUs is a reasonable assumption to make since automotive vehicles do benefit greatly from faster optical flow, not only is that not 100% confirmed, nobody knows how the performance of the OFA on Orin's GPU compares to the OFA on Ada Lovelace GPUs.)

Anyway, Alex Battaglia from Digital Foundry mentioned that DLSS 3 only starts to become beneficial when the game runs at ~80 fps, which I assume means the game has to run at ~40 fps before enabling DLSS 3. And that's a very hard requirement to achieve, especially if the game's taxing in a multitude of ways (e.g. polygon count, draw distance, number of NPCs, etc.).
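The ~40 fps to ~80 fps relationship mentioned above follows if frame generation adds one generated frame for every rendered frame. Here is a minimal Python sketch of that arithmetic under that assumption; the function names are made up for illustration and say nothing about how DLSS 3 is implemented.

```python
def frame_generation_fps(rendered_fps: float, generated_per_rendered: int = 1) -> float:
    """Presented frame rate when each rendered frame is followed by generated frames.

    Assumption: one generated frame per rendered frame by default.
    """
    return rendered_fps * (1 + generated_per_rendered)


def frame_time_ms(fps: float) -> float:
    """Time budget per frame in milliseconds."""
    return 1000.0 / fps


for base in (30, 40):
    out = frame_generation_fps(base)
    print(f"{base} fps rendered -> {out:.0f} fps presented "
          f"({frame_time_ms(base):.1f} ms of render time per real frame)")
```

The render budget per real frame stays the same (25 ms at 40 fps), which is why the base frame rate still has to be reached before frame generation helps.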

> Let's just look at the PS5 and Switch 2 from the specs we have, it's simple to figure out if this is a next gen product or a "pro" model.
>
> PS4 uses GCN from 2011 as its GPU architecture, it has 1.84TFLOPs available to it. (We will use GCN as a base) The performance of GCN is a factor of 1
>
> Switch uses Maxwell v3 from 2015, it has 0.393TFLOPs available to it. The performance of Maxwell V3 is a factor of 1.4 + mixed precision for games that use this... This means when docked Switch is capable of 550GFLOPs to 825GFLOPs GCN, still a little less than half the GPU performance of PS4, this doesn't factor in far lower bandwidth, RAM amount or CPU performance, all of which sit around 30-33% of the PS4, with the GPU somewhere around 45% when completely optimized.
>
> PS5 uses RDNA1.X, customized in part by Sony, introduced with the PS5 in 2020, it has up to 10.2TFLOPs available to it. The performance of RDNA 1.X is a factor of 1.2 + mixed precision (though this is limited to AI in use cases, developers just don't use mixed precision atm for console or PC gaming, it's used heavily in mobile and in Switch development though). This means ultimately that the PS5's GPU is about 6.64 times as powerful as the PS4, and around 3 times the PS4 Pro.
>
> Switch 2 uses Ampere, specifically GA10F, which is a custom GPU architecture that will be introduced with the Switch 2 in 2024 (hopefully), it has 3.456TFLOPs available to it. The performance of Ampere is a factor of 1.2 + mixed precision* (this uses the tensor cores, and is independent of the shader cores). Mixed precision offers 5.2TFLOPs to 6TFLOPs. It also reserves 1/4th of the tensor cores for DLSS according to our estimates, much like the PS5 using FSR2, this allows the device to render the scene at 1/4th the resolution of the output with minimal loss to image quality, greatly boosting available GPU performance, and allowing the device to force a 4K image.
>
> When comparing these numbers to PS4 GCN, Switch 2 has 4.14TFLOPs to 7.2TFLOPs, and PS5 12.24TFLOPs GCN equivalent, meaning that Switch 2 will do somewhere between 34% to 40% of PS5. It should also manage RT performance, and while PS5 will use some of that 10.2TFLOPs to do FSR2, Switch 2 can freely use the remaining 1/4th of its tensor cores to manage DLSS. Ultimately there are other bottlenecks, the CPU is only going to be about 2/3rd as fast as the PS5's, and bandwidth with respect to their architectures, will only be about half as much, though it could offer 10GB+ for games, which is pretty standard atm for current gen games.
>
> Switch 2 is going to manage current gen much better than Switch did with last gen games. The jump is bigger, the technology is a lot newer, and the addition of DLSS has leveled the playing field a lot, not to mention Nvidia's edge in RT playing a factor. I'd suggest that Switch 2 when docked, if using mixed precision will be noticeably better than the Series S, but noticeably behind current gen consoles.

You make me thirsty with these kinds of posts mister.
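For anyone who wants to follow the "GCN equivalent" arithmetic in the post above, here is a small Python sketch. The TFLOPs figures and per-architecture factors are the post's own estimates, not measured or confirmed specs, and the names `ARCH_FACTOR` and `gcn_equivalent` are just made up for this illustration. It only covers the shader (non-mixed-precision) numbers.

```python
# Per-architecture multipliers as estimated in the post (GCN is the baseline).
ARCH_FACTOR = {
    "GCN": 1.0,
    "Maxwell v3": 1.4,
    "RDNA 1.X": 1.2,
    "Ampere": 1.2,
}

# (raw TFLOPs, architecture) as quoted in the post; speculative for Switch 2.
SYSTEMS = {
    "PS4": (1.84, "GCN"),
    "Switch (docked)": (0.393, "Maxwell v3"),
    "PS5": (10.2, "RDNA 1.X"),
    "Switch 2 (docked, shader only)": (3.456, "Ampere"),
}


def gcn_equivalent(tflops: float, arch: str) -> float:
    """Scale raw TFLOPs by the post's architecture factor."""
    return tflops * ARCH_FACTOR[arch]


ps5 = gcn_equivalent(*SYSTEMS["PS5"])
for name, (tflops, arch) in SYSTEMS.items():
    eq = gcn_equivalent(tflops, arch)
    print(f"{name}: {eq:.2f} TFLOPs GCN-equivalent ({eq / ps5:.0%} of PS5)")
```

Running it gives the same ballpark as the post for the shader-only case (Switch 2 at roughly a third of PS5); the mixed-precision range would need its own inputs.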

> DLSS stands for Drake Leaks Start Soon

Sounds like a Leisure Suit Larry game.

> There is a downside - DLSS 3.5 isn't a "free" upgrade from DLSS 2. It requires the developer to remove their existing denoisers from the rendering path, and provide new inputs to the DLSS model. In the case of NVN2, this would require an API update, not just a library version update. I haven't seen an integration guide for 3.5 yet, so I have no idea how extensive the new API is, but there will be some delay before this is in the hand of Nintendo devs.

With the relationship that Nvidia and Nintendo have, Nintendo probably gets Nvidia software on a different schedule than most devs.

Dude_lookinatleak

Rattata

- Pronouns

- He/Him

> Just to be clear, nobody knows anything about the Optical Flow Accelerator (OFA) on Drake's GPU outside the fact that Drake's GPU does inherit the OFA from Orin's GPU. (And although the OFA on Orin's GPU being faster than the OFA on consumer Ampere GPUs is a reasonable assumption to make since automotive vehicles do benefit greatly from faster optical flow, not only is that not 100% confirmed, nobody knows how the performance of the OFA on Orin's GPU compares to the OFA on Ada Lovelace GPUs.)
>
> Anyway, Alex Battaglia from Digital Foundry mentioned that DLSS 3 only starts to become beneficial when the game runs at ~80 fps, which I assume means the game has to run at ~40 fps before enabling DLSS 3. And that's a very hard requirement to achieve, especially if the game's taxing in a multitude of ways (e.g. polygon count, draw distance, number of NPCs, etc.).

Didn't say it had to have DLSS 3. But the tech is getting better and like Ultra Performance mode, it will get better with time.

But even without DLSS Frame Generation, it will still outperform and possibly look better than the Series S in scenarios where Ray Tracing is required/RT 30fps modes

- Pronouns

- he / him

> I don't like to use DLSS to say it'll compare with any other system. if a Drake game uses DLSS Performance Mode to hit 1440p, so could Series S.

It literally couldn't use DLSS, though it could use an alternative super resolution method, like Starfield using FSR2. But of the current options DLSS is the one that seems to provide the best results starting from sub-1080p render resolutions*, so to get equivalent 1440p output quality a Series S version couldn't get away with stretching as far as a Drake version.

*In this comparison across many games from earlier this year, in 4K Quality mode (1440p->4K), DLSS only has a slight advantage over FSR2, but as we move down the line (1080p->4K, then 960p->1440p, then 720p->1440p) its advantage grows. So maybe DLSS doing 720p->1440p would require something more like 960p->1440p from FSR2 to match? I don't know if anyone has done a comparison from that angle.
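To put the render-to-output pairs mentioned in this discussion side by side, here is a rough Python sketch that computes the per-axis upscale factor and the share of output pixels that actually get rendered. It assumes the render and output images share the same aspect ratio, so heights alone are enough; the list and function names are hypothetical.

```python
# (label, render height, output height) pairs taken from the posts in this thread.
PAIRS = [
    ("1440p -> 4K (Quality)", 1440, 2160),
    ("1080p -> 4K", 1080, 2160),
    ("960p -> 1440p", 960, 1440),
    ("720p -> 1440p", 720, 1440),
    ("720p -> 4K", 720, 2160),
    ("360p -> 1080p (Ultra Performance)", 360, 1080),
]


def axis_scale(render_h: int, output_h: int) -> float:
    """How much each axis is stretched by the upscaler."""
    return output_h / render_h


def pixel_fraction(render_h: int, output_h: int) -> float:
    """Fraction of the output pixel count that is natively rendered."""
    return (render_h / output_h) ** 2


for label, render_h, output_h in PAIRS:
    print(f"{label}: {axis_scale(render_h, output_h):.2f}x per axis, "
          f"{pixel_fraction(render_h, output_h):.1%} of output pixels rendered")
```

The gap the footnote speculates about (720p vs 960p input for the same 1440p output) is the difference between rendering 25% and about 44% of the output pixels.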

Paul_Subsonic

Être majestuatisant et subversif

- Pronouns

- He/Him

> Re: DLSS 3.5. Fucking Finally. To be a bit of a diva and quote myself from earlier this year (emphasis added)
>
> Here is a simplified way of looking at the problem and what DLSS 3.5 does.
>
> Imagine you're in an empty room with a single light source - a 40W light bulb. That light bulb shoots out rays of light (you know, photons), and those bounce against you and the walls over and over again, changing frequency - ie color - at each bounce. Then those rays hit your eyes, boom, you see an image.
>
> Ray tracing replicates that basic idea when rendering. The problem is that real life has a shitload of rays - that 40W light bulb is pumping out ~148,000,000,000,000,000,000 (148 quintillion) photons a second. RT uses lots of clever optimizations but the basic one is just not use that many rays.
>
> In fact, RT uses so few rays that the raw image generated looks worse than a 2001 flip phone camera taking a picture at night. It doesn't even have connected lines, just semi-random dots. The job of the denoiser is to go and connect those dots into a coherent image. You can think of a denoiser kinda like anti-aliasing on steroids - antialiasing takes jagged lines, figures out what the artistic intent of those lines was supposed to be, and smoothes it out.
>
> The problem with anti-aliasing is that it can be blurry - you're deleting "real" pixels, and replacing them with higher res guesses. It's cleaner, but deletes real detail to get a smoother output. That's why DLSS 2 is also an anti-aliaser - DLSS 2 needs that raw, "real" pixel data to feed its AI model, with the goal of producing an image that keeps all the detail and smoothes the output.
>
> And that's why DLSS 2 has not always interacted well with RT effects. The RT denoiser runs before DLSS 2 does, deleting useful information that DLSS would normally use to show you a higher resolution image. DLSS 3.5 now replaces the denoiser with its own upscaler, just as it replaced anti-aliasing tech before it. This has four big advantages.
>
> The first and obvious one is that DLSS's upscaler now has higher quality information about the RT effects underneath. This should allow upscaled images using RT to look better.
>
> The second is that, hopefully, DLSS 3.5 produces higher quality RT images even before the upscaling.
>
> The third is that DLSS 3.5 is generic for all RT effects. Traditionally, you needed to write a separate denoiser for each RT effect. One for shadows, one for ambient occlusion, one for reflections, and so on. By using a single, adaptive tech for all of these, performance should improve in scenes that use multiple effects. This is especially good for memory bandwidth, where multiple denoisers effectively required extra buffers and passes.
>
> And finally, this reduces development costs for engine developers. Before this, each RT effect required the engine developer to write a hand-tuned denoiser for their implementation. Short term, as long as software solutions and cross-platform engines exist, that will still be required. But in the case of Nintendo specifically, first party development won't need to write their own RT implementations, instead leveraging a massive investment from Nvidia.
>
> There is a downside - DLSS 3.5 isn't a "free" upgrade from DLSS 2. It requires the developer to remove their existing denoisers from the rendering path, and provide new inputs to the DLSS model. In the case of NVN2, this would require an API update, not just a library version update. I haven't seen an integration guide for 3.5 yet, so I have no idea how extensive the new API is, but there will be some delay before this is in the hand of Nintendo devs.

I think Nintendo probably already has it. Nvidia has showcased DLSS 3.5 in Cyberpunk, and tomorrow will do it in Alan Wake 2. So CD Projekt Red and Remedy not only already have it but they implemented it, at least to the level it can be showcased.

Besides, Nvidia probably worked on this for a long time and Nintendo may have known to expect that. I'll look into Nvidia papers to see if I can find something like Ray Reconstruction, to know for how long this has been a thing.

> It literally couldn't use DLSS, though it could use an alternative super resolution method, like Starfield using FSR2. But of the current options DLSS is the one that seems to provide the best results starting from sub-1080p render resolutions*, so to get equivalent 1440p output quality a Series S version couldn't get away with stretching as far as a Drake version.
>
> *In this comparison across many games from earlier this year, in 4K Quality mode (1440p->4K), DLSS only has a slight advantage over FSR2, but as we move down the line (1080p->4K, then 960p->1440p, then 720p->1440p) its advantage grows. So maybe DLSS doing 720p->1440p would require something more like 960p->1440p from FSR2 to match? I don't know if anyone has done a comparison from that angle.

my point is in terms of performance, not something that's barely quantifiable as image quality. the larger public couldn't judge image quality to save their lives nor do they care to. if you want Drake to match IQ with Series S, that's easy, just reduce the settings (which is what's gonna happen anyway). but I don't think there will be any (output) resolution improvements over Series S. just because Drake can go lower, so can Series S. 4A went lower than 540p with Metro Exodus Enhanced Edition. Drake won't be able to go that low and hit 60fps and similar settings (I don't think Steam Deck does either).

if we're taking into account like for like settings, Drake will lose against Series S somehow and DLSS can't help that.

in other ray tracing news, UL Solutions added Solar Bay results to their mobile phone database. the Dimensity 9200+ takes the top spot at over 6000 points and an average frame rate of 23fps. the benchmark runs at the same quality settings and resolution (1440p) regardless of platform and hardware. the closest x86 device I can find is the AMD Radeon 660M

Best Smartphones and Tablets - August 2023

See the best Smartphones and Tablets ranked by performance.

Dark Cloud

Warpstar Knight

Thank god for Microsoft creating the Xbox Series S

> Thank god for Microsoft creating the Xbox Series S

Just wish they did a better job

Magic-Man

Perseus Jackson

- Pronouns

- He/Him

> Just wish they did a better job

They did an amazing job tho. It's a budget console.

> They did an amazing job tho. It's a budget console.

To play devil's advocate, Microsoft could have increased the amount of RAM the Xbox Series S has (e.g. 12 GB GDDR6, with 10 GB reserved for video games, and 2 GB reserved for the OS) and/or increased the RAM bus width (e.g. 256-bit), from the beginning. Alex Battaglia from Digital Foundry has heard from third party developers that the RAM is the Xbox Series S's biggest bottleneck. And besides, Microsoft's already selling the Xbox Series S at a loss.

Magic-Man

Perseus Jackson

- Pronouns

- He/Him

> To play devil's advocate, Microsoft could have increased the amount of RAM the Xbox Series S has (e.g. 12 GB GDDR6, with 10 GB reserved for video games, and 2 GB reserved for the OS) and/or increased the RAM bus width (e.g. 256-bit), from the beginning. Alex Battaglia from Digital Foundry has heard from third party developers that the RAM is the Xbox Series S's biggest bottleneck. And besides, Microsoft's already selling the Xbox Series S at a loss.

Wouldn't that make it cost more? Which would defeat the purpose of the console. It's to get people into the ecosystem for Gamepass.

> Wouldn't that make it cost more? Which would defeat the purpose of the console. It's to get people into the ecosystem for Gamepass.

the technical problems also risk defeating the purpose of the system. Microsoft's intention was to be more like the Switch's docked and handheld mode, where all devs have to do is lower the resolution for the Series S. but that's not what ended up happening and the differences are causing more headaches than anything else. not to mention it's a separate chip from the Series X, so cost would have been a problem regardless because they split their purchases between two chips

Magic-Man

Perseus Jackson

- Pronouns

- He/Him

> the technical problems also risk defeating the purpose of the system. Microsoft's intention was to be more like the Switch's docked and handheld mode, where all devs have to do is lower the resolution for the Series S. but that's not what ended up happening and the differences is causing more headaches than anything else. not to mention it's a separate chip from the Series X, so cost would have been a problem regardless because they split their purchases between two chips

It was never going to just lower the resolution though, that wouldn't lower the cost by 200 dollars. Sacrifices had to be made and I think it's worth it.

Shoulder

Koopa

> It was never going to just lower the resolution though, that wouldn't lower the cost by 200 dollars. Sacrifices had to be made and I think it's worth it.

It may be worth it for the consumer, especially if you love Gamepass, or just Xbox Live for that matter.

But for Microsoft, and the developers making games for it, it may or may not be worth it.

Microsoft still offers Xbox All Access, which starts at 25/month for a Series S vs 35/month for the Series X.

They provided such an amazing value, they forgot they’re still a business, and businesses need to make money.

- Pronouns

- he / him

> my point is in terms of performance, not something that's barely quantifiable as image quality. the larger public couldn't judge image quality to save their lives nor do they care to.

Then speaking of resolution is meaningless, they're part and parcel. If CPU is the limiting factor Series S will have a large advantage. But otherwise, something that's using DLSS on Redacted should require fewer FLOPs to get to a similar looking output, even if Series S is using FSR2. Whether it has enough to begin with for that difference to put it in the same ballpark, TBD.

LoneRanger

Chain Chomp

- Pronouns

- He/Him

Given some people's comments here, I just think the next Switch HW will have the same clock speeds as the OG Switch, thus its GPU performance in FLOPS will be...

Portable: 921/1152/1380 GFLOPS (307/384/460 MHz)

Docked: 2304/2763 GFLOPS (768/920 MHz)

In raw numbers on the portable side it's inferior to a PS4, but considering advancements in architecture and such, it's a PS4+ in real-life performance.

It's hard to compare it to the Xbox Series S as the CPU will be inferior, though as others said, well-optimized ports of Xbox Series S versions should be very doable, though maybe a little late (so not day one as on Sony/Microsoft HW).

Nintendo IPs that run at 900-1080p in OG Switch docked mode should get a big visual boost in their Switch NG entries.
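The GFLOPS figures listed for each clock in this post work out to about 3 GFLOPS per MHz. That ratio is inferred from the post's own numbers (it is what you would get from 2 FLOPs per core per clock on roughly 1500 cores), not a confirmed hardware spec. A minimal Python sketch with a hypothetical `gflops` helper:

```python
# Ratio implied by the post's figures (e.g. 921 GFLOPS at 307 MHz).
# This is an inference from the post, not a confirmed spec.
GFLOPS_PER_MHZ = 3.0


def gflops(clock_mhz: float) -> float:
    """Estimated FP32 throughput at a given GPU clock."""
    return clock_mhz * GFLOPS_PER_MHZ


PORTABLE_MHZ = (307, 384, 460)
DOCKED_MHZ = (768, 920)

for mode, clocks in (("Portable", PORTABLE_MHZ), ("Docked", DOCKED_MHZ)):
    for mhz in clocks:
        print(f"{mode} @ {mhz} MHz: {gflops(mhz):.0f} GFLOPS")
```

With this ratio 920 MHz comes out at 2760 GFLOPS; the 2763 figure in the post would correspond to about 921 MHz.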



Deleted member 887

Guest

> Sorry to ask and only if you don't mind answering, could you ELI5 for us non-tech people how modern feature sets would offset less raw power to produce on-par or better results, and would you be able to take a shot from that at how the scale and presentation of the next Zelda game may compare to Tears of the Kingdom?

Real Short Answer

DLSS BABY

Long, but hopefully ELI5, answer

There are actually dozens of feature differences between the PS4 Pro's 2013 era technology and Drake's 2022 era technology that allow games to produce results better than the raw numbers might suggest. But DLSS is the biggest, and easiest to understand.

Let's talk about pixel counts for a second. A 1080p image is made up of about 2 million pixels. A 4K image is about 8 million pixels, 4 times as big.
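The pixel counts above are easy to check. A quick Python sketch, assuming the usual 1920x1080 and 3840x2160 frame sizes for 1080p and 4K:

```python
def pixel_count(width: int, height: int) -> int:
    """Total pixels in a frame of the given size."""
    return width * height


p1080 = pixel_count(1920, 1080)
p4k = pixel_count(3840, 2160)

print(f"1080p: {p1080:,} pixels")
print(f"4K:    {p4k:,} pixels")
print(f"4K / 1080p = {p4k / p1080:.0f}x")
```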

All else being equal if you want to natively draw 4 times as many pixels, you need 4 times as much horsepower. It's pretty straight forward. One measure of GPU horsepower is FLOPS - floating-point operations per second. The PS4 runs at 1.8 TFLOPS (a teraflop is 1,000,000,000,000 FLOPS).

But that leads to a curious question - how does the PS4 Pro work? The PS4 Pro runs at only 4.2 TFLOPS. How does the PS4 Pro make images that are 4x as big as the PS4, with only a bit over 2x as much power?

The answer is checkerboarding. Imagine a giant checkerboard with millions of squares - one square for every pixel in a 4k image. Instead of rendering every pixel every frame, the PS4 Pro only renders half of them, alternating between the "black" pixels and the "red" ones.

It doesn't blindly merge these pixels either - it uses a clever algorithm to combine the last frame's pixels with the new pixels, trying to preserve the detail of the combined frames without making a blurry mess. This class of technique is called temporal reconstruction. Temporal because it uses data over time (ie, previous frames) and reconstruction because it's not just upscaling the current frame but trying to reconstruct the high res image underneath, like a paleontologist trying to reconstruct a whole skeleton from a few bones.
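The checkerboard idea described above can be shown with a toy Python sketch. It is deliberately naive: it renders half the pixels each frame and simply keeps the previous frame's values for the other half, which only works for a static scene. The cleverer merge the post describes (handling motion and avoiding blur) is not modelled, and every name here is made up for the example.

```python
def render_checkerboard(scene, prev_output, frame_index):
    """Render only one checkerboard colour this frame and reuse old pixels elsewhere.

    scene: 2D list of "true" pixel values for the current frame.
    prev_output: 2D list holding the previously reconstructed image.
    Returns the new reconstructed image and how many pixels were rendered.
    """
    height, width = len(scene), len(scene[0])
    output = [row[:] for row in prev_output]  # start from last frame's result
    rendered = 0
    for y in range(height):
        for x in range(width):
            # Even frames draw the "black" squares, odd frames the "red" ones.
            if (x + y) % 2 == frame_index % 2:
                output[y][x] = scene[y][x]
                rendered += 1
    return output, rendered


# A small static test scene (8x4 "pixels").
scene = [[(x * 7 + y * 13) % 256 for x in range(8)] for y in range(4)]
output = [[0] * 8 for _ in range(4)]

for frame in range(2):
    output, rendered = render_checkerboard(scene, output, frame)
    print(f"frame {frame}: rendered {rendered} of {8 * 4} pixels")

print("reconstruction matches scene:", output == scene)
```

Each frame costs half the pixel work, and after two frames the static image is complete; the hard part in a real game is what to do with the reused half when things move.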

This is how the PS4 Pro was able to make a high quality image at 4x the PS4's resolution, with only 2x the power. And the basic concept is, I think, pretty easy to understand. But what if we had an even more clever way of combining the images - could we get higher quality results? Or perhaps, could we use more frames to generate those results? And maybe, instead of half resolution, could we go as far as quarter resolution, or even 1/8th resolution, and still get 4k?

That's exactly what DLSS 2.0 does. It replaces the clever algorithm with an AI. That AI doesn't just take 2 frames, but every frame it has ever seen over the course of the game, and combines them with extra information from the game engine - information like, what objects are moving, or what parts of the screen have UI on them - to determine the final image.

DLSS 2.0 can make images that look as good or better as checkerboarding, but with half the native pixels. Half the pixels means half the horsepower. However, it does need special hardware to work - it needs tensor cores, a kind of AI accelerator designed by Nvidia and included in newer GPUs.

Which brings us to Drake. Drake's raw horsepower might be lower than the PS4 Pro - I suspect it will be lower by a significant amount - but because it includes these tensor cores it can replace the older checkerboarding technique with DLSS 2. This is why I said low-effort ports might not look as good. DLSS 2.0 is pretty simple to use, but it does require some custom engine work.

Hope that answers your question!

But what about Zelda?

It's kinda hard to imagine what a Zelda game would look like with this tech, especially since Nintendo reboots Zelda's look so often. But Windbound is a cel-shaded game highly inspired by Wind Waker, and it has both a Switch and a PS4 Pro version.

Here is a section of the opening cutscene on Switch. Watch for about 10 seconds

Here is the same scene on PS4 Pro. The differences are night and day.

It's not just that the Pro is running at 4k60, while the Switch runs at 1080p30. This isn't a great example, because Zelda was designed to look good on Switch, and this clearly wasn't - Windbound uses multiple dynamic lights in each shot, and either removes things that cast shadows (like the ocean in that first shot) making the scene look too bright and flat, or removes lights entirely (like the lamp in the next shot) making things look too dark.

> It was never going to just lower the resolution though, that wouldn't lower the cost by 200 dollars. Sacrifices had to be made and I think it's worth it.

I'm not arguing whether it was worth it or not (as I've repeatedly said it was the best idea MS had), I'm arguing that MS went about it the wrong way. they ended up causing more problems for themselves and developers than was needed. how much this will hurt them in the long run is up in the air but we're already seeing some instances of it like with Baldur's Gate 3. MS has to pray this doesn't get worse

> Then speaking of resolution is meaningless, they're part and parcel. If CPU is the limiting factor Series S will have a large advantage. But otherwise, something that's using DLSS on Redacted should require fewer FLOPs to get to a similar looking output, even if Series S is using FSR2. Whether it has enough to begin with for that difference to put it in the same ballpark, TBD.

there are lots of other aspects that will have to be taken into account like memory bandwidth and gpu performance differences. the IQ may be similar, but they'll be working on different settings, making it an apples to oranges comparison again

Dark Cloud

Warpstar Knight

Nintendo Switch successor reveal?

I’m not sure if you’re serious or not

Dimensio

Tektite

Nintendo Switch successor reveal?

I'm actually excited for Video Game 2, Video Game 1 was kind of mid overall but there were some really cool ideas there, I really hope they improve on the concept.

With that said, you should try actually reading the stuff you link.

- Pronouns

- he / him

I don't even think it's a given a Series S version would always have the higher settings. It's a plausible scenario where Series S has 1.5-2x GPU performance (versus docked), but also needs to render things with 1.5-2x as many pixels to achieve a similar image quality.

there are lots of other aspects that will have to be taken into account, like memory bandwidth and gpu performance differences. the IQ may be similar, but they'll be working on different settings, making it an apples to oranges comparison again.

theguy

Chain Chomp

Real Short Answer

DLSS BABY

Long, but hopefully ELI5, answer

There are actually dozens of feature differences between the PS4 Pro's 2013 era technology and Drake's 2022 era technology that allow games to produce results better than the raw numbers might suggest. But DLSS is the biggest, and easiest to understand.

Let's talk about pixel counts for a second. A 1080p image is made up of about 2 million pixels. A 4K image is about 8 million pixels, 4 times as big.

All else being equal, if you want to natively draw 4 times as many pixels, you need 4 times as much horsepower. It's pretty straightforward. One measure of GPU horsepower is FLOPS - floating-point operations per second. The PS4 runs at 1.8 TFLOPS (a teraflop is 1,000,000,000,000 FLOPS).
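To put rough numbers on that, here is a quick back-of-the-envelope sketch in Python. It assumes the "all else being equal" model above, where GPU cost scales linearly with pixel count. The function names are made up for illustration, and real games never scale this cleanly.

```python
# Back-of-the-envelope: how pixel count scales from 1080p to 4K, and what
# that implies if GPU cost scaled linearly with pixels (a big simplification).

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
}

def pixel_count(name):
    """Total pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height

def naive_tflops_needed(base_tflops, base_res, target_res):
    """TFLOPS needed at target_res if cost were purely proportional to pixels."""
    return base_tflops * pixel_count(target_res) / pixel_count(base_res)

PS4_TFLOPS = 1.8  # figure quoted in the post

print(pixel_count("1080p"))  # about 2 million
print(pixel_count("4K"))     # about 8 million
print(naive_tflops_needed(PS4_TFLOPS, "1080p", "4K"))  # roughly 7.2
```

Under this naive model, a PS4-style GPU drawing native 4K would want roughly 7.2 TFLOPS.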

But that leads to a curious question - how does the PS4 Pro work? The PS4 Pro runs at only 4.2 TFLOPS. How does the PS4 Pro make images that are 4x as big as the PS4, with only a bit over 2x as much power?

The answer is checkerboarding. Imagine a giant checkerboard with millions of squares - one square for every pixel in a 4k image. Instead of rendering every pixel every frame, the PS4 Pro only renders half of them, alternating between the "black" pixels and the "red" pixels each frame, and merges each new half with the pixels from the previous frame.

It doesn't blindly merge these pixels either - it uses a clever algorithm to combine the last frame's pixels with the new pixels, trying to preserve the detail of the combined frames without making a blurry mess. This class of technique is called temporal reconstruction. Temporal because it uses data over time (ie, previous frames) and reconstruction because it's not just upscaling the current frame but trying to reconstruct the high res image underneath, like a paleontologist trying to reconstruct a whole skeleton from a few bones.
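If it helps, the checkerboard idea can be sketched in a few lines of Python. This is a toy under heavy assumptions: `render_pixel` is a hypothetical stand-in for the expensive shading work, and the "merge" here is a plain copy of last frame's pixels, which only holds up for a static scene. Real checkerboard rendering reprojects the old pixels using motion data and is far smarter about it.

```python
def checkerboard_frame(render_pixel, previous, width, height, frame_index):
    """Render only half the pixels this frame; reuse the rest from `previous`.

    render_pixel(x, y, frame_index) is a hypothetical per-pixel shading function.
    Returns the merged frame and the number of pixels actually rendered.
    """
    parity = frame_index % 2              # which "colour" of square to draw
    merged = [row[:] for row in previous]  # start from last frame's pixels
    rendered = 0
    for y in range(height):
        for x in range(width):
            if (x + y) % 2 == parity:
                merged[y][x] = render_pixel(x, y, frame_index)
                rendered += 1
    return merged, rendered


if __name__ == "__main__":
    # Static toy "scene": each pixel's value depends only on its position.
    scene = lambda x, y, frame: x * 10 + y
    width, height = 4, 4
    frame = [[None] * width for _ in range(height)]
    for i in range(2):
        frame, count = checkerboard_frame(scene, frame, width, height, i)
        print(f"frame {i}: rendered {count} of {width * height} pixels")
```

After two frames every pixel has been drawn once, but each individual frame only paid for half of them.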

This is how the PS4 Pro was able to make a high quality image at 4x the PS4's resolution, with only 2x the power. And the basic concept is, I think, pretty easy to understand. But what if we had an even more clever way of combining the images - could we get higher quality results? Or perhaps, could we use more frames to generate those results? And maybe, instead of half resolution, could we go as far as quarter resolution, or even 1/8th resolution, and still get 4k?

That's exactly what DLSS 2.0 does. It replaces the clever algorithm with an AI. That AI doesn't just take 2 frames, but every frame it has ever seen over the course of the game, and combines them with extra information from the game engine - information like, what objects are moving, or what parts of the screen have UI on them - to determine the final image.

DLSS 2.0 can make images that look as good as or better than checkerboarding, but with half the native pixels. Half the pixels means half the horsepower. However, it does need special hardware to work - it needs tensor cores, a kind of AI accelerator designed by Nvidia and included in newer GPUs.

Which brings us to Drake. Drake's raw horsepower might be lower than the PS4 Pro - I suspect it will be lower by a significant amount - but because it includes these tensor cores it can replace the older checkerboarding technique with DLSS 2. This is why I said low-effort ports might not look as good. DLSS 2.0 is pretty simple to use, but it does require some custom engine work.

Hope that answers your question!

But what about Zelda?

It's kinda hard to imagine what a Zelda game would look like with this tech, especially since Nintendo reboots Zelda's look so often. But Windbound is a cel-shaded game highly inspired by The Wind Waker, and it has both a Switch and a PS4 Pro version.

Here is a section of the opening cutscene on Switch. Watch for about 10 seconds

Here is the same scene on PS4 Pro. The differences are night and day.

It's not just that the Pro is running at 4K60, while the Switch runs at 1080p30. This isn't a great example, because Zelda was designed to look good on Switch, and this clearly wasn't - Windbound uses multiple dynamic lights in each shot, and the Switch version either removes things that cast shadows (like the ocean in that first shot), making the scene look too bright and flat, or removes lights entirely (like the lamp in the next shot), making things look too dark.

Alovon11

Like Like

- Pronouns

- He/Them

Well the main thing is, look at RTX 3050M, which can be argued to be the closest PC counterpart to T239/Drake

That's a bit too much of a "best case" scenario.

I like Oldpuck's post of PS4+ more, which would make it inferior in raw numbers but modern technology will make it more than suitable to receive ports of Series S builds.

- 3050M

  - 16 SMs (2048 CUDA, 16 RT, 64 Tensor)

  - 4GB GDDR6 on a 128-bit bus, 192GB/s

    - With latency (based on GDDR6 latency tests on other systems) likely breaking the 140ns mark easily, and inflating to over 160ns when pushing higher data amounts.

  - 1.34GHz boost clock resulting in 5.5 TFLOPs FP32 on Samsung 8N

  - Has multiple degrees of overhead holding performance back and limiting those 5.5 TFLOPs from being fully used.

    - Windows overhead

    - DirectX overhead

    - Latency overhead as it's a dedicated GPU.

    - Shader Comp overhead

    - Unable to receive specific optimizations due to being a PC part.

- T239

  - 12 SMs (1536 CUDA, 12 RT, 48 Tensor)

  - 12+GB LPDDR5 on a 128-bit bus, likely 102GB/s at least docked.

    - However, latency would be far tighter, at worst half the latency of the GDDR6 in the 3050M/other consoles. So it can make repeat calls far faster, which can help dramatically for CPU/latency-dependent tasks like Ray Tracing.

  - ??? Clocks, however Thraktor best calculated in this post that T239 would probably best fit on TSMC 4N due to power concerns, if not die-size concerns. So running on his clocks, T239/Drake docked would be around 3.4 TFLOPs at 1.1GHz.

  - And unlike the 3050M, it would have little of the overhead

    - Custom OS that would be puny versus likely even the PS5 OS and especially Xbox's OS.

    - Custom API that has shown to be very close to metal

    - Less latency as the GPU is right next to the CPU and RAM

    - No Shader Comp overhead

    - Able to be specifically tuned for.

  - Has more cache overall than 3050M, as the GPU would have CPU-cache access and also, assuming NVIDIA still follows best ARM practices, a System-Level Cache.
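For anyone who wants to check the numbers in the list, here is a small Python sketch of the usual peak-throughput arithmetic. It assumes 128 FP32 CUDA cores per Ampere SM (so 16 SMs is 2048 cores and 12 SMs is 1536) and 2 FLOPs per core per clock (one fused multiply-add). The memory data rates, 12 Gbps per pin for the GDDR6 and 6.4 Gbps per pin for the LPDDR5, are my assumption, picked because they reproduce the 192GB/s and 102GB/s figures. The T239 clock is the speculative 1.1GHz from the list, not a confirmed spec.

```python
# Peak-throughput arithmetic for the two parts being compared.
# These are theoretical ceilings, not measured performance.

CORES_PER_SM = 128            # FP32 CUDA cores per Ampere SM
FLOPS_PER_CORE_PER_CLOCK = 2  # one fused multiply-add counts as 2 FLOPs

def peak_fp32_tflops(sm_count, clock_ghz):
    """Theoretical FP32 TFLOPS from SM count and clock speed."""
    cores = sm_count * CORES_PER_SM
    return cores * FLOPS_PER_CORE_PER_CLOCK * clock_ghz / 1000.0

def bandwidth_gb_s(bus_width_bits, data_rate_gbps):
    """Theoretical memory bandwidth in GB/s for a given bus and per-pin rate."""
    return bus_width_bits / 8 * data_rate_gbps

# 3050M: 16 SMs at the 1.34GHz boost clock, 128-bit GDDR6 (assumed 12 Gbps)
print(f"3050M: {peak_fp32_tflops(16, 1.34):.2f} TFLOPS, "
      f"{bandwidth_gb_s(128, 12.0):.1f} GB/s")

# T239: 12 SMs at a speculative 1.1GHz, 128-bit LPDDR5 (assumed 6.4 Gbps)
print(f"T239:  {peak_fp32_tflops(12, 1.1):.2f} TFLOPS, "
      f"{bandwidth_gb_s(128, 6.4):.1f} GB/s")
```

That works out to about 5.5 and 3.4 TFLOPS respectively, matching the figures above. None of the overhead or latency points in the list show up in this kind of math, which is exactly why the raw numbers only tell part of the story.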

And looking at 3050M...well it punches the PS4 into a 30ft hole. And actually trades blows with the arguably closest GPU (within the same architecture) to the Series S GPU, the 6500M.

So I say Series S and T239/Drake could be compared, unless you believe with 100% certainty that Nintendo and NVIDIA would really ship a mobile SoC on Samsung 8N in 2024/2025.

And then you add DLSS, Ray Reconstruction, and Ampere Mixed Precision, theoretically resulting in a Mixed-Precision TFLOP value over 5 TFLOPs with Accumulate FP16...yeah, I say T239 vs Series S would probably be closer than a lot of people are expecting, unless they really stick with 8N

That's where my prediction is, I don't think we'll see much (if any) difference in input and output resolutions for Drake and Series S. One will use DLSS, the other FSR2, with the only difference being the objects on screen

I don't even think it's a given a Series S version would always have the higher settings. It's a plausible scenario where Series S has 1.5-2x GPU performance (versus docked), but also needs to render things with 1.5-2x as many pixels to achieve a similar image quality.

NateDrake

Chain Chomp

If we want to factor in DLSS and such, sure; the visual gap between the two will be minimal, but that still goes back to the Switch Successor being PS4 Pro+ tier hardware with modern features to help it outperform its raw numbers. Going in expecting Series S in raw performance without DLSS factored in would be setting oneself up for disappointment. It'll be capable hardware, especially for its form factor.

Well the main thing is, look at RTX 3050M, which can be argued to be the closest PC counterpart to T239/Drake

So, in my honest opinion, the 3050M and T239 would likely perform similarly, with the 3050M's inefficiencies allowing T239's optimizations/efficiencies to match or overtake it.


Concernt

Optimism is non-negotiable

- Pronouns

- She/Her

My prediction, being down to earth about it, would be that we will see a difference in output resolution, but not necessarily image quality. As NG Switch games push internal resolutions lower and lower to keep up while trying to keep the output resolution reasonable, image quality will suffer, while Series S will have that lower image quality from the start by outputting a lower resolution.

That's where my prediction is, I don't think we'll see much (if any) difference in input and output resolutions for Drake and Series S. One will use DLSS, the other FSR2, with the only difference being the objects on screen

Lower performance, better upscaling is a very reasonable performance arena to expect NG Switch to be in, I think.

An example could be a game that runs in the 900-1080p range on Series S and outputs at 1080p-1440p if upscaling is applied, while that same game struggles to hold a consistent internal resolution of 720p on NG Switch and gets stretched to 4K with aggressive upscaling, resulting in a higher resolution image but no additional (or even less) detail.
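To make that example concrete, here is a rough Python sketch of how many output pixels each upscaler has to produce per pixel that was actually rendered. The exact dimensions for 900p, 1440p and so on are the standard 16:9 ones and are my assumption, since the example only gives the shorthand names.

```python
# How hard each upscaler is working in the example above:
# output pixels produced per internally rendered pixel.

RES = {
    "720p": (1280, 720),
    "900p": (1600, 900),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}

def upscale_factor(internal, output):
    """Ratio of output pixels to internally rendered pixels."""
    in_w, in_h = RES[internal]
    out_w, out_h = RES[output]
    return (out_w * out_h) / (in_w * in_h)

cases = [
    ("Series S, low end", "900p", "1080p"),
    ("Series S, high end", "1080p", "1440p"),
    ("NG Switch", "720p", "4K"),
]
for label, internal, output in cases:
    factor = upscale_factor(internal, output)
    print(f"{label}: {internal} -> {output} = {factor:.2f}x the pixels")
```

In this hypothetical, Series S is filling in less than 2x the pixels it rendered, while NG Switch is filling in 9x, which is why a higher output resolution doesn't have to mean more real detail.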

Please read this new, consolidated staff post before posting.

Furthermore, according to this follow-up post, all off-topic chat will be moderated.
