And here is that CPU compared to Orin at comparable clocks.

https://browser.geekbench.com/v5/cpu/compare/18647313?baseline=9535279

Orin per-core perf (the only really comparable metric, since cluster sizes are not the same) is 2.6x Jaguar. The CPU in the Pro consoles was clocked at 2.3 GHz, so roughly comparable. Unless I am doing something deeply stupid?

*2.84x, if normalized to the same frequency.
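A quick sketch of what "normalized to the same frequency" means for these two figures. It only uses the 2.6x and 2.84x quoted above; the function names are made up for illustration, and the actual clocks of the two Geekbench runs are not stated here, so the code only derives the clock ratio they imply.

```python
def normalize_to_same_clock(raw_ratio, clock_a_ghz, clock_b_ghz):
    """Per-clock ratio of chip A over chip B.

    raw_ratio is A's score divided by B's score at their tested clocks.
    Scaling by clock_b / clock_a removes the clock difference.
    """
    return raw_ratio * (clock_b_ghz / clock_a_ghz)


def implied_clock_ratio(raw_ratio, normalized_ratio):
    """How much higher B was clocked than A, given both ratios."""
    return normalized_ratio / raw_ratio


# 2.6x raw and 2.84x normalized are the figures from the posts above.
jaguar_over_orin_clock = implied_clock_ratio(2.6, 2.84)
print(f"Jaguar clock / Orin clock in the test: {jaguar_over_orin_clock:.3f}")
```

So the two figures are consistent if the Jaguar result was run at roughly 9% higher clocks than the Orin result.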

Neither, I was bouncing back and forth between comparing the Series S and the PS4 and swapped numbers.

PS4 is 18 CUs @ 800 MHz. Drake is 12 SMs @ ??? MHz. Raster perf scales linearly in both arches with clock and CU/SM count, and Ampere is 1.8x more efficient than GCN4 when controlling for clock and CU/SM count. At the same clocks, Drake is 20% more powerful than PS4 in raster perf.
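The 20% figure follows directly from the numbers in that post. A minimal sketch, assuming the linear scaling and the 1.8x per-unit factor exactly as claimed (both are the poster's premises, not measured values); the function name is just for illustration:

```python
PS4_CUS = 18
DRAKE_SMS = 12
SM_VS_CU = 1.8  # claimed Ampere SM vs GCN CU efficiency at equal clocks


def relative_raster(sms, cus, sm_vs_cu, sm_clock=1.0, cu_clock=1.0):
    """Raster perf of the SM-based GPU relative to the CU-based one.

    Assumes perf scales linearly with unit count and clock on both sides.
    """
    return (sms * sm_vs_cu * sm_clock) / (cus * cu_clock)


same_clock = relative_raster(DRAKE_SMS, PS4_CUS, SM_VS_CU)
print(f"Drake vs PS4 at equal clocks: {same_clock:.2f}x")  # 1.20x
```

Since Drake's clock is unknown, `sm_clock` and `cu_clock` are left as parameters; any clock above the PS4's widens the gap proportionally under the same assumptions.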

I’m not sure if that’s an apt comparison. The 28 SM 3060 outperforms the Vega 64, with its 64 CUs, by 20% as per TechPowerUp, while also having less memory bandwidth and roughly the same TFLOP count.

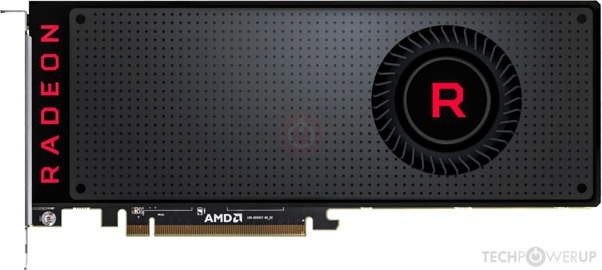

AMD Vega 10, 1546 MHz, 4096 Cores, 256 TMUs, 64 ROPs, 8192 MB HBM2, 945 MHz, 2048 bit

www.techpowerup.com

NVIDIA GA106, 1777 MHz, 3584 Cores, 112 TMUs, 48 ROPs, 12288 MB GDDR6, 1875 MHz, 192 bit

www.techpowerup.com

If we were to normalize it CU to SM, that is a 2.28:1 ratio, just using that kind of metric.

Add the 20% when at equal TFLOPs, and you get that an SM performs like 2.736 CUs of GCN5.0, which uses rapid packed math.

Or let’s compare the RX Vega 56 to the 3060 mobile.

56 CUs vs 30 SMs, both around 10 TF (the latter is a bit higher), and the 3060 mobile outperforms the Vega 56 by 11%.

So the performance would be 2.07 CU : 1 SM in this case. Without accounting for the TF, it is 1.866 CU : 1 SM to have roughly similar performance.
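The two CU:SM ratios can be reproduced with a few lines. This is only a sketch of the arithmetic in this post: the unit counts and the 20% / 11% performance leads are the ones quoted above, and `cu_per_sm` is a made-up helper name.

```python
def cu_per_sm(cus, sms, perf_lead=1.0):
    """GCN CUs needed to match one Ampere SM.

    cus / sms is the raw unit-count ratio; perf_lead scales it by how much
    faster the Ampere card is (1.20 means 20% faster).
    """
    return cus / sms * perf_lead


# Vega 64 (64 CUs) vs desktop 3060 (28 SMs), 3060 ahead by 20%
v64_raw = cu_per_sm(64, 28)
v64_adj = cu_per_sm(64, 28, 1.20)

# Vega 56 (56 CUs) vs 3060 mobile (30 SMs), 3060 mobile ahead by 11%
v56_raw = cu_per_sm(56, 30)
v56_adj = cu_per_sm(56, 30, 1.11)

for label, raw, adj in [
    ("Vega 64 vs 3060", v64_raw, v64_adj),
    ("Vega 56 vs 3060 mobile", v56_raw, v56_adj),
]:
    print(f"{label}: {raw:.3f} CU per SM raw, {adj:.3f} with the perf lead")
```

The unrounded Vega 64 values come out slightly above the 2.28 and 2.736 quoted, because those were computed after cutting the raw ratio off at two decimals.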

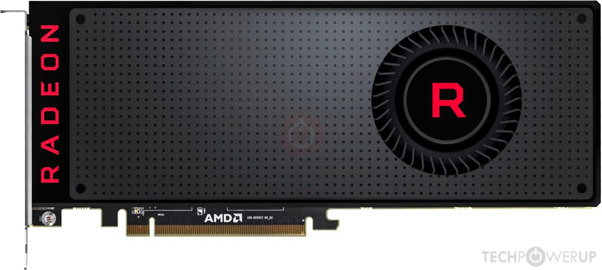

AMD Vega 10, 1471 MHz, 3584 Cores, 224 TMUs, 64 ROPs, 8192 MB HBM2, 800 MHz, 2048 bit

www.techpowerup.com

NVIDIA GA106, 1425 MHz, 3840 Cores, 120 TMUs, 48 ROPs, 6144 MB GDDR6, 1750 MHz, 192 bit

www.techpowerup.com

So, if we were to take it by strictly using the CU to SM method:

Using the latter example, to have the same performance, Drake would be 24.4% more performant/efficient than the PS4. If both were at the same 1.843 TF, then accounting for that, Drake would be 38% more performant.

If using the former example, then it is 52% more performant/efficient than the PS4 while having less. And if they are at the same TF, then 82.4% more performant/efficient than the PS4… which I think might be unrealistic?

Unless my math doesn’t check out here….
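For what it is worth, the arithmetic itself does check out. A small sketch that applies each CU:SM ratio from this post to 12 SMs against the PS4's 18 CUs; the ratios are taken as quoted (rounded), and the helper name is hypothetical:

```python
PS4_CUS = 18
DRAKE_SMS = 12

# CU-per-SM ratios as quoted in the post
RATIOS = {
    "3060 mobile vs Vega 56, unit count only": 1.866,
    "3060 mobile vs Vega 56, at equal TF": 2.07,
    "3060 vs Vega 64, unit count only": 2.28,
    "3060 vs Vega 64, at equal TF": 2.736,
}


def advantage_over_ps4(ratio):
    """Fractional lead of Drake over PS4 if one SM is worth `ratio` CUs."""
    return DRAKE_SMS * ratio / PS4_CUS - 1.0


results = {name: advantage_over_ps4(r) for name, r in RATIOS.items()}
for name, lead in results.items():
    print(f"{name}: {lead * 100:.1f}% ahead of PS4")
```

That gives 24.4%, 38.0%, 52.0% and 82.4%, matching the figures above. Whether the CU:SM method is a sound basis in the first place is a separate question.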

Let’s use the GCN3.0 Radeon R9 Fury, a 56 CU graphics card that has the same shader count as the 28 SM 3060. The latter outperforms it by 1.78x… but also has ~1.78x the TF, or close enough.

7.168TF vs 12.74TF

AMD Fiji, 1000 MHz, 3584 Cores, 224 TMUs, 64 ROPs, 4096 MB HBM, 500 MHz, 4096 bit

www.techpowerup.com
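The TF figures follow from the core counts and clocks in the TechPowerUp listings quoted in this post. A minimal sketch, assuming the usual peak FP32 formula of 2 FLOPs per shader core per clock (one fused multiply-add):

```python
def fp32_tflops(cores, clock_mhz):
    """Peak FP32 throughput in TFLOPs: cores * 2 FLOPs (FMA) * clock."""
    return cores * 2 * clock_mhz / 1e6


fury_tf = fp32_tflops(3584, 1000)     # R9 Fury: 3584 cores @ 1000 MHz
rtx3060_tf = fp32_tflops(3584, 1777)  # RTX 3060: 3584 cores @ 1777 MHz

print(f"R9 Fury:  {fury_tf:.3f} TF")
print(f"RTX 3060: {rtx3060_tf:.2f} TF")
print(f"TF ratio: {rtx3060_tf / fury_tf:.2f}x")
```

With identical shader counts the TF ratio is simply the clock ratio, which is why a 1.78x performance lead lines up with about 1.78x the TF here.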

So I’m not really sure using CUs and SMs is a good idea in this case; otherwise Drake would be more than 20% ahead of the PS4.

Additionally, while PS and Series X don't have Infinity Cache, they use a shared L3 cache between the CPU and the GPU.

They do not. AMD APUs don’t do that.

Intel, Apple Silicon, Snapdragon (via the SLC, which acts as an L4$ to the CPU) and NVIDIA SoCs do that. Also MediaTek, via the SLC (which acts as an L4 cache to the CPU).

And AMD does that by design. The L3 is exclusive for the CPU. GPU gets 0 access to that. It goes from the L2 to the VRAM and that’s about it.

None of their APUs do that “GPU access to the CPU L3”. They go straight to the RAM.

@Look over there has determined that Drake likely is a "well fed" system from a memory bandwidth perspective, in that the bandwidth available tracks with similarly sized Ampere GPUs. Looking at PS5/Series X, by comparison to their GPU counterparts, they are similarly well fed.

That is only if Drake doesn’t exceed 1.043GHz. After that it has worse memory bandwidth allocation per TF than desktop Ampere.

Basically, that is why I said before that the ceiling for Drake with respect to TF is 3.2; after that it doesn’t scale in its favor and you get a more imbalanced system, like the Tegra X1.
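The 1.043 GHz and 3.2 TF figures are two views of the same limit. A sketch of the conversion, assuming 12 SMs for Drake as discussed earlier in the thread and 128 FP32 cores per Ampere SM (which matches the 3060's 3584 cores across 28 SMs); the constant and function names are illustrative only:

```python
DRAKE_SMS = 12
CORES_PER_SM = 128  # assumption: same as desktop Ampere (3584 / 28)


def drake_tflops(clock_mhz, sms=DRAKE_SMS):
    """Peak FP32 TFLOPs at a given GPU clock (2 FLOPs per core per clock)."""
    return sms * CORES_PER_SM * 2 * clock_mhz / 1e6


def clock_for_tflops(tflops, sms=DRAKE_SMS):
    """GPU clock in MHz needed to reach a given peak FP32 TFLOPs."""
    return tflops * 1e6 / (sms * CORES_PER_SM * 2)


ceiling_tf = drake_tflops(1043)
print(f"12 SMs @ 1043 MHz: {ceiling_tf:.3f} TF")
print(f"Clock for 3.2 TF:  {clock_for_tflops(3.2):.0f} MHz")
```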

And it is also why I think that, with the GPU clocking to half in portable mode, a bandwidth of 68.2GB/s is “preferred” to balance the scaling from portable to docked as best as possible. If they used LPDDR5X it could make it easier, with 2x the bandwidth scaling alongside the 2x FP32 increase.

And yes it does account for the CPU.
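A rough sketch of the balance argument, in terms of GB/s per TF. The 68.2 GB/s portable figure and the half-clock portable mode are from this post; the 1043 MHz docked clock, 12 SMs and 128 cores per SM are assumptions carried over from the ceiling discussed above. This simple version does not model the CPU's share of bandwidth.

```python
PORTABLE_BW_GBPS = 68.2    # from the post
DOCKED_CLOCK_MHZ = 1043.0  # assumption: the ceiling clock discussed above
CORES = 12 * 128           # assumption: 12 SMs, 128 FP32 cores each


def tflops(clock_mhz):
    """Peak FP32 TFLOPs at a given GPU clock."""
    return CORES * 2 * clock_mhz / 1e6


def gbps_per_tf(bandwidth_gbps, clock_mhz):
    """Memory bandwidth available per TFLOP of peak FP32."""
    return bandwidth_gbps / tflops(clock_mhz)


portable_clock = DOCKED_CLOCK_MHZ / 2  # GPU clocks to half in portable mode
portable_ratio = gbps_per_tf(PORTABLE_BW_GBPS, portable_clock)

# Bandwidth needed docked to keep the same GB/s per TF when FP32 doubles
balanced_docked_bw = portable_ratio * tflops(DOCKED_CLOCK_MHZ)
print(f"Docked bandwidth for the same balance: {balanced_docked_bw:.1f} GB/s")
```

Doubling FP32 needs double the bandwidth, about 136.4 GB/s, to keep the same balance, which is the 2x scaling the post says LPDDR5X would make easier.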