kvetcha

hoopy frood

> the Series S is the best thing to happen to Drake

Nintendo better be sending a fruit basket to Redmond.

> you're still using Orin as a representative of what Nintendo would use when it isn't. Orin isn't made for gaming and Nvidia wouldn't sell it to Nintendo for such

IIRC, the Drake SoC is also based on the same Ampere arch and on Tegra Orin. So CUDA core count per SM is still applicable, and I believe the same can be said about GPU clock speeds. The fact that the TDP targets at the lower end also match what a device like the Switch would typically use only proves my point.

Also, why do you care about Orin not being targeted towards gaming? Like I said, the only important thing on that dev kit is the chip itself.

The original purpose of the hardware is meaningless if it can be repurposed for use within another platform.

Lots of power is being used for other parts. If you look back at the specs of Orin, the listed clocks for the GPU and CPU are low for the TDP because power is used elsewhere. Given the purpose of the SoC, it'd be an esoteric case to turn off those bits for the sake of more CPU/GPU power, which is why we don't actually know just how much power is devoted to the PVA/DLA/etc.: the general use case is with them being utilized.

> the Series S is the best thing to happen to Drake

Seriously.

> the Series S is the best thing to happen to Drake

I’m on it

Very good. These have been my expectations since Drake was leaked. Since DF reaches a wider audience and folks take them almost as gospel (what with the whole discussion about BotW2 showing Switch Pro footage...), I hope this can level-set expectations. I'm a little tired of hearing about lowballing specs 'because Nintendo' in a world after the Nvidia leak. And by lowballing I mean the unreasonable kind.

Small summary:

- Because games will be written specifically for the platform with a low-level API it will likely outperform Steam Deck due to the latter's OS overhead and unoptimised nature.

- On par with Series S is not gonna happen due to battery life, but with strategic nips and tucks (and DLSS + Ampere being more efficient compared to RDNA2) some games might be comparable while docked.

Seriously.

Also, looking at the cross-gen period we're in, none of the current crop of PS5/XSX games seem to push game design boundaries much. It's mostly PS4/XBO level games spruced up. That's also a blessing for Drake's image as a revitalised hybrid console.

Orin is an unusually bad Tegra SoC for a new Switch. Orin is a useful data point for a lot of things, but we know Drake is a custom chip, so even where we don't have hard info on Drake, we can make assumptions about changes. This makes performance-per-watt comparisons using Orin pretty difficult.

> And it's running surprisingly well on switch.

Plot twist of the week :O

> Despite you realizing that Nintendo doesn't go for even mid-range hardware but really low end, there doesn't seem to be any actual documentation or spec info available online that would reinforce your claims of a 4-core, 4-SM Orin SoC.

No one is claiming the existence of a 4-core, 4-SM Orin SoC. We have hard data on the design of Drake; we know it's a custom chip. If Nintendo wanted a custom chip with a far less ambitious goal, that would be possible, and we know Drake isn't that.

> - On par with Series S is not gonna happen due to battery life, but with strategic nips and tucks (and DLSS + Ampere being more efficient compared to RDNA2) some games might be comparable while docked.

Some games "might be comparable" to the Series S (when accounting for DLSS, architecture, etc.)? Wow. Just how weak are they expecting this thing to be? The Series S is 4 TFLOPS. If you assume the Switch 2 is 2 TFLOPS, with a better architecture and DLSS, then it should absolutely outperform the Series S in many key areas. So I guess they think it's gonna be like 1 TFLOP docked?

> It’s a portable system. Expecting better than Series S shows a complete lack of understanding.

They're talking about docked mode, not handheld.

> They're talking about docked mode, not handheld.

Docked… but always keep your expectations in check…

I'm gonna go crazy and say Drake could run Metro Exodus Enhanced at the same resolution (but lower non-RT settings) as the Series S at 30fps.

> Codename Drake will have an A78C class CPU with it, which was quite disruptive - A78C can clock up to 3.3GHz, but I suspect that it will be around 2-2.3GHz and believe the sub 2GHz consensus estimations on here to be woefully conservative.

The performance CPU cores on smartphone SoCs only run at high frequencies in short bursts, which helps prevent smartphone SoCs from getting too hot. Therefore, the performance CPU cores on smartphone SoCs are advertised as running at high frequencies, usually within the range of around 2-3 GHz (e.g. 1 Cortex-A78 core at 3 GHz and 3 Cortex-A78 cores at 2.6 GHz for the Dimensity 1300, 4 Cortex-A78 cores at 2.85 GHz for the Dimensity 8100, 4 Cortex-A78 cores at 2.75 GHz for the Dimensity 8000, 2 Cortex-A78 cores at 2.5 GHz for the Dimensity 920, 2 Cortex-A78 cores at 2.4 GHz for the Dimensity 900, 2 Cortex-A78 cores at 2.2 GHz for the Dimensity 930).

The CPU cores on Drake, which as mentioned is possibly the Cortex-A78C, on the other hand, have to run for sustained periods of time at the same frequency for TV mode and handheld mode. And nobody knows for certain which process node Nintendo and Nvidia decide to use to fabricate Drake. If Nintendo and Nvidia decide to fabricate Drake using Samsung's 8N process node, which is still a possibility, especially with Orin likely being fabricated using Samsung's 8N process node, running the CPU cores at 2-2.3 GHz could cause Drake to potentially run too hot, if the varying CPU frequencies for the various AGX Orin and Orin NX modules are any indication. And although Nintendo's new hardware is certainly going to have access to adequate cooling, unlike smartphones, generally speaking, the higher the frequencies, the lower the yield rate for chips. And Drake is a high volume chip, so ensuring high yields for Drake is in Nintendo's and Nvidia's best interest.

Although not related to Nintendo, Nvidia has been added as a board member of the UCIe (Universal Chiplet Interconnect Express) Consortium.

MediaTek Delivers Efficient Cortex-X2 | TechInsights (www.techinsights.com)

Our die-photo analysis reveals that the Dimensity 9000 features the smallest Cortex-X2 design, but Apple’s Avalanche CPU still leads in performance and power efficiency.

(Try removing the "?utm_source=Twitter&utm_medium=Social&utm_content=MediaTek+Delivers+Efficient+Cortex-X2&utm_campaign=2022+-+FY+-+Linley+Microprocessor+Report" portion after clicking on the link. But if that doesn't work, here's the archive of the TechInsights blog post.)

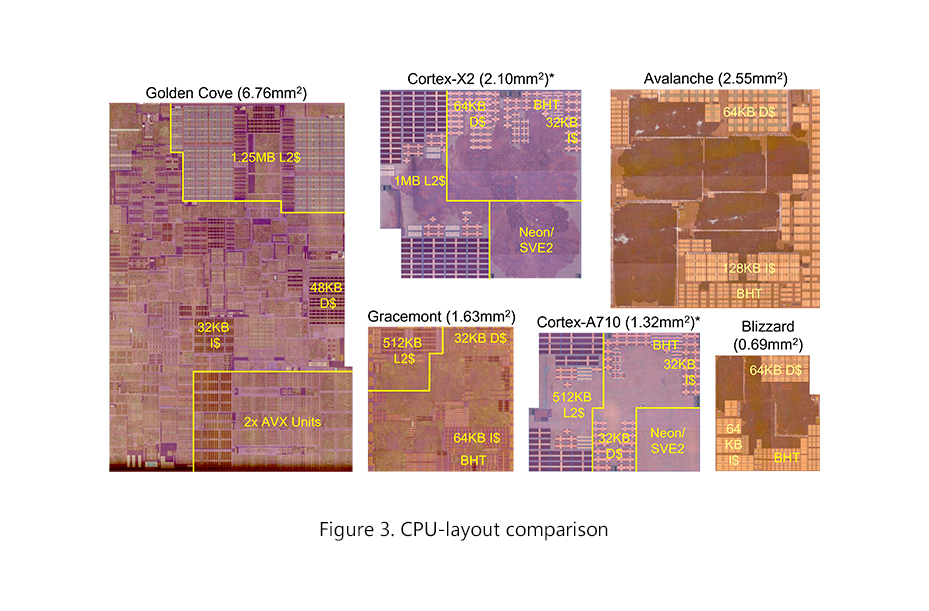

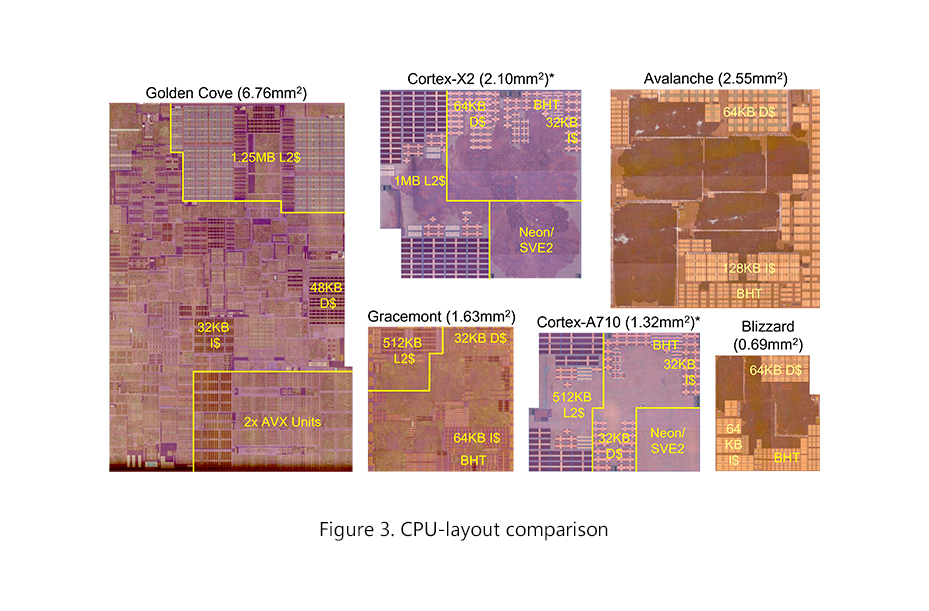

Although the die shot of the Cortex-A710 is from the Dimensity 9000, which is fabricated using TSMC's N5 process node, I think the Cortex-A710 could potentially give a very rough idea of how large the Cortex-A78 is. Considering that Arm advertises the Cortex-A710 having a 10% IPC increase compared to the Cortex-A78, and all of the Cortex-A710's major structures in the front end are exactly the same as the Cortex-A78's, I presume the Cortex-A78 is ~10% larger than the Cortex-A710, assuming TSMC's N5 process node is used for the comparison.

> I see a direct correlation between ipc and percentage size increase from you. Your basis for your presumption is the increase is directly related to something physically added to the footprint like transistors/cache?

I think so, considering that Arm has reduced the macro-OP (MOP) cache from 6 MOPs on the Cortex-A78 to 5 MOPs on the Cortex-A710, which does decrease the area and power consumption, but with a performance penalty, for the Cortex-A710.

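> I know we've been over this multiple times, but assuming 8 nm, is running all SMs in portable mode even feasible? If it is, wouldn't it be running below the lowest Switch clock?

If Drake is manufactured on 8nm, I would be amazed if they were running all 12 SMs in portable mode at any clock. All we have to do is look at the supported power states for the Jetson Orin variants (which can be accessed on Nvidia's developer site) to see how unlikely that is.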

The full-fat Jetson Orin 64GB version (which has 16 SMs and 12 A78 CPU cores) has power profiles going down to 15W. To get there, Nvidia have to disable most of the CPU and GPU cores, leaving them with 4 CPU cores at 1.1GHz and 6 SMs at 420MHz. Memory is also clocked down by half. Now, there are PVA and DLA components on there (also clocked down), and we know Drake's tensor cores are less powerful, but to assume that Nvidia and Nintendo would somehow manage to get twice as many CPU and GPU cores running at half the power consumption of Orin using the same architecture on the same manufacturing process is extremely wishful thinking.

Even the cut-down Jetson Orin NX 16GB, which is most similar to Drake in memory width, (assumed) CPU cores and GPU SMs, has to drop down to just 4 SMs at 625MHz to hit its 15W power mode.

Given what the new model is trying to do, though, dropping down to (say) 6 SMs in portable mode really isn't that bad of an idea. The original Switch had a 720p output resolution in portable mode, and a 1080p output in docked. That's a 2.25x difference in the number of pixels being output between the modes, and originally the Switch had a 2x difference in GPU clocks, and therefore performance, between the two modes (I believe 384MHz was the original portable clock, and the 460MHz was added later; happy to be corrected on that, though). What this meant is that the GPU performance per pixel was within the same ballpark in both modes, which makes it easier for developers to support both modes. Obviously there are other aspects like memory bandwidth which come into play, but the whole idea of docked vs portable modes is to balance the performance, resolution and power consumption across the two use-cases.

Coming to the new Drake-powered model, pretty much the most-repeated fact about it is that it outputs in 4K resolution. Most people in this thread seem to think it will have a 720p screen, which would mean that instead of a 2.25x difference in output resolution on the original Switch, this would have a 9x difference in output resolution. This is the main reason I personally believe it's more likely to have a 1080p screen, but even in that case there's a 4x difference in resolution between handheld and docked. If we keep the number of SMs the same between both modes, then we're unlikely to come close to the 4x difference in GPU performance, let alone anywhere near the 9x difference a 720p screen would bring. Realistically, we would probably have something like a 2x difference in clocks between the two modes, which would leave the performance per pixel either 2x higher in portable mode or 4.5x higher.

If they're using an 8nm chip, then it really should be thought of as adding extra SMs that are only going to be used in docked mode, rather than disabling SMs in portable mode. Running 12 SMs in portable mode would never have been on the table for 8nm, so their options were either to run say 6 SMs in both modes and have perhaps 420MHz portable/1GHz docked clocks, or add 6 more SMs, only run them in docked mode, and have say 420MHz portable/840MHz docked. The latter would provide a lot more performance in docked mode, and if they use a 1080p screen for portable mode, would give developers a 1:1 ratio of performance per pixel between the two. Of course it would also require higher power draw in docked mode (which is more achievable than in portable, as it's only a question of cooling, not battery life), and some additional cost in terms of increased die area, but Nintendo may have found those trade-offs worthwhile.
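The per-pixel arithmetic in this post can be checked with a few lines of Python. This is only a rough sketch: it treats GPU throughput as SM count times clock (an assumption that ignores memory bandwidth and everything else), the function names are made up for illustration, and the SM counts and clocks are the hypothetical ones from the post, not known Drake figures.

```python
from fractions import Fraction

# Output resolutions discussed in the post (width, height).
RESOLUTIONS = {"720p": (1280, 720), "1080p": (1920, 1080), "4K": (3840, 2160)}


def pixel_ratio(docked_res, portable_res):
    """How many times more pixels the docked output has than the portable one."""
    dw, dh = RESOLUTIONS[docked_res]
    pw, ph = RESOLUTIONS[portable_res]
    return Fraction(dw * dh, pw * ph)


def throughput(sms, clock_mhz):
    """Crude GPU throughput proxy: SM count x clock (an assumption, not a benchmark)."""
    return sms * clock_mhz


def portable_per_pixel_advantage(portable, docked, portable_res, docked_res):
    """Performance per pixel in portable mode relative to docked mode.

    `portable` and `docked` are (sms, clock_mhz) tuples. A result of 1 means
    both modes have the same GPU budget per output pixel.
    """
    perf_ratio = Fraction(throughput(*docked), throughput(*portable))
    return pixel_ratio(docked_res, portable_res) / perf_ratio


if __name__ == "__main__":
    print(pixel_ratio("1080p", "720p"))  # original Switch: 9/4, i.e. 2.25x
    print(pixel_ratio("4K", "720p"))     # 9
    print(pixel_ratio("4K", "1080p"))    # 4
    # Same 12 SMs in both modes, 2x docked clock:
    print(portable_per_pixel_advantage((12, 420), (12, 840), "1080p", "4K"))  # 2
    print(portable_per_pixel_advantage((12, 420), (12, 840), "720p", "4K"))   # 9/2
    # 6 SMs portable, 12 SMs docked at 2x clock, 1080p screen:
    print(portable_per_pixel_advantage((6, 420), (12, 840), "1080p", "4K"))   # 1
```

Under these assumptions, only the "extra SMs in docked mode plus a 1080p screen" option lands on the 1:1 ratio described in the post.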

The other possibility is that it's just not on 8nm. On a process in TSMC's 5nm family, like Nvidia are using for Hopper and Ada, it should probably be fine running all 12 SMs in portable mode. On either TSMC 7nm/6nm or Samsung 5nm/4nm it might be able to squeeze it, but it's hard to say without data like we have for Jetson Orin on 8nm.

The counterargument is that the 1536 CUDA core / 12 SM figure is hardcoded in several places in NVN2, which does seem to indicate it's a constant.

But NVN2, in the state it was in at the time of the theft, was definitely still a work in progress, so we probably can't draw conclusions like that from it.

> I see a direct correlation between ipc and percentage size increase from you. Your basis for your presumption is the increase is directly related to something physically added to the footprint like transistors/cache?

There's a rule of thumb (called Pollack's rule) that CPU core performance increases as the square root of the increase in complexity, whereas power draw generally increases linearly with complexity. So if, on the same manufacturing process, a core is 4x bigger than another, we should expect it to be about 2x as powerful. ARM's X1/X2 cores are a good example of this, as they're basically just bigger versions of the A78/A710. With the X1 (according to Anandtech) the die area and power consumption should both be about 1.5x higher than the A78's, and ARM claimed a 22% performance increase, which is almost exactly the square root of the die area increase.
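As a quick sanity check of those numbers, here is a minimal sketch of Pollack's rule as a square-root relationship between relative core area and relative performance. The function name is just for illustration, and the 1.5x area figure is the estimate cited in the post, not something measured here.

```python
import math


def pollack_speedup(area_ratio):
    """Pollack's rule of thumb: performance scales with the square root of core area."""
    return math.sqrt(area_ratio)


if __name__ == "__main__":
    print(pollack_speedup(4.0))            # 2.0: a 4x bigger core is about 2x as fast
    print(round(pollack_speedup(1.5), 3))  # 1.225: close to the 22% uplift ARM claimed for the X1
```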

> As far as I'm aware, the same places that specify 12 SMs for Drake also hardcode 16 SMs for Orin, 144 SMs for AD102, etc. I don't think there are any references to the reduced SM modes for Orin, the binned versions of desktop GPUs, etc., so there wouldn't necessarily have to be any reference to different modes for Drake in the stolen code, and it may be handled somewhere else (perhaps on Nintendo's side).

I believe the API calls for 12 SMs, but other places just have information on what the hardware specs of Orin (and others) are and their differences. Like AD102 having 144 SMs.

> They're talking about docked mode, not handheld.

They're not talking about either, they're talking about Switch in general.

> If people are expecting better than Series S even in docked then I think they’re going to be disappointed.

I don’t think anybody is expecting overall more power.

This is very good analysis; however, I think the performance-per-pixel ratios don't take DLSS properly into account. The performance-per-pixel number needs to be based on the targeted internal resolution, not the targeted output, with accommodations made for the additional frame budget DLSS needs.


It’s interesting that it matches it, but Ampere does it at a much lower memory bandwidth than GCN.

ARM didn't mention anything about size for the A710, so yeah, the A710 at the minimum is equal in transistor count to the A78, if not bigger. If there were any area savings, marketing would mention it (like the A78 having -5% area compared to the A77).

I don't particularly care for going by flop comparisons, but IIRC, going off of what Alovon posted some time ago, RDNA2 flops without Infinity Cache ended up comparable to RDNA1 flops, right? And RDNA1 flops were comparable to 1.25x GCN flops? And Ampere flops were comparable to GCN? So as far as raw rasterization power goes, Series S's 4 tflops convert to something like 5 tflops in Ampere?
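Spelled out, that chain of equivalences is just one multiplication. The sketch below uses only the rough per-architecture factors recalled in the post (they are forum estimates, not measurements), and the table and function names are made up for illustration.

```python
# Relative "worth" of one FLOP per architecture, with GCN as the baseline.
# These factors are the rough estimates from the post, not benchmark results.
FLOP_WEIGHT_VS_GCN = {
    "GCN": 1.0,
    "RDNA1": 1.25,
    "RDNA2": 1.25,  # RDNA2 without Infinity Cache treated as equal to RDNA1
    "Ampere": 1.0,  # Ampere treated as comparable to GCN
}


def convert_tflops(tflops, source_arch, target_arch):
    """Convert a TFLOPS figure from one architecture to a rough equivalent in another."""
    return tflops * FLOP_WEIGHT_VS_GCN[source_arch] / FLOP_WEIGHT_VS_GCN[target_arch]


if __name__ == "__main__":
    # Series S (RDNA2, 4 TFLOPS) expressed in "Ampere flops":
    print(convert_tflops(4.0, "RDNA2", "Ampere"))  # 5.0
```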

Hmm, looks like there's been a slight update to Samsung's eUFS catalog. So, it used to be the case that 3.0 and 3.1 were lumped together in the same page, and sometime earlier this year I've commented that the only 3.0 part remaining is the 1 TB part. That 1 TB 3.0 part can still be found on the website, but 3.0 got split off to its own page. And if you go up a level to Samsung's UFS page in general, they link only to 3.1 and 4.0. Annoyingly, for 3.1, the parts finder section only returns 128/256/512 GB parts, despite the images on the website clearly advertising a 1 TB version.

...oh, Micron's LPDDR5 catalog, since when did you grow from 3 pages to 4?

> It would really be frustrating if the next Switch can't get to 1440p without resolution scaling dipping a lot.
>
> We're too far away from the Switch for a 1080p console.
>
> @people being worried about fewer cores in mobile: with a 720p screen that should really not be too big of a problem.
>
> And I really hope it's better than the SD.
>
> I know some argue that the Steam Deck is not that old, so it won't be much better.
>
> But the Steam Deck is not a mass-market product like the Switch is, and games are not made natively for it. I would be shocked if games don't run much better on Switch, even with the same power.

Steam Deck is a more expensive product sold at a loss; Nintendo will not sell hardware at a loss.*

How far away from the PS4 will Drake be in portable mode???

Any advantages???

Please don’t laugh, I play a ton in that mode and was just curious.

> Steam Deck is a more expensive product sold at a loss; Nintendo will not sell hardware at a loss.*
>
> *There is the chance they do in individual regions due to currency exchange reasons, but it will not be a global thing and especially will not happen in NA.

Economy of scale is the thing I hope for. I am hoping for brute force roughly comparable to the SD, maybe slightly better, with optimization and low-level access punching above the SD, and in regards to price, 400-450€.

> How far away from the PS4 will Drake be in portable mode???
>
> Any advantages???
>
> Please don’t laugh, I play a ton in that mode and was just curious.

I play exclusively in handheld.

> It would really be frustrating if the next switch cant get to 1440p

It won't get to 1440p because 1440p isn't standard on TVs.

> It won't get to 1440p because 1440p isn't standard on tv's.

And 900p is not a TV standard, yet BotW renders at 900p docked and upscales it to 1080p as the output.

> And 900p is not a TV standard, yet BotW renderes 900p docked and upscales it to 1080p as the output.

Given DLSS, would there be any advantage to rendering at 1440p?

When I'm talking 1440p, I'm talking render resolution, not output resolution.

> Given DLSS, would there be any advantage to rendering at 1440p?

Honest answer? As long as it looks about right (comparable), I'm fine with however they accomplish that.

But my problem with DLSS: it is a specific implementation. It is not just there.

I could see many ports just not bothering. I could see some indies not having the resources to implement it just for the Switch port. If it's strong enough to brute force some games to 1440p, that would be great.

And then there's the fact that we don't know how effective DLSS on the Switch would be.

Or if it will be there, actually, since the console is not announced. (I know, Nvidia leak, but we've had a ton of leaks of stuff that Nintendo did not release, see NSO emulators... (for now))

What do you mean it's not there? If the porting team doesn't bother, it's more likely because the game won't benefit (it's a 2D game, for example), or because the game reaches its performance target without it. As for effectiveness, we know enough: TAAU works well on Switch, and the tensor cores would still offload work. Steam Deck and FSR2 is enough of a show on that.

> Correct me if I'm wrong, but can't DLSS be used as a drop in for TAA in game engines? Surely that must reduce the work needed.

If it has TAA, that reduces the work by a lot, but it also needs a bit of work, anywhere from a few days to a few weeks, to make sure it actually looks good.

the Series S is the best thing to happen to Drake