For the “fun” of it, here are some low-end specs I made up:

4 SMs (512 GPU cores), 500MHz handheld, 1000MHz docked. So 512 GFLOPs portable (not stronger than the current Switch docked in actual performance, about the same) and 1 TFLOP docked (weaker than the XB1 before DLSS).
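The GFLOPs figures follow from the usual peak FP32 formula: cores × 2 FLOPs per clock (one fused multiply-add) × clock speed. A minimal sketch, assuming 128 FP32 cores per SM (which is what 512 cores across 4 SMs implies) and counting theoretical peak throughput only:

```python
# Peak FP32 throughput: cores x 2 FLOPs per clock (one FMA) x clock.
# Assumes 128 FP32 cores per SM, as implied by 512 cores across 4 SMs.
CORES_PER_SM = 128
FLOPS_PER_CORE_PER_CLOCK = 2  # a fused multiply-add counts as 2 FLOPs


def fp32_gflops(sms: int, clock_mhz: float) -> float:
    """Return theoretical peak FP32 GFLOPs for a given SM count and clock."""
    cores = sms * CORES_PER_SM
    # cores * FLOPs/clock * MHz gives MFLOPs; divide by 1000 for GFLOPs
    return cores * FLOPS_PER_CORE_PER_CLOCK * clock_mhz / 1000.0


print(f"Handheld (4 SMs @ 500MHz):  {fp32_gflops(4, 500):.0f} GFLOPs")
print(f"Docked   (4 SMs @ 1000MHz): {fp32_gflops(4, 1000):.0f} GFLOPs")
# Current Switch for reference: 256 cores (2 SMs) at 768MHz docked
print(f"Current Switch docked:      {fp32_gflops(2, 768):.0f} GFLOPs")
```

On paper the current Switch comes out around 393 GFLOPs docked. Peak FLOPs don’t map one-to-one onto real performance across different architectures, which is why the comparison above is about actual performance rather than the raw number.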

4 A78 cores at 2GHz (1 clocked lower for the OS). No A55s.

6GB of LPDDR5 RAM

51GB/s of bandwidth docked, lower in handheld, on a 64-bit bus.
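51GB/s is what a 64-bit bus gives at LPDDR5’s top speed grade of 6400 MT/s. A small sketch of that arithmetic, assuming LPDDR5-6400 when docked; the handheld transfer rate below is a hypothetical placeholder, since the spec only says “lower”:

```python
# Peak memory bandwidth = transfers per second x bytes per transfer.
def bandwidth_gb_s(transfer_rate_mt_s: float, bus_width_bits: int) -> float:
    """Return peak bandwidth in GB/s for a given transfer rate and bus width."""
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mt_s * bytes_per_transfer / 1000.0  # MB/s -> GB/s


BUS_WIDTH_BITS = 64

docked = bandwidth_gb_s(6400, BUS_WIDTH_BITS)    # LPDDR5-6400 (assumed)
handheld = bandwidth_gb_s(3200, BUS_WIDTH_BITS)  # hypothetical lower rate

print(f"Docked:   {docked:.1f} GB/s")  # 51.2
print(f"Handheld: {handheld:.1f} GB/s")
```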

LCD screen (yes, ditching the OLED for “???” reasons)

128GB UFS 2.1 internal storage (or the same 64GB eMMC as the current Switch, to go even lower).

This is the low end. Like the lowest. The floor.

If you are speculating and somehow end up lower than this, you’re going above and beyond to be negative. I get being cautious, since the details aren’t clear-cut and are more fluid, but there’s a limit to how cautious you can be while staying realistic. Being cautious and somehow going lower than this is unrealistic and unreasonable.

Take into account that it’s supposed to be a derivative of an existing chip. A lot of the design is already done. They will customize it to what they need, as in removing what they don’t need.

ORIN, and by extension ORIN NX, are the chips it’s being derived from. But these are automotive-focused SoCs, so what can we reasonably assume is being removed? The automotive parts, of course.

However, consider that this will most likely be on an 8nm process: ORIN has only ever been reported as 8nm, and nothing official indicates that ORIN (or, by extension, ORIN NX) is on anything else. Not 7nm, not 5nm, not even 4nm. That matters because power draw has to be considered for a portable device like this.

The CPU cores in ORIN and ORIN NX have a max frequency of 2GHz. The GPU has a max frequency of 1GHz.

If we are going to discuss this thing, we can be optimistic or pessimistic, but there is a ceiling and a floor beyond which it just becomes unrealistic for other reasons.

Keep in mind that it will need to run on a battery while also powering a screen, RAM, Bluetooth, Wi-Fi, and other parts that drain the battery too.
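Every one of those parts draws from the same battery, so a rough runtime estimate is just capacity divided by total draw. In this sketch the 16 Wh capacity is an assumption (about what the current Switch’s battery is rated at), and every per-component wattage is a hypothetical placeholder, not a measurement:

```python
# Rough battery-life estimate: hours = capacity (Wh) / total draw (W).
# Every wattage below is a HYPOTHETICAL placeholder, not a measured figure.
BATTERY_WH = 16.0  # assumed capacity, roughly the current Switch's battery

# Hypothetical handheld power draw per component, in watts.
component_watts = {
    "soc": 4.0,       # CPU + GPU at handheld clocks (placeholder)
    "screen": 1.5,    # LCD panel + backlight (placeholder)
    "ram": 0.5,       # LPDDR5 (placeholder)
    "wireless": 0.5,  # Wi-Fi + Bluetooth (placeholder)
    "other": 1.0,     # storage, speakers, etc. (placeholder)
}


def battery_life_hours(capacity_wh: float, loads: dict[str, float]) -> float:
    """Return estimated runtime in hours for a constant total load."""
    total_w = sum(loads.values())
    return capacity_wh / total_w


total = sum(component_watts.values())
hours = battery_life_hours(BATTERY_WH, component_watts)
print(f"Total draw: {total:.1f} W -> about {hours:.1f} h")
```

Plugging in your own guesses shows how quickly an extra watt or two for the SoC or screen cuts into runtime.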

________________________________________

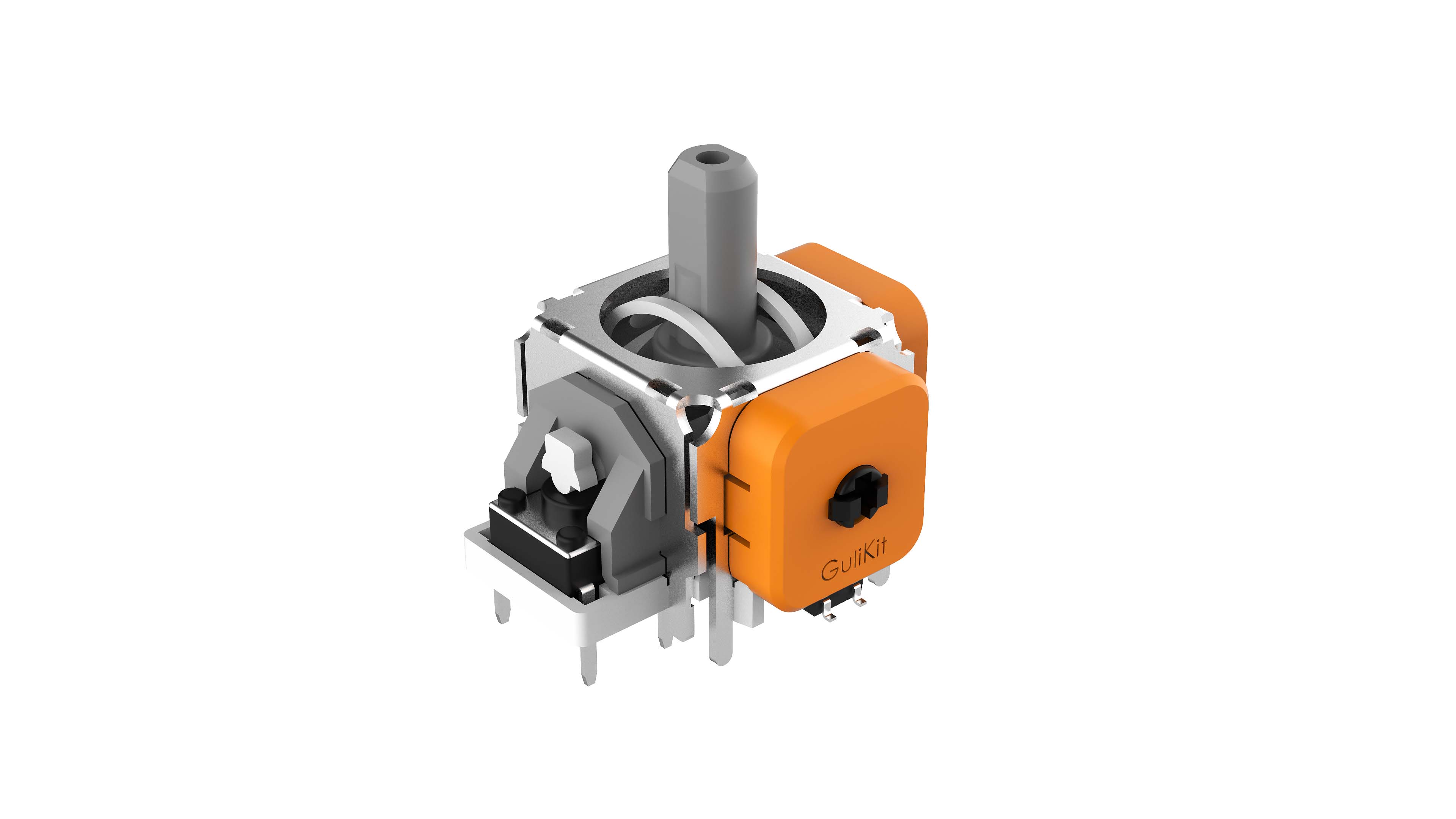

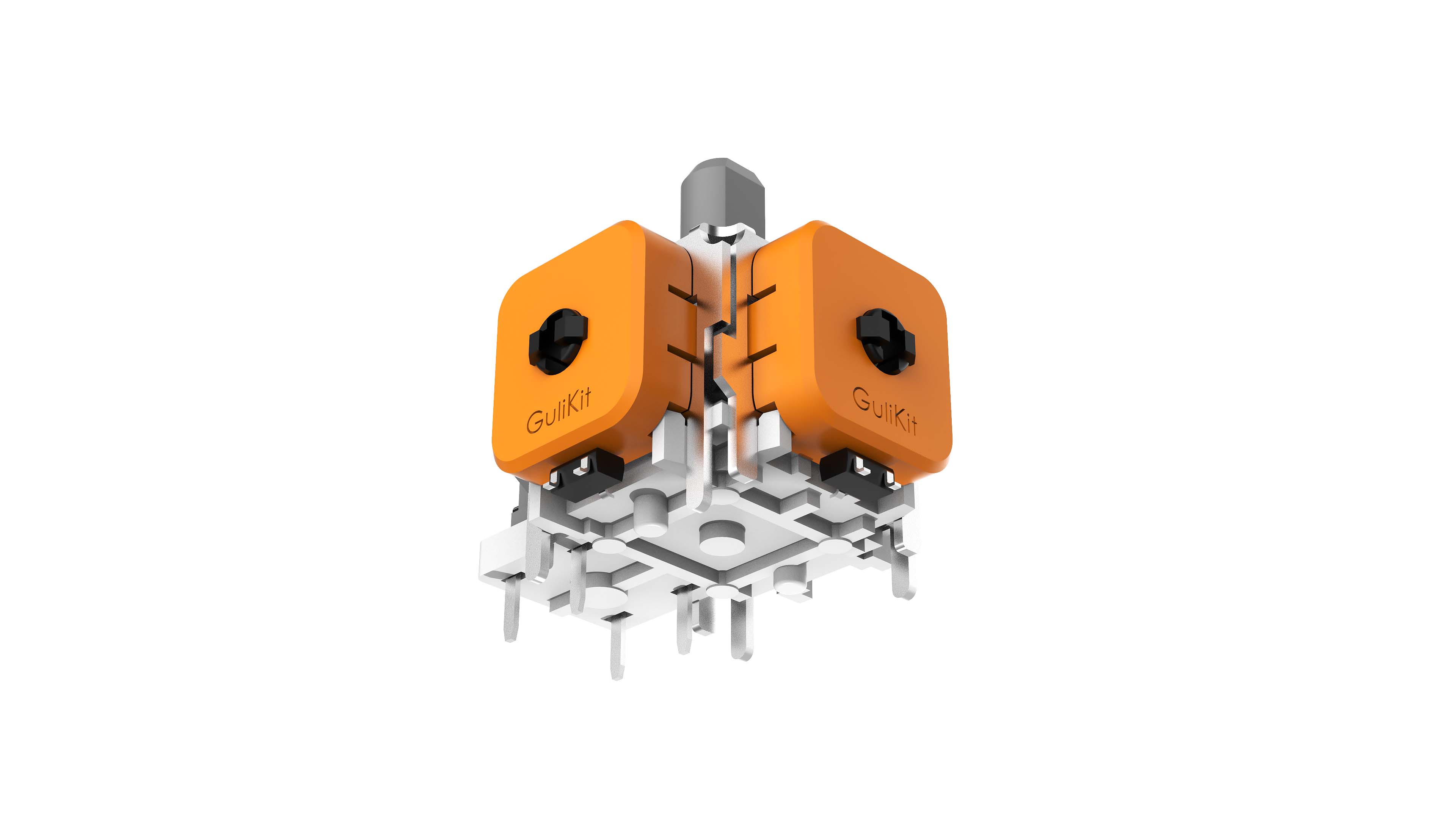

We can also discuss things other than how much power it will have. For example, the controller stick that FWD-BWD mentioned earlier, RT in games (when applicable), RT-accelerated sound, maybe a sensor integrated into the dock for the return of the Wiimote, or codecs that are useful in a device like this.

For those who read but don’t comment and want to: you are encouraged to talk about other things too!

The SoC is only one piece; there’s more to it.

Personally, I’d like the Pro Controller to get an update, but I love its long battery life.

And don’t hold yourself to a $300 price tag. Assume it’ll be $400 and give yourself more elbow room with the specs that aren’t SoC-related, as that’s a separate thing to consider.