Regardless of the node, this will be a massive jump based on the specs we know. With a better node, the floor is just higher.

I’ve read your comment like 5 times now. What does the “Switch times 1.5” mean? Is this just sarcasm?


StarTopic Future Nintendo Hardware & Technology Speculation & Discussion |ST| (Read the staff posts before commenting!)

- Thread starter Dakhil


Threadmarks

View all 18 threadmarks


Recent threadmarks

- Poll #3: When do you think is the launch window for Nintendo's new hardware?
- Announcement regarding links to news and rumours from 2022 and 2021
- Rough summary of the 2 August 2023 episode of Nate the Hate
- Rough summary of the 11 September 2023 episode of Nate the Hate
- Anatole's deep dive into a convolutional autoencoder
- Differences between T234 and T239
- Rough summary of the 19 October 2023 episode of Nate the Hate
- necrolipe's Twitter (X) post on why a 2025 launch doesn't automatically make T239 outdated based on one of the leaked slides from Microsoft

Staff Communication

View all 12 threadmarks


Recent threadmarks

- Regarding Hogwarts
- Staff Communication 05/26/23
- Staff Post about Citing Leaks and Rumors
- Staff Post about reading Hidden Content
- Staff Post- Please read
- Keeping on topic
- New Switch 2 ST Made - Nontechnical Talk Has A New Home
- Staff Post Regarding Dubious Insiders And Better Sourcing

Toad_Kinopio

Rattata

Depending on how the nanometer stuff pans out, at the extreme ends we might be looking at "Ridiculously more powerful than 2017 Switch" and "Ridiculously more powerful than 2017 Switch times 1.5".

As we can see, the AGX Orin is a monster at higher power consumption, yet if the TDP is no more than 10 W, the actual performance of Drake could be only at the level of the Orin Nano.


Deleted member 887

Guest

We all know Nintendo can market it however they want, and they can squeeze every ounce of power to make their first party games look amazing, but I’m wondering about the possibility of “miracle” PS5/XSX ports on 8nm Drake.

The possibility is pretty clear just based on the architecture and minimum clocks. What actually happens is subject to a lot of other factors.

A die shrink is very unlikely for 8nm.

Ah well. I’ll just wait and see. My last thought is that if 8nm Drake gets the “Mariko” treatment and a die shrink later on, it won’t be for more power but for battery life again. Versus if they did 6nm now, then 3nm in a few years.

I know that’s the conventional wisdom around here, but is a shrink to EUV really that much worse than the Mariko shrink to FinFET? Is there a source on this?

A die shrink is very unlikely for 8nm.

RennanNT

Bob-omb

I don't know how much harder it is to shrink to EUV, and I'm a layman in the field, but even if it's a lot harder, Nvidia is using AI to cut die-shrink labor by a huge margin, so I wouldn't assume a die shrink from 8nm now would be more expensive for Nvidia than Erista's die shrink was at the time.

I know that’s the conventional wisdom around here, but is a shrink to euv really that much worse than the Mariko shrink to finfet? Is there a source on this?

Essentially, it's a way of automating the process of migrating a cell, like a fundamental building block of a computer chip, to a newer process node.

...

The tool Nvidia uses for this automated cell migration is called NVCell, and reportedly 92% of the cell library can be migrated using this tool with no errors. Then 12% of those cells were smaller than the human-designed cells.

"This does two things for us. One is it’s a huge labor saving. It’s a group on the order of 10 people will take the better part of a year to port a new technology library. Now we can do it with a couple of GPUs running for a few days. Then the humans can work on those 8 percent of the cells that didn’t get done automatically. And in many cases, we wind up with a better design as well. So it’s labor savings and better than human design."

- Pronouns

- he / him

It's kind of blowing up right now that Gotham Knights will be limited to 30fps on PS5 and Series S/X. Since their statement says it's more to do with having a big multiplayer open world rather than something they can work around by lowering resolution, sounds like a CPU limitation. Bringing this up as it seems like a rare example of a current game where a Drake version might not be viable.

ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

The bare minimum for low 1080p+60 is a CPU that’s weaker than the ones in the consoles, so it isn’t that.

It's kind of blowing up right now that Gotham Knights will be limited to 30fps on PS5 and Series S/X. Since their statement says it's more to do with having a big multiplayer open world rather than something they can work around by lowering resolution, sounds like a CPU limitation. Bringing this up as it seems like a rare example of a current game where a Drake version might not be viable.

The issue is multifaceted, and I’d wager one part of it is not having their priorities in order and having to scramble at the last minute, while keeping their vision intact.

I know that’s the conventional wisdom around here, but is a shrink to euv really that much worse than the Mariko shrink to finfet? Is there a source on this?

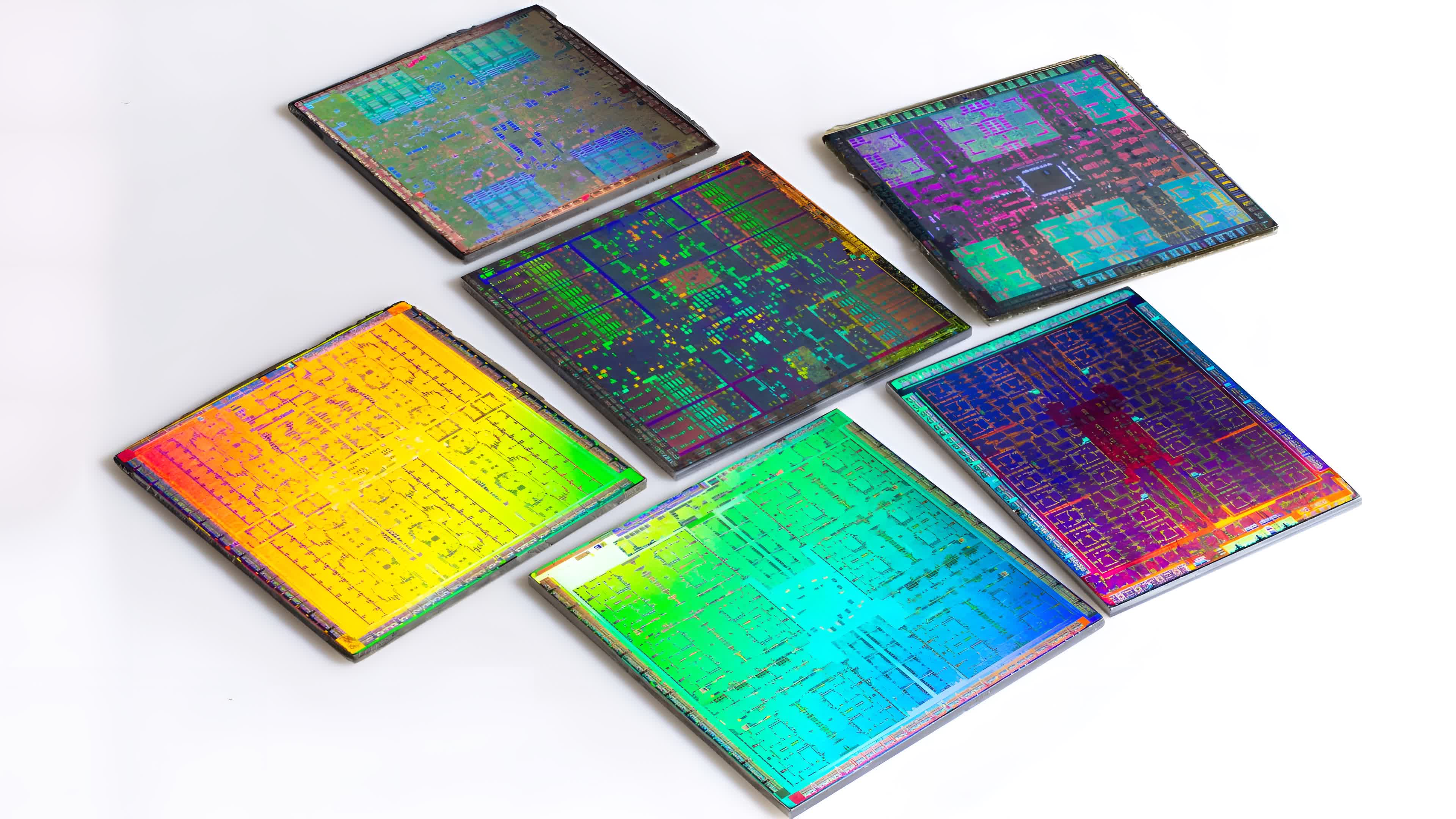

The issue isn’t so much the shrinking process. The issue is that Nvidia has no other products on Samsung's newer nodes. The only other foundry where Nvidia has products is TSMC. If Nvidia is going to shrink the Drake chip to a more recent node, it stands to reason that the most likely destination is a foundry where Nvidia products already are, just as the TX1's shrink followed the Pascal GPUs.

I don't know how much harder it is to shrink to euv and I'm a layman in the field, but even if it's a lot more, NVidia is using AI to reduce die shrink labor by a huge margin, so I wouldn't assume die shrinking from 8nm now would be more expensive to NVidia than Erista's die shrink was at the time.

Nvidia customizes a node for its own needs and purposes. Samsung's IP remains at Samsung and TSMC's IP remains at TSMC; that IP is built into the chip and cannot be brought over.

The likelihood of Nintendo paying an absolute fuck ton of money just to have a chip rebuilt with a different foundry's IP and technology is so low that they'd be better off paying to make a whole new chip with a whole new feature set and releasing that as a whole new console. The more recent the node, the more expensive the R&D to actually develop a new chip on it.

At least the shrinking effort is reduced by Nvidia using AI, so that’s less of a concern, but that doesn't solve the problem of Samsung IP at a TSMC foundry.

If this weren't such an issue, I feel as though Nintendo could have just shrunk the Tegra X1 to the 8N process and made that a Switch Pro or something.

Unless, you know, Nvidia just moves it to SEC 5LP or 4LP, or 3, which is supposed to release not too long after.


eK-XL

Rattata

- Pronouns

- He

Before their November 2020 launches, the PS5 entered manufacturing in June 2020 and the Series X and Series S entered manufacturing in late July 2020. They would need to start manufacturing sometime around December to February, possibly sooner if they are going for a March or April launch.

Nvidia CEO Jensen Huang: ‘The semiconductor industry is near the limit’

Jensen Huang and his wife, Lori Huang, are donating $50 million to Oregon State University to help fund the development of a new innovation complex that will include a Nvidia supercomputer.

www.protocol.com


⋮

The semiconductor industry is near the limit. It's near the limit in the sense that we can keep shrinking transistors but we can't shrink atoms — until we discover the same particle that Ant Man discovered. Our transistors are going to find limits and we're at atomic scales. And so [this problem] is a place where material science is really going to come in handy.

A great deal of the semiconductor industry is going to be governed by the advances of material sciences, and the material sciences today is such an enormously complicated problem because things are so small, and without a technology like artificial intelligence we're simply not going to be able to simulate the complicated combination of physics and chemistry that is happening inside these devices. And so artificial intelligence has been proven to be very effective in advancing battery design. It's going to be very effective in discovery and has already contributed to advancing more durable and lightweight materials. And there's no question in my mind it is going to make a contribution in advancing semiconductor physics.

When something dies? It might be reincarnated, but it dies. The question is, what's the definition of Moore’s law? And just to be serious, I think that the definition of Moore's law is about the fact that computers and advanced computers could allow us to do 10 times more computing every five years — it's two times every one and a half years — but it's easier to go 10 times every five years, with a lower cost so that you could do 10 times more processing at the same cost.

Nobody actually denies it at the physics level. Dennard scaling ended close to 10 years ago. And you could see the curves flattened. Everybody's seen the curves flatten, I'm not the only person. So the ability for us to continue to scale 10 times every five years is behind us. Now, of course, for the first five years after, it's the difference between two times and 10 times — you could argue about it a little bit and we're running about two times every five years. You could argue a little bit about it, you can nip and tuck it, you could give people a discount, you could work a little harder, so on and so forth. But over 10 years now, the disparity between Moore's law is 100 times versus four times, and in 15 years, it's 1,000 times versus eight.

We could keep our head in the sand, but we have to acknowledge the fact that we have to do something different. That's what it's really about. If we don't do something different and we don't apply a different way of computing, then what's going to happen is the world's data centers are going to continue to consume more and more of the world's total power. It's already noticeable, isn't that right? It means the moment it gets into a few percent, then every year after that, it will [continue]. Every five years it will increase by a factor of 10.

So this is an imperative. It's an imperative that we change the way we compute, there's no question about it. And it's not denied by any computer scientists. We just have to not ignore it. We can't deny it. And we just have to deal with it. The world's method of computation cannot be the way it used to be. And it is widely recognized that the right approach is to go domain by domain of application and get accelerated with new computer science.

⋮
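Huang's figures are plain compound growth: multiplying by a factor f every p years gives f^(t/p) after t years. A small Python check of the numbers in the quote, using only the rates he states (10× per five years, 2× per 1.5 years, and the roughly 2× per five years he says we see now):

```python
def compound_growth(factor: float, period_years: float, years: float) -> float:
    """Total growth after `years` when performance multiplies by `factor` every `period_years`."""
    return factor ** (years / period_years)


if __name__ == "__main__":
    # "two times every one and a half years" is roughly "10 times every five years"
    print(f"2x per 1.5 years over 5 years: {compound_growth(2, 1.5, 5):.2f}x")

    # Moore's-law pace (10x per 5 years) vs. the ~2x per 5 years Huang describes today
    for years in (5, 10, 15):
        moore = compound_growth(10, 5, years)
        today = compound_growth(2, 5, years)
        print(f"{years:>2} years: {moore:,.0f}x vs {today:,.0f}x")
```

This reproduces the 100× vs 4× (10 years) and 1,000× vs 8× (15 years) gaps he quotes, and shows that doubling every 1.5 years works out to about 10× over five years.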


Deleted member 887

Guest

Leaks aren’t guaranteed from production, though we’ve had several possible production leaks already. It’s just that until the product releases, we won’t know which are valid.

The lack of news is disheartening. Assuming a May launch, when should production (and therefore leaks) start?

In the case of the Lite, what leaked was that it existed, but we already know that, and the press and Nintendo have already been heavily burned by 2021. Nintendo cracking down at the same moment that everyone needs to triple-check their sources means stuff isn’t bubbling up in the same way.

Concernt

Optimism is non-negotiable

- Pronouns

- She/Her

I think it's possible, if not likely, that manufacturing has already begun in part, with final assembly and firmware flashing either ongoing or about to come online.

Before their November 2020 launches the PS5 entered manufacturing in June 2020 and the Series X and Series S entered manufacturing in late July 2020. They would need to start manufacturing sometime around December - February, possibly sooner if they are going for a March or April launch

Reason being we still had that factory leak that had the OLED Special Editions, the reveal date of one of them, and details about the production of parts for a new model, with a new backplate, with validation starting around May if I'm not mistaken and final part production in the summer period. That would definitely line up with assembly starting between now and February if it hasn't already.

So many rumours pointing to late 2022/early 2023 were true in other ways, and it's still our working assumption. If they've had this on the boil for this long, I see no reason to doubt that timeframe, and no reason to doubt the factory leaks we've heard of.

- Pronouns

- He/Him

I think the reality is that chip makers have to start moving into purpose-built computing instead of general-purpose computing to make up for the lack of efficiency gains from die shrinks alone. And Nvidia seems to understand this; I have no doubt that the specialty cores in its GPU designs are there precisely to address this problem for at least a few industries, and we’re likely to see more of that moving forward.

Nvidia CEO Jensen Huang: ‘The semiconductor industry is near the limit’

Jensen Huang and his wife, Lori Huang, are donating $50 million to Oregon State University to help fund the development of a new innovation complex that will include a Nvidia supercomputer.

www.protocol.com

But materials (read: advancements like graphene) need to come into play sooner rather than later. And in terms of ramp-up of power consumption, resolving the electricity loss in generation and transmission (which will require investment in infrastructure) could cushion the blow he describes, as well.


Deleted member 887

Guest

Many of them have. The entire mobile industry functions this way. The recent updates from More Moore that @Dakhil posted are pretty insightful about how little additional specialization and consolidation have left to give us.

I think the reality is that chip makers have to start entering purpose-built computing instead of general-purpose computing to make up the lack of efficiency gains from die shrinks alone.

You’re absolutely right, especially on exotic materials. We’re likely a hundred years away from a generational leap in power transmission, unfortunately. That’s a situation that will get worse for a long time before it gets better.

But materials (read: advancements like graphene) need to come into play sooner rather than later. And in terms of ramp-up of power consumption, resolving the electricity loss in generation and transmission (which will require investment in infrastructure) could cushion the blow he describes, as well.

- Pronouns

- He/Him

Graphene is kinda becoming the fusion of the materials world, it's always 10-15 years away from being viable. I was doing research work on graphene like 13 years ago and was told how it would change the world in like 5 years and we're no closer today towards achieving viable mass production.

- Pronouns

- He/him

Yeah, I'm pretty sure I learned about graphene and its amazing potential in high school lol

Graphene is kinda becoming the fusion of the materials world, it's always 10-15 years away from being viable. I was doing research work on graphene like 13 years ago and was told how it would change the world in like 5 years and we're no closer today towards achieving viable mass production.

SiG

Chain Chomp

So graphene is the new silicon?

Graphene is kinda becoming the fusion of the materials world, it's always 10-15 years away from being viable. I was doing research work on graphene like 13 years ago and was told how it would change the world in like 5 years and we're no closer today towards achieving viable mass production.

Alovon11

Like Like

- Pronouns

- He/Them

I think it's more that, in the eyes of companies, the benefits of switching to graphene don't outweigh the cost of switching at the moment.

Yeah I'm pretty sure I learned about graphene and its amazing potential in high school lol

ShadowFox08

Paratroopa

I think a newer and better node (ahem, TSMC) does allow for a significantly higher upper bound in handheld, which would also affect docked mode. Nintendo is likely targeting a specific wattage for both modes. If you can get a 1.5 Tflops handheld

No.

The clocks on 8nm won't be all that different from clocks on 5nm, there is a lower bound they can't really pass and an upper bound that comes up pretty quickly regardless of node. We already more or less have the full specs, it gives us a pretty narrow range of performance, this thing will not be able to be all that different just based on clocks. If they want to call it a "pro" or a "2" or a ganache is totally up to them, the process node is like, the least likely determining factor in that decision.

As I said yesterday the worry over node is getting a bit silly.

Oh, and of course... better battery life.
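The clock argument above can be made concrete with the usual peak-FP32 estimate for an Nvidia GPU: each CUDA core can issue one fused multiply-add (two floating-point operations) per clock, so peak FLOPS = 2 × cores × clock. A minimal sketch, assuming a 1536-CUDA-core GPU (the figure commonly reported for T239, which is not stated in this thread); the clocks in the loop are hypothetical, not leaked figures:

```python
# Assumption: 1536 CUDA cores, the figure commonly reported for T239 (not stated in this thread).
CUDA_CORES = 1536
# Each CUDA core can issue one FP32 fused multiply-add per clock, counted as 2 FLOPs.
FLOPS_PER_CORE_PER_CLOCK = 2


def peak_tflops(cores: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS at a given GPU clock."""
    return cores * FLOPS_PER_CORE_PER_CLOCK * clock_mhz * 1e6 / 1e12


def clock_for_tflops(cores: int, target_tflops: float) -> float:
    """GPU clock in MHz needed to reach a target peak FP32 throughput."""
    return target_tflops * 1e12 / (cores * FLOPS_PER_CORE_PER_CLOCK) / 1e6


if __name__ == "__main__":
    for mhz in (400, 600, 800, 1000):  # hypothetical clocks, not leaked figures
        print(f"{mhz:>4} MHz -> {peak_tflops(CUDA_CORES, mhz):.2f} TFLOPS")
    print(f"1.5 TFLOPS needs about {clock_for_tflops(CUDA_CORES, 1.5):.0f} MHz")
```

Because peak throughput is linear in clock, a narrow range of achievable clocks maps directly to a narrow range of performance; under the assumed core count, a 1.5 TFLOPS handheld mode would need roughly 488 MHz. Peak FLOPS is a ceiling, not a measure of real game performance.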

Witcher 3 is 30fps on last-gen consoles and Switch.

It's kind of blowing up right now that Gotham Knights will be limited to 30fps on PS5 and Series S/X. Since their statement says it's more to do with having a big multiplayer open world rather than something they can work around by lowering resolution, sounds like a CPU limitation. Bringing this up as it seems like a rare example of a current game where a Drake version might not be viable.

There are a lot of unknowns here. I'm assuming it's capped at 30, but uncapped it could be above 40 yet all over the place (an unstable framerate), so it's better to cap at 30fps. So Drake could potentially still target 30fps as well, not to mention further optimization if it comes out later than the PS5/Series X versions. There's a much better chance of getting 30 fps if the CPU gap is smaller than it was between Switch and PS4.
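One reason 30 fps is the usual fallback on a 60 Hz display with v-sync: 30 divides 60, so every frame stays on screen for exactly two refreshes (33.3 ms). A rate that does not divide the refresh rate gives uneven on-screen times even when the game renders perfectly steadily. A minimal sketch, assuming a fixed 60 Hz refresh and perfectly regular frame completion (real frame times vary, which only makes an uncapped rate look worse):

```python
from fractions import Fraction
import math


def present_intervals_ms(fps: int, refresh_hz: int = 60, frames: int = 6) -> list[float]:
    """Idealized v-sync model: frame k finishes at exactly k/fps seconds and is
    shown at the first refresh at or after that moment. Returns how long each
    frame stays on screen, in milliseconds (rounded to 0.1 ms)."""
    # Refresh index at which frame k appears; Fraction keeps the division exact.
    shown_at = [math.ceil(Fraction(k * refresh_hz, fps)) for k in range(frames + 1)]
    return [round((b - a) * 1000 / refresh_hz, 1) for a, b in zip(shown_at, shown_at[1:])]


if __name__ == "__main__":
    for fps in (30, 40):
        print(f"{fps} fps on 60 Hz: {present_intervals_ms(fps)}")
```

At a steady 30 fps every interval is 33.3 ms; at a steady 40 fps frames alternate between 33.3 ms and 16.7 ms on screen, which reads as judder.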


I wonder if Nvidia's going to make improvements to DLSS in the denoising department?

Tellusim Technology Inc. found that DLSS 2.4 performs worse than FSR 2.1 in Tellusim Engine in the denoising department.


- Pronouns

- He/Him

It's already in commercialized products (notably ultracapacitors, and concrete additives that increase strength and lower volume to reduce CO2 in construction), so viability has been proven, which makes the fusion comparison rather insulting, since going from the discovery of monolayer graphene to commercialization has taken... at longest 12 years, compared to fusion's never?

Graphene is kinda becoming the fusion of the materials world, it's always 10-15 years away from being viable. I was doing research work on graphene like 13 years ago and was told how it would change the world in like 5 years and we're no closer today towards achieving viable mass production.

So production of graphene for semiconductor applications is still not as close as necessary and more work needs to be done in mass production, but considering the turnaround on monolayer graphene's commercialization in other applications, yeah, we're going to figure out the means to get there a hell of a lot sooner than it'll take to crack the fusion nut.


Anatole

Octorok

- Pronouns

- He/Him/His

One way to get a more stable, less grainy image is to aggressively filter the pixel samples, but that doesn’t mean the resulting image is a more accurate representation of the ground truth. This article doesn’t pass the smell test for me because it makes no attempt to consider or quantify this.

I wonder if Nvidia's going to make improvements to DLSS in the denoising department?

Tellusim Technology Inc. found that DLSS 2.4 performs worse than FSR 2.1 in Tellusim Engine in the denoising department.
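Anatole's point can be shown in one dimension: a heavy blur makes a noisy signal look smoother while moving it further from the ground truth. A minimal sketch with made-up data (a hard edge plus alternating ±0.1 noise), using total variation as a crude stand-in for graininess and mean squared error against the known ground truth as accuracy; both metrics are illustrative choices, not a reproduction of the Tellusim comparison:

```python
def box_filter(signal: list[float], radius: int) -> list[float]:
    """Average each sample with its neighbours within `radius` (window clamped at the edges)."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - radius): i + radius + 1]
        out.append(sum(window) / len(window))
    return out


def mse(a: list[float], b: list[float]) -> float:
    """Mean squared error between two equal-length signals."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def total_variation(signal: list[float]) -> float:
    """Sum of absolute neighbour differences: a crude proxy for how grainy a signal looks."""
    return sum(abs(b - a) for a, b in zip(signal, signal[1:]))


truth = [0.0] * 4 + [1.0] * 4      # hypothetical ground truth: a hard edge
noise = [0.1, -0.1] * 4            # hypothetical alternating sample noise
noisy = [t + n for t, n in zip(truth, noise)]
filtered = box_filter(noisy, radius=2)

if __name__ == "__main__":
    for name, sig in (("noisy", noisy), ("filtered", filtered)):
        print(f"{name:>8}: variation={total_variation(sig):.2f}  mse_vs_truth={mse(sig, truth):.4f}")
```

The filtered signal has less than half the variation of the noisy one, yet its error against the ground truth is several times larger, because the blur smears the edge.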

- Pronouns

- He/Him

Monolayer graphene was discovered in the 60s, if not earlier. A reproducible fabrication method was only found recently (2004, I think), and it involved taking graphite and scotch tape and repeatedly peeling the tape on and off the graphite.

It's already in commercialized products (notably ultracapacitors and concrete additives to increase strength and lower volume to lower CO2 in construction), so viability has been proven and makes the fusion comparison rather insulting, since from discovery of monolayer graphene to commercialization has taken... at longest 12 years compared to fusion's never?

So production of graphene for semiconductor applications is still not as close as necessary and more work needs to be done in mass production, but considering the turnaround on monolayer graphene's commercialization in other applications, yeah, we're going to figure out the means to get there a hell of a lot sooner than it'll take to crack the fusion nut.

Yes, it's usable and producible in very small quantities and can be used now in very expensive, limited applications, but we are no closer to figuring out an economical method of mass-producing graphene. I think right now the most viable production method is epitaxial CVD on a silicon carbide wafer, and when you're talking about applications that need square feet of graphene rather than square centimeters, it is an extremely inefficient production method.

Raccoon

Fox Brigade

- Pronouns

- He/Him

and fusion has some, um, limited use cases too

Monolayer graphene was discovered in the 60s, if not earlier. They managed to create a reproducible method for fabrication only recently (2004 I think) which involved taking graphite and scotch tape and repeatedly peeling the tape on and off of the graphite.

Yes, it's usable and producible in very minute quantities and can be used now in very expensive and part limited applications but we are no closer to figuring out an economic method of mass producing graphene. I think right now the most viable method of production is epitaxial CVD on a silicon carbide wafer, and when you're talking about applications that need square feet of graphene rather than square cms it is an extremely inefficient production method.

NineTailSage

Bob-omb

Thanks for posting all of this. It does give credence to there being a runway of manufacturing space for Nintendo to make Drake in, since 8nm, being a dead-end node, doesn't have much life left in it for mainstream products. So while Samsung's 8N bodes well for Orin, which will have a replacement in a few years, we could probably see the Drake Switch being manufactured for the next 5-6 years...

NineTailSage

Bob-omb

there's still a bunch of Ampere gpus that will be in production for a bit in addition to Orin products. while having no future, it'll linger on life support for a bit. hard to say if Samsung will keep enough capacity for the addition of Drake

Yes, but not for longer than 2 years into the future; by that point Nvidia will be on to its next architecture.

The question is a little different from TSMC's 20nm, because there was a clear path to shrinking designs then, but on 8nm, where would Nintendo be able to go 3-4 years down the road?

Gyemknight

Rattata

- Pronouns

- He/Him

Sorry if I'm late to the party, but what makes people think it will release one day before Tears of the Kingdom? If it were going to release in May, I think people should expect the first week of May, but no later than that.

- Pronouns

- He/Him

Who has been saying it'll release 1 day before TotK? Most people who think it'll release near the game, like myself, expect it to release the same day, not one day before. They launch all their hardware at the same time as a new game.

Sorry if I am late to the prty but what makes people think it will release 1 day before Tears of the Kingdom. If it was going to release in may I think people should expect the first week of may but no later than that.

Kevin

Chain Chomp

Is October usually this slow for game news?

I feel like this has been a better October for news than the last couple of Octobers, but generally yes: news basically dies down a lot until the December Game Awards, and then from around February onward it starts to pick up again.

I have seen no one here saying that, specifically. Some think some time before Zelda, others think after Zelda, and many think on the same day.

Sorry if I am late to the prty but what makes people think it will release 1 day before Tears of the Kingdom. If it was going to release in may I think people should expect the first week of may but no later than that.

My Tulpa

Bob-omb

In the opinion of everyone who has kept up with this, how far are we from an initial official announcement or acknowledgement?

Announcement Oct 31st, release Nov 18th.

This would only help Nov/Dec sales, not hurt.

Since this doesn’t have the burden of being a “new console successor”, trying to maximize sales out of the gate isn’t an issue. It will sell out alongside the other models, which continue to sell as if the lifespan were still at its midpoint, not its end.

Simba1

Bob-omb

It's kind of blowing up right now that Gotham Knights will be limited to 30fps on PS5 and Series S/X. Since their statement says it's more to do with having a big multiplayer open world rather than something they can work around by lowering resolution, sounds like a CPU limitation. Bringing this up as it seems like a rare example of a current game where a Drake version might not be viable.

I don't think it's a CPU limitation. The new consoles have full PC-class CPUs, which shouldn't be a bottleneck in any case.

That said, the CPU in the Drake Switch will probably be a bottleneck in some cases, because it's noticeably weaker in comparison.

Simba1

Bob-omb

Announcement Oct 31st, release Nov 18th.

This would only help Nov/Dec sales, not hurt.

Since this doesn’t have the burden of being a “new console successor”, trying to maximize sales out of the gate isn’t an issue. It will sell out along side the other models that continue to sell as if the lifespan was still at its midpoint, not end.

You still think that Drake will be released this year?

NintenDuvo

Bob-omb

- Pronouns

- He him

Why can't we have a brave soul break NDA like Hellena Taylor did when it comes to the next Switch lol (joking). I'm just really ready for something concrete to prove this thing really exists and is coming soon. I love my Switch, and even I am starting to notice the chug.

Link_enfant

Fashion Dreamer

- Pronouns

- He/Him

It's like you're describing the OLED model, which was not a successor at all and still had about three months between announcement and release.

Announcement Oct 31st, release Nov 18th.

This would only help Nov/Dec sales, not hurt.

Since this doesn’t have the burden of being a “new console successor”, trying to maximize sales out of the gate isn’t an issue. It will sell out along side the other models that continue to sell as if the lifespan was still at its midpoint, not end.

Drake is a major upgrade, whatever Nintendo decides to do in their marketing, so I don't really see a timeframe of less than 2 months happening.

- Pronouns

- He/Him

Personally, I don't see the need for all that much marketing myself; none of the arguments for it make all that much sense.

It's like you describing the OLED model which was not a successor at all and still had about three months between announcement and release.

Drake is a major upgrade, whatever Nintendo decides to do in their marketing so I don't really see less than a 2 months timeframe happening.

On the other hand, I definitely don't see it happening in November, because we'd definitely have heard substantial leaks to that effect by now.

Hosermess

Koopa

Announcement Oct 31st, release Nov 18th.

This would only help Nov/Dec sales, not hurt.

Since this doesn’t have the burden of being a “new console successor”, trying to maximize sales out of the gate isn’t an issue. It will sell out along side the other models that continue to sell as if the lifespan was still at its midpoint, not end.

I admire your boldness. I also like how you only have to wait 2 weeks to get your answer.

But a release in a month would likely mean there are skids of Drakes already boxed up and shrink-wrapped, ready to go, sitting somewhere: in a warehouse, on a dock, or already on a boat on their way to NA, etc. And not one peep about it?

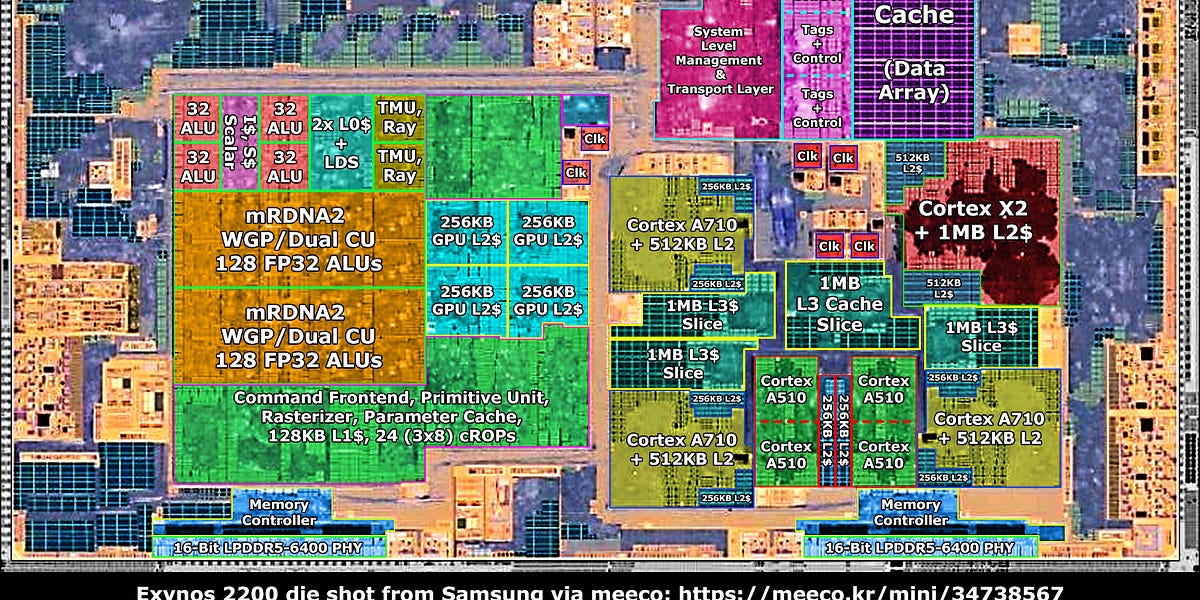

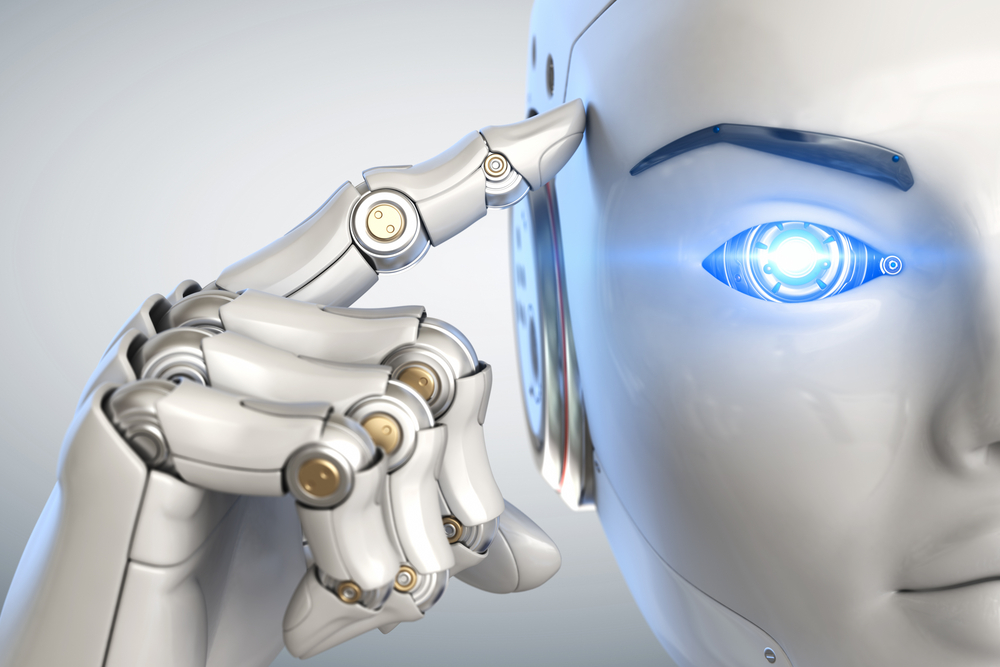

Comparing T234's driver register file from 2 years ago to T239's driver register file from 17 months ago, Nvidia seems to have removed its JPEG decoder/encoder (nvJPG) and the Deep Learning Accelerators (NVDLA) for T239. Nvidia has also confirmed that T239's OFA driver is the same as T234's OFA driver.

Thanks to @LiC for the discovery.
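The comparison described above is essentially a set difference between the hardware units each chip's driver files describe. A minimal sketch with placeholder data (only the unit names from the post are used; the driver identifiers are hypothetical, and this is not necessarily how the files were actually compared):

```python
# Hypothetical stand-in data: unit name -> driver identifier. Only the unit names
# mentioned in the post are used; the identifiers are placeholders, not real values.
T234 = {"nvjpg": "nvjpg-drv", "nvdla": "nvdla-drv", "ofa": "ofa-drv"}
T239 = {"ofa": "ofa-drv"}


def diff_units(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """Classify units as removed, added, or shared (with the same or a changed driver)."""
    shared = old.keys() & new.keys()
    return {
        "removed": sorted(old.keys() - new.keys()),
        "added": sorted(new.keys() - old.keys()),
        "same_driver": sorted(k for k in shared if old[k] == new[k]),
        "changed_driver": sorted(k for k in shared if old[k] != new[k]),
    }


if __name__ == "__main__":
    for kind, units in diff_units(T234, T239).items():
        print(f"{kind:>14}: {units}")
```

With this placeholder data the diff reports nvdla and nvjpg as removed and ofa as shared with the same driver, mirroring the findings described in the post.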



ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

I’m only going to post a theory. It’s entirely speculative, but it puts forward a plausible idea that could explain a few things and give them a cohesive rationale:

A few weeks ago, @oldpuck posted a few GitHub links, one of these links was of interest:

As you may or may not know, VDK stands for “Virtual Developer Kit”

Nvidia actually offers virtual SDKs for devs to use their cloud servers in software development:

developer.nvidia.com


To link a few, here’s an interesting remark:

It isn’t far-fetched to believe that developers can use Nvidia servers to remotely develop games for a device... and stay with me here... that doesn’t physically exist.

If anyone remembers, Nintendo made a comment at the time, and so did Zynga, denying that A) the former sent out developer kits for a new piece of hardware and B) the latter actually received a developer kit for a new piece of hardware.

Now, I know that at the time this was just a statement for that moment, and it’s fine for them to lie, because they gain nothing from telling the truth and it would only harm the company, but let’s consider the hypothetical scenario that they were actually telling the truth.

The reason they would be telling the truth is that Nintendo wouldn’t be the one distributing actual developer kits; Nvidia would be, and it would be software that developers access through a portal. Likewise, Zynga, even if there had been an investigation into them, wouldn’t have had a developer kit for unannounced hardware, because it doesn’t physically exist at the studio.

Now, what supporting evidence do I have for this, and how would developers even know they are developing for exactly what they are targeting? Nvidia has a supercomputer that can simulate a given performance target they are aiming to achieve, and it uses AI. They also have a supercomputer that can speed up chip development, which greatly reduces the time required to develop a whole new architecture or series of chips.

www.hpcwire.com


www.techspot.com


It is not impossible that developers have been developing on virtual hardware, a.k.a. not physical hardware, since 2020. This would explain why there have been zero leaks about what the hardware looks like. Granted, it was most likely going to look like a Switch, but Nintendo would simply have specified the performance target they want over the Switch, and Nvidia would be tasked with delivering that target because Nintendo was willing to pay for it.

As a result, the project started in late 2019/2020, the first report of developer is being task to have games “4K ready“ was in the second half of 2020. Despite all this time, there has been nothing about a physical form of the device and I know I’ve seen people hear comments on it. in essence, it would be more difficult for information to leak this time because it is not a traditional piece of hardware cycle.

It is probably not like the PlayStation5 or the Xbox series which had a physical piece of hardware and developers worked with those physical pieces of hardware that got changed numerous times over the course of its development. In this case, there is no need to update it per se when the simulated hardware is supposed to act the same for each developer that works with it. It’s not real hardware.

This is simply a theory, I’m missing a few bits of information but this should get the gear rolling on a speculative perspective of this.

Edit: to further expand upon this idea, based on the data breach, it is possible that there has been no actual requirements for a physical developer kit that actually has a Tegra in it or in the same sense as a PS5 or XBox Series this time. It could’ve just been something that was done on a PC that runs windows. And used a, at minimum, Turing-based graphics card for the feature set.

It doesn’t have to look like a switch, it doesn’t even have to be a little box with a cord, it doesn’t have to be a tablet, it could all just be software for a future hardware yet to release. At a later point in time they, devs, will get actual hardware, but it’s possible for a while they’ve just been using software to develop or get familiar with what they’ll work with.

A few weeks ago, @oldpuck posted a few GitHub links, and one of them was of interest:

So, adding check with name "nvidia,tegra239" as it is present in tegra239-sim-vdk.dts.
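For readers unfamiliar with that commit message: devicetree-based code, such as the Linux kernel, identifies hardware through the `compatible` property. A `.dts` source line like `compatible = "nvidia,tegra239";` ends up in the compiled blob as a list of NUL-terminated strings that code can match against. Below is a minimal Python sketch of that kind of match, assuming the commit’s check is an ordinary compatible-string lookup. The function names and the property value are hypothetical and are not taken from the commit.

```python
def compatible_list(prop: bytes) -> list[str]:
    # A compiled devicetree "compatible" property is a sequence of
    # NUL-terminated strings; split on NUL and drop the empty tail.
    return [s.decode("ascii") for s in prop.split(b"\x00") if s]


def is_compatible(prop: bytes, name: str) -> bool:
    # True if the exact name appears anywhere in the compatible list.
    return name in compatible_list(prop)


# Hypothetical property value, as a simulator .dts might compile to.
sim_vdk_compatible = b"nvidia,tegra239\x00"
print(is_compatible(sim_vdk_compatible, "nvidia,tegra239"))
```

The match is an exact string comparison, so a node marked only as `nvidia,tegra234` would not pass a `nvidia,tegra239` check.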

As you may or may not know, VDK stands for “Virtual Developer Kit”

Nvidia actually offers virtual SDKs that let devs use its servers for software development:

NVIDIA Virtual GPU software management SDK

Management SDK User Guide :: NVIDIA Virtual GPU Software Documentation

Documentation for C application programmers that explains how to use the NVIDIA virtual GPU software management SDK to integrate NVIDIA virtual GPU management with third-party applications.

docs.nvidia.com

To quote a bit of it, here’s an interesting remark:

The NVIDIA vGPU software Management SDK enables third party applications to monitor and control NVIDIA physical GPUs and virtual GPUs that are running on virtualization hosts. The NVIDIA vGPU software Management SDK supports control and monitoring of GPUs from both the hypervisor host system and from within guest VMs.

NVIDIA vGPU software enables multiple virtual machines (VMs) to have simultaneous, direct access to a single physical GPU, using the same NVIDIA graphics drivers that are deployed on non-virtualized operating systems. For an introduction to NVIDIA vGPU software, see Virtual GPU Software User Guide.

It isn’t far-fetched to believe that developers could use Nvidia servers to remotely develop games for a device that... stay with me here... doesn’t physically exist.

If anyone remembers, Nintendo and Zynga both made comments at the time, denying that A) the former had sent out developer kits for a new piece of hardware and B) the latter had received a developer kit for a new piece of hardware.

Now, I know the usual take is that it’s fine for them to lie in the moment, since telling the truth gains them nothing and only harms the company. But let’s consider the hypothetical scenario where they were actually telling the truth.

They could have been telling the truth because Nintendo wouldn’t be the one distributing actual developer kits: Nvidia would, and it would be software that developers access through a portal. Likewise, Zynga, even if it were investigated, wouldn’t have had a developer kit for unannounced hardware, because no physical kit would exist at the studio.

So what supporting evidence do I have for this, and how would developers even know they are developing for exactly what they are targeting? Nvidia has a supercomputer that can simulate a given performance target, and it uses AI. It also has a supercomputer that can speed up the process of developing a chip, which greatly reduces the time required to develop a whole new architecture or series of chips.

Nvidia R&D Chief on How AI is Improving Chip Design

Getting a glimpse into Nvidia’s R&D has become a regular feature of the spring GTC conference with Bill Dally, chief scientist and senior vice president of research, providing an overview of Nvidia’s R&D organization and a few details on current priorities. This year, Dally focused mostly on AI...

Nvidia wants to use GPUs and AI to accelerate and improve future chip design

During a talk at this year's GPU Technology Conference, Nvidia's chief scientist and senior vice president of research, Bill Dally, talked a great deal about using GPUs...

www.techspot.com


It’s not impossible that developers have been developing on virtual hardware, a.k.a. non-physical hardware, since 2020. That would explain why there have been zero leaks about what the hardware looks like. Granted, it was most likely going to look like a Switch, but Nintendo would simply have specified the performance target it wanted over the Switch, and Nvidia would be tasked with delivering that target because Nintendo was willing to pay for it.

The project started in late 2019/2020, and the first report of developers being asked to make games “4K ready” came in the second half of 2020. Despite all this time, there has been nothing about the physical form of the device, and I know I’ve seen people comment on that. In essence, it would be harder for information to leak this time because this isn’t a traditional hardware cycle.

It’s probably not like the PlayStation 5 or the Xbox Series, where developers worked with physical hardware that was revised numerous times over the course of development. In this case there’s no need to update anything per se, since the simulated hardware is supposed to behave the same for every developer who works with it. It’s not real hardware.

This is simply a theory, and I’m missing a few bits of information, but it should get the gears turning from a speculative perspective.

Edit: To expand on this idea, based on the data breach, it’s possible that this time there has been no actual requirement for a physical developer kit with a Tegra in it, at least not in the same sense as for the PS5 or Xbox Series. It could all have been done on a PC running Windows, using at minimum a Turing-based graphics card for the feature set.

It doesn’t have to look like a Switch; it doesn’t even have to be a little box with a cord or a tablet. It could all just be software for future hardware yet to be released. At some later point devs will get actual hardware, but it’s possible that for a while they’ve just been using software to develop with, or to get familiar with what they’ll be working with.


LiC

Member

I think the references to VDK for both Orin and Drake likely concern the simulator environment in general, which is used within Nvidia for hardware and driver development, rather than being intended for customers. If Nintendo ever used the simulator, it would have been for OS development, since it's not useful for game developers. Game development can take place before the silicon exists, but it doesn't need a VDK, since NVN and the NintendoSDK can run on Windows, providing developers close enough behavior to the final hardware that they can start porting engines or games.

- Pronouns

- He/Him

Yeah, I was gonna say, isn't that what SDKs have always been for? You'll still need a physical devkit for the final, final optimization pass, which I imagine would be true with virtual devkits too, at least for testing, but probably 90-95% of the work can be done on development PCs.

ShaunSwitch

Moblin

Not really related, but interesting from a cost perspective: Razer recently announced the Edge, a $400 gaming tablet with detachable controllers, rocking an octa-core CPU and a 1024-core GPU on TSMC 4nm. It comes with 128 GB of storage and 8 GB of RAM.

m.gsmarena.com


There's some stuff on this device that Nintendo wouldn't use, and they'd need to include a dock, but cost-wise it's a good comparison. Did I mention the SoC is on TSMC 4nm for $400?

I daresay the volumes Nintendo would be looking at would get them a discount compared to Razer, too. Costs aren't as high as some may have guessed, it seems.

Razer Edge handheld Android console announced

It's equipped with Qualcomm's Snapdragon G3x Gen 1 chipset and comes in a 5G version for streaming PC and console games on the go.


Nobody knows anything about the GPU, given how vague Qualcomm's specs for the Snapdragon G3x Gen 1 are.

That said, there's a possibility that Qualcomm is using Samsung's 4LPX process node to fabricate the Snapdragon G3x Gen 1, considering Qualcomm did mention a peak CPU frequency of 3 GHz. The only Snapdragon SoC with a peak CPU frequency of 3 GHz is the Snapdragon 8 Gen 1, which happens to be fabricated on Samsung's 4LPX process node.

VESA Releases DisplayPort 2.1 Specification

/PRNewswire/ -- The Video Electronics Standards Association (VESA®) announced today that it has released DisplayPort 2.1, the latest version of the DisplayPort...


Please read this staff post before posting.

Furthermore, according to this follow-up post, all off-topic chat will be moderated.