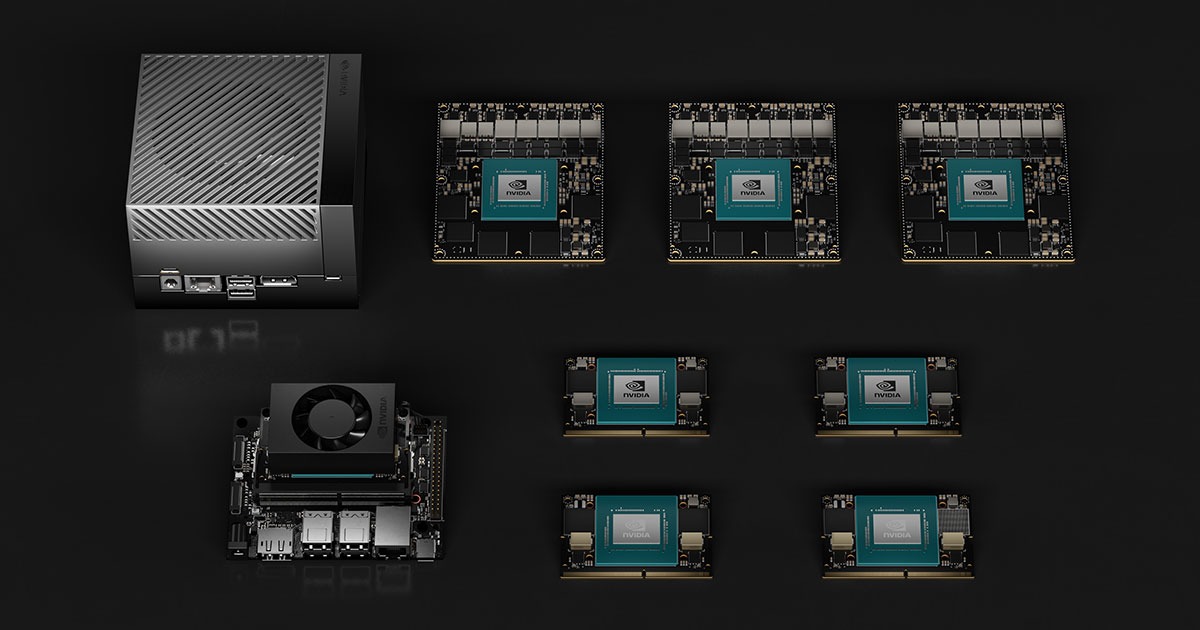

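| | Jetson Xavier NX 16GB | Jetson Xavier NX | Jetson AGX Xavier 64GB | Jetson AGX Xavier | Jetson AGX Xavier Industrial | Jetson Orin NX 8GB | Jetson Orin NX 16GB | Jetson AGX Orin 32GB | Jetson AGX Orin 64GB |
|---|---|---|---|---|---|---|---|---|---|
| Series | Jetson Xavier NX Series | Jetson Xavier NX Series | Jetson AGX Xavier Series | Jetson AGX Xavier Series | Jetson AGX Xavier Series | Jetson Orin NX Series | Jetson Orin NX Series | Jetson AGX Orin Series | Jetson AGX Orin Series |
| AI Performance | 21 TOPS | 21 TOPS | 32 TOPS | 32 TOPS | 30 TOPS | 70 TOPS | 100 TOPS | 200 TOPS | 275 TOPS |
| GPU | 384-core NVIDIA Volta™ GPU with 48 Tensor Cores | 384-core NVIDIA Volta™ GPU with 48 Tensor Cores | 512-core NVIDIA Volta GPU with 64 Tensor Cores | 512-core NVIDIA Volta GPU with 64 Tensor Cores | 512-core NVIDIA Volta GPU with 64 Tensor Cores | 1024-core NVIDIA Ampere GPU with 32 Tensor Cores | 1024-core NVIDIA Ampere GPU with 32 Tensor Cores | 1792-core NVIDIA Ampere GPU with 56 Tensor Cores | 2048-core NVIDIA Ampere GPU with 64 Tensor Cores |
| CPU | 6-core NVIDIA Carmel Arm® v8.2 64-bit CPU<br>6MB L2 + 4MB L3 | 6-core NVIDIA Carmel Arm® v8.2 64-bit CPU<br>6MB L2 + 4MB L3 | 8-core NVIDIA Carmel Arm® v8.2 64-bit CPU<br>8MB L2 + 4MB L3 | 8-core NVIDIA Carmel Arm® v8.2 64-bit CPU<br>8MB L2 + 4MB L3 | 8-core NVIDIA Carmel Arm® v8.2 64-bit CPU<br>8MB L2 + 4MB L3 | 6-core Arm® Cortex®-A78AE v8.2 64-bit CPU<br>1.5MB L2 + 4MB L3 | 8-core Arm® Cortex®-A78AE v8.2 64-bit CPU<br>2MB L2 + 4MB L3 | 8-core Arm® Cortex®-A78AE v8.2 64-bit CPU<br>2MB L2 + 4MB L3 | 12-core Arm® Cortex®-A78AE v8.2 64-bit CPU<br>3MB L2 + 6MB L3 |
| DL Accelerator | 2x NVDLA | 2x NVDLA | 2x NVDLA | 2x NVDLA | 2x NVDLA | 1x NVDLA v2 | 2x NVDLA v2 | 2x NVDLA v2 | 2x NVDLA v2 |
| Vision Accelerator | 2x PVA | 2x PVA | 2x PVA | 2x PVA | 2x PVA | 1x PVA v2 | 1x PVA v2 | 1x PVA v2 | 1x PVA v2 |
| Safety Cluster Engine | - | - | - | - | 2x Arm Cortex-R5 in lockstep | - | - | - | - |
| Memory | 16GB 128-bit LPDDR4x<br>59.7 GB/s | 8GB 128-bit LPDDR4x<br>59.7 GB/s | 64GB 256-bit LPDDR4x<br>136.5 GB/s | 32GB 256-bit LPDDR4x<br>136.5 GB/s | 32GB 256-bit LPDDR4x (ECC support)<br>136.5 GB/s | 8GB 128-bit LPDDR5<br>102.4 GB/s | 16GB 128-bit LPDDR5<br>102.4 GB/s | 32GB 256-bit LPDDR5<br>204.8 GB/s | 64GB 256-bit LPDDR5<br>204.8 GB/s |
| Storage | 16GB eMMC 5.1 | 16GB eMMC 5.1 | 32GB eMMC 5.1 | 32GB eMMC 5.1 | 64GB eMMC 5.1 | - (supports external NVMe) | - (supports external NVMe) | 64GB eMMC 5.1 | 64GB eMMC 5.1 |
| Camera | Up to 6 cameras (24 via virtual channels)<br>14 lanes MIPI CSI-2<br>D-PHY 1.2 (up to 30 Gbps) | Up to 6 cameras (24 via virtual channels)<br>14 lanes MIPI CSI-2<br>D-PHY 1.2 (up to 30 Gbps) | Up to 6 cameras (36 via virtual channels)<br>16 lanes MIPI CSI-2, 8 lanes SLVS-EC<br>D-PHY 1.2 (up to 40 Gbps)<br>C-PHY 1.1 (up to 62 Gbps) | Up to 6 cameras (36 via virtual channels)<br>16 lanes MIPI CSI-2, 8 lanes SLVS-EC<br>D-PHY 1.2 (up to 40 Gbps)<br>C-PHY 1.1 (up to 62 Gbps) | Up to 6 cameras (36 via virtual channels)<br>16 lanes MIPI CSI-2<br>D-PHY 1.2 (up to 40 Gbps)<br>C-PHY 1.1 (up to 62 Gbps) | Up to 4 cameras (8 via virtual channels\*)<br>8 lanes MIPI CSI-2<br>D-PHY 1.2 (up to 20 Gbps) | Up to 4 cameras (8 via virtual channels\*)<br>8 lanes MIPI CSI-2<br>D-PHY 1.2 (up to 20 Gbps) | Up to 6 cameras (16 via virtual channels\*)<br>16 lanes MIPI CSI-2<br>D-PHY 2.1 (up to 40 Gbps)<br>C-PHY 2.0 (up to 164 Gbps) | Up to 6 cameras (16 via virtual channels\*)<br>16 lanes MIPI CSI-2<br>D-PHY 2.1 (up to 40 Gbps)<br>C-PHY 2.0 (up to 164 Gbps) |
| Video Encode (H.265) | 2x 4K60<br>10x 1080p60<br>22x 1080p30 | 2x 4K60<br>10x 1080p60<br>22x 1080p30 | 4x 4K60<br>16x 1080p60<br>32x 1080p30 | 4x 4K60<br>16x 1080p60<br>32x 1080p30 | 2x 4K60<br>12x 1080p60<br>24x 1080p30 | 1x 4K60<br>3x 4K30<br>6x 1080p60<br>12x 1080p30 | 1x 4K60<br>3x 4K30<br>6x 1080p60<br>12x 1080p30 | 1x 4K60<br>3x 4K30<br>6x 1080p60<br>12x 1080p30 | 2x 4K60<br>4x 4K30<br>8x 1080p60<br>16x 1080p30 |
| Video Decode (H.265) | 2x 8K30<br>6x 4K60<br>22x 1080p60<br>44x 1080p30 | 2x 8K30<br>6x 4K60<br>22x 1080p60<br>44x 1080p30 | 2x 8K30<br>6x 4K60<br>26x 1080p60<br>52x 1080p30 | 2x 8K30<br>6x 4K60<br>26x 1080p60<br>52x 1080p30 | 2x 8K30<br>4x 4K60<br>18x 1080p60<br>36x 1080p30 | 1x 8K30<br>2x 4K60<br>4x 4K30<br>9x 1080p60<br>18x 1080p30 | 1x 8K30<br>2x 4K60<br>4x 4K30<br>9x 1080p60<br>18x 1080p30 | 1x 8K30<br>2x 4K60<br>4x 4K30<br>9x 1080p60<br>18x 1080p30 | 1x 8K30<br>3x 4K60<br>7x 4K30<br>11x 1080p60<br>22x 1080p30 |
| PCIe | 1 x1 (PCIe Gen3) + 1 x4 (PCIe Gen4) | 1 x1 (PCIe Gen3) + 1 x4 (PCIe Gen4) | 1 x8 + 1 x4 + 1 x2 + 2 x1<br>(PCIe Gen4, Root Port & Endpoint) | 1 x8 + 1 x4 + 1 x2 + 2 x1<br>(PCIe Gen4, Root Port & Endpoint) | 1 x8 + 1 x4 + 1 x2 + 2 x1<br>(PCIe Gen4, Root Port & Endpoint) | 1 x4 + 3 x1<br>(PCIe Gen4, Root Port & Endpoint) | 1 x4 + 3 x1<br>(PCIe Gen4, Root Port & Endpoint) | Up to 2 x8 + 2 x4 + 2 x1<br>(PCIe Gen4, Root Port & Endpoint) | Up to 2 x8 + 2 x4 + 2 x1<br>(PCIe Gen4, Root Port & Endpoint) |
| Networking | 10/100/1000 BASE-T Ethernet | 10/100/1000 BASE-T Ethernet | 10/100/1000 BASE-T Ethernet | 10/100/1000 BASE-T Ethernet | 10/100/1000 BASE-T Ethernet | 1x GbE | 1x GbE | 1x GbE<br>4x 10GbE | 1x GbE<br>4x 10GbE |
| Display | 2 multi-mode DP 1.4/eDP 1.4/HDMI 2.0<br>No DSI support | 2 multi-mode DP 1.4/eDP 1.4/HDMI 2.0<br>No DSI support | 3 multi-mode DP 1.4/eDP 1.4/HDMI 2.0<br>No DSI support | 3 multi-mode DP 1.4/eDP 1.4/HDMI 2.0<br>No DSI support | 3 multi-mode DP 1.4/eDP 1.4/HDMI 2.0<br>No DSI support | 1x 8K60 multi-mode DP 1.4a (+MST)/eDP 1.4a/HDMI 2.1 | 1x 8K60 multi-mode DP 1.4a (+MST)/eDP 1.4a/HDMI 2.1 | 1x 8K60 multi-mode DP 1.4a (+MST)/eDP 1.4a/HDMI 2.1 | 1x 8K60 multi-mode DP 1.4a (+MST)/eDP 1.4a/HDMI 2.1 |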
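| Power | 10W / 15W / 20W | 10W / 15W / 20W | 10W / 15W / 30W | 10W / 15W / 30W | 20W / 40W | 10W / 15W / 20W | 10W / 15W / 25W | 15W / 30W / 40W | 15W / 30W / 50W<br>Up to 60W max |
| Mechanical | 69.6mm x 45mm<br>260-pin SO-DIMM connector | 69.6mm x 45mm<br>260-pin SO-DIMM connector | 100mm x 87mm<br>699-pin connector<br>Integrated Thermal Transfer Plate | 100mm x 87mm<br>699-pin connector<br>Integrated Thermal Transfer Plate | 100mm x 87mm<br>699-pin connector<br>Integrated Thermal Transfer Plate | 69.6mm x 45mm<br>260-pin SO-DIMM connector | 69.6mm x 45mm<br>260-pin SO-DIMM connector | 100mm x 87mm<br>699-pin Molex Mirror Mezz Connector<br>Integrated Thermal Transfer Plate | 100mm x 87mm<br>699-pin Molex Mirror Mezz Connector<br>Integrated Thermal Transfer Plate |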
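
The bandwidth figures in the Memory row are consistent with the usual peak-bandwidth formula: bus width in bytes multiplied by the transfer rate. The sketch below is a minimal illustration and rests on one assumption. It uses data rates of 3733 MT/s (Xavier NX LPDDR4x), 4266 MT/s (AGX Xavier LPDDR4x) and 6400 MT/s (Orin LPDDR5). These rates are not listed in the table; they are back-solved so that they reproduce its GB/s values. The function name `peak_bandwidth_gbps` is hypothetical.

```python
# Peak theoretical DRAM bandwidth = (bus width in bytes) x (transfers per second).
# ASSUMPTION: the MT/s data rates below are not stated in the comparison table;
# they are the rates that reproduce the table's GB/s figures.

def peak_bandwidth_gbps(bus_width_bits: int, data_rate_mtps: int) -> float:
    """Return peak bandwidth in GB/s (10^9 bytes/s) for a DRAM interface."""
    bytes_per_transfer = bus_width_bits // 8
    # bytes/transfer * million transfers/s = MB/s; divide by 1000 for GB/s
    return bytes_per_transfer * data_rate_mtps / 1000


# (module group, bus width in bits, assumed data rate in MT/s)
MEMORY_CONFIGS = [
    ("Jetson Xavier NX (LPDDR4x)", 128, 3733),
    ("Jetson AGX Xavier (LPDDR4x)", 256, 4266),
    ("Jetson Orin NX (LPDDR5)", 128, 6400),
    ("Jetson AGX Orin (LPDDR5)", 256, 6400),
]

if __name__ == "__main__":
    for name, width, rate in MEMORY_CONFIGS:
        print(f"{name}: {peak_bandwidth_gbps(width, rate):.1f} GB/s")
```

The calculation also shows why each 256-bit module lists roughly twice the bandwidth of its 128-bit sibling at the same memory speed. These are theoretical peaks; sustained bandwidth in real workloads is lower.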