NG lines up with the Activision documents where they call it Switch NG.

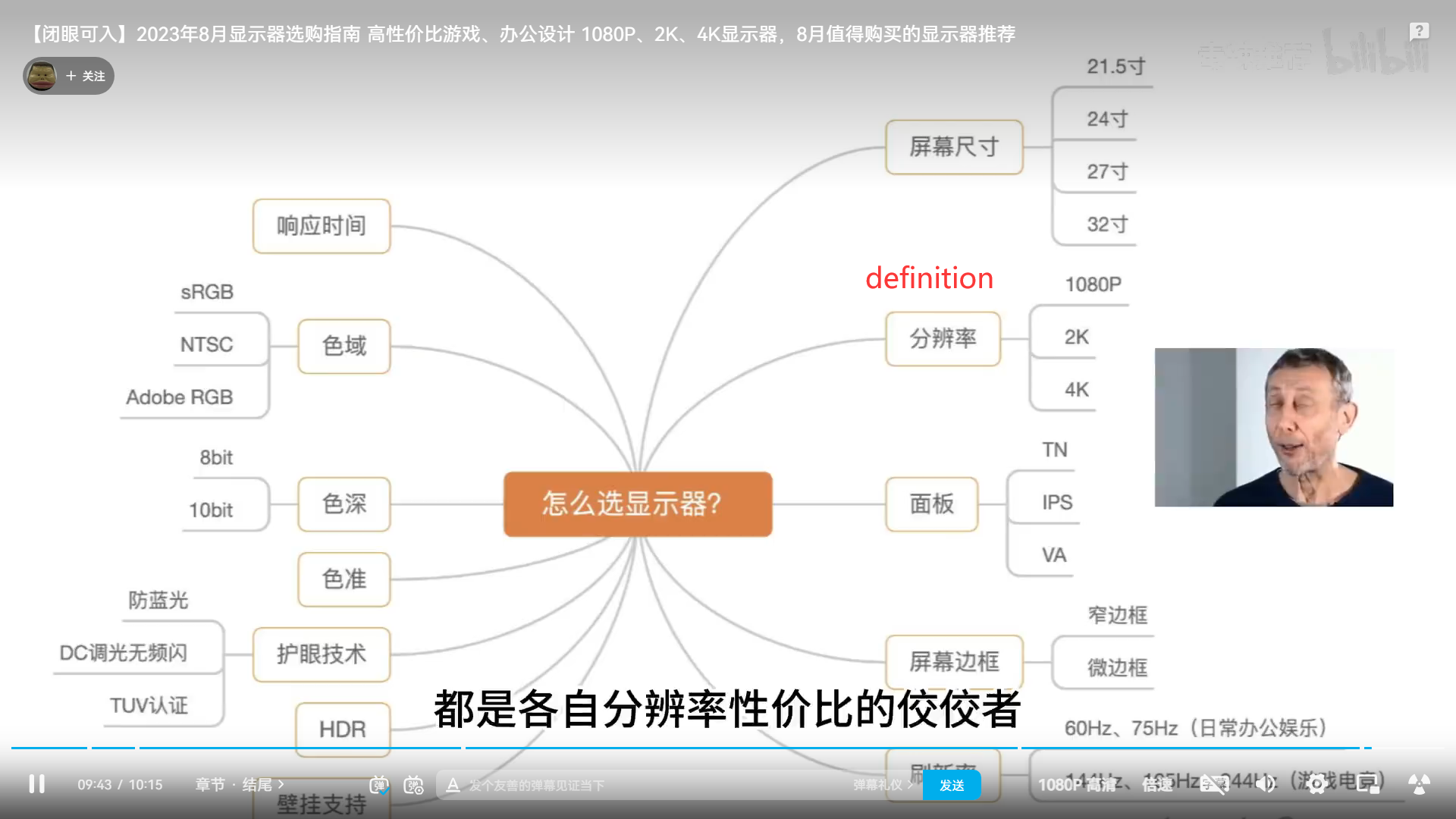

That said, the 2K output is a bit confusing. Isn't that just 1080p with a wider aspect ratio?

In the consumer space, 2K refers to 1080p.

In the professional space, 2K is actually 2048 x 1080 pixels (the DCI cinema format), and you only find it on specific displays and projectors used for video editing, movies, etc.

Then there’s 4096 x 2160, a roughly 1.90:1 format (1.896:1 if you want the decimals), and that is true 4K.

But what we have is called “4K UHD”, which is 3840 x 2160. On consumer TVs, 4K UHD is synonymous with 2160p.
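For anyone who wants to check the ratios, here is a quick sketch in Python. The `aspect` helper and the labels in the table are just names made up for this example; the pixel dimensions are the ones quoted above.

```python
from fractions import Fraction

def aspect(width, height):
    """Return (numerator, denominator, decimal) for a width:height ratio."""
    ratio = Fraction(width, height)  # Fraction reduces to lowest terms
    return ratio.numerator, ratio.denominator, width / height

# Labels are informal, only for this example.
formats = {
    "cinema 2K": (2048, 1080),
    "consumer 2K (1080p)": (1920, 1080),
    "true 4K": (4096, 2160),
    "4K UHD (2160p)": (3840, 2160),
}

for name, (w, h) in formats.items():
    num, den, dec = aspect(w, h)
    print(f"{name}: {w} x {h} -> {num}:{den} (~{dec:.3f}:1)")
```

Both cinema formats reduce to 256:135 and both consumer formats reduce to 16:9, so the cinema versions are the same height but slightly wider.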

So when they say 2K, that’s 1080p: not the 2048 x 1080 cinema format, but the 2K we know as 1920 x 1080.

Another quick rundown:

qHD = quarter HD, like 540p, a quarter of the pixels of 1080p (the Vita)

HD = 720p, but there’s also 768p, which is what a lot of displays used, I don’t know why. (Nintendo Switch)

HD+ = HD Plus or 900p, sits between HD and FHD, denoted with a + (Xbox One)

FHD = Full HD or 1080p/2K, what people treated as the gold standard for years after it came out on the market (PS4)

QHD = Quad HD or 2.5K or 1440p. This is less of a consumer TV thing and more of a PC monitor thing. It’s technically not an official thing, but it has 4 times the pixels of 720p, hence “Quad” as in “4x the HD” (PC gamers)

UHD = 3840 x 2160 or 4K or 2160p, the next “peak standard” that is best suited to movies and TV shows, but games that can run at this res look beautiful. (PS5 and Series X)
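To put numbers on the rundown, here is a rough Python sketch that compares pixel counts. The dimensions are the usual 16:9 sizes for each label (the Vita’s actual panel is 960 x 544, so 960 x 540 is a simplification), and `RESOLUTIONS`, `pixels` and `times_larger` are names invented for this example.

```python
# Common 16:9 dimensions for each label (assumed, not tied to any one device).
RESOLUTIONS = {
    "qHD": (960, 540),
    "HD": (1280, 720),
    "HD+": (1600, 900),
    "FHD": (1920, 1080),
    "QHD": (2560, 1440),
    "UHD": (3840, 2160),
}

def pixels(name):
    """Total pixel count for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height

def times_larger(big, small):
    """How many times more pixels `big` has than `small`."""
    return pixels(big) / pixels(small)

print(times_larger("QHD", "HD"))   # Quad HD vs 720p
print(times_larger("FHD", "qHD"))  # Full HD vs quarter HD
print(times_larger("UHD", "FHD"))  # 4K UHD vs 1080p
```

Each of those three comparisons comes out to exactly 4x, which is where the “quad” and “quarter” names come from.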

Then there’s 5K, 6K, 8K and 10K, no not the user. Anyway, I don’t see a point in games beyond 1440p atm. 4K is partially marketing, partially not.

Partially.