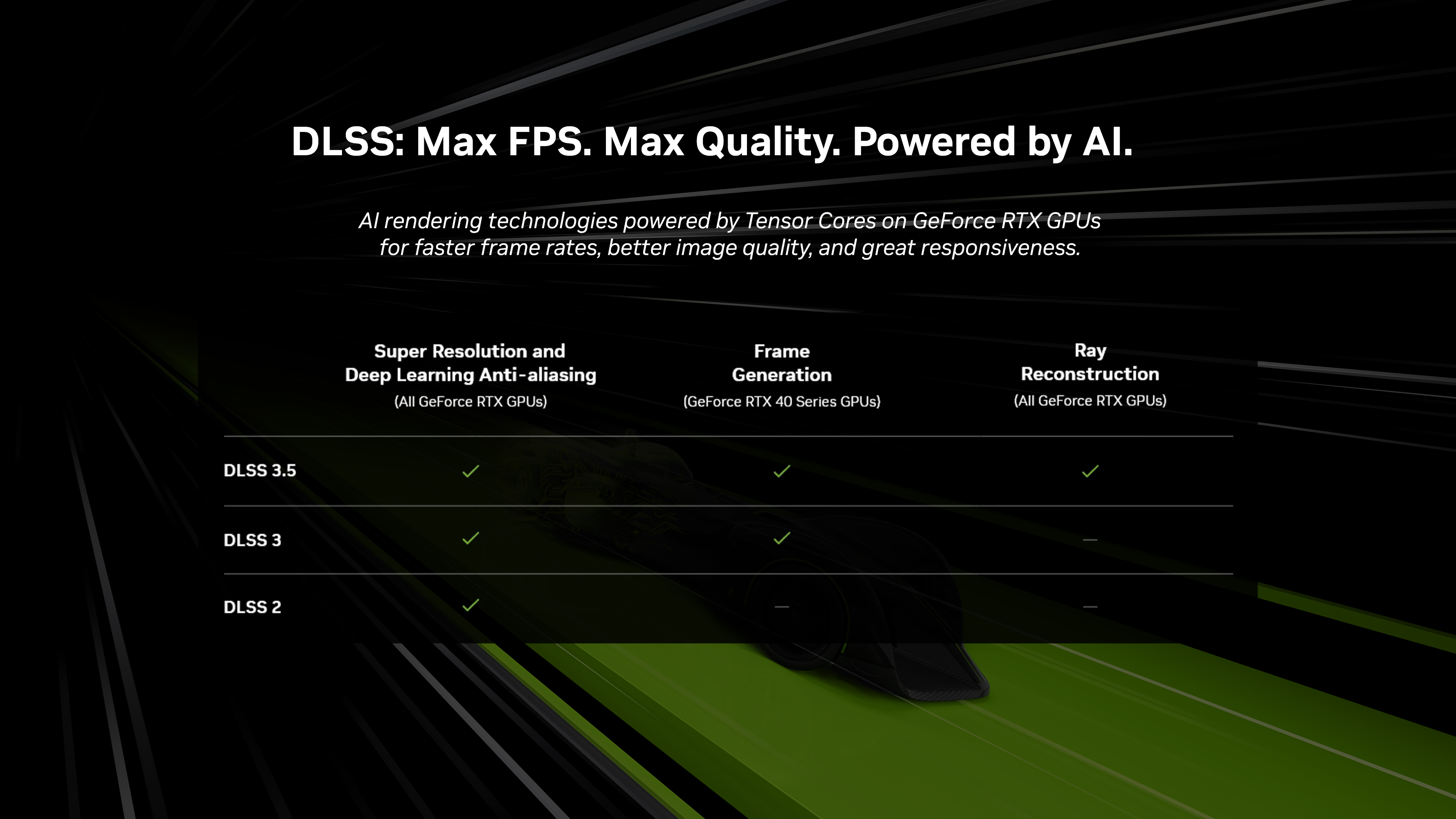

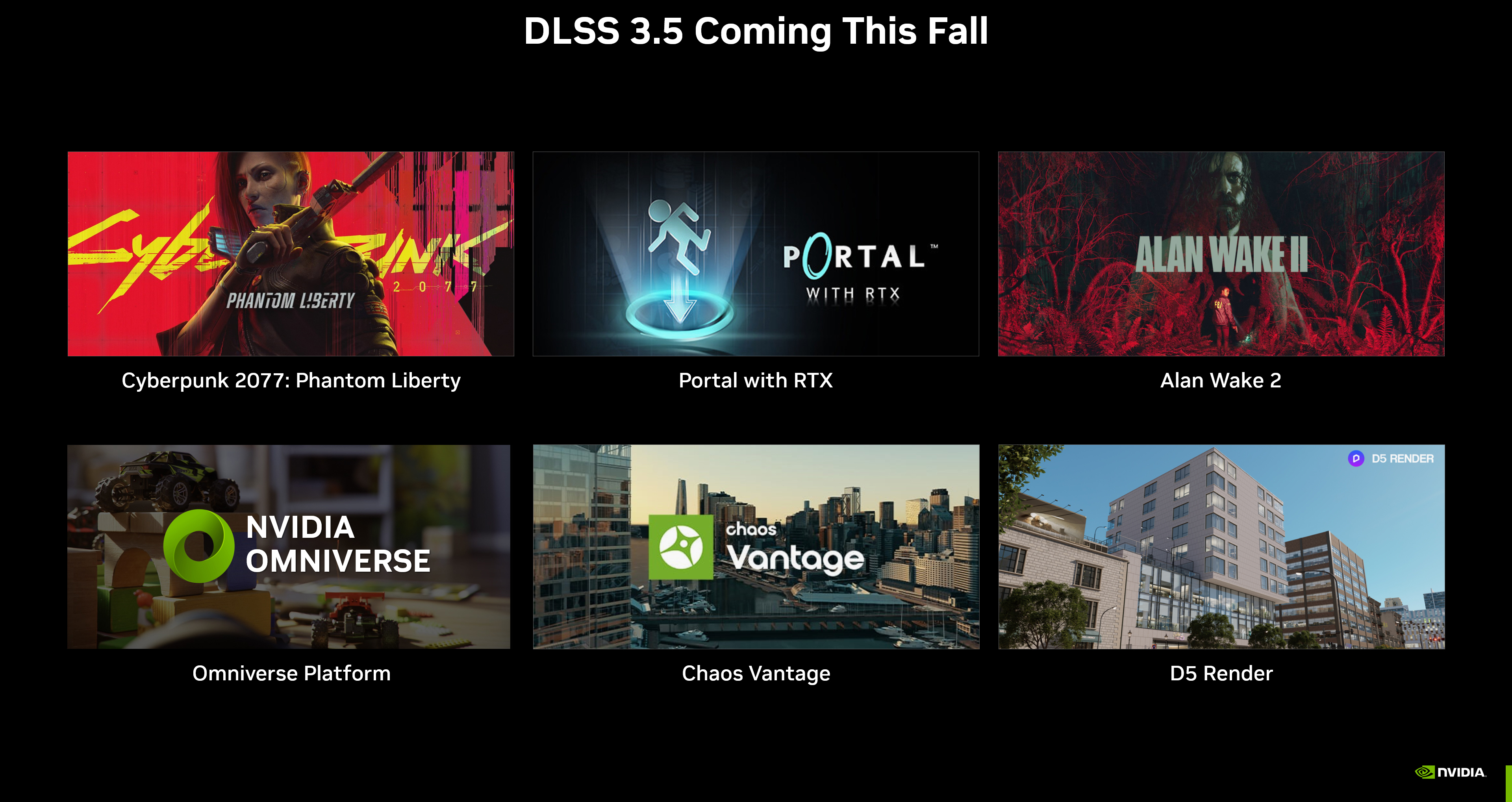

Re: DLSS 3.5.

Fucking finally. To be a bit of a diva and quote myself from earlier this year (emphasis added):

When you cast rays backwards from the camera, it seems like jittering the camera should give you the extra data DLSS needs. But at multiple steps of the process this goes wrong.

The biggest one is just that RT already introduces a kind of noise that looks identical to the behavior of a jittering camera, so the RT denoiser actually prevents the data from getting to DLSS. The other is that ray tracing introduces unpredictability about which pixels are effectively being sampled.

The inevitable endgame here is to get DLSS to replace the denoiser, but mixing raster and RT effects makes this tricky.

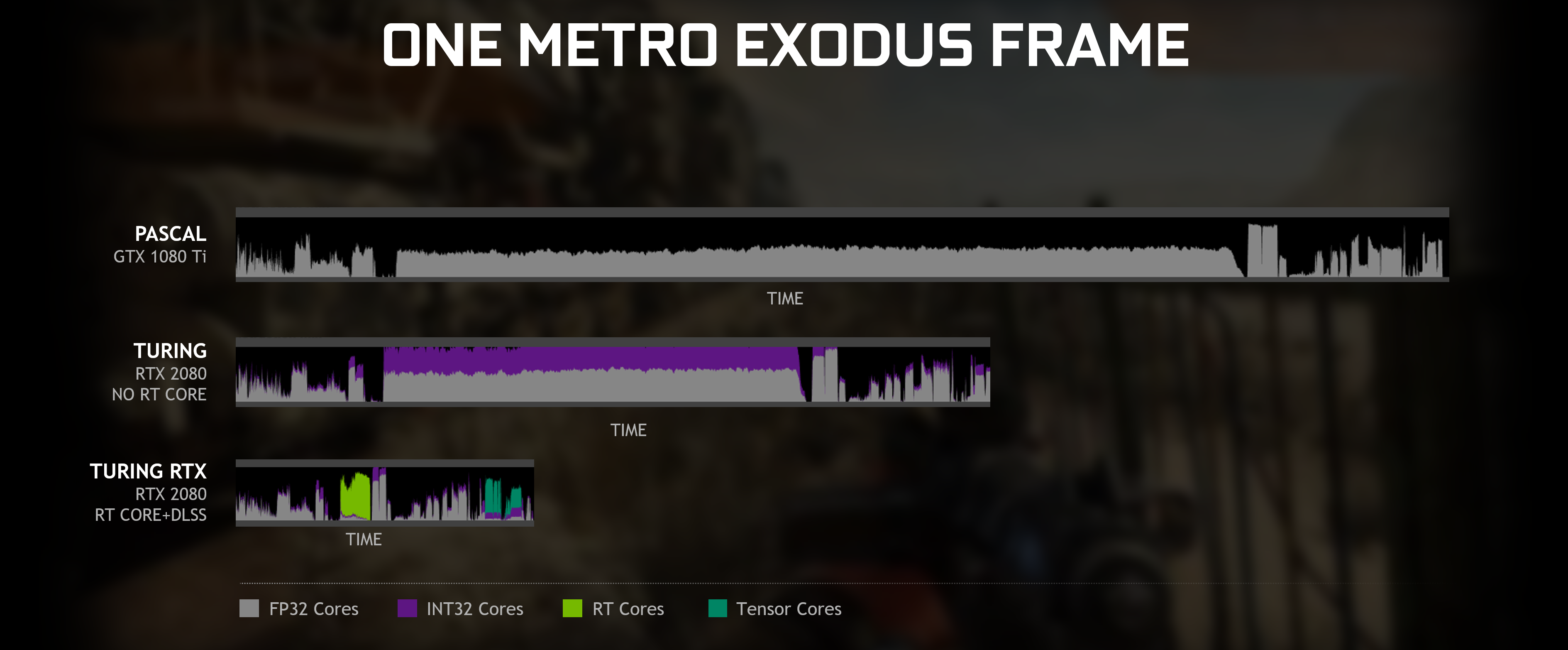

Here is a simplified way of looking at the problem and what DLSS 3.5 does.

Imagine you're in an empty room with a single light source - a 40W light bulb. That light bulb shoots out rays of light (you know, photons), and those bounce against you and the walls over and over again, changing frequency - i.e. color - at each bounce. Then those rays hit your eyes, boom, you see an image.

Ray tracing replicates that basic idea when rendering. The problem is that real life has a shitload of rays - that 40W light bulb is pumping out ~148,000,000,000,000,000,000 (148 quintillion) photons a second. RT uses lots of clever optimizations, but the basic one is to just not use that many rays.
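If you want to sanity check that order of magnitude yourself, here is a rough sketch. It assumes (unrealistically) that every watt leaves the bulb as light of a single wavelength, and the wavelengths in the loop are just values I picked for illustration, not anything the figure above was derived from. Each photon carries energy h*c/wavelength, so the count per second is power divided by that.

```python
# Back-of-the-envelope photon count for a light bulb.
# Assumption: all electrical power is emitted as light of one wavelength.
PLANCK_H = 6.62607015e-34   # Planck constant, J*s
LIGHT_C = 299_792_458.0     # speed of light, m/s

def photons_per_second(power_watts, wavelength_m):
    """Photons emitted per second for the given power and wavelength."""
    energy_per_photon = PLANCK_H * LIGHT_C / wavelength_m  # joules
    return power_watts / energy_per_photon

# Illustrative wavelengths from blue to near infrared (hypothetical choices).
for nm in (450, 550, 650, 735):
    n = photons_per_second(40.0, nm * 1e-9)
    print(f"{nm} nm: {n:.2e} photons/s")
```

Whichever wavelength you pick, you land somewhere around 10^20 photons a second, which is a few orders of magnitude beyond anything a GPU is going to trace per frame.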

In fact, RT uses so few rays that the raw image generated looks worse than a 2001 flip phone camera taking a picture at night. It doesn't even have connected lines, just semi-random dots. The job of the denoiser is to go and connect those dots into a coherent image. You can think of a denoiser kinda like anti-aliasing on steroids - anti-aliasing takes jagged lines, figures out what the artistic intent of those lines was supposed to be, and smooths them out.
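To make the "semi-random dots" thing concrete, here is a toy sketch in one dimension. It is not how any real denoiser (or DLSS) works: the "scene" is a made-up row of pixels, each ray is a coin flip that either reaches the light or doesn't, and the "denoiser" is a plain box filter that averages neighbors. All the function names are hypothetical.

```python
import random

def render_row(true_brightness, rays_per_pixel, rng):
    """Estimate each pixel by averaging rays that either hit the light (1) or miss (0)."""
    row = []
    for p in true_brightness:
        hits = sum(1 for _ in range(rays_per_pixel) if rng.random() < p)
        row.append(hits / rays_per_pixel)
    return row

def box_denoise(row, radius):
    """Crude stand-in for a denoiser: average each pixel with its neighbors."""
    out = []
    for i in range(len(row)):
        lo = max(0, i - radius)
        hi = min(len(row), i + radius + 1)
        window = row[lo:hi]
        out.append(sum(window) / len(window))
    return out

def mean_abs_error(a, b):
    """Average per-pixel distance between two rows."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

rng = random.Random(0)                      # fixed seed so runs are repeatable
truth = [0.3] * 32 + [0.8] * 32             # a dim wall next to a bright wall
noisy = render_row(truth, 1, rng)           # one ray per pixel: pure dots
smooth = box_denoise(noisy, 4)

print("raw error:     ", round(mean_abs_error(noisy, truth), 3))
print("denoised error:", round(mean_abs_error(smooth, truth), 3))
```

With one ray per pixel every value is either fully black or fully white, and the filter pulls that back toward the real brightness. The catch is visible at the boundary between the two walls: the filter smears the hard edge, which is the same "deleting real detail" tradeoff that comes up with anti-aliasing.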

The problem with anti-aliasing is that it can be blurry - you're deleting "real" pixels, and replacing them with higher res guesses. It's cleaner, but deletes real detail to get a smoother output. That's why DLSS 2 is also an anti-aliaser - DLSS 2 needs that raw, "real" pixel data to feed its AI model, with the goal of producing an image that keeps all the detail and smooths the output.

And that's why DLSS 2 has not always interacted well with RT effects. The RT denoiser runs before DLSS 2 does, deleting useful information that DLSS would normally use to show you a higher resolution image. DLSS 3.5 now replaces the denoiser with its own upscaler, just as it replaced anti-aliasing tech before it. This has four big advantages.

The first and obvious one is that DLSS's upscaler now has higher quality information about the RT effects underneath. This should allow upscaled images using RT to look better.

The second is that, hopefully, DLSS 3.5 produces higher quality RT images even before the upscaling.

The third is that DLSS 3.5 is generic for all RT effects. Traditionally, you needed to write a separate denoiser for each RT effect. One for shadows, one for ambient occlusion, one for reflections, and so on. By using a single, adaptive tech for all of these, performance should improve in scenes that use multiple effects. This is especially good for memory bandwidth, where multiple denoisers effectively required extra buffers and passes.

And finally, this reduces development costs for engine developers. Before this, each RT effect required the engine developer to write a hand-tuned denoiser for their implementation. Short term, as long as software solutions and cross-platform engines exist, that will still be required. But in the case of Nintendo specifically, first-party developers won't need to write their own RT implementations, and can instead leverage a massive investment from Nvidia.

There is a downside - DLSS 3.5 isn't a "free" upgrade from DLSS 2. It requires the developer to remove their existing denoisers from the rendering path, and provide new inputs to the DLSS model. In the case of NVN2, this would require an API update, not just a library version update. I haven't seen an integration guide for 3.5 yet, so I have no idea how extensive the new API is, but there will be some delay before this is in the hands of Nintendo devs.