Ok. Yea. Sure.

And my flippant response is to say my wife and my son won’t really see that much of a difference, I assure you.

Good. We agree then. The new hardware will essentially be used to take the Lite/OLED designed games and use DLSS and the SoC to make them run at 4K/60fps with increased graphics sliders.

Nothing more, nothing less.

lol

You just went from “everyone knows that since 2006 new hardware is all about fidelity increase and not about major design change…” directly, and without any hint of irony, to “the ps5 and new Switch hardware will be utilized to design games impossible for previous hardware!!”

Which is it?

Let's just look at the PS5 and Switch 2 from the specs we have. It's simple to figure out if this is a next gen product or a "pro" model.

PS4 uses GCN from 2011 as its GPU architecture, and it has 1.84TFLOPs available to it. We will use GCN as the baseline, so the performance of GCN is a factor of 1.

Switch uses Maxwell v3 from 2015 and has 0.393TFLOPs available to it. The performance of Maxwell v3 is a factor of 1.4, plus mixed precision for games that use it. This means that when docked, Switch is capable of 550GFLOPs to 825GFLOPs GCN equivalent, still a little less than half the GPU performance of PS4. This doesn't factor in the far lower bandwidth, RAM amount, or CPU performance, all of which sit around 30-33% of the PS4, with the GPU somewhere around 45% when completely optimized.

PS5 uses RDNA 1.X, customized in part by Sony and introduced with the PS5 in 2020, and it has up to 10.2TFLOPs available to it. The performance of RDNA 1.X is a factor of 1.2, plus mixed precision (though this is limited to AI use cases; developers just don't use mixed precision atm for console or PC gaming, while it's used heavily in mobile and in Switch development). This means ultimately that the PS5's GPU is about 6.65 times as powerful as the PS4's, and around 3 times the PS4 Pro's.
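For anyone who wants to check the math so far, here is a rough sketch of the conversion. The architecture factors (1, 1.4, 1.2) are the estimates from this post, not measured values. The 1.5x mixed-precision uplift is my assumption, inferred from the 550 to 825 GFLOPs range rather than stated anywhere. The function name `gcn_equivalent` is made up for illustration.

```python
# GCN-equivalent throughput = raw FP32 TFLOPs x per-architecture factor.
# The factors are this post's estimates; the 1.5x mixed-precision uplift
# is an assumption inferred from the 550 -> 825 GFLOPs range.

def gcn_equivalent(raw_tflops, arch_factor, mixed_precision=1.0):
    """Return throughput in 'PS4 GCN' TFLOPs under the post's assumptions."""
    return raw_tflops * arch_factor * mixed_precision

ps4 = gcn_equivalent(1.84, 1.0)                              # GCN baseline
switch_low = gcn_equivalent(0.393, 1.4)                      # Maxwell, FP32 only
switch_high = gcn_equivalent(0.393, 1.4, mixed_precision=1.5)
ps5 = gcn_equivalent(10.2, 1.2)                              # RDNA 1.X

print(f"Switch docked: {switch_low * 1000:.0f} to {switch_high * 1000:.0f} GFLOPs GCN")
print(f"Switch best case vs PS4: {switch_high / ps4:.0%}")
print(f"PS5: {ps5:.2f} TFLOPs GCN, {ps5 / ps4:.2f}x PS4")
```

Swap in different factors if you disagree with the estimates; the ratios move linearly with them.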

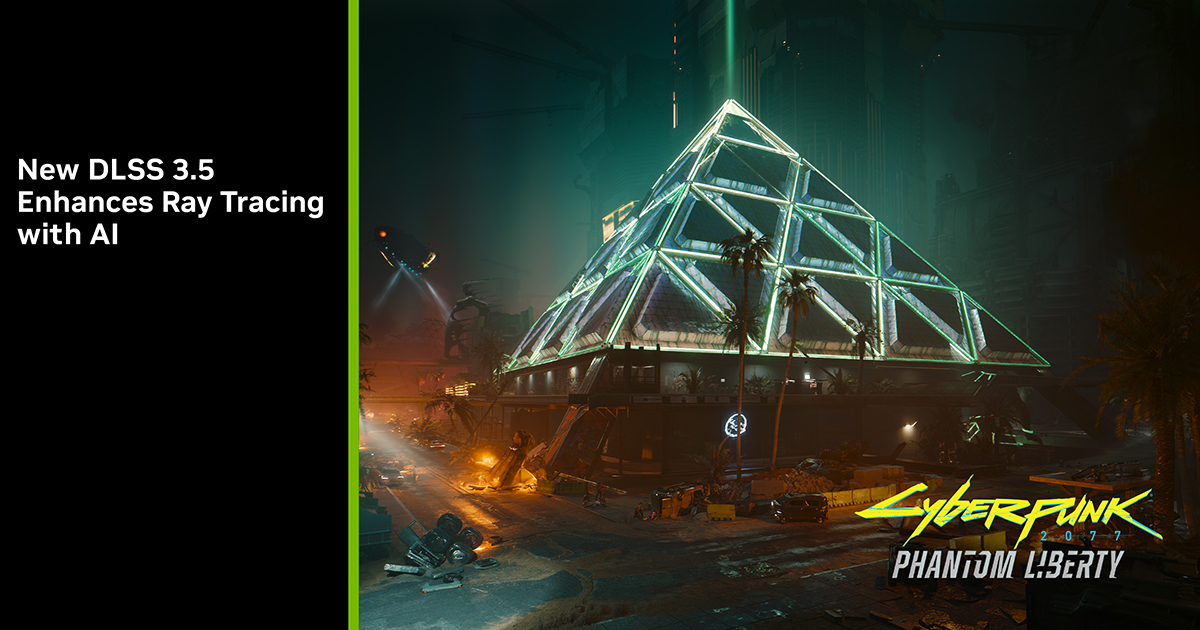

Switch 2 uses Ampere, specifically GA10F, which is a custom GPU architecture that will be introduced with the Switch 2 in 2024 (hopefully). It has 3.456TFLOPs available to it. The performance of Ampere is a factor of 1.2, plus mixed precision* (this uses the tensor cores and is independent of the shader cores). Mixed precision offers 5.2TFLOPs to 6TFLOPs. According to our estimates, it also reserves 1/4th of the tensor cores for DLSS. Much like FSR2 on the PS5, this allows the device to render the scene at 1/4th the resolution of the output with minimal loss to image quality, greatly boosting available GPU performance and allowing the device to output a 4K image.
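To put the "1/4th the resolution" part in concrete terms, here is a small sketch. It assumes "1/4th" means a quarter of the output pixel count, so each axis is halved. The helper name `internal_resolution` is made up for illustration and is not part of any DLSS or FSR API.

```python
# Rendering at 1/4 of the output pixel count means scaling each axis by
# sqrt(1/4) = 1/2. This only does the pixel arithmetic; it says nothing
# about how the upscaler itself works.

def internal_resolution(out_w, out_h, pixel_fraction=0.25):
    scale = pixel_fraction ** 0.5  # per-axis scale factor
    return round(out_w * scale), round(out_h * scale)

w, h = internal_resolution(3840, 2160)  # 4K UHD output
print(f"internal render: {w}x{h}")
print(f"pixels shaded per frame: {w * h / (3840 * 2160):.0%} of 4K")
```

So a 4K output would be upscaled from a 1080p internal render, which is where the GPU savings come from.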

When comparing these numbers in PS4 GCN terms, Switch 2 has 4.15TFLOPs to 7.2TFLOPs and PS5 has 12.24TFLOPs GCN equivalent, meaning that Switch 2 will do somewhere between 34% and 40% of PS5. It should also manage RT performance, and while PS5 will use some of that 10.2TFLOPs to do FSR2, Switch 2 can freely use the remaining 1/4th of its tensor cores to manage DLSS. Ultimately there are other bottlenecks: the CPU is only going to be about 2/3rds as fast as the PS5's, and bandwidth, with respect to their architectures, will only be about half as much, though it could offer 10GB+ for games, which is pretty standard atm for current gen games.
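Same kind of sketch for the Switch 2 vs PS5 comparison, again using this post's estimated factor of 1.2 for both Ampere and RDNA 1.X. It only reproduces the GCN-equivalent figures and the lower 34% bound. The 40% upper bound is not reproduced because the post does not say how it was derived. Variable names are made up for illustration.

```python
ARCH_FACTOR = 1.2  # the post's estimate for both Ampere and RDNA 1.X

switch2_shader = 3.456 * ARCH_FACTOR      # shader cores only
switch2_mixed_high = 6.0 * ARCH_FACTOR    # upper mixed-precision figure
ps5 = 10.2 * ARCH_FACTOR

print(f"Switch 2: {switch2_shader:.2f} to {switch2_mixed_high:.2f} TFLOPs GCN")
print(f"PS5: {ps5:.2f} TFLOPs GCN")
print(f"Switch 2 shader-only share of PS5: {switch2_shader / ps5:.0%}")
```

Since both chips get the same 1.2 factor here, it cancels out of the ratio, so the shader-only share is just 3.456 / 10.2.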

Switch 2 is going to manage current gen games much better than Switch did with last gen games. The jump is bigger, the technology is a lot newer, and the addition of DLSS has leveled the playing field a lot, not to mention Nvidia's edge in RT playing a factor. I'd suggest that Switch 2 when docked, if using mixed precision, will be noticeably better than the Series S, but noticeably behind the other current gen consoles.