
StarTopic Future Nintendo Hardware & Technology Speculation & Discussion |ST| (Read the staff posts before commenting!)

- Thread starter Dakhil


Based on the software licenses (Switch includes a dedicated page for these per game, and I like to check them out of curiosity), lots of Switch games include a set of open-source media libraries from Android, to the point where I suspect they're part of the SDK (I also find curl a lot, but that's unrelated). I believe those media libraries support automatically using hardware decoders.

Interesting! I didn't even know that the hardware ENC/DEC is one of the things used for pre-rendered cutscenes, that a game would need to be coded for this, I assume (i.e., it is not automatic), or that its use is one of the reasons why Switch games are pretty small. I also didn't know that the Switch used the other codecs; I thought they were absent from consoles.

Having this present in Dane (it will, if it has to run Switch 1 games) would really help with the game size limit in my opinion, among other things. Maybe storage isn't as bleak as it seems. Maybe. It is more complicated than this, of course.

Wonder why Nintendo opted to not expose NVENC, probably due to limited hardware resources.

Thank you both for clarifying this! And the features that they have. It helped a lot.

Encoding video is largely useless to most games, though I do suspect Smash uses NVENC for encoding replay videos. Also wouldn't be surprised if the system video capture feature uses it, which probably keeps it tied up most of the time (Smash notably doesn't support normal video capture).

BlackTangMaster


I know this is unlikely, but I think UHS-II card support for Nintendo Switch games on the DLSS model* would be nice.

This is good news. Apple will subsidise the UHS-II card slot reader and boost the UHS-II market.

My Tulpa


Of course it will take less time and effort if fewer cutbacks have to be made. It's the optimization that's really the hard part with these "impossible ports", and more powerful hardware simply requires less of that.

Eh, I disagree. CDPR would have had to spend the same amount of money, hire the same-sized dev team, and take about the same amount of time had they waited to port the Witcher 3 engine specifically for the Switch Dane/DLSS model as they did for the Switch TX1 model.

It's just a different level of finding shortcuts and optimizing for the unique architecture, not that it takes longer.

By your argument, it took less time, less manpower, and less money for CDPR to get the PS4 version ported and optimized than the Switch version. 'Cause, after all, the hardware is a bit beefier and faster! I'm sure that's not true either.

Saber had to be a bit more inventive and problem-solve a bit harder to optimize the Witcher 3 engine for Switch… but it didn't take more development time than normal. It didn't take more manpower than it would have otherwise. It didn't cost CDPR more money.

YolkFolk


Do you think Capcom porting Resident Evil 5 to Switch required the same amount of work and incurred the same costs as The Witcher 3 being brought to Switch?

That’s kinda what is being discussed here, so looking forward to your hot take.

Look over there


The fact that you can record gameplay footage implies either the CPU is being used or it's NVENC doing it. If performance isn't tanking while recording, then I'm inclined to think that it's NVENC.

Google says that footage is compressed using H.264. So, what changes going from Maxwell's NVENC to Ampere's? First off, NVENC didn't get updated for Ampere; it's using Turing's. What I get from wiki is basically that Maxwell->Pascal improved performance for H.264/H.265 and expanded H.265 profile type stuff, while Pascal/Volta->Turing improved compression efficiency for H.264/H.265 and yet again expanded H.265 profile type stuff.

My expectation for Dane, then, is that you'll still record in H.264, it'll weigh even less on the CPU when doing so, and you'll get somewhat better quality for the same file size or a somewhat smaller file for the same quality. Mind you, it'd still be nowhere near archiving-worthy quality, but that's the trade-off for the speed of hardware-based encoding.
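To put rough numbers on the "better quality for the same file size, or a smaller file for the same quality" trade-off: file size is just bitrate times duration. The Python sketch below uses made-up figures (an 8 Mbps capture bitrate, a 30-second clip, and a 15% efficiency gain for the newer encoder); none of them are measured Switch or NVENC numbers.

```python
# Hypothetical numbers for illustration only: neither the capture bitrate nor
# the efficiency gain below is a measured Switch or NVENC figure.

def clip_size_mb(bitrate_mbps: float, seconds: float) -> float:
    """Size in megabytes of a constant-bitrate clip (8 bits per byte)."""
    return bitrate_mbps * seconds / 8

def same_quality_bitrate(old_bitrate_mbps: float, efficiency_gain: float) -> float:
    """Bitrate needed for equal quality if the newer encoder needs
    `efficiency_gain` (e.g. 0.15 = 15%) fewer bits for the same quality."""
    return old_bitrate_mbps * (1 - efficiency_gain)

OLD_BITRATE_MBPS = 8.0  # hypothetical H.264 capture bitrate
CLIP_SECONDS = 30       # assumed clip length
GAIN = 0.15             # hypothetical newer-vs-older NVENC efficiency gain

# Option 1: keep the bitrate, so the file size is unchanged and quality improves.
old_size = clip_size_mb(OLD_BITRATE_MBPS, CLIP_SECONDS)
# Option 2: keep the quality, so the bitrate (and file size) drops.
new_size = clip_size_mb(same_quality_bitrate(OLD_BITRATE_MBPS, GAIN), CLIP_SECONDS)

print(f"Same bitrate (better quality): {old_size:.1f} MB")
print(f"Same quality (smaller file):   {new_size:.1f} MB")
```

With these placeholder numbers the clip is 30.0 MB at the old bitrate, or 25.5 MB at equal quality; the real gain depends on the encoder generation and settings.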


A quick Google search presents me with this:

www.electronicdesign.com

What's the Difference Between SD and UHS-II Memory Cards?

UHS-II brings higher speeds to the venerable SD and MicroSD form factors.

The normal speed SD cards run at 12.5 MB/s while high-speed cards double that to 25 MB/s. UHS-I runs at 50 MB/s and 104 MB/s. UHS-II can run at 312 MB/s in half-duplex mode or 156 MB/s in full-duplex mode.

Given that the game cards run at about 25 MB/s, Nintendo matched that for wide compatibility with non-UHS cards. Using a higher speed like 104 MB/s would improve loading by a good bit (maybe not so much with significantly larger games).
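As a best-case illustration of what those bus speeds mean for loading, the Python sketch below divides a hypothetical 2,000 MB load by each peak speed quoted in the excerpt. Real cards rarely sustain their bus ceiling, and decompression and CPU work often dominate load times, so these are lower bounds, not predictions.

```python
# Peak bus speeds (MB/s) as quoted in the electronicdesign.com excerpt.
# Real cards rarely sustain these ceilings, and decompression/CPU work
# usually adds time on top, so results are best-case lower bounds.
BUS_SPEEDS_MBPS = {
    "Default speed": 12.5,
    "High speed": 25.0,
    "UHS-I (50 MB/s)": 50.0,
    "UHS-I (104 MB/s)": 104.0,
    "UHS-II full duplex": 156.0,
    "UHS-II half duplex": 312.0,
}

def transfer_seconds(size_mb: float, speed_mbps: float) -> float:
    """Best-case time in seconds to read size_mb megabytes at speed_mbps MB/s."""
    return size_mb / speed_mbps

LOAD_SIZE_MB = 2000  # hypothetical amount of data read during one load

for name, speed in BUS_SPEEDS_MBPS.items():
    print(f"{name:20} {transfer_seconds(LOAD_SIZE_MB, speed):6.1f} s")
```

For this made-up load, the best case drops from 160 s at default speed to 80 s at high speed, about 19.2 s at 104 MB/s, and about 6.4 s at UHS-II's half-duplex ceiling.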

It would have been nice if Apple had used an SD card slot that supports UHS-III cards, but at least Apple seems to be the first laptop manufacturer to use an SD card slot that supports UHS-II cards.

And it's a shame the current iteration of SD Express 7.0 cards can reach temperatures as high as 96°C.

RennanNT


I agree with most of your other arguments, but no, downgrading below the minimum requirements the game was planned for isn't trivial.


The best example is, coincidentally, from CDPR as well. Cyberpunk 2077 was removed from PSN for over 6 months, and to this day there's a "We don't recommend to play this on base PS4" warning on its store page.

The game is fully ported and works fine on the platform (PS4 Pro); the problem is purely downgrading it to base PS4 specs. They couldn't do it after so many months, and that cost them their reputation and A LOT of sales for their single flagship game in 5 years.

The number of publishers bringing over their PS360 AAA ports but not their PS4/X1 AAA ports, even from the same series, is also pretty telling.

My Tulpa


I see what you are trying to say, but your example has wayyy too many variables to consider, which makes it difficult to compare with one team assigned to port one game to one platform, as you are attempting.

Cyberpunk 2077 was targeting not just the base PS4 profile, but also the PS4 Pro and PS5 profiles, and the Xbox One profile, as well as the Xbox One X and Series X profiles. Not to mention launching on PC as well, with all its different optimization profiles.

This is more a case of CDPR not being able to focus in all these directions, and possibly not giving as much attention to the base PS4/One because of limited time/resources.

It's not that the PS4 required more time/effort; it's more that it was given less.

With The Witcher 3, you can see how optimization was much, much better for the base PS4/One… because they didn't have to (or want to) focus on any other console profiles.

I would argue that when CDPR redesigned their REDengine 3 in 2017 for Cyberpunk and future games, they weren't concerned with making it scalable. They were already seeing the PS4 Pro/Xbox One X as the low end for 2020-and-beyond games.

The base PS4/One ended up suffering because the focus of optimization was elsewhere.

I'm talking about the costs of a publisher hiring one team to port one game to one platform.

If CDPR had focused just on the Xbox One X for Cyberpunk 2077, it would have taken about the same time/money/dev team size as if they had focused just on the Xbox One. It doesn't matter what the final result is.

But this is getting too in the weeds lol. The original point was that the faster GPU and CPU in the Dane/DLSS Switch aren't suddenly going to make porting so much cheaper that it becomes a no-brainer for publishers to finally spend the resources and take the risks.

It's still going to be a huge time/resource/money commitment from publishers to port to the new model. Publishers are still going to be wary about the costs for little reward.


It is telling.

It tells me that publishers feel that older AAA titles have a better chance of selling on the Switch than brand new, day and date AAA releases.

The appeal of portability trumps the appeal of graphics/performance fidelity over time.

A port of the 9-year-old Red Dead Redemption would have sold far better on the Switch in 2019 than the just-released Red Dead Redemption 2.

The majority of the AAA third-party gaming market owns a PC/Xbox/PlayStation specifically to play these games at the best graphics/performance fidelity they can afford at release. Most of that market would not choose to play such games on a 6-inch 720p screen; it would be their last choice.

Now, a 9-year-old game where graphics/performance isn't that important anymore, and suddenly the portability factor takes priority? Switch suddenly becomes a more appealing option.

Trust me, whether Nintendo machines get a game or not mostly comes down to money. It's a publisher choice, not a dev one. And it's not that the investment is too much money, but that the return promises too little money.

Again, this really isn't how things work. The less powerful a piece of hardware is, the more optimization work a game will require to be brought over to it. This effort begins to increase drastically as you dip below the minimum specs the game was designed around. Conversely, it almost entirely disappears once you get sufficiently above the original target specs, with much of the work at that point being optional enhancements. There is a fixed cost associated with porting a game to a console OS it's never been on before, but for the vast majority of games that skip Switch, this will be massively dwarfed by the optimization cost of bringing over a game that was originally built for much more powerful hardware.


Also, treating all the different Xboxes and all the different PlayStations like they're entirely distinct platforms is definitely overselling things a bit, especially on the Xbox side.

Alovon11


Nintendo will NOT build the next Switch for third parties

Yeah, but they will build what they sort of have to build.

And the bottom-line system is a fair bit stronger than the Xbox One, and the more average one based on production cost (based on Orin S) would be stronger than the OG PS4 when docked.

Shrug.

NVIDIA's licenses don't lie: they have to go with A78s or A78Cs, which, even in a 6-core config (the minimum for the A78C, which is the gaming-oriented version), would be over 3 times faster than the Xbox One/PS4 CPUs.

And the GPU, like I said before, should at the absolute minimum be stronger than the OG Xbox One before DLSS. After DLSS, it would be well on its way to catching up to the PS4 Pro, at the minimum there as well.

Like, the system is a next-gen system that is literally the only system they can make here because of the licenses NVIDIA has.

And the most likely config in actuality is stronger than that.

The most likely config is an SoC with 8 A78C cores and a 6-8 SM GPU.

The CPU is as I stated, but even closer to the next-gen systems than before.

And the GPU would match or exceed the OG PS4 when docked before DLSS, and with DLSS would match or surpass the PS4 Pro and come close to or match the Series S.
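For the SM counts being discussed, a common back-of-the-envelope figure is paper FP32 throughput: SMs × FP32 lanes per SM × 2 (a fused multiply-add counts as two operations) × clock. The Python sketch below assumes 128 FP32 lanes per SM (true of desktop Ampere and of the TX1's Maxwell SMs) and uses a placeholder 1.0 GHz docked clock, since no Dane clock is known. Peak TFLOPS also don't compare 1:1 across architectures (Ampere vs. the GCN GPUs in PS4/Xbox One), so this shows scale, not real-world performance.

```python
# Paper FP32 throughput for the SM counts discussed in the thread.
# Assumptions: 128 FP32 lanes per SM (desktop Ampere, TX1's Maxwell),
# 2 FLOPs per lane per clock (FMA), and a placeholder docked clock.
# Peak TFLOPS are not directly comparable across GPU architectures.

FP32_LANES_PER_SM = 128
FLOPS_PER_LANE_PER_CLOCK = 2  # one fused multiply-add counts as two operations

def peak_tflops(sms: int, clock_ghz: float) -> float:
    """Theoretical peak FP32 TFLOPS for a GPU with `sms` SMs at `clock_ghz`."""
    return sms * FP32_LANES_PER_SM * FLOPS_PER_LANE_PER_CLOCK * clock_ghz / 1000

# Commonly cited peak FP32 figures, for scale only.
REFERENCE_TFLOPS = {"Xbox One": 1.31, "PS4": 1.84, "PS4 Pro": 4.2, "Series S": 4.0}

switch_docked = peak_tflops(2, 0.768)  # TX1: 2 Maxwell SMs at 768 MHz docked
print(f"Switch (docked): {switch_docked:.2f} TFLOPS")

HYPOTHETICAL_DOCKED_CLOCK_GHZ = 1.0  # placeholder, not a known Dane clock
for sms in (2, 4, 6, 8):
    t = peak_tflops(sms, HYPOTHETICAL_DOCKED_CLOCK_GHZ)
    print(f"{sms} SMs @ {HYPOTHETICAL_DOCKED_CLOCK_GHZ} GHz: {t:.2f} TFLOPS")

for name, t in REFERENCE_TFLOPS.items():
    print(f"{name}: {t} TFLOPS")
```

At the placeholder clock, 4, 6, and 8 SMs come out to about 1.02, 1.54, and 2.05 TFLOPS, against roughly 0.39 TFLOPS for the docked Switch; every result scales linearly with whatever clock is actually chosen.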

Deleted member 645

I know it's cool to speculate on these forums. We don't know anything for sure. We will just have to wait. I know you don't mean anything by it, but those seem like big goals.

Alovon11

Well... it's just the numbers and the most likely outcomes.

Like, to not meet the minimum there (6-8 CPU cores, 4 SMs), they'd have to go with a 4-core A78 config with only 2 SMs, which would barely be stronger than the current Switch GPU-wise (although about as strong as or stronger than the Steam Deck CPU-wise).

Deleted member 645

I hate to do this, and I know it's not popular, but it's Nintendo and we just have to wait and see. I want to be excited for the next Switch and its capabilities. Nintendo in the past has just ruined that for me every time.

Alovon11

But you can't really "It's Nintendo" this; this is NVIDIA we are talking about.

Also, funnily enough, considering it would likely be based on Orin S, cutting the SMs that low would be more expensive than 6-8 SMs, which is just Orin S with some wiggle room.

Manufacturing/SM tolerances become tighter the lower the SM count.

4+ GPU SMs is the most likely range here, and that puts it beyond the OG Xbox One when docked, before DLSS.

At least.


SiG

"It's Nintendo" doesn't hold much water considering the Switch exists (i.e., they went with an Nvidia solution, of all things) and the OLED model uses RGB when they could just as easily have gone with PenTile.

Everyone just assumes it means they'll choose the cheapest or worst possible outcome, when it's more like... a bunch of random factors where we really don't know what the final outcome will be.

Angel Whispers

"Because Nintendo" is not, was not, and never will be a coherent reason. Fellow Nintendo fans need to do better than this.

SiG


I mean, you might as well just show the UNO Wild Card.

But even then, some people would be like "Shit, I got the worst card...", which shows their overall stance on the brand.

Alovon11


Yeah, and considering the former major point (NVIDIA), that sort of lets us extrapolate a lot about the constraints they have to work within for the SoC.


The big thing is, the SoC has to be based on Orin, as that is NVIDIA's only active ARM SoC in development.

Orin being the base means:

- Samsung 8nm or better for the process node

- ARM A78 series CPU cores (as that is the only license NVIDIA has for CPU cores on processes that small)

- Ampere/Lovelace GPU architecture (the latter is from Kopite7 saying Lovelace is in Orin in some form, which isn't a first for NVIDIA, as they put elements of Pascal in the TX1 before Pascal came out)

- This means Tensor Cores, and therefore DLSS

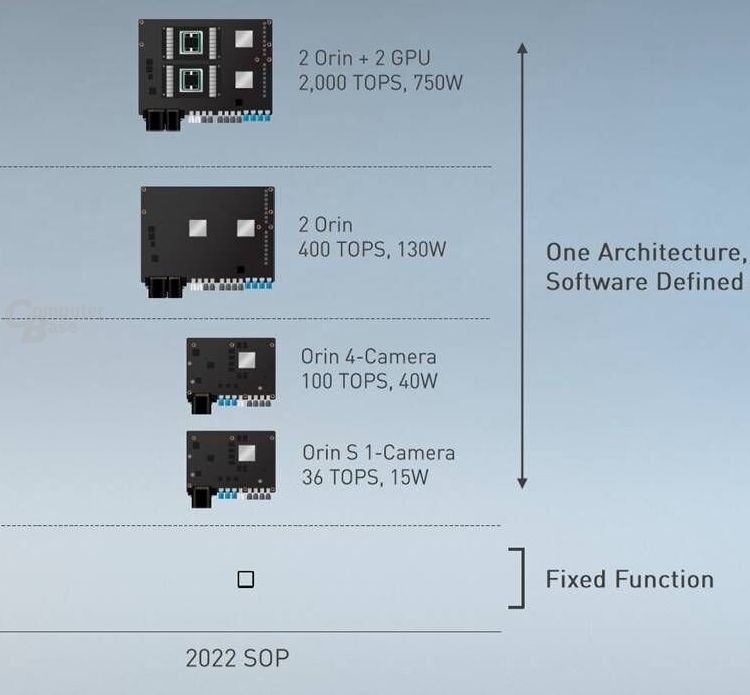

But we can narrow it down further based on the product stack NVIDIA has mentioned for Orin.

Orin S, at 36 TOPS, means its GPU is roughly half of "Big Orin", which we know has 16 SMs at the minimum (RTX 3050). So half of that would be 8 SMs, then 6 SMs as a cut-down version if they want to make it more complicated or if thermals are an issue.

I already mentioned why it has to be A78s (the A78C has a minimum of 6 CPU cores).

So honestly, the "likeliest" minimum is 6 A78Cs and 6 SMs.

And even then, IMHO, 8 A78Cs and 6 SMs is more likely, considering Nintendo's reputation for "corrections" on models like the New 3DS.

Devs complained about the 3DS's CPU power, and they tripled it with the New 3DS.

Guess what one of the major complaints devs have about the Switch is? That's right, the CPU.

And guess what would be around triple the processing power of the current Switch's CPU... 8 A78Cs.
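Whether 8 A78Cs land around "triple" the Switch CPU depends heavily on per-clock gains, which the thread doesn't pin down. The Python sketch below works the question backwards: given the Switch's 4 Cortex-A57 cores at 1.02 GHz and 8 new cores at a placeholder 1.0 GHz, what per-clock speedup would each new core need for 3× aggregate throughput? It assumes perfect multi-core scaling and ignores the core the Switch OS reserves, both big simplifications.

```python
# Rough "how many times faster" arithmetic for a multi-core CPU upgrade.
# Simplifying assumptions: throughput scales perfectly with core count and
# clock, and per-clock performance is a single ratio. The new CPU's clock
# below is a placeholder, not a known figure.

def relative_throughput(cores_new: int, clock_new_ghz: float, per_clock_ratio: float,
                        cores_old: int, clock_old_ghz: float) -> float:
    """Aggregate throughput of the new CPU relative to the old one."""
    return (cores_new * clock_new_ghz * per_clock_ratio) / (cores_old * clock_old_ghz)

def per_clock_ratio_needed(target: float, cores_new: int, clock_new_ghz: float,
                           cores_old: int, clock_old_ghz: float) -> float:
    """Per-clock speedup each new core needs to reach `target` x the old CPU."""
    return target * cores_old * clock_old_ghz / (cores_new * clock_new_ghz)

SWITCH_CORES, SWITCH_CLOCK_GHZ = 4, 1.02  # 4x Cortex-A57 at 1.02 GHz
NEW_CORES, NEW_CLOCK_GHZ = 8, 1.0         # 8x A78C at a hypothetical 1.0 GHz

needed = per_clock_ratio_needed(3.0, NEW_CORES, NEW_CLOCK_GHZ,
                                SWITCH_CORES, SWITCH_CLOCK_GHZ)
print(f"Per-clock gain needed for 3x: {needed:.2f}")
```

With those placeholder numbers, each new core would need roughly 1.53× the A57's per-clock throughput; a higher clock lowers that requirement proportionally, and reserving a core for the OS on either system shifts it again.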

EDIT: I don't necessarily mean all of that to target you, @SiG; I just thought it flowed better to reply to you. I meant this more for the people saying "Because Nintendo".

ArchedThunder


Furukawa also straight up said a year ago that Nintendo will be using "cutting edge technology." So an affordable but powerful SoC sounds about right.

Deleted member 645

It was highly rumored that Capcom talked Nintendo into adding a fourth GB of RAM to the Switch while they were content with 3. I myself have no problem reserving judgment until we see what we really get. I just don't feel comfortable saying it should hit a certain performance goal.

Alovon11

Yeah, and that was pre-Furukawa, after the Wii U flopped hard and cut into Nintendo's financials, and when Nintendo was using TX1s as discounted off-the-shelf SoCs because the TX1 didn't sell too well on NVIDIA's end.

The circumstances here are very different from then.

Deleted member 645

Yeah, we will see what we get. I just think we have done ourselves a disservice multiple times. I've been on every big tech forum speaking on new Nintendo hardware the past couple of generations. It seems there is always a "minimum" goal set, like there is no way Nintendo doesn't hit this; they have the money, time, and resources. Somehow we have always come away disappointed. Switch is very good for the form factor, and I'm sure whatever we get will be okay. It's just unsettling when people throw out numbers. I know this situation is supposed to be different. I guess we will see.

mariodk18

Capcom asking Nintendo to increase the Switch's RAM isn't a rumor. It's a topic discussed by both of them in an official capacity.

Source: https://www.technobuffalo.com/nintendo-switch-capcom-system-memory?amp

Also with regards to third party ports:

Porting down is harder than porting up.

Look how long it took Square Enix to bring DQXI to Switch and all the optimizations they had to do. It wasn't just waiting for the updated UE4 engine on Switch. It wasn't just reducing resolution or shadow quality. They had to actually alter the model quality in many instances, which requires a lot more hands-on work from artists and designers.

See, it's one thing to port something to a system that's weaker yet close enough in power. If the Switch were stronger, ports would take less time because the hardware would be able to brute-force its way through potential performance problems.

Watch any DF video on a late Xbox One port and tell me with a straight face that it took the same amount of time and effort as the Switch version. Or take a game like the Crash Bandicoot trilogy, where Crash has different fur rendering compared to the Xbox version. To argue that all ports take a set amount of time is disingenuous.

NineTailSage

I definitely understand these sentiments, but we have something early on that we haven't had in quite some time when it comes to future Nintendo hardware.

Things we currently know:

- They are continuing to work with Nvidia on future Switch hardware.
- We know from Nvidia leakers the actual chip (Orin) and architecture (Lovelace) that the next SoC will be based on.
- We also know that Nintendo wants to use DLSS to produce a 4K output resolution (which also tells us this device will be at least marginally powerful enough to perform this task).
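For a rough sense of what "4K via DLSS" implies for the hardware, here is a small Python sketch of the render-resolution arithmetic. It assumes the per-axis scale factors commonly cited for Nvidia's PC DLSS 2.x presets (Quality 2/3, Performance 1/2, Ultra Performance 1/3); nobody knows which presets, if any, a Nintendo device would actually use, so treat the numbers as illustrative only.

```python
from fractions import Fraction

# 4K UHD output target mentioned in the rumours.
OUTPUT_4K = (3840, 2160)

# Per-axis render scales commonly cited for PC DLSS 2.x presets.
# Assumption: a console implementation could use different values.
PRESETS = {
    "Quality": Fraction(2, 3),
    "Performance": Fraction(1, 2),
    "Ultra Performance": Fraction(1, 3),
}


def internal_resolution(output, scale):
    """Resolution the GPU renders before DLSS upscales to `output`."""
    width, height = output
    return int(width * scale), int(height * scale)


def reconstruction_factor(output, scale):
    """Number of output pixels per rendered pixel."""
    width, height = output
    in_w, in_h = internal_resolution(output, scale)
    return (width * height) / (in_w * in_h)


for name, scale in PRESETS.items():
    w, h = internal_resolution(OUTPUT_4K, scale)
    factor = reconstruction_factor(OUTPUT_4K, scale)
    print(f"{name:>17}: renders {w}x{h}, {factor:.2f} output pixels per rendered pixel")
```

Running it gives 2560x1440, 1920x1080 and 1280x720 for the three presets, i.e. the GPU shades 1/2.25, 1/4 and 1/9 of the output pixels and DLSS reconstructs the rest.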

Also, the 4GB of RAM Capcom asked for was unique to the Switch: the Nvidia Shield TV used 2-3GB, which was also pretty much the norm for many phones at the time. Technology has advanced quite a bit since the Switch was in its R&D phase, so a new hybrid device in 2022-23 should easily outperform both the PS4 and Xbox One.

The real question is how close will this device be to the Series S in performance?

BlackTangMaster

There is a lot of talk about Dane being a derivative of Orin, which uses 12 Cortex-A78 cores, but we could also consider the possibility of a newer version of the originally planned Orin S using 8 Cortex-A710 cores.

Unlike the original A78, the A710 supports 8-core clusters, and while it is ARMv9, it's still compatible with 32-bit code. That said, I suspect that Dane was the chip the A78C was made for, as we haven't seen any implementation of this A78 core model, and the S898 will soon be released with ARMv9 cores.

Deleted member 887

I know it’s cool to speculate on these forums. We don’t know anything for sure. We will just have to wait. I know you don’t mean anything by it, but those seem like big goals.

Not calling you out here, but this is literally the speculation thread

I did an experiment yesterday. I wasn't on Any Forum prior to the Switch era, so I hunted down the fabled Wii U Speculation Thread(s) and skimmed through them. WUST seems to be the source of a lot of "because Nintendo" chatter with regard to system power.

It was fascinating. Especially as release got closer, there were a lot of leaks about the Wii U, and they were... pretty correct. Sometimes they were ignored, sometimes they weren't. There were incorrect "leaks" and rumors too, but what's interesting is that the correct leaks all matched each other (because they were correct), while there was no consistency among the incorrect ones. A lot of the hype and excitement was based on neither correct nor incorrect leaks, but entirely on people speculating about their desires and whipping each other into a frenzy.

Even if we ignore Kopite7, who is very reliable but is a single source, there is still a strong pattern to the leaks and rumors. Even if we just go with "4K via DLSS", that narrows down the possible hardware Nvidia can use CONSIDERABLY. Within that realm we also know that NV has contracts that limit the kind of ARM hardware they can make legally. The consolidation of fabs means that we have a good sense of what it is possible to build. Nintendo announced a hardware strategy years ago and has, for the most part, executed on it, with no reason to believe they'd stop. Their hardware partnership is a set contract that is public knowledge. That gives us a lot to speculate on, and yes, it's speculation, but it's highly informed speculation.

Could that speculation be wrong - yes! Could we be mixing hardware up (like with the OLED model) - yes! But we're not just whipping ourselves into a frenzy based on our whims either.

davec00ke

Within that realm we also know that NV has contracts that limit the kind of ARM hardware they can make legally.

Nvidia most likely has an ARM Subscription License which gives them access to all ARM cores.

The question I have is what is the oldest process node optimised for the Cortex-A710?

WikiChip mentions that 10 nm** is the oldest process node optimised for the Cortex-A78, which is not surprising, considering that people have been talking about Orin being fabricated using Samsung's 8 nm** process node since 18 December 2019.

And WikiChip seems to imply that 7 nm** is the oldest process node optimised for the Cortex-A710. Notably, IM Motors mentioned that Orin X is fabricated using a 7 nm** process node.

I don't know if you've mentioned this before, but I have a possible theory that Orin X is Nvidia's halo product in terms of SoCs in the Orin family. That means there's a possibility that Orin X could be the only SoC in the Orin family to be fabricated on a 7 nm** process node. The rest, including Dane, on the other hand, are fabricated using Samsung's 8N process node. So in that scenario, I think it's unlikely Dane's going to use the Cortex-A710.

However, if all of the SoCs in the Orin family, including Dane, are fabricated using a 7 nm** process node, then there's a possibility the Cortex-A710 is used, although I can't say how likely that is.

** → simply marketing nomenclature used by all foundry companies

Alovon11

We don't know if Dane even exists

For reference, that guy leaked all of NVIDIA's Ampere lineup very accurately, and everything he mentioned that didn't come out was confirmed to have been canceled internally.

T239 is Dane's codename.

So Dane at least did exist.

And there hasn't been anything to say that it doesn't still.

Nvidia most likely has an ARM Subscription License which gives them access to all ARM cores.

IIRC, that has been unconfirmed, and they only have access to the A78/A78C/A78AE on Samsung 8nm.

They would have to license new CPU cores for future nodes (Samsung 7nm, 5nm, etc.).

But there is very, very little reason not to license a better CPU core like the A710, or even the X1/X2, in that scenario, considering the costs of licensing.

Alovon11

Like, I don't think NVIDIA would go through the hassle of redesigning the whole of Orin for EUV just for one halo SKU (as redesigning Orin X for EUV/7nm would require).

That's just me, though. A GPU isn't the hardest thing to move between processes, even between DUV and EUV, and the CPU cores are just the CPU cores: ARM already offers licensees DUV and EUV design versions of at least the A78 family.

But the rest of the chip would have to be redesigned, and for an automotive part that likely has a ton of custom elements, that wouldn't be cheap. IMHO, the ROI from doing that for only one halo SKU isn't enough.

NineTailSage

We don't know if Dane even exists

I don't think an accurate Nvidia architecture leaker would tell us that Orin is T234 and Dane is T239, possibly manufactured on Samsung's 8nm process, if Dane were fake...

That's way too many inside details for a vaporware chip...

Again, I get the comparisons to WUST-era speculation, but in the age of social media, especially by today's standards, Nvidia's and AMD's full GPU line-ups get leaked well ahead of release, with full spec details down to the die size.

One reason I think it's a possibility is that Nvidia had the A100 GPU fabricated using TSMC's N7 process node, whilst the rest of the Ampere GPUs were fabricated using Samsung's 8N process node. So I wouldn't say it's a complete impossibility.

~

I have some potentially great news about the DLSS model*, assuming the DLSS model* will have a very similar design to the OLED model.

The OLED model's front housing, specifically the part next to the screen, is made from metal, not plastic. And the kickstand is confirmed to be made from metal. So this lends more credence to the DigiTimes rumours that the new Nintendo Switch model uses a magnesium alloy body.

Deleted member 645

I get it. I won’t say anything else in reference to performance. I will take the wait-and-see approach. I’m enjoying the OLED and the games that are coming. For me it’s about software anyway. If the games I want from third parties aren’t supported on a new, more powerful Switch, I’m okay just riding it out with the OLED.

BlackTangMaster

If the A78C had been made only for Nvidia/Nintendo, I could see ARM making the A710 compatible with 8 nm. Being the only ARMv9 core able to run 32-bit instructions would make the A710 the best candidate for budget SoC makers using older nodes.

BlackTangMaster

Both the A78C and Ampere (without RT cores) are optimised for TSMC's N7P (DUV).

Samsung was able to port the Exynos 9820 (8LPP) to 7LPP, which is EUV, as the Exynos 9825.

NineTailSage

Want to know what's weird about Nintendo? It's releasing an OLED model of the Switch that also has a metal frame, and not advertising it... Most people who reviewed this device were confused about what it was made from, yet being clear and open about this would absolutely justify the $350 price!

Such a baffling company at times, I tell you.

It might be time to revisit some of those earlier leaks about the magnesium alloy frame and all now.

Alovon11

Well, the main thing that makes me think so is what I said before.

Porting Orin X to Samsung 7nm would be more complicated than moving Ampere from Samsung 8nm to TSMC 7nm, because of all the other parts unique to Orin X, whereas with Ampere the GPU uArch is the only thing that really needs to be converted.

And TSMC 7nm and Samsung 7nm have different licenses and other elements, meaning they'd be starting pretty much from scratch on a 7nm Orin X, with a ton of custom automotive elements and more.

I just don't think the effort of taking that sort of design to Samsung 7nm is worth it, in ROI terms, for only one halo product.

But there is very very little reason to not license a better CPU Core like the A710 or even the X1/X2 in that scenario considering the costs of licensing.

The Cortex-X1 and the Cortex-X2 are probably too large and too power-hungry for Nintendo's purposes. And unlike the Cortex-A710, the Cortex-X2 only supports 64-bit code.

But it's possible Nvidia has other potential uses for the Cortex-X1 and/or Cortex-X2.

I'm not sure that's necessarily true, considering that the 7 nm** process node used for the Exynos 9825 differs very little from Samsung's 8LPP process node apart from the lithography used. And unlike going from Samsung's 8N process node to TSMC's N7 process node, Samsung's IPs could still be used if the 7 nm** process node used for the Exynos 9825 were used to fabricate Orin X.

My Tulpa

Again, this really isn't how things work. The less powerful a piece of hardware is, the more optimization work a game will require to be brought over to a platform. This effort begins to drastically increase as you start to dip below the minimum specs the game was designed around. Conversely, it starts to disappear almost entirely as you get sufficiently above the original target specs, with much of the work at this point being optional enhancements.

Again, if CDPR had focused only on PC (including the high-end and low-end minimum targets) and the base PS4… nothing else… since the start of development, I promise you the game would have looked and run much better on the base PS4 at release. And it probably would have been released earlier.

If it had been the PS4 Pro hardware that launched in 2013… it would have cost the same. It wouldn’t have been cheaper. The jump from an architecture that renders games at 900p to one that renders at 1440p and then checkerboards them to 4K… doesn’t make development significantly cheaper.
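To put the 900p to 1440p to checkerboard 4K jump in numbers, here is a quick Python sketch of the pixel arithmetic. The resolutions are the standard 16:9 ones; treating checkerboard rendering as shading exactly half of the target's pixels each frame is a simplification (real implementations vary), and none of this measures development cost directly.

```python
# Standard 16:9 resolutions used in the discussion.
RESOLUTIONS = {
    "900p": (1600, 900),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name):
    """Total pixels per frame at a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixel_ratio(base, target):
    """How many times more pixels `target` has than `base`."""
    return pixel_count(target) / pixel_count(base)


def checkerboard_shaded(name):
    """Pixels shaded per frame if checkerboarding renders half the target
    (a simplification; the other half is reconstructed)."""
    return pixel_count(name) // 2


print(pixel_ratio("900p", "1440p"))   # 2.56
print(pixel_ratio("1440p", "4K"))     # 2.25
print(checkerboard_shaded("4K"))      # 4147200
```

So 1440p is 2.56 times the pixels of 900p, and native 4K is another 2.25 times on top of 1440p.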

There is a fixed cost associated with porting the game to a console OS that it's never been on before, but for the vast majority of games that skip Switch, this will be massively dwarfed by the optimization cost of trying to bring over a game that was originally built for much more powerful hardware.

The relatively short development time for the Switch version of Witcher 3, the relatively small dev team, the relatively small cost… kind of dispel this theory.

Chew on this… the “next gen” Witcher 3 port is being done by Saber, the same people who ported Witcher 3 to the Switch.

It is going to take them twice as long, with more manpower…will cost more…to port that game to the ps5 than it took to port it to the Switch.

How can this be? How can much more powerful hardware take more development time/costs for the same game than much inferior hardware??

It’s not as simple as you are portraying it. The Dane/DLSS isn’t going to reduce development time/costs to port to it.

Switch hardware doesn’t present a much bigger cost investment than if you just focused on, say, the Xbox One hardware. It’s the same amount of publisher cost, more or less.

But most publishers think that making Xbox One versions of their games, as they have for the past 8 years, rather than Switch versions, is more likely to be financially worth it.

It’s about the return not the investment.

If the focus of Cyberpunk's development had actually been the base PS4, they would have made a different, less demanding game. If the focus was still higher-end PCs, then the results may have been marginally better, but still overall pretty similar. Aside from just being massively rushed, the fundamental problem with Cyberpunk's development is that they didn't scope the game according to the lowest common denominator.

For a port with no additional content, the Switch version of The Witcher 3 had a pretty long development cycle. Similarly, the PS5/XS upgrade is not a simple port; it adds new features and improvements, which take resources to develop. A simple native port of the game to those platforms would probably require largely just QA work.

This isn't the 80s or (to a lesser degree) 90s where every port of a game is a fairly bespoke product. Modern multiplatform games have more code and assets in common across platforms than they have platform-specific stuff. And even then, many games are developed with middleware that takes care of some of the platform differences for them. The thing that makes Switch ports so much more expensive than for other platforms is that, for games not developed with its limits in mind, their existing code doesn't run very well (if at all) and often a lot of bespoke work is required to optimize the games to run with acceptable performance and visuals. If games were developed with Switch level hardware in mind from the outset, this wouldn't be a problem, but that's still more the exception than the rule among higher budget games not made by Nintendo themselves, despite the Switch's success. This is why power matters. When the hardware sits well below what games are actually being made for, ports become a substantially pricier proposition.

but dat ray tracing tho

I mean, skipping PS4/XB1 is an equally valid solution to that problem, albeit one the game was probably announced too early to pull off without some backlash.

Yup. To drive the point home, Ori and the Will of the Wisps, built on a fork of the Unity engine, was compiled and running at 60fps on Xbox One. Taking that same code to Xbox Series X/S, they recompiled it and had it running at 4K 120Hz with minimal work, and it was ready for launch with a tiny handful of optimizations. When they took the codebase and assets they had already made and dropped them onto Switch at 900p docked? 20fps. Getting it running as amazingly as it does required building a custom rendering technique that was used only for this port.
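The Ori numbers are easier to compare as frame-time budgets. A minimal Python sketch, assuming the Switch port had to reach the same 60fps as the Xbox One build (the post only gives the 20fps figure for the first Switch attempt) and ignoring any resolution or settings changes made along the way:

```python
def frame_time_ms(fps):
    """Milliseconds available to produce one frame at a given frame rate."""
    return 1000.0 / fps


def required_speedup(current_fps, target_fps):
    """Factor by which per-frame cost must shrink to hit the target.

    Equivalent to frame_time_ms(current_fps) / frame_time_ms(target_fps),
    computed directly to avoid floating-point rounding noise.
    """
    return target_fps / current_fps


# First Switch build (per the post): about 20 fps at 900p docked.
# Assumption: 60 fps target, matching the Xbox One build.
print(f"{frame_time_ms(20):.1f} ms per frame at 20 fps")       # 50.0 ms
print(f"{frame_time_ms(60):.1f} ms per frame at 60 fps")       # 16.7 ms
print(f"frame cost must shrink {required_speedup(20, 60):.1f}x")  # 3.0x
```

At 20fps each frame takes 50ms; at 60fps the budget is about 16.7ms, so under that assumption the per-frame cost had to come down by roughly 3x on the same hardware.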

The most interesting example is Dusk, a game that replicates the look of a late-90s shooter. Its first build ran at 20fps despite lacking a lot of shader effects. For Ori, it really helped that Series was an evolution of the XBO tools, which I expect Dane to be as well.

My Tulpa

Also with regards to third party ports:

Porting down is harder than porting up.

See it’s one thing to port something to a system that’s weaker, yet close enough in power. If the Switch were stronger, ports would take less time because the hardware would be able to brute force its way through potential performance problems.

I appreciate your argument from a generalized point of view, but it’s just not that clear-cut in real-world terms, especially in the Switch's case.

As I mentioned above, it’s going to take Saber twice as long, with more costs and manpower, to put the “next gen” version of Witcher 3 onto the ps5 (porting up) than it took them to put Witcher 3 on the Switch (porting down).

Yes, if you have a system and then just double the RAM, double the bandwidth, double the CPU speed, and increase the GPU cores… developers can brute-force some things in a game instead of having to figure out the tricks or shortcuts that a system with half of that would require.

But rarely is it just that simple with architecture differences, and to be honest, this kind of time consumption is more about working with something different, not with just something less/slower.

An argument against my Witcher 3 example would be “well, Saber has to add a bunch of stuff when porting up! There are a bunch of new features available on the new systems that they have to figure out how to use properly and optimize! Like FSR! That’s why it will take longer to port to the PS5 than to the Switch!”

Which would prove my point…you can’t argue about ease of porting based just on the simplicity of brute forcing something on beefier hardware speeds.

When devs approach the Dane/DLSS Switch, it’s not going to make things faster or cost less. They are now going to have to come up with ways to optimize the use of DLSS within the major restrictions of a mobile SoC architecture. It is in fact going to require a lot of balancing and trial and error to figure out the best way to port stuff to a 15-watt system with heat/battery concerns. It’s not just “brute force”.

Development for a 4K Switch will not be any less costly or any faster than development for the OLED Switch.

Please read this staff post before posting.

Furthermore, according to this follow-up post, all off-topic chat will be moderated.
