There is some nuance there, but yeah. To vastly oversimplify, you can think of the Switch as like 1/5th of a PS4 in terms of GPU performance, and the NG as, like, 1/3rd of a PS5. That's a very silly way of thinking of things, but it does help you see that the gap is closing, even if the gap isn't tiny.
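To put a number on "the gap is closing", here is a tiny sketch using only the two rough ratios above. The variable names are made up for illustration, and the ratios are the same silly oversimplification, not benchmarks.

```python
from fractions import Fraction

# Rough GPU ratios from the post (deliberate oversimplifications, not measurements).
switch_vs_ps4 = Fraction(1, 5)  # Switch as a fraction of a PS4
ng_vs_ps5 = Fraction(1, 3)      # Switch NG as a fraction of a PS5

# How much closer the NG sits to its contemporary than the Switch did.
relative_improvement = ng_vs_ps5 / switch_vs_ps4

print(f"Switch was {float(switch_vs_ps4):.0%} of a PS4")
print(f"NG is about {float(ng_vs_ps5):.0%} of a PS5")
print(f"Relative position improved by a factor of {relative_improvement}")
```

So the relative position improves by 5/3, but the NG is still well short of parity.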

On the other hand, if we look at the CPU, the story inverts. The gap between NG and current gen is larger than it was between Switch and last gen.

I think it's multi-layered. Ultimately, the bottleneck for a port will always be cash and time. Randy Linden put Quake on the GBA, for goodness' sake. The number of miracle ports will not just be about hardware, it will be about the number of Switch NG units sold. Better hardware makes port costs go down, but higher sales make expensive ports profitable. So don't consider any of these problems "insurmountable".
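The cash-and-time argument can be sketched as a break-even check. Everything here is hypothetical: the function names, the port costs, the per-copy revenue, the install bases and the attach rates are invented to show the shape of the tradeoff, not real figures for any game or console.

```python
import math


def break_even_units(port_cost: float, net_revenue_per_unit: float) -> int:
    """Smallest number of copies whose net revenue covers the port cost."""
    if net_revenue_per_unit <= 0:
        raise ValueError("net_revenue_per_unit must be positive")
    return math.ceil(port_cost / net_revenue_per_unit)


def port_is_profitable(port_cost: float, net_revenue_per_unit: float,
                       install_base: int, attach_rate: float) -> bool:
    """True if expected sales (install base x attach rate) out-earn the port cost."""
    expected_units = install_base * attach_rate
    return expected_units * net_revenue_per_unit > port_cost


# Hypothetical numbers only: a $5M "miracle port" earning $20 net per copy.
print(break_even_units(5_000_000, 20))                        # copies needed
print(port_is_profitable(5_000_000, 20, 10_000_000, 0.01))    # small install base
print(port_is_profitable(5_000_000, 20, 50_000_000, 0.01))    # large install base
# Better hardware lowers the port cost, so the small install base can work too.
print(port_is_profitable(2_000_000, 20, 10_000_000, 0.02))
```

Both levers move the same inequality: better hardware shrinks the cost side, and a bigger install base grows the revenue side.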

I tend to think that CPU is going to be an issue. Not the

only issue, and not an issue for every game, but

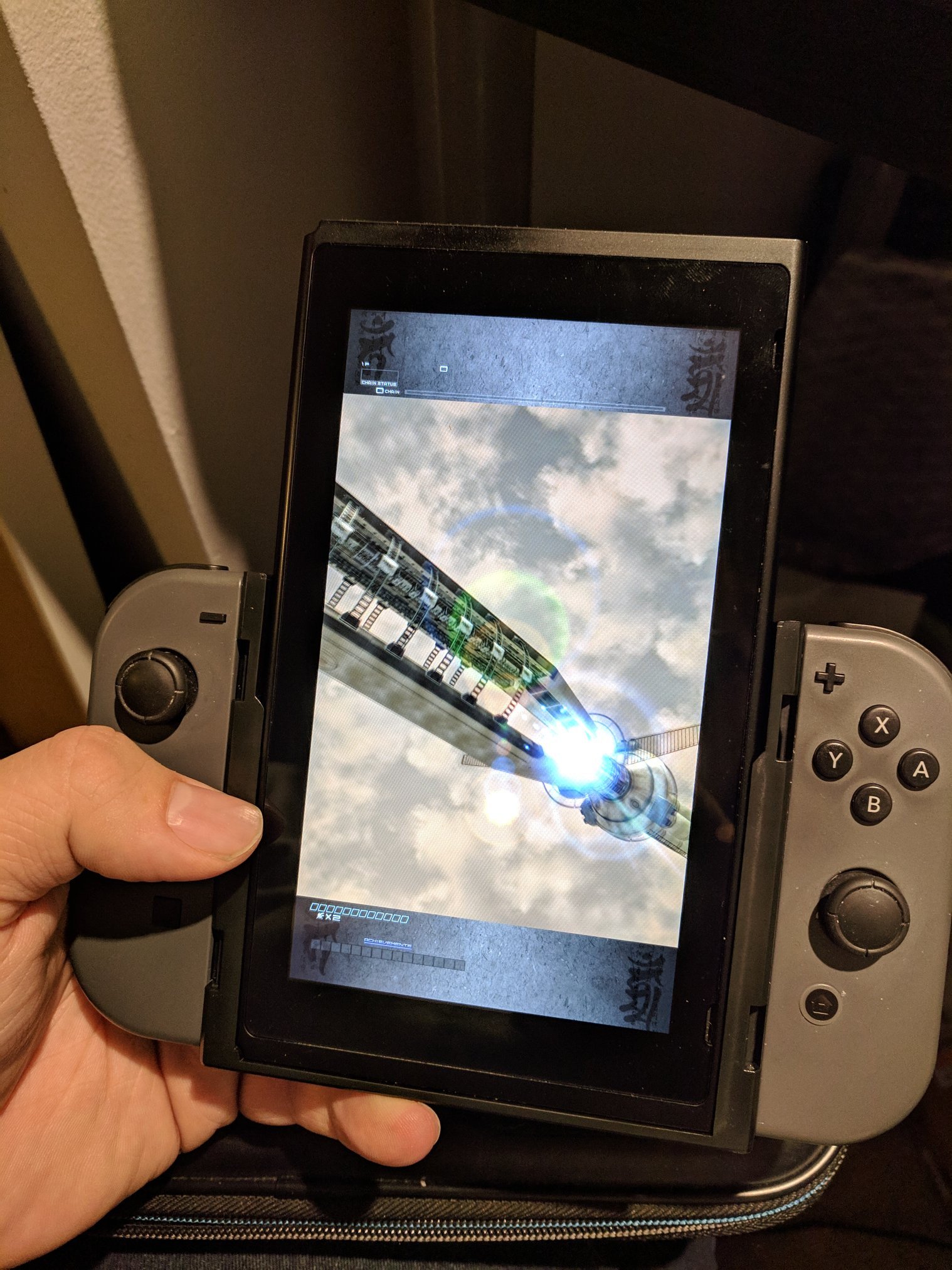

Starfield and

A Plague Tale: Requiem are both games that tax next gen CPUs to their limit to enable the core gameplay.

Then there are games like Gotham Knights - a game that doesn't so much stress the CPU for gameplay reasons, as use it as a crutch. I'm not defending that game, but there will be more of those. And there will be games like the Life is Strange series - games that aren't impossible on smaller hardware, but are only economically viable because a small team can just use Unreal Engine defaults for everything, and throw lots of CPU power at mocap'ed animation, and not pay a dozen programmers to optimize the engine.

I also think the GPU will start becoming more and more of an issue. We're coming out of the long cross-gen period, and we're going to see more and more 30fps games and more and more low-res/high-reconstruction games. We're going to see more games skip Xbox because of the Series S plus weak sales, and we're going to see nigh-unplayable Series S versions.

At some point devs will be competing with each other to deliver better and better looking experiences on the same hardware, and they will start deploying the sorts of cuts for "low end hardware" on the current gen consoles to do it. AAA game development that starts today will be targeting a 2028 release date. That will only be the third or fourth year of the Switch NG, but it will be the cross-gen period for the PlayStation 6.

This all sounds like Nintendoom, and I don't mean that. I actually think I'm pretty optimistic! Last gen consoles were a little weak, relative to where technology was at the time. They had very bad CPUs, and very modest GPUs. Switch was able to capitalize on that, offering a more modern GPU despite the lack of power, and a CPU which actually started to get close to what the then-current consoles were doing. Those were the aces-in-the-hole for making games like The Witcher III possible.

The PS5 and the Series X aren't just more powerful consoles than last gen, they're more powerful relative to their era. AMD was now top-of-the-heap on CPU tech, and if you pull up any list of "best graphics cards in 2020" and compare specs, the consoles are competitive. Not to mention the forward-thinking bells and whistles - SSDs, custom decompression hardware, 3D audio engines.

These consoles don't have the sorts of weaknesses that give NG as much "catchup" room as the Switch had, and yet - Nintendo seems to be delivering. The CPU gap was going to get larger, but Nintendo is keeping it from becoming massive. The GPU gap is getting smaller, despite the 10x leap that the Series X made over the Xbox One. DLSS 2 and Nvidia's RT solution are more forward-thinking than the AMD counterparts. Nintendo seems to be keeping pace with storage speed and decompression hardware.