I think LPDDR5X RAM is also likely if Nintendo plans to launch the DLSS model* in early 2023, considering that the Dimensity 9000 supports LPDDR5X, and Nintendo has shown with the Nintendo Switch that it's willing to splurge on RAM. Nintendo has also shown with the OLED model that it has no problem using RAM from companies other than Samsung, especially since Micron has confirmed it's working with MediaTek on including LPDDR5X support for the Dimensity 9000.

I believe

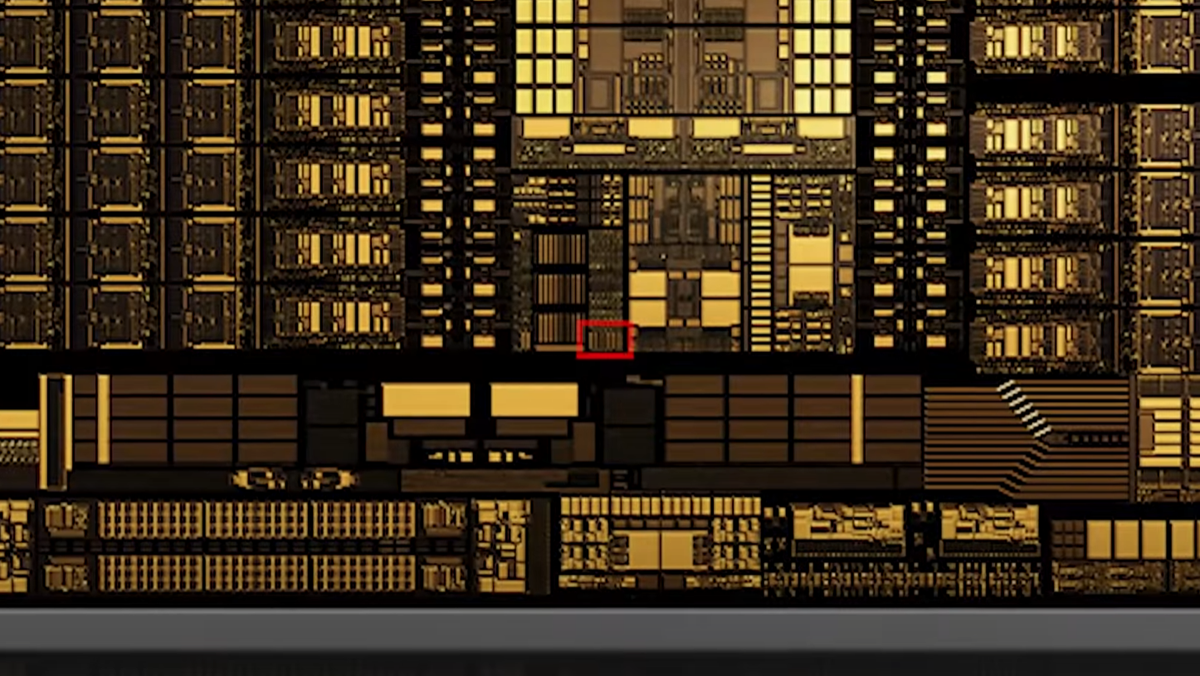

@ILikeFeet mentioned that Nvidia made no mention of which generation the RT cores in Orin are part of in the Jetson AGX Orin Data Sheet. So there's a possibility that Orin's (and by extension Dane's) RT cores are part of the same generation as the RT cores in Lovelace GPUs. And I think there's also a possibility that the RT cores on Lovelace GPUs are as performant as the RT cores on Ampere GPUs, but with fewer RT cores required for Lovelace GPUs in comparison to Ampere GPUs. (Of course, that's my speculation.)

Yeah, I don't think it's impossible that they would use LPDDR5X, but it would require Nvidia to include a memory controller which supports it, which Orin's doesn't. Not impossible by any means, but not something we can infer from Orin.

Yeah, the performance of the RT cores is up in the air, and there's also the chance that they'll double the number of RT cores back up for Dane compared to Orin. I just didn't want to write "Dane will support ray tracing" and have people assume that we'll have ray traced reflections, shadows and global illumination all over the place. Even in the best case scenario, the RT performance of Dane will be very limited, and I'd go as far as saying most games probably won't even use RT, and where it is used, it will be in subtle, limited applications. There's the chance of one or two developers putting a lot of work into highly optimised games and managing to squeeze out an approximation of something like RTXGI, but I'd expect that to be the exception, not the norm.

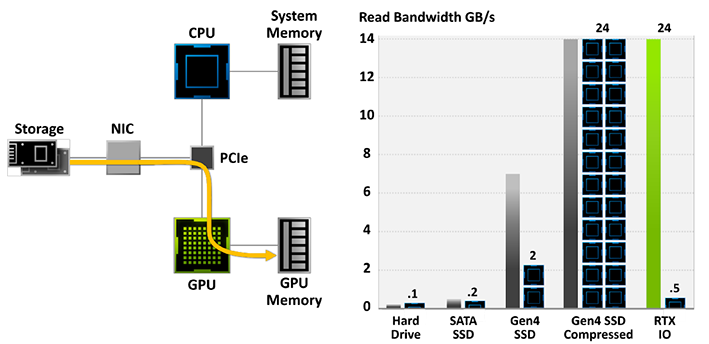

I will also note that the topics of storage speed and the CPU and GPU bottlenecking each other should be discussed in the context of NVIDIA announcing new features that could help with both.

Storage speed could be effectively doubled through RTX-IO. And if Dane/Orin still has the shader bloat problem from desktop Ampere, they could use a fixed-preset version of Rapid Core Scaling to turn off some GPU cores and feed more power to the remaining ones, while theoretically also letting those remaining cores use the extra cache.

Also, the prospect of DynamIQ + Dynamic Boost 3.0 is a very interesting one for Dane, even if it's in a fixed form, due to the sheer flexibility it would give developers for optimization.

(e.g. a 60fps mode needs more CPU power than GPU power to hit 60fps? With the two combined, they can direct more power to the CPU cores the game needs most and less to the other cores and the GPU. A game is highly GPU-dependent and doesn't need much CPU? Keep 2-4 of the assumed 8 cores at a higher clock, then take the headroom freed up from the other 4-6 and give that to the GPU.)
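To make the "fixed form" idea a bit more concrete, here's a rough sketch of what a table of fixed presets sharing one power budget might look like. Everything in it is an assumption for illustration only: the 10 W budget, the wattage splits, the preset names and the `PowerPreset` / `pick_preset` helpers are all hypothetical and don't come from Nvidia, ARM or Nintendo.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PowerPreset:
    """One fixed CPU/GPU power split a developer could select (hypothetical)."""
    name: str
    fast_cpu_cores: int  # cores kept at the higher clock, out of an assumed 8
    cpu_watts: float
    gpu_watts: float

    @property
    def total_watts(self) -> float:
        return self.cpu_watts + self.gpu_watts


# Assumed total SoC budget; a made-up number, not a leaked spec.
TOTAL_BUDGET_WATTS = 10.0

# Made-up presets: each one moves power between CPU and GPU,
# but all of them add up to the same total budget.
PRESETS = [
    PowerPreset("balanced", fast_cpu_cores=8, cpu_watts=4.0, gpu_watts=6.0),
    PowerPreset("cpu_heavy_60fps", fast_cpu_cores=8, cpu_watts=6.0, gpu_watts=4.0),
    PowerPreset("gpu_heavy", fast_cpu_cores=4, cpu_watts=2.5, gpu_watts=7.5),
]


def pick_preset(name: str) -> PowerPreset:
    """Look up a preset by name, checking that it fits the shared budget."""
    for preset in PRESETS:
        if preset.name == name:
            if preset.total_watts > TOTAL_BUDGET_WATTS:
                raise ValueError(f"{name} exceeds the power budget")
            return preset
    raise KeyError(name)


if __name__ == "__main__":
    for p in PRESETS:
        print(f"{p.name}: CPU {p.cpu_watts} W, GPU {p.gpu_watts} W")
```

The point of keeping the presets fixed is that the total never changes, so battery life and thermals stay predictable while developers still get to choose where the power goes.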

As previously mentioned, RTX-IO doesn't really double storage speed (in the same way that PS5's hardware decompression doesn't really give them 9GB/s read speeds). These comparisons are against completely uncompressed data, which isn't really a thing. Most game data is already compressed, so we're already getting those "double" speeds, even on Switch. The actual benefit of RTX-IO and PS5's hardware decompression is that they take the decompression load off the CPU.
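To put rough numbers on that, here's a minimal sketch of the arithmetic. The 100 MB/s raw read speed and the sample data are made-up assumptions purely for illustration, not measurements from any real console, and `effective_read_speed` is a hypothetical helper name.

```python
import zlib


def effective_read_speed(raw_mb_per_s: float, compression_ratio: float) -> float:
    """Uncompressed-equivalent MB/s delivered when the stored data is compressed."""
    return raw_mb_per_s * compression_ratio


# Hypothetical, very repetitive stand-in for game data (real assets compress less well).
sample = b"vertex normal uv tangent " * 4000
compressed = zlib.compress(sample, level=6)
ratio = len(sample) / len(compressed)

RAW_SPEED_MB_S = 100.0  # assumed raw storage read speed

if __name__ == "__main__":
    print(f"compression ratio: {ratio:.1f}x")
    print(f"effective speed: {effective_read_speed(RAW_SPEED_MB_S, ratio):.0f} MB/s")
```

The multiplier comes from the data being compressed at all, so it's the same whether the CPU or a dedicated block does the decompression. What changes is who pays for the work.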

RTX-IO specifically is a set of technologies which is quite PC specific, and doesn't really translate to consoles. Having the CPU and GPU on the same die, with a single set of I/O and a shared memory pool, makes it redundant. That said, I think Nintendo is acutely aware of how much the CPU is limiting load speeds on the Switch (hence the addition of the higher CPU clocked mode for loading), but I think the sensible approach is to offload that decompression to dedicated hardware like on the PS5. Not only would such hardware allow them to comfortably cover the full I/O requirements of the new model (which even in the best case will be much lower than PS5), but importantly for Nintendo it should be a much more power-efficient way of performing decompression than on either CPU or GPU.

Nvidia have a lot of experience with implementing high-bandwidth compression and decompression in hardware, with both texture compression and framebuffer compression, and although they're a bit different than general-purpose lossless compression algorithms, I have no doubt a general purpose decompression block operating at ~1GB/s would be very much doable for them. And while Sony licensed a compression algorithm for the PS5, there are a lot of open source compression algorithms they could build the hardware around. The DEFLATE algorithm used in zlib has been around for a while, but is still a good choice, and has the benefit of already being used extensively, including in games. Alternatively, they could go with a newer algorithm, either something like LZ4HC if they want to minimise the complexity (and therefore size and cost) of the decompression hardware, or something like brotli for maximised compression ratios, at the expense of larger and more complex decompression hardware.
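For a feel of what that decompression work costs in software, here's a small sketch using DEFLATE via Python's standard `zlib` module. It only covers DEFLATE because that's in the standard library; the payload is a made-up, highly compressible stand-in, `measure_decompression` is a hypothetical helper, and the throughput you get depends entirely on the machine you run it on, so treat it as a way to experiment rather than a benchmark.

```python
import time
import zlib


def measure_decompression(data: bytes, level: int = 6) -> tuple[float, float]:
    """Return (compression ratio, single-run CPU decompression throughput in MB/s)."""
    compressed = zlib.compress(data, level=level)

    start = time.perf_counter()
    restored = zlib.decompress(compressed)
    elapsed = time.perf_counter() - start

    # Lossless round trip: the output must match the input byte for byte.
    if restored != data:
        raise RuntimeError("round trip failed")

    ratio = len(data) / len(compressed)
    throughput = (len(data) / 1e6) / elapsed if elapsed > 0 else float("inf")
    return ratio, throughput


if __name__ == "__main__":
    # Assumed ~8 MB payload; real game assets will behave differently.
    payload = bytes(range(256)) * 32768
    ratio, mb_per_s = measure_decompression(payload)
    print(f"ratio: {ratio:.1f}x, decompression: {mb_per_s:.0f} MB/s on this CPU")
```

Whatever number a given CPU core manages here is time and power it isn't spending on the game, which is the argument for a dedicated block sized for the ~1GB/s target.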

Edit: Actually, I completely forgot about Nvidia's acquisition of Mellanox. Their DPU network cards already have decompression hardware, so they could likely reuse some of that technology here.

Hardware is intended to deliver software sales because software is where the bulk of money is made and Switch hardware has hit its sales peak, as the tears of #Team2021 clearly evidence, in addition to ample stock over the recent holiday period in Japan. OLED, like the Lite, has a limited capacity at market.

By this point, all versions of Switch are providing more than ample margins (even with the chip shortage in mind), given that the Switch was estimated to cost $257 to manufacture in 2017 and both economies of scale and the Mariko process node change have almost certainly increased the $43 profit per unit sold from 2017, with the $50 price bump in OLED likely meant to cover the margin difference between it and the base Switch (and test the viability of the $350 MSRP at a product launch).

Nintendo is at liberty to cut hardware prices in the interest of better hardware sell-through and keeping software sales on an upward trend; they've done this before, most alarmingly with the 3DS, where they eliminated their entire profit margin to boost its sales. I fully expect 2022 to be the year of the price drop on all models and I don't expect the price cuts to be small ones, either. My expectation is $149 for the Lite, $229 for the standard and $279 for the OLED (not quite $100, but pretty close to it).

Meanwhile, I don't think for a second that the market will accept a $400 device next to a $500 PS5 or Series X, let alone a $300 Series S, no matter how well Switch is doing. Put another way, I don't think the convenience of the hybrid form factor is worth THAT high a premium to consumers, and I'm certain Nintendo would be wary of the negative price comparisons that would be drawn by the press if they priced it as such at launch without an established software library of its own.

I do think price drops in 2022 are reasonably likely (although I think they may just drop the 2019 model and drop the price of the OLED model, rather than keeping them both around), but I don't really agree with your argument that they can't sell a $400 model when the PS5 and Series X are around at $500. The exact same argument was put forward when the Switch launched at $300, when you could buy a PS4 for less than that. Fast forward 5 years, and not only was it not a problem for Nintendo, they've just released a $350 device that's no more powerful than the 2017 Switch, a year after the 10x more powerful Series S came out for $300, and they're still selling almost as many as they can make.

Switch sales will drop off over the next few years, as it's reaching the late stage of its life cycle where it's largely saturated the target markets, but I don't think the PS5 and Series S/X have much to do with this. Early adopters care about having the latest high-tech hardware, but as the games industry has shown us again and again, the majority of people buying games consoles just don't care that much about specs or performance. They'll buy whatever has the games they want to play on it, and if it's capable of being used as a portable, that's a benefit for many people. I also think that any reporting which draws a negative comparison between a new Switch and the PS5 or Xbox Series consoles would be disingenuous at best. Any mildly technically literate person wouldn't expect a portable device to have the same performance as a stationary one. You hardly see laptop announcements met by press reports complaining about how they aren't as powerful as similarly priced desktops, do you?