This page must have been updated because it now says they're in stock and shipping soon. And Nvidia's product page says it's specifically the production modules that will be available in Q4.

> RTXGI is vastly more performant, it has less latency, and is visually more convincing. I would only use Lumen if I had no other choice.

Yeah, I'm guessing the only reason it hasn't gotten wider adoption is that devs haven't really been forced to decide yet.

Nintendo Switch Sports launches in just over a month. Carts are most likely being printed as we speak.

Ah, I missed the marketing material for it.

I do wonder if Nintendo will be able to integrate FSR 2.0 in time for Nintendo Switch Sports' release, seeing how it uses 1.0 already. Better yet, I wonder if Monolithsoft can patch Xenoblade Chronicles 2/Torna and Definitive Edition to use FSR 2.0 to replace their dynamic resolution scaling/AA...

I could see them implementing something like this in Xenoblade Chronicles 3 seeing how it might already be using FSR 1.0 given its artstyle.

Edit: Some competitor's graphics cards... Does 2.0 have a minimum requirement spec to use?

> Nintendo Switch Sports launches in just over a month. Carts are most likely being printed as we speak.

Hmm, figures. I wonder if Xenoblade Chronicles 3 can still receive the upgrade, or if getting that temporal data would take a lot of work.

RTXGI is vastly more performant, it has less latency, and is visually more convincing. I would only use Lumen if I had no other choice.

The next Switch model is still going to be a handheld and won't have features that even the current home consoles don't have, and PC games are only just starting to have. I get that this is now the "future Nintendo AND technology" thread but there's just too much speculation about the bleeding edge of tech getting mixed in here for it to also be realistic speculation about upcoming Switch hardware.

According to its official presentation, RTXGI would work only for the 1060 6GB onwards?

Since Drake won't match the base specs even with its big chip, I hope Nvidia came up with a solution to make RTXGI implementable on it in some way.

I am certain of that, but there is a use case here.

Note that that is not exactly what the slide says. The initial batch of GPUs that were made DXR-enabled started with the GTX 1060 on the lower end of specs, but that doesn't mean that its 4.4 TFLOPS are an absolute minimum - just that they haven't updated drivers for other GPUs (which can be for a variety of reasons - one of which could be power requirements, but another could be the lower install base and engagement with high-level graphical features).

Good find and good deduction! This example opens up the possibility for a more recent chip equipped with RT cores to render RTXGI. I would go one step further: if Nintendo is serious about reducing the workload around tailoring source lights (which is intensive), then RTXGI should work in portable mode too. In such a scenario, the rendering would take roughly four times longer if we assume that 1 TFLOPS is available in portable mode. That would roughly translate into a cost of 10 ms per frame, leaving 6 ms for all the other tasks needed to render a scene at 60 FPS and 23 ms at 30 FPS.

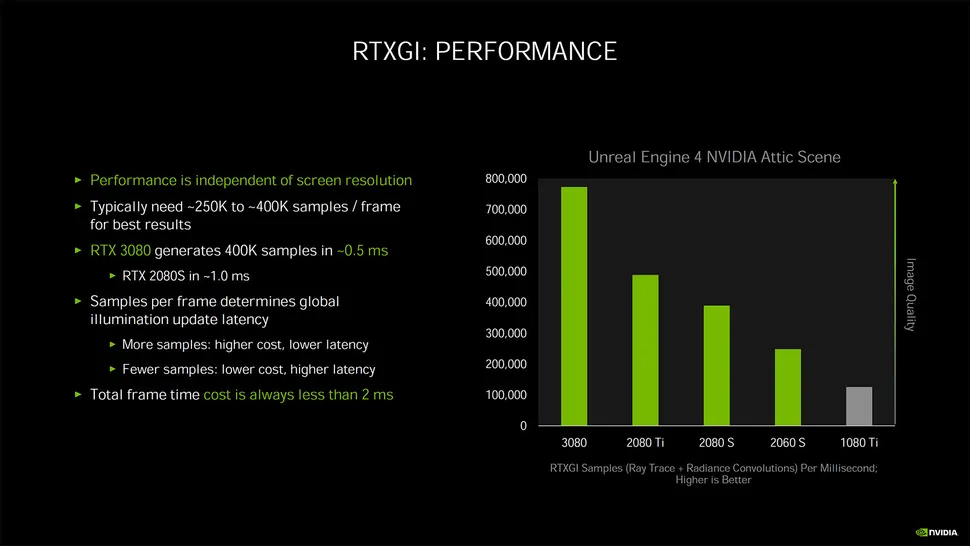

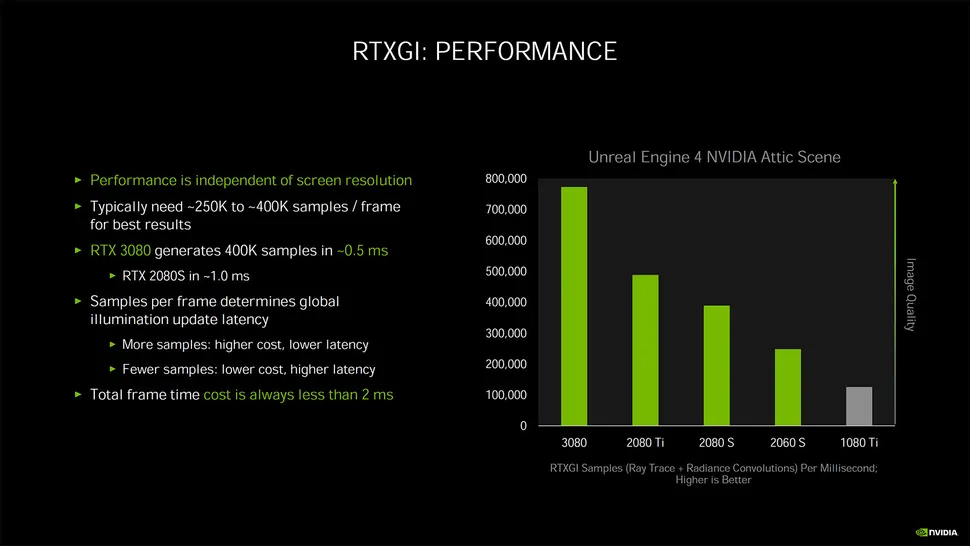

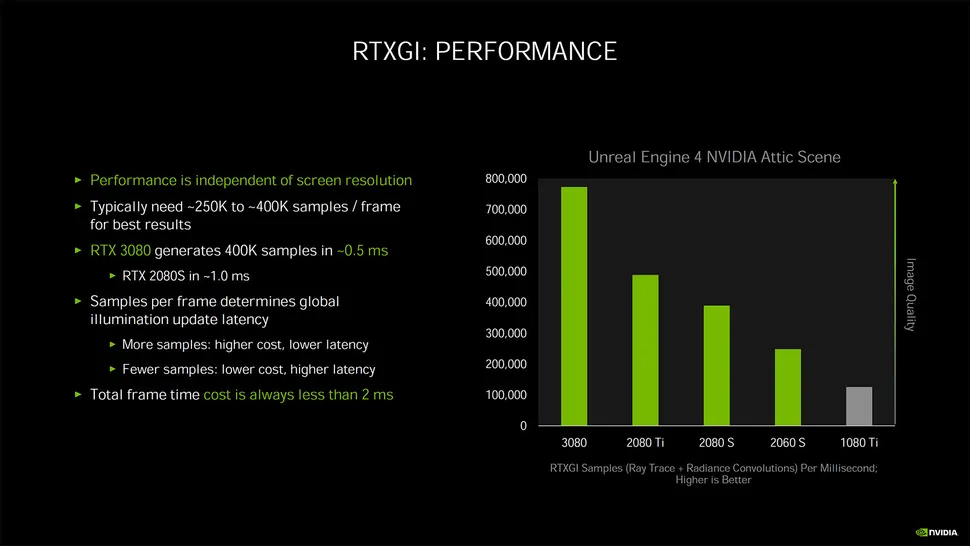

Additionally (and more importantly), I'd like to quote the image from this article:

Note that the RTX 2060S has 7.18 TFLOPS of theoretical ALU performance, whereas the GTX 1080 Ti has 11.34 TFLOPS, yet the 2060S is significantly better than the 1080 Ti. This is the power of the RT cores, which can be used for the BVH tree intersection computations, which are a significant part of the lighting calculations in ray tracing. Therefore, if Drake indeed has RT cores, then that would help tremendously in overcoming the raw ALU power gap between it and the lowest-spec GPU that has DXR enabled.

Regardless, full ray tracing of any significant kind can be a huge drain on any GPU, so I'm not sure how well it would work with a Drake-spec'ed chip. But it could be used for improving certain types of lighting characteristics I guess (perhaps someone who knows more about RTX GI and DI can explain further).

Edit: Though looking at the graph, the RTX 3080, which has 29.77 TFLOPS and 68 RT cores (1 RT core per SM), can push out 750k samples per millisecond. Doing some quick math, and using the speculated performance of 3.68 TF and assuming that the RT core count is 1 per SM for Drake as for the RTX 3080, then Drake would be 8.09x slower than the 3080, so that would suggest 92k samples per millisecond, or 2.7 ms to hit the recommended 250k samples lower bound. That is out of the entire frame budget of 33.3 ms (for 30 fps) or 16.6 ms (for 60 fps). The latter might not be able to accommodate this depending on the rest of the pipeline, but the former could in theory be capable of it I think. Depending on which other bottlenecks might occur when applying this technique, of course.
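For anyone who wants to redo the quick math above with different numbers, here is a rough Python sketch. It assumes, as the post does, that ray-tracing sample throughput scales linearly with theoretical TFLOPS, which is a napkin assumption and not a benchmark. The function names are made up for illustration.

```python
# Napkin estimate only: scales a reference GPU's sample throughput linearly
# by theoretical TFLOPS (the post's assumption, not a measured result).

def estimate_gi_cost_ms(ref_tflops, ref_samples_per_ms, target_tflops, samples_needed):
    """Estimated milliseconds for the target GPU to trace `samples_needed` samples."""
    slowdown = ref_tflops / target_tflops            # e.g. 29.77 / 3.68
    target_samples_per_ms = ref_samples_per_ms / slowdown
    return samples_needed / target_samples_per_ms


def frame_budget_ms(fps):
    """Total time available per frame at a given frame rate."""
    return 1000.0 / fps


# Figures quoted in the post: RTX 3080 reference, speculated 3.68 TF for Drake,
# and the recommended lower bound of 250k samples.
gi_cost = estimate_gi_cost_ms(
    ref_tflops=29.77,
    ref_samples_per_ms=750_000,
    target_tflops=3.68,
    samples_needed=250_000,
)

for fps in (30, 60):
    budget = frame_budget_ms(fps)
    print(f"{fps} fps: GI ~{gi_cost:.1f} ms of {budget:.1f} ms, {budget - gi_cost:.1f} ms left")
```

Swapping in a different speculated TFLOPS figure (for example a handheld clock) only requires changing `target_tflops`.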

Yep. You can buy one for $2K... a bit expensive.

> tensor cores aren't used in RT tasks

They are used for denoising.

> RTXGI is vastly more performant, it has less latency, and is visually more convincing. I would only use Lumen if I had no other choice.

This is actually good to hear; I was curious how they would compare with each other. Bodes well imo for adoption.

It looks like one of the features of RTX GI is that you can update the ray-traced GI lighting at a different rate than the frame rate, so that could offer an out, where the lighting is updated at a lower rate in undocked than in docked mode. That would reduce the lighting quality in handheld mode obviously, but you can probably get away with lower quality lighting in handheld than in docked mode. So there could be some room to manipulate the GI refresh rate so that you don't spend quite as much of the frame budget on the lighting update.
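A rough way to see how much a lower GI refresh rate could help is sketched below in Python. It takes the thread's ballpark figure of about 10 ms per GI update in portable mode and assumes the update work can be spread evenly over several frames, which is an idealisation and not something confirmed for RTXGI or for Drake. The function names are hypothetical.

```python
# Napkin sketch: average per-frame cost when the GI update is spread over
# several frames. Assumes perfectly even spreading (an idealisation).

def amortized_gi_cost_ms(update_cost_ms, frames_per_update):
    """Average GI cost per frame if one full update is spread over N frames."""
    if frames_per_update < 1:
        raise ValueError("frames_per_update must be at least 1")
    return update_cost_ms / frames_per_update


def remaining_budget_ms(fps, update_cost_ms, frames_per_update):
    """Frame time left for everything else after the amortized GI cost."""
    return 1000.0 / fps - amortized_gi_cost_ms(update_cost_ms, frames_per_update)


HANDHELD_UPDATE_COST_MS = 10.0  # rough handheld estimate from earlier in the thread

for frames in (1, 2, 4):
    left = remaining_budget_ms(60, HANDHELD_UPDATE_COST_MS, frames)
    print(f"GI refreshed every {frames} frame(s) at 60 fps: {left:.1f} ms left per frame")
```

Under these assumptions, halving the GI refresh rate roughly halves its per-frame share, at the price of lighting that reacts more slowly to changes in the scene.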

To put it bluntly, if Nintendo goes this way then we can assume that all games that use RTXGI on the console will run at 30 FPS. Only selected legacy titles will be able to run at 60 FPS, and those probably won't need RTXGI in the first place, or its implementation might prove time-consuming.

You might object that there could in theory be a 40 Hz refresh rate available - should there be a 120 Hz display in the unit - and that would provide some sort of sweet spot for both performance and image quality, right? Well, given Dakhil's comment about the availability of these, we can assume such screens would be no lower than 1080p, which would be more demanding for the GPU if one wants to run a game at native resolution, thus increasing the time needed to render a frame before RTXGI kicks in. Rendering at a lower resolution and upscaling with DLSS would be tricky too, because that also comes with a cost.

You start seeing that there aren't many scenarios in which this implementation of RTXGI in Drake makes a whole lot of sense (at least with these napkin calculations). In short, it is not very flexible.

And in these circumstances, I don't think Nintendo would favour its implementation. A more efficient algorithm would be needed for it to even be taken into account in the hardware design phase.

@NateDrake : about your future podcast (sorry to bring that up again), could you maybe check if this feature is used in any game in development for the succ? I think this could help us narrow expectations regarding the hardware a lot.

> They are used for denoising. They just don’t do RT themselves.

For OptiX, not for games. Denoising is still done on the shader cores.

By the time the switch 2 releases, the old consoles will be ~3 years old. While its raw power will obviously be weaker than its competition, of course it will have newer features they don't have. This isn't even debatable, we already know it has DLSS for example. It can have newer features while still being weaker simply because of raw power output but why would you expect 3 years of time to not confer some advantages in technologies beyond the base specs?

> This thing is in the hands of so many devs and only Nate and Bloomberg have really said anything about it. Really where is the rest of the media at? Nobody else in this industry got anything? Not willing to report anything? Weird

This is why I think it's still a ways away. It's Occam's razor. Are we seeing every single company simultaneously get a tight hold on leaks, or is it just that there's nothing big to leak?

> It can have newer features while still being weaker simply because of raw power output but why would you expect 3 years of time to not confer some advantages in technologies beyond the base specs?

Base specifications are done years in advance, not at the drop of a hat. If a future feature is there on the device, it was something that was planned years ago and at the behest of the client as they found a need for it.

> This is why I think it's still a ways away. It's Occam's razor. Are we seeing every single company simultaneously get a tight hold on leaks, or is it just that there's nothing big to leak?

I like how we had information slipping for years and it’s just chalked up as “nothing big to leak”

> No leaks from gdc?

Anything that leaks from GDC probably won't be reported on for a week or so, both to get further corroboration/clarification and to avoid drawing attention to whatever source it leaked from.

> Base specifications are done years in advance, not at the drop of a hat. If a future feature is there on the device, it was something that was planned years ago and at the behest of the client as they found a need for it.

The information we have is not of an imminently releasing console. It's stuff like vague hardware details (4k) or vague development details (some companies have dev kits). We haven't gotten any specific information from leaks, like specific games that are getting ready for the console. We did get some specific hardware details recently, but this was from a hack, not a leak from some source at a company like Ubisoft or EA or whatever. So from this we can glean that the console is probably still a while away and as a result only the top developers/managers at these companies are intimately aware of the plans involving it.

I like how we had information slipping for years and it’s just chalked up as “nothing big to leak”

We are here now because of this slipped information, not despite the information that has leaked from the cracks.

The OP even has a list of information that was documented that pertains to this.

The NX had its target specs leaked 8 months before release, and the rest remained very clouded until the October presentation. It's pretty normal not to have more than what we already have (which is quite a lot already) when you are 8 months or more away from release.

The kind and scale of leaks you're referring to don't always happen though. In fact they rarely happen for Nintendo consoles. Even after the Switch was announced in 2016 we barely had any leaks about third party games coming, and again this was after the console was announced. We had nothing before.

> Were there any of this kind of leak before the n3DS was announced? Or the DSi? I'd ask about the GBC but of course that was a very different time.

N3DS came out of nowhere and DSi was leaked only two weeks before the announcement. GBC was speculated about since the early '90s, but that speculation was based on nothing. Nintendo knows how to keep a secret.

You’re going to have to show me what games leaked for the PS5 and Series X|S before the consoles even released.

I'm just repeating myself, but the Switch 2 will not be comparable to the Switch in terms of third party content. The Switch followed the Wii U, which was a disaster, and it was on much weaker hardware compared to the competition, which made porting new games a nightmare. So there weren't many third party games released in the early days of the console. In its first few months the Switch had almost no major third party releases, just smaller titles that could be developed for it much quicker. The Switch 2 will be following a very successful console and will be much closer to its competition in power, so I expect a very large number of ports. I find it hard to believe that none of these companies would have leaked anything about games in development for it. It just feels more likely to me that it's far enough away that these companies have been told what the target specs will be so that they can start making plans, but that the actual porting hasn't been started yet. That's when these things leak, once lower level employees who have a whole lot less to lose get their hands on it.

> The information we have is not of an imminently releasing console.

First leaks of console a year ago with confirmation that dev kits have been in place from other insiders

> It's stuff like vague hardware details (4k)

Exact chip base of SoC leaks 8 months ago

> or vague development details (some companies have dev kits). We haven't gotten any specific information from leaks, like specific games that are getting ready for the console

Some companies, specifically including Zynga

> We did get some specific hardware details recently, but this was from a hack, not a leak from some source at a company like Ubisoft or EA or whatever.

Again, Zynga went on record with Bloomberg. Later denials were shown to be crafted around obvious loopholes.

> So from this we can glean that the console is probably still a while away and as a result only the top developers/managers at these companies are intimately aware of the plans involving it.

What we can glean:

Not to mention that data breach and the thing that leaked the actual details of the GPU outright

First leaks of console a year ago with confirmation that dev kits have been in place from other insiders

Nintendo confirms Switch to have roughly 7 year life cycle but game developers are under the impression that this is a revision

Implying a release date in 2022-2023 which insiders seem to confirm

Exact chip base of SoC leaks 8 months ago

Ray Tracing leaks 7 months ago

Nvidia hack confirms all essential details of the hardware, most of which came from the same sources saying that a 2022 release was planned. I think that's pretty key.

Nvidia begins production of chips

Specific companies including Zynga

Who are planning to release these games during late 2022 with Star Wars: Hunters heavily implied to be enhanced on New Switch

Again, Zynga went on record with Bloomberg. Later denials were shown to be crafted around obvious loopholes

What we can glean:

I'm not saying that a release date is guaranteed for this year, but I am saying that I think the data says the opposite of "console is probably still a while away"

- Leakers have consistently said 3 things
  - 4k based on modern DLSS capable NVidia hardware with limited Ray Tracing support
  - Positioned as a revision
  - Late 2022 is target
- Nvidia leak hard confirms 2 things
  - 4k based on modern DLSS capable NVidia hardware with limited Ray Tracing support
  - Software internals are a revision of the Switch
- Heavily implying that leakers are correct about planned release date
  - Which is backed up by Nvidia's announced production schedule
  - And Zynga devs stated release target for their game

Thank you for your dedication and service. You said this way better and more sourced than I ever could.

> I'm not saying that a release date is guaranteed for this year, but I am saying that I think the data says the opposite of "console is probably still a while away"

Well dang.

> With all that info summed up idk why there are those who insist on a 2024 release. That feels late.

Because Nintendo and sales. Neither of which make any sense.

> I wonder if the OLED Switch was originally intended for early 2021 and slipped due to COVID shutdowns impacting manufacturing.

Yeah I've heard that theory a couple times recently and it could make sense. I always found it odd that they launched the OLED model with Metroid Dread of all games, usually they go for something a bit more mass market. Monster Hunter Rise would've made sense.

Not to be pedantic, but Zynga, singular, is the only specified company mentioned. There's a lot of smoke and no need to oversell it.

> Thank you for your dedication and service. You said this way better and more sourced than I ever could.

Credit to @Dakhil for being great about keeping links in the OP I could reference. There is a lot of useful/interesting info there, but I get that for most folks it's too much.

With all that info summed up idk why there are those who insist on a 2024 release. That feels late.

> I was assuming 2023, but yeah, a lot of smoke for at least an original 4Q22 launch target.

To give @BobNintendofan and others some credit here, this thread goes from very speculative to very high level on a whipsaw. It can be hard to separate what is solid and what is speculation. In this particular case, while we have solid hardware knowledge due to the Nvidia hack, you'd have to be following along closely - and be pretty hardware savvy - to see the daisy chain of "Nvidia leak confirms detailed hardware rumors, detailed hardware rumors come from same people saying 2022".

I agree, I'm mostly referring to people who are aware of the timeline and information you've put together but are being pessimistic due to feeling burned by the Switch Pro not coming out in 2021. And by pessimistic, I mean the attitude of "we're definitely not seeing this thing until 2024 and you'd be foolish to believe insiders again." I'm a little tired of that rhetoric.

RT modes on consoles are almost always locked to 30fps modes. There’s just no wiggle room for almost 3ms when all you have is 16.6ms. In 60fps modes on console, the RT usually runs at 1/4 res, so 1080p at 4k.

Don't you read Famiboards or read YT comment sections? Mochi and myself made it all up for clickbait.

First leaks of console a year ago with confirmation that dev kits have been in place from other insiders

Nintendo confirms Switch to have roughly 7 year life cycle but game developers are under the impression that this is a revision

Implying a release date in 2022-2023 which insiders seem to confirm

Exact chip base of SoC leaks 8 months ago

Ray Tracing leaks 7 months ago

Nvidia hack confirms all essential details of the hardware, most of which came from the same sources saying that a 2022 release was planned. I think that's pretty key.

Nvidia begins production of chips

Some companies, includes specifically Zynga

Who are planning to release these games during late 2022 with Star Wars: Hunters heavily implied to be enhanced on New Switch

Again, Zynga went on record with Bloomberg. Later denials were shown to be crafted around obvious loopholes

What we can glean:

I'm not saying that a release date is guaranteed for this year, but I am saying that I think the data says the opposite of "console is probably still a while away"

- Leakers have consistently said 3 things
  - 4k based on modern DLSS capable NVidia hardware with limited Ray Tracing support
  - Positioned as a revision
  - Late 2022 is target
- Nvidia leak hard confirms 2 things
  - 4k based on modern DLSS capable NVidia hardware with limited Ray Tracing support
  - Software internals are a revision of the Switch
- Heavily implying that leakers are correct about planned release date
  - Which is backed up by Nvidia's announced production schedule
  - And Zynga devs stated release target for their game

edited: clarified something, per @Radium

> Don't you read Famiboards or read YT comment sections? Mochi and myself made it all up for clickbait.

I'm pretty sure you just secretly are Mochizuki.

> Don't you read Famiboards or read YT comment sections? Mochi and myself made it all up for clickbait.

Yeah, driving all those clicks to your constantly releasing, but short and info-free podcast. And Mochi selling his soul to work for a low rent fanrag like Bloomberg. Sad.

I think you're just not getting my point.

> I think you're just not getting my point.

What you seem to be ignoring is that the specific types of leaks you're looking for almost never actually happen, especially for Nintendo products.

There are different types of leaks in this context. You have leaks about general, broad things, like the next console being 4k or having raytracing, and you have leaks about specific things, like a Batman collection being developed for the console or a new Donkey Kong game about the Kremlings for example. I have never denied that there have been these more broad general leaks. Consoles are in development for years, so information leaking out about the next console's hardware plans is expected at this point, nothing unusual. We have indeed gotten many of these sorts of leaks. Where we are lacking is in the more specific leaks, of software or locked-in hardware plans (with the exception of the hack, which is not a normal leak and is not indicative of an imminent launch).

The reason why differentiating these types of leaks is important in this context is because broad leaks about hardware plans can happen years in advance, while for example a Batman collection is probably not in development for years before the launch of the console. When that kind of information starts leaking, specific details about the console's launch and the software it will have, that's when I will believe the console is coming soon. I am not going to believe it is coming soon because it leaked that they're using DLSS in it, because they probably started planning that years ago.

Now separately you have had reporters and insiders suggesting a 2021 or 2022 window for the console. So do I think these people are lying? No, I just think their information is out of date. That's something that happens a lot, where leakers or insiders get legitimate, real information, but the information changed either after the source heard it or after they reported it. I strongly believe that the next Nintendo console was delayed, because of a combination of the chip shortage and the expanded demand due to the pandemic. I also think this aligns with what we've seen of the leaked hardware. The leaked hardware is much stronger in multiple ways than what we were all expecting. It's very hard to see how they can get this running at 8nm without severe complications relating to cost, heat, battery life, and size of the console. Occam's razor states that in that case it probably isn't 8nm, despite us hearing that from respected sources, which would point to a delay. If it launches a year and a half later than expected, it's easy to see them simply using a newer process.

We've seen that RT and 60fps are possible, just on a more limited scale. For handheld mode, it might be more limited, but we just don't know yet. Is 360p or even 270p reflections/shadows/AO/etc. a viable tradeoff? Is that even readable in most situations?

RT modes on consoles are almost always locked to 30fps modes. There’s just no wiggle room for almost 3ms when all you have is 16.6ms. When in 60fps modes on console the RT usually has 1/4 res, so 1080p at 4K.

I really don’t see Nintendo using RT personally because it would almost certainly have to be disabled when in portable mode due to power draw. A lot of their games also target 60fps (see the problem above). The cores are there for DLSS.

Let me try and genuinely understand your point, because I think you are actually missing mine as well. No argument, just discussion.

I think you're just not getting my point.

I think I do understand what you're saying. Let me repeat it and see if I do?

There are different types of leaks in this context. You have leaks about general, broad things, like the next console being 4K or having raytracing, and you have leaks about specific things, like a Batman collection being developed for the console or a new Donkey Kong game about the Kremlins, for example.

So, this is where I think you are factually wrong on one point, and I don't agree with your opinion on another.

I have never denied that there have been these more broad, general leaks. Consoles are in development for years, so information leaking out about the next console's hardware plans is expected at this point, nothing unusual. We have indeed gotten many of these sorts of leaks. Where we are lacking is in the more specific leaks, of software or locked-in hardware plans, with the exception of the hack, which is not a normal leak and is not indicative of an imminent launch.

Onboard custom SoCs aren't made years in advance. We know the chip. It was leaked 8 months ago, and the hack confirmed that the leak wasn't bullshit.

The reason why differentiating these types of leaks is important in this context is because broad leaks about hardware plans can happen years in advance, while for example a Batman collection is probably not in development for years before the launch of the console.

Well, obviously, you can believe what you want!

When that kind of information starts leaking, specific details about the console's launch and the software it will have, that's when I will believe the console is coming soon. I am not going to believe it is coming soon because it leaked that they're using DLSS in it, because they probably started planning that years ago.

You believe Nintendo's plans changed in the last 6 months? Because the 2022 window is based on game developers talking to Mochizuki in September.

Now separately you have had reporters and insiders suggesting a 2021 or 2022 window for the console. So do I think these people are lying? No, I just think their information is out of date. That's something that happens a lot, where leakers or insiders get legitimate, real information, but the information changed either after the source heard it or after they reported it.

Do you have evidence of that, or are you just going by your own analysis of the market? Because I don't think it's possible to turn the ship around that late in the game, but I understand your perspective. But if those plans changed early due to these forces, why weren't developers told? Unless Nintendo made that decision literally very recently, or perhaps it was delayed 2 years ago, and the 2022 date is the "later" one.

I strongly believe that the next Nintendo console was delayed, because of a combination of the chip shortage and the expanded demand due to the pandemic.

This doesn't track at all for me. This looks like a stable design, with a complete software stack above it. It doesn't seem like hardware that could plausibly be the product of a late 2020 rethink (which would be the earliest that we could reasonably expect Nintendo to delay based on increased Covid demand), but more importantly, a Covid-based delay would imply that devs with devkits would be sent back to the drawing board.

I also think this aligns with what we've seen of the leaked hardware.

Occam's razor says "leakers have been proven right on every instance, they're probably right about the release date".

The leaked hardware is much stronger in multiple ways than what we were all expecting. It's very hard to see how they can get this running at 8nm without severe complications relating to cost, heat, battery life, and size of the console. Occam's razor states that in that case it probably isn't 8nm, despite us hearing that from respected sources, which would point to a delay. If it launches a year and a half later than expected, it's easy to see them simply using a newer process.

This new model releasing in 2024 would be equivalent to development on the original Switch having been in full swing as of March 2012, or the 3DS as of February 2006. Game system hardware just doesn't/can't take that long to develop.

With all that info summed up idk why there are those who insist on a 2024 release. That feels late.

Shit, they rarely go as far as to use any anti-aliasing on current Switch, let alone something significantly more complicated like FSR2.

If FSR 2.0 is as good as DLSS then Nintendo went through a lot of extra research for nothing, because they could just use FSR right now on the current Switch.

| Module | Processor | CPU cores | CPU frequency | Core configuration ¹ | GPU frequency (MHz) | TFLOPS (FP32) | TFLOPS (FP16) | DL TOPS (INT8) | Bus width | Bandwidth | Availability | TDP in watts |

| Orin 64 (Full T234) | Cortex-A78AE /w 9MB cache | 12 | up to 2.2 GHz | 2048:16:64 (16, 2, 8) | 1300 | 5.32 | 10.649 | 275 | 256-bit | 204.8 GB/s | Dev Kit Q1 2022, Production Oct 2022 | 15-60 |

| Orin 32 (4 CPU & 1 TPC disabled) | Cortex-A78AE /w 6MB cache | 8 | up to 2.2 GHz | 1792:14:56 (14, 2, 7) | 939 | 3.365 | 6.73 | 200 | 256-bit | 204.8 GB/s | Oct 2022 | 15-40 |

| Orin NX 16 (4 CPU & 1 GPC disabled) | Cortex-A78AE /w 6MB cache | 8 | up to 2 GHz | 1024:8:32 (8, 1, 4) | 918 | 1.88 | 3.76 | 100 | 128-bit | 102.4 GB/s | late Q4 2022 | 10-25 |

| Orin NX 8 (6 CPU & 1 GPC disabled) | Cortex-A78AE /w 5.5 MB cache | 6 | up to 2 GHz | 1024:8:32 (8, 1, 4) | 765 | 1.57 | 3.13 | 70 | 128-bit | 102.4 GB/s | late Q4 2022 | 10-20 |

| Module | Processor | CPU cores | CPU frequency | Core configuration ¹ | GPU frequency (MHz) | TFLOPS (FP32) | TFLOPS (FP16) | DL TOPS (INT8) | Bus width | Bandwidth | TDP in watts |

| T239 (Drake) (4-8 CPU & 2 TPC disabled) | Cortex-A78AE /w 3-6MB cache | 4-8 | under 2.2 GHz | 1536:12:48 (12, 2, 6) | under 1300 | under 4 | under 8 | ? | 256-bit | 204.8 GB/s | under 15-40? |

| Devices with known clocks | Processor | CPU cores | CPU frequency (MHz) | Core configuration ¹ | GPU frequency (MHz) | TFLOPS (FP32) | TFLOPS (FP16) | Bus width | Bandwidth | TDP in watts |

| Orin 64 | Cortex-A78AE /w 6MB cache | 8 | 2200 | 1536:12:48 (12, 2, 6) | 1300 | 3.994 | 7.987 | 256-bit | 204.8 GB/s | under 15-60 |

| Orin 32 | Cortex-A78AE /w 6MB cache | 8 | 2200 | 1536:12:48 (12, 2, 6) | 939 | 2.885 | 5.769 | 256-bit | 204.8 GB/s | under 15-40 |

| NX 16 | Cortex-A78AE /w 6MB cache | 8 | 2000 | 1536:12:48 (12, 2, 6) | 918 | 2.820 | 5.640 | 128-bit | 102.4 GB/s | under 10-25 |

| NX 8 | Cortex-A78AE /w 5.5 MB cache | 6 | 2000 | 1536:12:48 (12, 2, 6) | 765 | 2.350 | 4.700 | 128-bit | 102.4 GB/s | under 10-20 |

| Switch docked | Cortex-A78AE /w 3MB cache | 4 | 1020 | 1536:12:48 (12, 2, 6) | 768 | 2.359 | 4.719 | 128-bit | 102.4 GB/s | |

| Switch handheld | Cortex-A78AE /w 3MB cache | 4 | 1020 | 1536:12:48 (12, 2, 6) | 384 | 1.180 | 2.359 | 128-bit | 102.4 GB/s | |

This isn't entirely accurate based on what has come out over the past year or so. Nintendo supposedly actually worked with Nvidia when designing the TX1, so it was at least partially designed for their needs. It wasn't just a stock chip they saw on a shelf and picked. Plus, the theory that Nvidia had a large supply and would sell them for a good price was based fully on speculation and nothing concrete.

Product binning

TLDNR: I think that the Nvidia Orin (T234) chip that we now have VERY clear specs on IS in fact the chip Nintendo will use in the next Switch, by way of an industry practice known as "binning".

1. I still hear talk about how the chip inside the original Switch was a "custom Nvidia chip for Nintendo". This is a lie. In 2017 Tech Insights did their own die shot and proved it was a stock, off-the-shelf Tegra X1 (T210).

Q: Why did Nintendo use this chip and not a custom chip?

A: They were able to get a good price from Nvidia who had a large supply. This same chip was used in multiple products including the Nvidia Shield.

The main issue with this theory from what I can tell is the die size. Orin has a ton of automotive components that would be entirely unnecessary on a gaming console and a waste of silicon, which means a waste of money. Not to mention that this thing is like 4x the size of the TX1 and unlikely to fit in any similar-looking form factor.

2. We need to consider that Nintendo may do the same thing again this time. That is, start with a stock chip and go from there. This would be less expensive and provide what I believe would be the same outcome.

We know that the full Orin (T234) chip is very large at 17 billion transistors. Based on pixel counts of all marketing images provided by Nvidia it could be around 450 mm². (Very much a guess)

3. Too expensive and requiring too much power you say?

- Nvidia has documented the power saving features in their Tegra line that allow them to disable/turn off CPU cores and parts of the GPU. The parts that are off consume zero power.

- A fully enabled T234 with the GPU clocked up to 1.3 GHz, with a board module, sells for $1599 USD at the 1KU list price.

- The fully cut-down T234 model with module (Jetson Orin NX 8GB) sells for $399 USD at the 1KU list price.

Note: As a point of reference, 1.3 years before the Switch released the equivalent Tegra X1 module was announced for $299 USD for 1KU unit list price. ($357 adjusted for inflation)

4. Product binning. From Wikipedia: "Semiconductor manufacturing is an imprecise process, sometimes achieving as low as 30% yield. Defects in manufacturing are not always fatal, however; in many cases it is possible to salvage part of a failed batch of integrated circuits by modifying performance characteristics. For example, by reducing the clock frequency or disabling non-critical parts that are defective, the parts can be sold at a lower price, fulfilling the needs of lower-end market segments."

Companies have gotten smarter and built this into their designs. As an example, the Xbox Series X chip contains 56 CUs but only 52 CUs are ever enabled. This increases the yields for Microsoft, as they are the only customer for these wafers.

Relevant Example #1 - Nvidia Ampere based desktop GPUs:

The GeForce RTX 3080, 3080Ti and 3090 all come from the same GA102 chip with 28.3 billion transistors. Identical chip size and layout, yet their launch prices ranged from $699 to $1499 USD.

After they get made they are sorted into different "bins":

If all 82 SMs are good, then it gets sold as a 3090.

If up to 2 SMs are defective, then it gets sold as a 3080 Ti.

If up to 14 SMs are defective, then it gets sold as a 3080.

The result is that usable yields from each wafer are higher, and fewer chips get thrown into the garbage. (The garbage chips are a 100% loss.)
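The sorting rule in the GA102 example can be sketched in a few lines of Python. This is only an illustration of the idea, not Nvidia's actual test flow: the thresholds are the ones quoted above, while `BINS`, `assign_bin` and `usable_yield` are made-up names for this sketch.

```python
from typing import List, Optional

# (maximum defective units tolerated, product name), best bin first.
# Thresholds are the ones quoted in the post's GA102 example.
BINS = [
    (0, "RTX 3090"),
    (2, "RTX 3080 Ti"),
    (14, "RTX 3080"),
]


def assign_bin(defective_units: int) -> Optional[str]:
    """Return the best product a die qualifies for, or None if it is scrapped."""
    if defective_units < 0:
        raise ValueError("defective_units must be non-negative")
    for max_defective, product in BINS:
        if defective_units <= max_defective:
            return product
    return None  # too many defects for any bin: a 100% loss


def usable_yield(defect_counts: List[int]) -> float:
    """Fraction of dies that land in some sellable bin."""
    if not defect_counts:
        return 0.0
    sold = sum(1 for d in defect_counts if assign_bin(d) is not None)
    return sold / len(defect_counts)


if __name__ == "__main__":
    # Hypothetical defect counts for four dies from one wafer.
    for defects in [0, 1, 5, 20]:
        print(defects, assign_bin(defects))
```

With only the top bin, three of those four hypothetical dies would be scrapped; with all three bins only the last one is.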

Relevant Example #2 - Nvidia Jetson Orin complete lineup, with NEW final specs:

¹ Shader Processors : Ray tracing cores : Tensor Cores (SM count, GPCs, TPCs)

| Module | Processor | CPU cores | CPU frequency | Core configuration ¹ | GPU frequency (MHz) | TFLOPS (FP32) | TFLOPS (FP16) | DL TOPS (INT8) | Bus width | Bandwidth | Availability | TDP in watts |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Orin 64 (Full T234) | Cortex-A78AE /w 9MB cache | 12 | up to 2.2 GHz | 2048:16:64 (16, 2, 8) | 1300 | 5.32 | 10.649 | 275 | 256-bit | 204.8 GB/s | Dev Kit Q1 2022, Production Oct 2022 | 15-60 |
| Orin 32 (4 CPU & 1 TPC disabled) | Cortex-A78AE /w 6MB cache | 8 | up to 2.2 GHz | 1792:14:56 (14, 2, 7) | 939 | 3.365 | 6.73 | 200 | 256-bit | 204.8 GB/s | Oct 2022 | 15-40 |
| Orin NX 16 (4 CPU & 1 GPC disabled) | Cortex-A78AE /w 6MB cache | 8 | up to 2 GHz | 1024:8:32 (8, 1, 4) | 918 | 1.88 | 3.76 | 100 | 128-bit | 102.4 GB/s | late Q4 2022 | 10-25 |
| Orin NX 8 (6 CPU & 1 GPC disabled) | Cortex-A78AE /w 5.5 MB cache | 6 | up to 2 GHz | 1024:8:32 (8, 1, 4) | 765 | 1.57 | 3.13 | 70 | 128-bit | 102.4 GB/s | late Q4 2022 | 10-20 |

You can confirm the above from Nvidia site here, here and here.

Of note, Nvidia shows the SoC in renders of all 4 of these modules as being the identical size. This suggests that they are all cut from wafers with the same 17 billion transistor design, just with more and more disabled at the factory level to meet each product's specs.

The CPU and GPU are designed into logical clusters. During the binning process they can permanently disable parts of the chip along these logical lines that have been established. The disabled parts do not use any power and would be invisible to any software.

Specific to Orin, the above table shows us that they can disable per CPU core as well as per TPC (texture processing cluster). This is important.

The full Orin GPU has 8 TPCs. Each TPC has 2 SMs and 1 PolyMorph Engine. Each SM has 1 2nd-generation Ray Tracing core and is divided into 4 processing blocks that each contain 1 3rd-generation Tensor core, 1 texture unit and 32 CUDA cores.

5. What happens if we take the Orin 32 above, and instead of only disabling 1 TPC, we disable 2 TPCs (You know, for even better yields)? = Answer: Identical values to the leaked Drake/T239 specs!

¹ Shader Processors : Ray tracing cores : Tensor Cores (SM count, GPCs, TPCs)

| Module | Processor | CPU cores | CPU frequency | Core configuration ¹ | GPU frequency (MHz) | TFLOPS (FP32) | TFLOPS (FP16) | DL TOPS (INT8) | Bus width | Bandwidth | TDP in watts |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| T239 (Drake) (4-8 CPU & 2 TPC disabled) | Cortex-A78AE /w 3-6MB cache | 4-8 | under 2.2 GHz | 1536:12:48 (12, 2, 6) | under 1300 | under 4 | under 8 | ? | 256-bit | 204.8 GB/s | under 15-40? |
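The "disable one more TPC" arithmetic from step 5 can be checked with a quick sketch. The per-SM numbers (4 processing blocks, each with 32 CUDA cores and 1 Tensor core) come from the description above; one Ray Tracing core per SM is an assumption, taken from the ratios in the Orin table (16 RT cores across 16 SMs). The function and constant names are made up for illustration.

```python
SMS_PER_TPC = 2
BLOCKS_PER_SM = 4
CUDA_CORES_PER_BLOCK = 32
TENSOR_CORES_PER_BLOCK = 1
RT_CORES_PER_SM = 1  # assumption: implied by 2048:16:64 on the full 16-SM chip

FULL_ORIN_TPCS = 8


def core_config(disabled_tpcs: int) -> str:
    """Return 'CUDA:RT:Tensor' for an Orin die with some TPCs fused off."""
    if not 0 <= disabled_tpcs <= FULL_ORIN_TPCS:
        raise ValueError("disabled_tpcs must be between 0 and 8")
    sms = (FULL_ORIN_TPCS - disabled_tpcs) * SMS_PER_TPC
    cuda = sms * BLOCKS_PER_SM * CUDA_CORES_PER_BLOCK
    rt = sms * RT_CORES_PER_SM
    tensor = sms * BLOCKS_PER_SM * TENSOR_CORES_PER_BLOCK
    return f"{cuda}:{rt}:{tensor}"


if __name__ == "__main__":
    for disabled in (0, 1, 2):
        print(disabled, "TPC(s) disabled ->", core_config(disabled))
```

Disabling 2 TPCs gives the same 1536:12:48 configuration as the leaked Drake specs.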

Now the only thing left is the final clock speeds for Drake, which remain unknown, and then how much Nintendo will underclock, but we can use all the known clocks to give us the most accurate range we have had so far!

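| Devices with known clocks | Processor | CPU cores | CPU frequency (MHz) | Core configuration ¹ | GPU frequency (MHz) | TFLOPS (FP32) | TFLOPS (FP16) | Bus width | Bandwidth | TDP in watts |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Orin 64 | Cortex-A78AE /w 6MB cache | 8 | 2200 | 1536:12:48 (12, 2, 6) | 1300 | 3.994 | 7.987 | 256-bit | 204.8 GB/s | under 15-60 |
| Orin 32 | Cortex-A78AE /w 6MB cache | 8 | 2200 | 1536:12:48 (12, 2, 6) | 939 | 2.885 | 5.769 | 256-bit | 204.8 GB/s | under 15-40 |
| NX 16 | Cortex-A78AE /w 6MB cache | 8 | 2000 | 1536:12:48 (12, 2, 6) | 918 | 2.820 | 5.640 | 128-bit | 102.4 GB/s | under 10-25 |
| NX 8 | Cortex-A78AE /w 5.5 MB cache | 6 | 2000 | 1536:12:48 (12, 2, 6) | 765 | 2.350 | 4.700 | 128-bit | 102.4 GB/s | under 10-20 |
| Switch docked | Cortex-A78AE /w 3MB cache | 4 | 1020 | 1536:12:48 (12, 2, 6) | 768 | 2.359 | 4.719 | 128-bit | 102.4 GB/s | |
| Switch handheld | Cortex-A78AE /w 3MB cache | 4 | 1020 | 1536:12:48 (12, 2, 6) | 384 | 1.180 | 2.359 | 128-bit | 102.4 GB/s | |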

The above table can help you come to your own conclusions, but I can't see Nintendo clocking the GPU higher than Nvidia does in their highest-end Orin product. Also, it's hard to imagine Nintendo going with a docked clock lower than the current Switch. This gives a solid range between 2.4 and 4 TFLOPS of FP32 performance docked for a Switch built on Drake (T239).
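For anyone who wants to redo the math, here is a minimal sketch of how the TFLOPS figures fall out of core count and clock. It uses the standard peak-throughput estimate of 2 FP32 operations per CUDA core per clock (one fused multiply-add). The clocks are the known ones listed in this post; the names `fp32_tflops`, `DRAKE_CUDA_CORES` and `KNOWN_GPU_CLOCKS_MHZ` are made up for this sketch, and FP16 is taken as twice FP32 because that is the ratio the tables use.

```python
DRAKE_CUDA_CORES = 1536  # from the leaked 1536:12:48 configuration

# GPU clocks in MHz, taken from the devices listed in the post.
KNOWN_GPU_CLOCKS_MHZ = {
    "Orin 64": 1300,
    "Orin 32": 939,
    "NX 16": 918,
    "NX 8": 765,
    "Switch docked": 768,
    "Switch handheld": 384,
}


def fp32_tflops(cuda_cores: int, gpu_clock_mhz: float) -> float:
    """Peak FP32 throughput: 2 FLOPs (one FMA) per core per clock."""
    return 2 * cuda_cores * gpu_clock_mhz / 1_000_000


if __name__ == "__main__":
    for device, mhz in KNOWN_GPU_CLOCKS_MHZ.items():
        fp32 = fp32_tflops(DRAKE_CUDA_CORES, mhz)
        # FP16 shown as 2x FP32, matching the ratio used in the tables.
        print(f"{device:16s} {mhz:5d} MHz  FP32 {fp32:.3f}  FP16 {2 * fp32:.3f}")
```

The low end of the range is the current Switch docked clock (768 MHz) and the high end is the top Orin clock (1300 MHz), both applied to 1536 CUDA cores.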

6. What does this mean for the development and production of the DLSS-enabled Switch?

-The Jetson AGX Orin Developer Kit is out now, so everything Nintendo would need to build their own dev kit that runs on real hardware is available now. (not just a simulator) (The Orin Developer Kit allows you to flash it to emulate the Orin NX, so Nintendo would likely be doing something similar.)

- Chip yields are always lowest at the start of manufacturing, and think of all the fully working Orin chips Nvidia needs to put into all their DRIVE systems for cars.

- Now think of how many chips will not make the cut. Either they can't be clocked at full speed and/or some of the TPCs are defective.

- Nvidia will begin to stockpile chips that do not make the Orin 32 cutoff (up to 1 bad CPU cluster and up to 1 bad TPC).

- Note that there is about a 3 month gap between the production availability of the Orin 64 and the NX 8. Binning helps to explain this, as they never actually try to manufacture an NX 8 part; it is just a failed Orin 64 that they binned, stockpiled and then sold.

- This would allow Nintendo to come in and buy a very large volume of binned T234 chips, perhaps in 2023, and put them directly into a new Switch.

- Nintendo can structure the deal so that they are essentially buying Nvidia's off-the-shelf chips, effectively industrial waste, on the cheap.

Custom from day 1 = expensive

Compare this to how much Nvidia would charge Nintendo if instead the chip was truly custom from the ground up. Nintendo gets billed for chip design, chip tapeout, test, and all manufacturing costs. Nintendo would likely be paying the bill at the manufacturing level, meaning the worse the yields are, and the longer it takes to ramp up production, the more expensive it is. The cost per viable custom-built-to-spec T239 chip would be unknown beforehand. Nintendo would be taking on a lot more risk, with the potential for the costs to be much higher than originally projected.

This does not sound like the Nintendo we know. We have seen the crazy things they will do to keep costs low and predictable.

Custom revision down the road = cost savings

Now as production is improved and yields go up, the number of binned chips goes down. As each console generation goes on we expect both supply and demand to increase as well.

This is where there is an additional opportunity for cost savings. It makes sense to have a long-term plan to make a smaller, less expensive version of the Orin chip, one with fewer than 17 billion transistors. Once all the kinks are worked out, the console cycle is in full swing, and you have a large predictable order size, you can go back to Nvidia and the foundry and get a revision made without all the parts you don't need. Known chip, known process, known fab, known monthly order size built in = lower cost per chip.

And the great thing is that the games that run on it don't care which chip it is. The core specs are locked in stone. 6 TPCs, 1536 CUDA cores, 12 2nd-generation Ray Tracing cores, 48 3rd-generation Tensor cores.

Nintendo has already done this, moving the Switch from the T210 chip to the T214 chip.

So what do you all think? Excited to hear all your feedback! I am only human, so if you find any specific mistakes with this post, please let me know.