This is my concern. Granted, camera tech is much better now, but a Switch camera will be a mass-produced, low-end phone camera, which will be compared unfavorably and will age poorly if the device is to last six-plus years.

I'd much prefer the AR camera be packed in with software sold separately.

Switch is a gaming device first and foremost, and AR games are at best gimmicks and curiosities, or are done much better on phones, where people can upgrade every few years.

A dedicated device like the Switch would be

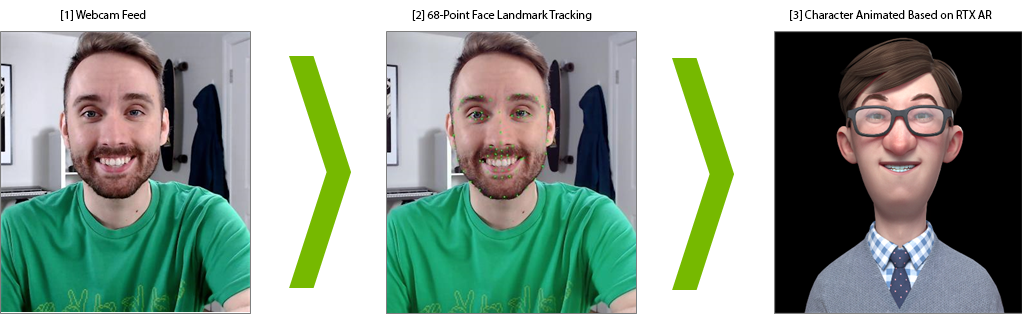

way better for AR than phones. High end phones may have better cameras, but they're overkill for AR (you don't need a 50 Mpx camera to do AR on a ~2 Mpx screen), but most importantly software can't be built with those cameras in mind, because not everyone owns a high-end phone. Phone camera set-ups have a huge amount of variability, and software like Pokemon Go has to be built for the lowest common denominator.

Depth estimation is a particularly important factor here. Even on iOS, where developers can optimise around a much smaller set of hardware configurations, Apple includes its LiDAR depth sensors (actually ToF sensors, but whatever) only on the Pro models, so software obviously can't be built to require them. Other phones use stereo depth estimation, but with mismatched cameras positioned close together the quality isn't great, and it's mostly geared towards fake-bokeh portrait photos rather than AR.
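
To put a number on why closely spaced cameras struggle: in the standard pinhole stereo model, depth is Z = f·B/d (focal length f in pixels, baseline B between the cameras, disparity d in pixels), so a fixed disparity error turns into a depth error that grows with Z² and shrinks with B. Here's a quick Python sketch of that relationship. The focal length, the 0.25 px matching error and both baselines are hypothetical values picked for illustration, not measurements of any real phone or of Switch 2.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Pinhole stereo model: depth Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m: float, focal_px: float, baseline_m: float,
                disparity_error_px: float = 0.25) -> float:
    """First-order depth uncertainty from a disparity error: dZ ~= Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Hypothetical numbers, chosen only for illustration.
FOCAL_PX = 1400.0  # focal length in pixels for a roughly 70-degree-wide 1080p camera
BASELINES = {"phone-style (1 cm apart)": 0.01, "console-style (15 cm apart)": 0.15}

for label, baseline_m in BASELINES.items():
    for z in (1.0, 3.0):
        err_cm = depth_error(z, FOCAL_PX, baseline_m) * 100
        print(f"{label}: depth {z:.0f} m, uncertainty about +/-{err_cm:.1f} cm")
```

Since the error scales with 1/B, the 15 cm pair comes out 15x more precise at every distance in this model, and that's before counting the extra error from mismatched phone cameras, which the model ignores entirely.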

A pair of cheap 1080p cameras on the back of Switch 2 would provide better depth estimation than almost any phone, at much lower cost. Part of the reason would simply be the use of matched cameras placed much farther apart than is feasible on phones. The more important part, though, is the tensor core performance of T239 and Nvidia's expertise in depth estimation from the automotive side of their business. Most stereo disparity estimation uses a relatively simple mathematical approach that is easy to implement but tends to be noisy and gets caught up on small details; this approach is actually implemented in hardware in the OFA (Optical Flow Accelerator) on T239 and other Nvidia chips.
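
For a feel of what a "relatively simple mathematical approach" looks like, here's naive block matching: for each pixel in the left image, slide a small window along the same row of the right image and keep the shift with the lowest sum of absolute differences (SAD). It's a minimal numpy sketch of the classic textbook method, not a claim about the algorithm in the OFA hardware, and the window size and disparity range are arbitrary.

```python
import numpy as np


def block_match(left: np.ndarray, right: np.ndarray, max_disp: int = 16,
                half_win: int = 3) -> np.ndarray:
    """Naive local stereo matching on rectified grayscale images.

    For each left pixel (x, y), test shifts d = 0..max_disp and keep the one whose
    right-image window centred at (x - d, y) has the lowest SAD. Border pixels that
    can't fit a full window are left at 0."""
    h, w = left.shape
    left = left.astype(np.float32)
    right = right.astype(np.float32)
    disparity = np.zeros((h, w), dtype=np.int32)
    for y in range(half_win, h - half_win):
        for x in range(half_win + max_disp, w - half_win):
            patch = left[y - half_win:y + half_win + 1, x - half_win:x + half_win + 1]
            costs = [
                np.abs(patch - right[y - half_win:y + half_win + 1,
                                     x - d - half_win:x - d + half_win + 1]).sum()
                for d in range(max_disp + 1)
            ]
            disparity[y, x] = int(np.argmin(costs))
    return disparity


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    left = rng.random((40, 60))           # random texture stands in for a real scene
    right = np.roll(left, -5, axis=1)     # right view: everything shifted 5 px left
    disp = block_match(left, right)
    print(np.unique(disp[3:-3, 19:-3]))   # the recovered shift in the valid region
```

Because each pixel is decided from its own little window in isolation, it's easy to see where the noise comes from: textureless regions, repeated patterns and thin structures all produce ambiguous or misleading costs.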

If you've got lots of ML performance, though, which T239 has, you can do much better by using a neural network to perform stereo estimation. Nvidia have been researching this for years, with this paper from 2018 describing a technique that's usable on the Jetson TX2 (even in handheld mode, T239 should have at least 10x the ML performance of the TX2 thanks to its tensor cores). Nintendo could do much better, though.
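
One building block that shows up in many learned stereo networks is a cost (or correlation) volume over candidate disparities, followed by a softmax-weighted average rather than a hard argmin, which keeps the output smooth, sub-pixel and trainable end to end. The sketch below is a generic numpy illustration of that idea, not the method from the Nvidia paper; random unit vectors stand in for the features a trained CNN would produce, and the temperature is an arbitrary choice.

```python
import numpy as np


def correlation_volume(feat_l: np.ndarray, feat_r: np.ndarray, max_disp: int) -> np.ndarray:
    """Dot-product correlation for every candidate disparity.

    feat_l, feat_r: (C, H, W) feature maps. Returns (max_disp, H, W). Entries where
    x - d falls outside the right image get a large negative score so they're never picked."""
    _, h, w = feat_l.shape
    vol = np.full((max_disp, h, w), -1e9)
    for d in range(max_disp):
        vol[d, :, d:] = (feat_l[:, :, d:] * feat_r[:, :, :w - d]).sum(axis=0)
    return vol


def soft_argmax_disparity(vol: np.ndarray, temperature: float = 0.02) -> np.ndarray:
    """Differentiable disparity: softmax over the disparity axis, then the expected value."""
    logits = vol / temperature
    logits -= logits.max(axis=0, keepdims=True)  # subtract the max for numerical stability
    prob = np.exp(logits)
    prob /= prob.sum(axis=0, keepdims=True)
    disparities = np.arange(vol.shape[0]).reshape(-1, 1, 1)
    return (prob * disparities).sum(axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Random unit-length 64-d vectors stand in for learned per-pixel features.
    feat_l = rng.standard_normal((64, 20, 40))
    feat_l /= np.linalg.norm(feat_l, axis=0, keepdims=True)
    feat_r = np.roll(feat_l, -3, axis=2)  # right view shifted by a true disparity of 3 px
    disp = soft_argmax_disparity(correlation_volume(feat_l, feat_r, max_disp=8))
    print(disp[:, 8:].mean())
```

In a real network the features, and usually a few layers that refine the cost volume, are learned from data, which is where the gains over hand-crafted window costs come from.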

For one thing, every use of stereo disparity estimation I've come across (including this one from Nvidia) takes in full RGB images. This seems sensible, but cameras don't actually capture full RGB images. Each pixel on a camera sensor has a red, green or blue filter in front of it, typically arranged in 2x2 grids with two green, one red and one blue pixel (known as a Bayer pattern). So if you have, let's say, a 20 Mpx camera, it actually has 10 million green pixels, 5 million red pixels and 5 million blue pixels. To get a full-colour image with RGB values for every pixel, software (or hardware) interpolates between the different colours, using a technique called debayering or demosaicing. Further processing then applies noise reduction before the picture is displayed or sent elsewhere.
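
Here's a small numpy sketch of that pipeline, assuming an RGGB layout (sensors also come in GRBG, BGGR and GBRG variants) and plain bilinear interpolation, which is the simplest demosaicing method; real camera pipelines use considerably smarter ones. It simulates what the sensor records, then fills in the two missing colours at every photosite by averaging neighbours.

```python
import numpy as np


def bayer_masks(h: int, w: int):
    """Boolean masks for an RGGB Bayer layout (red at even row / even column)."""
    y, x = np.mgrid[0:h, 0:w]
    red = (y % 2 == 0) & (x % 2 == 0)
    blue = (y % 2 == 1) & (x % 2 == 1)
    green = ~(red | blue)
    return red, green, blue


def mosaic(rgb: np.ndarray) -> np.ndarray:
    """Simulate a sensor: keep only the one colour each photosite actually measures."""
    red, green, blue = bayer_masks(*rgb.shape[:2])
    return rgb[..., 0] * red + rgb[..., 1] * green + rgb[..., 2] * blue


def filter3x3(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """3x3 filtering with mirror padding (mirroring keeps the Bayer phase intact at edges)."""
    h, w = img.shape
    padded = np.pad(img, 1, mode="reflect")
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def demosaic_bilinear(raw: np.ndarray) -> np.ndarray:
    """Fill each missing colour sample with the average of its nearest measured neighbours."""
    red, green, blue = bayer_masks(*raw.shape)
    k_rb = np.array([[1, 2, 1], [2, 4, 2], [1, 2, 1]]) / 4.0  # red/blue: 2 or 4 neighbours
    k_g = np.array([[0, 1, 0], [1, 4, 1], [0, 1, 0]]) / 4.0   # green: 4 direct neighbours
    return np.stack([filter3x3(raw * red, k_rb),
                     filter3x3(raw * green, k_g),
                     filter3x3(raw * blue, k_rb)], axis=-1)


if __name__ == "__main__":
    red, green, blue = bayer_masks(4, 6)
    print(red.sum(), green.sum(), blue.sum())  # half the photosites are green
    flat = np.ones((6, 8, 3)) * np.array([0.2, 0.5, 0.7])
    print(np.allclose(demosaic_bilinear(mosaic(flat)), flat))  # a flat colour survives
```

The demosaiced output has three values per pixel, but every interpolated value is just a weighted average of values the sensor already recorded.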

The issue is that debayering and noise reduction just make the job of a neural network harder. The debayering step is simple interpolation, so it adds data without any of it being actually useful to the neural network: the network gets 3x as much data, but no more usable information. The noise reduction step then potentially destroys information that might be useful to the neural network. So a debayered and denoised image requires a neural network to process much more data while getting less usable information out of it than it would from the raw sensor data. ML-based solutions are very good at extracting detail from raw camera data. I use software called DxO PureRAW for my photography, and it produces far better results than any conventional debayering and denoising software I've ever used.
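
A common way to feed raw data to a network without debayering is to split the mosaic into four half-resolution planes (R, G, G, B). This minimal sketch assumes an RGGB sensor and a hypothetical 12-bit 1080p frame; it shows that the packed tensor holds exactly as many values as the sensor recorded, while a debayered RGB frame would hold three times as many.

```python
import numpy as np


def pack_rggb(raw: np.ndarray) -> np.ndarray:
    """(H, W) RGGB mosaic -> (4, H/2, W/2) planes. No interpolation, nothing thrown away."""
    h, w = raw.shape
    if h % 2 or w % 2:
        raise ValueError("expected even height and width")
    return np.stack([raw[0::2, 0::2],   # R
                     raw[0::2, 1::2],   # G on red rows
                     raw[1::2, 0::2],   # G on blue rows
                     raw[1::2, 1::2]])  # B


def unpack_rggb(planes: np.ndarray) -> np.ndarray:
    """Inverse of pack_rggb, to show that the packing is lossless."""
    _, h2, w2 = planes.shape
    raw = np.empty((2 * h2, 2 * w2), dtype=planes.dtype)
    raw[0::2, 0::2], raw[0::2, 1::2] = planes[0], planes[1]
    raw[1::2, 0::2], raw[1::2, 1::2] = planes[2], planes[3]
    return raw


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    raw = rng.integers(0, 4096, size=(1080, 1920), dtype=np.uint16)  # hypothetical 12-bit frame
    planes = pack_rggb(raw)
    print(planes.shape)                               # four 540 x 960 planes
    print(planes.size == raw.size)                    # same number of values as the sensor
    print(3 * raw.size // planes.size)                # a debayered RGB frame carries 3x as many
    print(np.array_equal(unpack_rggb(planes), raw))   # round trip is exact
```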

By single-sourcing a pair of camera modules, Nintendo and Nvidia could create a neural stereo depth estimator that works on raw sensor data from cameras with known noise characteristics, which would put them in a better position than almost any other AR product. I would also expect significant further benefits from moving from a purely spatial approach to depth estimation to a spatio-temporal one. Just as DLSS 2 produces much better and more stable results than purely spatial upscalers by incorporating data from previous frames, depth estimation would benefit significantly from incorporating temporal data. I've seen papers that use optical flow as part of depth estimation, but no ML-based implementations that use a temporal approach such as a recurrent neural net. It seems like a pretty obvious win to me: feed in data from the previous frame(s) along with gyroscope and accelerometer data to determine movement (potentially with optical flow as well), and you should get a more stable output that's better at resolving small details.
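
As a rough illustration of why temporal data helps, here's a classical per-pixel Kalman filter that blends each new depth map into a running estimate. This is a much simpler stand-in, not the recurrent-network approach described above: it assumes a static scene and that the previous estimate has already been reprojected into the current camera pose (for example from gyroscope and accelerometer data), a step that isn't shown. The noise level and `process_var` are hypothetical tuning values.

```python
import numpy as np


def fuse_depth(prev_depth, prev_var, new_depth, new_var, process_var=1e-4):
    """One per-pixel Kalman update of a depth map (depths in metres, variances in m^2).

    ASSUMPTION: prev_depth / prev_var were already warped into the current camera pose;
    that motion-compensation step (e.g. from IMU data) is not implemented here."""
    predicted_var = prev_var + process_var            # allow for some change between frames
    gain = predicted_var / (predicted_var + new_var)  # lean on history when it's confident
    fused = prev_depth + gain * (new_depth - prev_depth)
    fused_var = (1.0 - gain) * predicted_var
    return fused, fused_var


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    truth = np.full((64, 64), 2.0)  # a static wall 2 m away
    sigma = 0.05                    # hypothetical per-frame depth noise, metres
    depth = truth + rng.normal(0, sigma, truth.shape)
    var = np.full(truth.shape, sigma ** 2)
    for _ in range(30):
        measurement = truth + rng.normal(0, sigma, truth.shape)
        depth, var = fuse_depth(depth, var, measurement, sigma ** 2)
    print(f"single-frame error: {np.std(measurement - truth):.4f} m")
    print(f"fused error:        {np.std(depth - truth):.4f} m")
```

Raising `process_var` makes the filter respond faster to real changes (moving objects, errors in the reprojection) at the cost of less smoothing. A learned temporal model could, in principle, make that trade-off per pixel rather than with one global knob.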

Another big factor is simply the performance of Switch 2 relative to its screen resolution. Even with a bump to 1080p, the per-pixel performance of Switch 2 would be way higher than that of, say, the Apple Vision Pro, which is more powerful but has to drive displays with 10 times as many pixels, and at a higher frame rate to boot. This allows for more impressive graphics, but it also makes it easier to do things like estimate lighting conditions within the scene, so that rendered elements feel like they're actually in the environment rather than crudely photoshopped on top. This was one of the things journalists were impressed by when demoing the Apple Vision Pro, and it's also a problem that's well suited to machine learning.
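
A quick back-of-the-envelope check of the per-pixel argument. The Switch 2 figures are assumptions (a 1080p screen at 60 Hz); the Vision Pro figures are Apple's stated ~23 million pixels across both displays, at its lowest listed refresh rate of 90 Hz.

```python
# Assumed Switch 2 figures (speculative): 1080p screen, 60 Hz.
SWITCH2_PIXELS = 1920 * 1080
SWITCH2_HZ = 60

# Apple Vision Pro: ~23 million pixels total across both displays, 90 Hz (lowest listed rate).
VISION_PRO_PIXELS = 23_000_000
VISION_PRO_HZ = 90

pixel_ratio = VISION_PRO_PIXELS / SWITCH2_PIXELS
throughput_ratio = (VISION_PRO_PIXELS * VISION_PRO_HZ) / (SWITCH2_PIXELS * SWITCH2_HZ)

print(f"Vision Pro pixels per frame:  {pixel_ratio:.1f}x Switch 2")
print(f"Vision Pro pixels per second: {throughput_ratio:.1f}x Switch 2")
```

With these numbers it prints 11.1x and 16.6x, so under these assumptions Vision Pro's GPU would need to be roughly 16x faster than Switch 2's just to spend the same amount of compute on each pixel it draws.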

Finally, and most importantly, a Switch 2 with AR capabilities would have Nintendo developing games for it. With Labo they've shown how interested in AR they still are, not to mention how they can bring new things to the table. I'd be excited to see what Nintendo's developers could do with class-leading AR hardware.