Right.

PG: Because it wasn't mainstreamed in the processor. It was sort of, "Hey, for some of these use cases over here". Well, now they get it, and all these things are driving us to be better. So in some ways, in a not very subtle manner, I've unleashed market forces to break down some of the NIH of the Intel core development machine, and that is part of this IFS making IDM better.

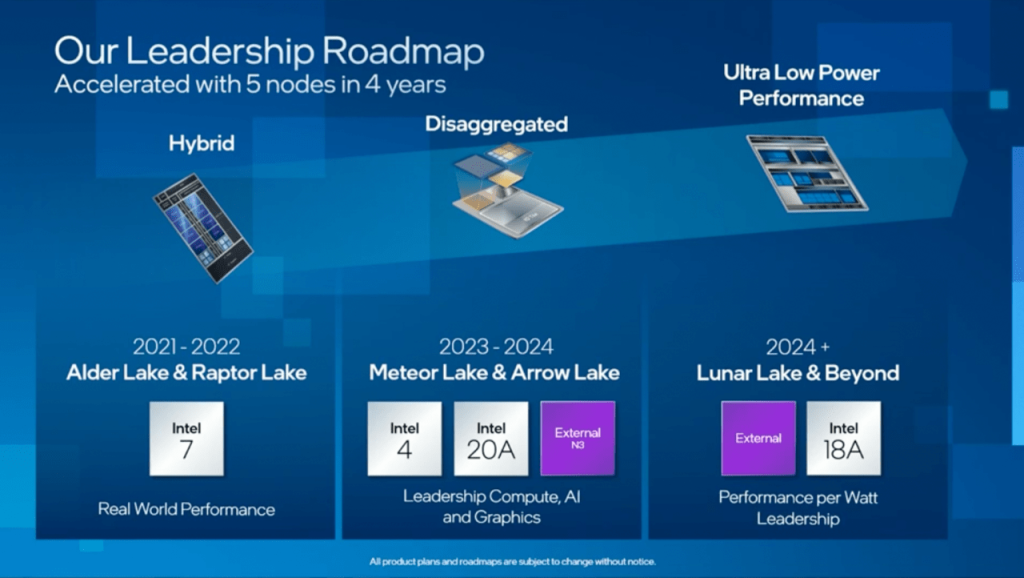

Yeah, that makes a lot of sense. You had this uber-aggressive roadmap, five nodes in four years, and I have two questions on that. The first one goes back to something you mentioned before about Apple being a partner to TSMC in getting to that next node and how important that was for TSMC. I think I noted that in this new Tick-Tock strategy, Tick-Tock 2.0, Intel's playing that role where either the tick or the tock is Intel pushing it and then the tock is opened up to your customers. I take it that's an example of how Intel being the same company really benefits itself, that you get to play the Apple role that Apple did for TSMC, you just get to play it for yourself.

PG: Yeah, well stated. Now let's say, because I'm expecting Intel 18A to be a really good foundry process technology (I'm not opposed to customers using 20A), but for the most part, the tick, that big honking change to the process technology, most customers don't want to go through the pain of that on the front end. So usually my internal design teams drive those breakthrough, painful, early-line kind of things, which is very much like the Apple role that TSMC benefited from as well. Now, if Apple would show up and say, "Hey, I want to do something in 20A", I'd say yes.

Come on in!

PG: If you list them, there are ten companies that can play that role — Qualcomm, Nvidia, AMD, MediaTek, Apple, that are really driving those front end design cycles as well, and if one of them wanted to do that on Intel 4, I'd do it, but I expect Intel 3 will be a better node for most of the foundry customers, like Intel 18A will be a better node for most of the foundry customers as well.