> How long is it since devkits for Dane have been out? 8 months? Is it unusual to not have any leaks on specs at this point? Comparing to Sony and MS? I know NDAs are in place and professional devs aren’t looking to jeopardize their careers for internet clout. But I figured we’d get something at this point, even if it’s not final.

10 months. I wouldn't be surprised if everyone's waiting for the hammer to fall. It might be this holiday, when people go on vacation and tracking is harder, but by the end of March I expect damn near all the specs to leak.

-

Hey everyone, staff have documented a list of banned content and subject matter that we feel are not consistent with site values, and don't make sense to host discussion of on Famiboards. This list (and the relevant reasoning per item) is viewable here.

-

Furukawa Speaks! We discuss the announcement of the Nintendo Switch Successor and our June Direct Predictions on the new episode of the Famiboards Discussion Club! Check it out here!


StarTopic Future Nintendo Hardware & Technology Speculation & Discussion |ST| (New Staff Post, Please read)

- Thread starter Dakhil


Recent threadmarks

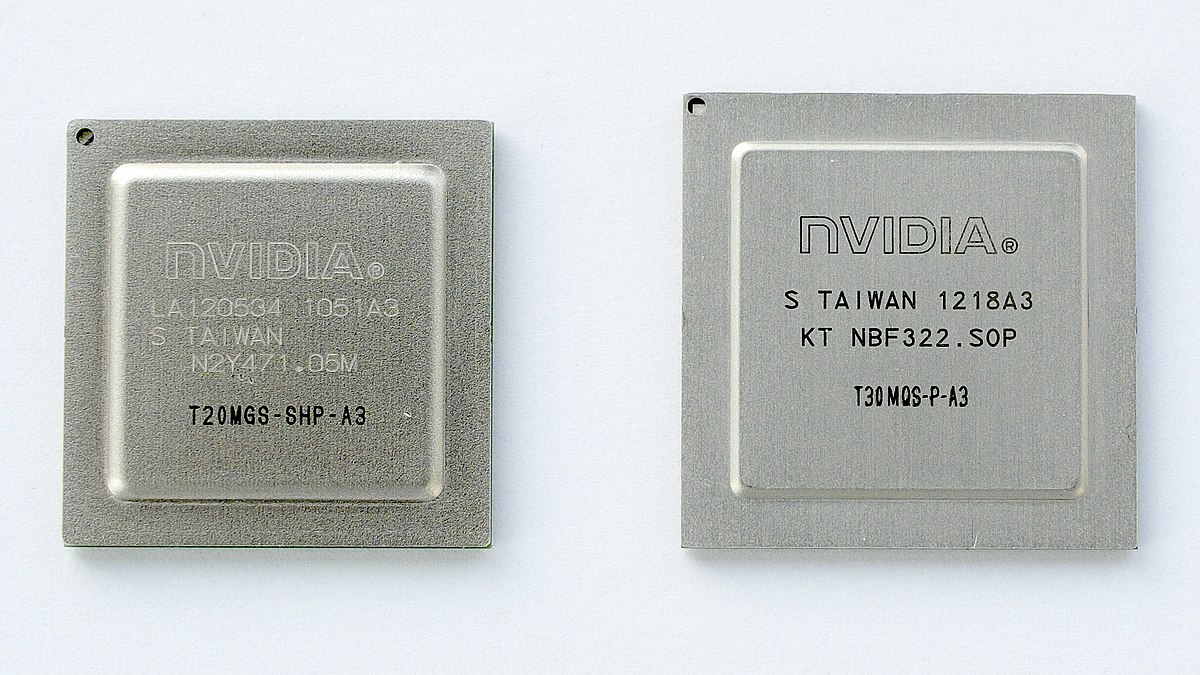

- Poll #3: When do you think is the launch window for Nintendo's new hardware?
- Announcement regarding links to news and rumours from 2022 and 2021
- Rough summary of the 2 August 2023 episode of Nate the Hate
- Rough summary of the 11 September 2023 episode of Nate the Hate
- Anatole's deep dive into a convolutional autoencoder
- Differences between T234 and T239
- Rough summary of the 19 October 2023 episode of Nate the Hate
- necrolipe's Twitter (X) post on why a 2025 launch doesn't automatically make T239 outdated based on one of the leaked slides from Microsoft

lemonfresh

#Team2024

- Pronouns

- He/Him

The Nintendo super dane

Hyrulean

Tektite

- Pronouns

- He/Him

> The Nintendo super dane

Purple belt 9th dane

- Pronouns

- He/Him

I just found out Dane Whitman is going to be in The Eternals movie and now I'll be giggling every time he's on screen, thanks guys.

SpringwoodSlasher

Tektite

All I ask is that Nintendo keeps the Switch branding. Is that too much to ask?…

ArchedThunder

Uncle Beerus

- Pronouns

- He/Him

January

reiterated in July

Second question, if this is the case why hasn’t Nvidia said anything about Orin not being Ampere yet? Are they waiting for the 4000 series GPUs to be announced first?

- Pronouns

- He/Him

> I guess this is sorta on topic, but when this "Dane" model releases, how would Nintendo avoid the marketing problems and confusion that they had with the Wii U?

Actually, marketing it as a console in the first trailer would help.

> Second question, if this is the case why hasn’t Nvidia said anything about Orin not being Ampere yet? Are they waiting for the 4000 series GPUs to be announced first?

Orin might be brought up next month at GTC.

> I guess this is sorta on topic, but when this "Dane" model releases, how would Nintendo avoid the marketing problems and confusion that they had with the Wii U?

A really good name, a unique look, and exclusives (probably from third parties).

- Pronouns

- He/Him

> I guess this is sorta on topic, but when this "Dane" model releases, how would Nintendo avoid the marketing problems and confusion that they had with the Wii U?

This is anecdotal, but a couple of my friends who worked at GameStop during the Wii U launch literally did not even know it was a new system. They thought it was a tablet accessory for the Wii and said most customers thought the same. And by the time it became more widely obvious to people what it was, there were hardly any games for it.

I do not think the Dane model will have either of those two problems, neither the confusion about whether it's an accessory or a system, nor the lack of a game library.

ArchedThunder

Uncle Beerus

- Pronouns

- He/Him

> I guess this is sorta on topic, but when this "Dane" model releases, how would Nintendo avoid the marketing problems and confusion that they had with the Wii U?

A good name, clear marketing, the benefits of the hardware put on display, and good software.

bloopland33

Like Like

I haven't seen this brought up in this thread yet, and I'm sure you've all seen it, but the door is apparently not closed on Square bringing KH to Switch natively. @Dakhil, maybe you're right and we will see those games crop up on Dane.

Hopefully in a year's time or so it will be much easier for them if they decide to go through with it...


YolkFolk

Tingle

> I guess this is sorta on topic, but when this "Dane" model releases, how would Nintendo avoid the marketing problems and confusion that they had with the Wii U?

Switch U

How the hell we went from "Switch Pro exists, it's like Switch but 4K"

To "actually it's not a Pro, it's Switch 2, just like Switch but 4K"

To "actually Switch 2 will change from Switch and it won't be BC"

????

> I haven't seen this brought up in this thread yet, and I'm sure you've all seen it, but the door is apparently not closed on Square bringing KH to Switch natively. @Dakhil, maybe you're right and we will see those games crop up on Dane.
>
> Hopefully in a year's time or so it will be much easier for them if they decide to go through with it...

This feels like the same issue that Metroid had, where they announced a spin-off or undesirable game without a primary game. The cloud version has had a pretty vitriolic reaction, but if it had come with the announcement of a native Dane version, it would have been better received.

- Pronouns

- He/Him

> Switch U

I've seen the name "Switch Up" suggested, and while I don't expect it, I seriously wouldn't put it past them.

They could put the "Up" in a little blue square beside the original logo and everything.

Dr_Phishshoe

Devil's Advocate

> I've seen the name "Switch Up" suggested and while I don't expect it I seriously wouldn't put it past them. They could put the "Up" in a little blue square beside the original logo and everything.

Did someone say logos? I made this stupid thing a while ago.

- Pronouns

- He/Him

> How the hell we went from
>
> To
>
> To
>
> ????

I'm not sure what you mean. We always knew the SoC was different from the original Switch and that some third parties were working on exclusives for it. The only things shifting in discussion over the last year were the expected launch period, naming, and marketing.

Very little chance it doesn't have BC.

bloopland33

Like Like

> This feels like the same issue that Metroid had, where they announced a spin-off or undesirable game without a primary game. The cloud version has had a pretty vitriolic reaction, but if it had come with the announcement of a native Dane version, it would have been better received.

Good point. I'm fine waiting this long for it to hit the platform if this is what allows them to come over at 1080p/60fps (only referring to 1.5+2.5 here). Maybe they'd have come up short somewhere if they'd been ported ages ago, who knows.

Marce-chan

Magical Girls <3

- Pronouns

- They/Them

> How long is it since devkits for Dane have been out? 8 months? Is it unusual to not have any leaks on specs at this point? Comparing to Sony and MS? I know NDAs are in place and professional devs aren’t looking to jeopardize their careers for internet clout. But I figured we’d get something at this point, even if it’s not final.

Since late 2020 per Nate, so almost a year, if not a year already.

> Good point. I'm fine waiting this long for it to hit the platform if this is what allows them to come over at 1080p/60fps (only referring to 1.5+2.5 here). Maybe they'd have come up short somewhere if they'd been ported ages ago, who knows.

Frame rate would have been the first to go, but the game is pretty light on assets. There's not much to scale down, given that they are remasters.

Marce-chan

Magical Girls <3

- Pronouns

- They/Them

> All I ask is that Nintendo keeps the Switch branding. Is that too much to ask?…

There's literally no way it's not called Nintendo Switch something or something Nintendo Switch.

Don't worry.

ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

Add to it the graphs that I made comparing their performance, and it muddies the waters. It’s possible that they trained more than one network, which they use for the automotive AI business, and it just so happened to work for the gaming side. In automotive, I can see why they would train more than one for its purposes, since it is a very tightly regulated industry. And considering that their SoCs have tensor cores on top of a dedicated DLA (Deep Learning Accelerator), it could be that they have more than one trained for these occasions.

The post that I am imagining in my head estimated the time for DLSS to run on 2-3 potential Dane configurations in ms. TBH, I may be remembering a composite of various related posts.

With that said, I replied to this Thraktor post at the time, and the results still puzzle me. In theory, the cost of DLSS should marginally increase with input resolution, since the earliest layers in the neural network are at input resolution. However, that cost should be fairly negligible (less than 10% of the total cost in the Kaplanyan/Facebook paper) compared to the reconstruction cost at output resolution.
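To make the input-versus-output-resolution argument concrete, here is a rough cost model: a minimal sketch, assuming a purely hypothetical network in which a couple of convolution layers run at input resolution and the rest run at output resolution. The layer sizes are made up for illustration and are not the real DLSS (or Kaplanyan/Facebook) architecture; the only point is how each group's cost scales with pixel count.

```python
# Rough multiply-accumulate (MAC) model for a HYPOTHETICAL upscaling CNN.
# Layer shapes are illustrative assumptions, not the real DLSS network.

# (in_channels, out_channels, kernel_size) for layers run at INPUT resolution
EARLY = [(12, 32, 3), (32, 32, 3)]
# (in_channels, out_channels, kernel_size) for layers run at OUTPUT resolution
LATE = [(32, 32, 3), (32, 32, 3), (32, 3, 3)]


def conv_macs(width, height, c_in, c_out, k):
    """MACs for one k x k convolution applied at every pixel of a width x height map."""
    return width * height * c_in * c_out * k * k


def cost_split(in_res, out_res, early=EARLY, late=LATE):
    """Return (input-res MACs, output-res MACs, input-res share of the total)."""
    w_in, h_in = in_res
    w_out, h_out = out_res
    early_macs = sum(conv_macs(w_in, h_in, ci, co, k) for ci, co, k in early)
    late_macs = sum(conv_macs(w_out, h_out, ci, co, k) for ci, co, k in late)
    return early_macs, late_macs, early_macs / (early_macs + late_macs)


if __name__ == "__main__":
    for label, in_res in [("1080p", (1920, 1080)), ("1440p", (2560, 1440))]:
        early, late, share = cost_split(in_res, (3840, 2160))
        print(f"{label} -> 4K: input-res share {share:.1%}, total {(early + late) / 1e9:.1f} GMACs")
```

In this toy model the output-resolution layers cost exactly the same for both inputs, so raising the input from 1080p to 1440p only grows the smaller, input-resolution term. Under these assumptions the total can rise slightly with input resolution but cannot fall, which is why a drop would hint at the modes being configured differently.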

With that in mind, it doesn’t make sense to me that the DLSS compute time should decrease as input resolution increases from 1080p to 1440p. Assuming Thraktor’s tests were controlled correctly (more on that below), the only explanation that I can think of is that Performance, Ultra Performance, and Quality modes already use somewhat different network architectures.

The way that a CNN works is independent of resolution because the weights are shared. That concept (parameter sharing) is one of the main advantages of CNNs, because it means there are fewer weights to train. Besides, from an image processing perspective, it would be a poor choice to use different weights for each pixel position, since the information at those pixel positions is different in each frame. So instead you train a filter, often a 3x3 or 5x5 pixel filter, that slides over each pixel in the frame.
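A minimal pure-Python sketch of parameter sharing, assuming a single-channel image and one 3x3 filter. Like most deep-learning libraries, it actually computes cross-correlation, and it zero-pads the border so the output keeps the input size. The same nine weights are applied at every pixel, so the identical filter works on an image of any size; the function names are illustrative only.

```python
def conv2d_same(image, kernel):
    """Slide ONE shared k x k kernel over every pixel; zero padding keeps the output size.

    Computes cross-correlation, the operation CNN libraries call "convolution".
    """
    k = len(kernel)
    r = k // 2
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in range(k):
                for dx in range(k):
                    yy, xx = y + dy - r, x + dx - r
                    if 0 <= yy < h and 0 <= xx < w:  # pixels outside the frame count as 0
                        acc += kernel[dy][dx] * image[yy][xx]
            out[y][x] = acc
    return out


def shared_weight_count(kernel):
    """Trainable weights in the filter: independent of the image size."""
    return sum(len(row) for row in kernel)


def per_pixel_weight_count(h, w):
    """Weights a fully connected (non-shared) layer needs to map h*w pixels to h*w pixels."""
    return (h * w) ** 2


if __name__ == "__main__":
    blur = [[1 / 9] * 3 for _ in range(3)]
    small = [[float(x + y) for x in range(6)] for y in range(4)]
    large = [[float(x * y) for x in range(12)] for y in range(8)]
    for img in (small, large):
        out = conv2d_same(img, blur)
        print(len(out), "x", len(out[0]), "output, shared weights:", shared_weight_count(blur),
              "vs per-pixel weights:", per_pixel_weight_count(len(img), len(img[0])))
```

The shared filter stays at 9 weights for both images, while the non-shared alternative grows with the square of the pixel count, which is the "fewer weights to train" advantage in numbers.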

In theory, since you have parameter sharing, you could use the same neural network for each mode. It would be a conscious decision on Nvidia’s part to train a different network for each mode to improve either cost or image quality. If this were true, Thraktor’s results suggest that Performance mode may use a deeper, more computationally expensive network than Quality mode.

This would make the names something of a misnomer, although it does make a certain amount of sense - for example, it makes intuitive sense that you would need a deeper network to reconstruct a “4K” looking image from 720p frames than from 1080p frames.

This doesn’t fully account for what happens when DRS and DLSS are combined. For example, which network would be used when scaling from 900p to 4K - performance or ultra performance? I am not sure.
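One way to frame the 900p question is to compute the per-axis upscale factor and see which preset factors it falls between. The factors below are the commonly cited DLSS 2.x render scales (Quality about 67%, Balanced about 58%, Performance 50%, Ultra Performance about 33% per axis), treated here as assumptions. Whether DLSS actually switches configurations at these boundaries is exactly the open question, so this sketch only shows where 900p sits, not which network runs.

```python
# Per-axis upscale factors (output height / render height) of the DLSS 2.x presets.
# These are the commonly cited render scales; treat them as assumptions.
MODE_SCALE = {
    "Quality": 1.5,            # ~66.7% per axis, e.g. 1440p -> 2160p
    "Balanced": 1 / 0.58,      # ~58% per axis
    "Performance": 2.0,        # 50% per axis, e.g. 1080p -> 2160p
    "Ultra Performance": 3.0,  # ~33.3% per axis, e.g. 720p -> 2160p
}


def bracketing_modes(input_height, output_height, modes=MODE_SCALE):
    """Return (upscale factor, nearest preset at or below it, nearest preset at or above it)."""
    factor = output_height / input_height
    ordered = sorted(modes.items(), key=lambda item: item[1])
    below = [name for name, scale in ordered if scale <= factor]
    above = [name for name, scale in ordered if scale >= factor]
    return factor, (below[-1] if below else None), (above[0] if above else None)


if __name__ == "__main__":
    for h in (1440, 1080, 900, 720):
        factor, lower, upper = bracketing_modes(h, 2160)
        print(f"{h}p -> 2160p: x{factor:.2f}, between {lower} and {upper}")
```

A 900p-to-4K frame lands at a 2.4x factor, strictly between the Performance and Ultra Performance presets, which is why it is unclear which configuration would be used if the modes really do differ.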

I am hesitant to make any conclusions based on Thraktor’s tests alone. Taking the median frame time seems reasonable, but I am not sure if manually walking through a set path is controlled enough for the 0.3 ms margins we’re talking about. Plus, we haven’t seen any indication that the networks are different elsewhere. It’s an interesting possibility for the sake of discussion though.
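On whether a manually walked path is controlled enough: a simple sanity check is to compare the difference in median frame time against the run-to-run spread. Below is a minimal sketch using Python's standard `statistics` module. The interquartile-range rule of thumb and the sample numbers are illustrative assumptions, not Thraktor's data or a rigorous significance test.

```python
import statistics


def summarize(samples_ms):
    """Median frame time and interquartile range (IQR) of one benchmark run, in ms."""
    q1, _, q3 = statistics.quantiles(samples_ms, n=4)
    return statistics.median(samples_ms), q3 - q1


def compare_runs(run_a, run_b):
    """Return (median difference b - a, larger IQR, whether the difference is within the spread)."""
    med_a, iqr_a = summarize(run_a)
    med_b, iqr_b = summarize(run_b)
    diff = med_b - med_a
    spread = max(iqr_a, iqr_b)
    # Heuristic only: a shift smaller than the typical spread is hard to separate from noise.
    return diff, spread, abs(diff) < spread


if __name__ == "__main__":
    # Hypothetical frame times (ms) from two manual walks along the same path.
    mode_a = [10.0, 10.2, 9.8, 10.1, 9.9, 10.3, 9.7]
    mode_b = [10.3, 10.5, 10.1, 10.4, 10.2, 10.6, 10.0]
    diff, spread, within_noise = compare_runs(mode_a, mode_b)
    print(f"median diff {diff:.2f} ms, spread {spread:.2f} ms, within noise: {within_noise}")
```

With these made-up runs, a 0.3 ms median shift sits inside a 0.4 ms interquartile spread, which is the kind of situation where more controlled or repeated runs would be needed before drawing conclusions.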

(edited heavily to reorganize paragraphs and improve clarity)

NVDLA Primer — NVDLA Documentation

nvdla.org

This goes more in-depth on the DLA, if you’re curious.

> Those FF games are only timed exclusives aren’t they? They’ll come to Switch as soon as the hardware can handle them as long as the timed deal is over. Hardly a ‘yeah no lol’ scenario.

Not if they get an extended exclusivity deal on top of being moneyhatted titles.

They probably don’t expect the next Switch to be direct, troublesome competition, as they already hold the AAA software on PS in Japan.

> How long is it since devkits for Dane have been out? 8 months? Is it unusual to not have any leaks on specs at this point? Comparing to Sony and MS? I know NDAs are in place and professional devs aren’t looking to jeopardize their careers for internet clout. But I figured we’d get something at this point, even if it’s not final.

It’s pre-silicon right now, so it's hard to give an honest spec; there's no point in leaking what wouldn’t be there at the end.

ArchedThunder

Uncle Beerus

- Pronouns

- He/Him

I'm seriously hoping for one of the following

Nintendo Switch Ultra

Ultra Nintendo Switch

Nintendo Ultra Switch

Personally my favorite is Ultra Nintendo Switch.

I said it before, but Ultra is perfect on multiple levels. It avoids using a number, harkens back to Super Nintendo (and Ultra 64) and it describes the 4K functionality as 4K is referred to as Ultra HD.


Marce-chan

Magical Girls <3

- Pronouns

- They/Them

> How the hell we went from
>
> To
>
> To
>
> ????

People misinterpret insiders literally every time they drop a tidbit on what it is or speculate about something. That's how people got there lol.

I mean, there's literally no rumor that Dane wouldn't have BC; it was just speculation based on what the chip architecture is. It's even more bothersome because I saw a major Brazilian site using @NateDrake as a supposed source on the Switch 4K being a successor and not having BC, when he literally never said that. Quite the opposite: on the podcast, he and MVG explored every possible way Nintendo and Nvidia could make BC possible.

Thing is, people from sites get information from forums and podcasts and present it in a misleading way to get more clicks. Then people read that instead of listening to the podcast or reading the thread for context, and they just run with it, misinterpreting the initial source even further. They end up blaming insiders if anything turns out differently, or outright choosing not to believe them, because some piggybacking site's misinterpretation of their info turned into something absurd.

Marce-chan

Magical Girls <3

- Pronouns

- They/Them

> This feels like the same issue that Metroid had, where they announced a spin-off or undesirable game without a primary game. The cloud version has had a pretty vitriolic reaction, but if it had come with the announcement of a native Dane version, it would have been better received.

I don't know if people who bought the cloud versions would be all that excited if they announce a native version for Dane later, whether or not they're Dane buyers.

The major problem with KH (minus III) is file size. If they didn't bother putting major work into compression, to at least make each game small enough to fit on a 32GB card with a not-so-gigantic download, and put III on the cloud, I don't think they'll bother doing it for Dane after already having the cloud games out.

Anatole

Octorok

- Pronouns

- He/Him/His

> Add to it the graphs that I made comparing their performance, and it muddies the waters. It’s possible that they trained more than one network, which they use for the automotive AI business, and it just so happened to work for the gaming side. In automotive, I can see why they would train more than one for its purposes, since it is a very tightly regulated industry. And considering that their SoCs have tensor cores on top of a dedicated DLA (Deep Learning Accelerator), it could be that they have more than one trained for these occasions.
>
> NVDLA Primer — NVDLA Documentation
>
> This goes more in-depth on the DLA, if you’re curious.

I’m not really familiar with NVDLA, but scanning this, I think I should clarify that there are two definitions of “architecture” that are important here:

I) in a neural network sense, architecture refers to the number of layers, nodes, and connections between the nodes

II) in a hardware/software sense, architecture refers to the organization of stuff on a chip - instruction sets, registers, memory, etc.

It sounds like NVDLA is an architecture in the second sense. When I talk about changing the DLSS architecture, I am using the word in the first sense instead. So when I say that performance, quality, and ultra quality DLSS could have different architectures, what I meant was that their neural networks might have a different number of layers or channels.

You could also use the word “hyperparameter” to refer to things that you choose when you set up a neural network, like the number of layers/channels, but I’ve been avoiding it because it’s extra jargon.
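To illustrate sense I: the architecture is a handful of hyperparameters chosen before training. This minimal sketch uses a hypothetical plain stack of 3x3 convolutions (not the real DLSS design) to show that depth and channel count change the number of trainable weights, while nothing in the configuration depends on image resolution.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConvNetConfig:
    """'Architecture' in sense I: hyperparameters fixed before training (hypothetical values)."""
    num_layers: int       # total convolution layers, must be >= 2
    channels: int         # feature channels in every hidden layer
    in_channels: int = 3  # RGB only here; a temporal upscaler would take extra inputs
    out_channels: int = 3
    kernel: int = 3       # 3x3 filters

    def parameter_count(self) -> int:
        """Weights plus biases; note that no image resolution appears anywhere."""
        if self.num_layers < 2:
            raise ValueError("need at least an input and an output layer")
        k2 = self.kernel * self.kernel
        first = self.in_channels * self.channels * k2 + self.channels
        hidden = (self.num_layers - 2) * (self.channels * self.channels * k2 + self.channels)
        last = self.channels * self.out_channels * k2 + self.out_channels
        return first + hidden + last


if __name__ == "__main__":
    # Two made-up per-mode configurations, only to show the knobs being discussed.
    shallow = ConvNetConfig(num_layers=4, channels=32)
    deep = ConvNetConfig(num_layers=8, channels=32)
    print("shallow:", shallow.parameter_count(), "params")
    print("deep:   ", deep.parameter_count(), "params")
```

Swapping one config for another is what "a different architecture per mode" would mean in this sense; the hardware that runs it (sense II) stays the same.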

ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

> Second question, if this is the case why hasn’t Nvidia said anything about Orin not being Ampere yet? Are they waiting for the 4000 series GPUs to be announced first?

They somewhat did "leak" that it is using Lovelace, but implicitly. They didn’t name it, only saying that it uses a "brand new Nvidia GPU" among other things. I think @fwd-bwd found that Zhiji has some direct connection to NV? I don’t remember whether they're official partners or a subsidiary of NV, so I'm tagging them to see if they can correct it.

Anyway...

This is what we read in the official press release:

"...Nvidia's Orin X chip is made of a brand-new NVIDIA GPU and 12-core ARM CPU with a 7nm process, with a single-chip computing capacity of up to 254 TOPS per second. Among the current mass-produced automotive-grade AI chips, the Nvidia Orin X chip is at the top of the pyramid. The single-chip computing power is about 10 times that of Mobileye's latest EyeQ5 and 3.5 times that of Tesla's HW3.0. "The strongest intelligent driving AI chip on the surface."

Wouldn’t they just name it Ampere if it were using that, or just say "is made of the latest NVIDIA GPU and 12-core ARM CPU"? They specified that it is brand new. To play devil's advocate, though, Ampere is also technically still a brand-new GPU uArch until its successor is out, so it doesn't necessarily mean Lovelace, but we know it's Lovelace based on leaks, so whatever.
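The press release's ratios can be turned around to see what baseline figures they imply: divide Orin X's 254 TOPS by each multiplier. This is just arithmetic on the quoted numbers (and "TOPS" is already a per-second rate); the results are not independent specs for EyeQ5 or HW3.0, and the "about 10 times" wording means the first one is approximate.

```python
ORIN_X_TOPS = 254  # figure quoted in the press release above


def implied_baseline(tops, multiplier):
    """TOPS a competitor would have if `tops` is `multiplier` times its figure."""
    return tops / multiplier


if __name__ == "__main__":
    for chip, mult in [("Mobileye EyeQ5", 10), ("Tesla HW3.0", 3.5)]:
        print(f"{chip}: ~{implied_baseline(ORIN_X_TOPS, mult):.1f} TOPS implied by the claim")
```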

> I’m not really familiar with NVDLA, but scanning this, I think I should clarify that there are two definitions of “architecture” that are important here:
>
> I) in a neural network sense, architecture refers to the number of layers, nodes, and connections between the nodes
>
> II) in a hardware/software sense, architecture refers to the organization of stuff on a chip - instruction sets, registers, memory, etc.
>
> It sounds like NVDLA is an architecture in the second sense. When I talk about changing the DLSS architecture, I am using the word in the first sense instead. So when I say that performance, quality, and ultra quality DLSS could have different architectures, what I meant was that their neural networks might have a different number of layers or channels.
>
> You could also use the word “hyperparameter” to refer to things that you choose when you set up a neural network, like the number of layers/channels, but I’ve been avoiding it because it’s extra jargon.

I see. When it comes to the nitty-gritty of neural networks, it's way above what I know: I get a general idea of what it is, but not on a very technical level. I assumed that the DLA would be more related to what you were referring to. I do thank you, however, for clarifying what you meant here.

NateDrake

Chain Chomp

> People misinterpret insiders literally every time they drop a tidbit on what it is or speculate about something. That's how people got there lol.
>
> I mean, there's literally no rumor that Dane wouldn't have BC; it was just speculation based on what the chip architecture is. It's even more bothersome because I saw a major Brazilian site using @NateDrake as a supposed source on the Switch 4K being a successor and not having BC, when he literally never said that. Quite the opposite: on the podcast, he and MVG explored every possible way Nintendo and Nvidia could make BC possible.
>
> Thing is, people from sites get information from forums and podcasts and present it in a misleading way to get more clicks. Then people read that instead of listening to the podcast or reading the thread for context, and they just run with it, misinterpreting the initial source even further. They end up blaming insiders if anything turns out differently, or outright choosing not to believe them, because some piggybacking site's misinterpretation of their info turned into something absurd.

Basically. No one checks the original source to properly cite the information. It was clear that we referenced the concerns about BC due to the architecture change and presented means of achieving it, and somehow that was taken as "Switch 4K currently has no BC but it is being worked on".

In retrospect... maybe it wasn't even worth discussing.

> I don't know if people who bought the cloud versions would be all that excited if they announce a native version for Dane later, whether or not they're Dane buyers.
>
> The major problem with KH (minus III) is file size. If they didn't bother putting major work into compression, to at least make each game small enough to fit on a 32GB card with a not-so-gigantic download, and put III on the cloud, I don't think they'll bother doing it for Dane after already having the cloud games out.

I put Switch Cloud purchasers in the "informed" category. They might be miffed, but they won't be up in arms, because they'd understand why it's a cloud game. The cloud market on Switch is so small that I just can't see someone casually buying it without being aware of what it means to be a cloud game.

As for size, I guess (for the remasters, not the new games) it's largely videos. I can't imagine the textures being extremely large. But if they are 4K or whatever, shrinking them to 1K is an expectation for a port, for performance reasons rather than cart-size reasons.
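Rough numbers for why a 4K-to-1K texture reduction matters: halving each side quarters the texel count, so going from 4096x4096 to 1024x1024 is a 16x reduction. This minimal sketch assumes uncompressed 4-byte RGBA texels, square power-of-two textures, and an optional full mip chain. Real ports use block-compressed formats, which shrink every size by roughly a constant factor but keep about the same ratio.

```python
def texture_bytes(side, bytes_per_texel=4, mipmaps=True):
    """Memory for a square side x side texture, optionally including its full mip chain."""
    total = 0
    size = side
    while size >= 1:
        total += size * size * bytes_per_texel
        if not mipmaps:
            break
        size //= 2  # each mip level halves both dimensions
    return total


if __name__ == "__main__":
    for side in (4096, 2048, 1024):
        mib = texture_bytes(side) / (1024 * 1024)
        print(f"{side}x{side}: {mib:.1f} MiB with mips")
```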

> I'm seriously hoping for one of the following
>
> Nintendo Switch Ultra
>
> Ultra Nintendo Switch
>
> Nintendo Ultra Switch
>
> Personally my favorite is Ultra Nintendo Switch.
>
> I said it before, but Ultra is perfect on multiple levels. It avoids using a number, harkens back to Super Nintendo (and Ultra 64) and it describes the 4K functionality as 4K is referred to as Ultra HD.

No Switch Ultra Nintendo, Switch Nintendo Ultra, or Ultra Switch Nintendo? I personally like the acronym SNU.

- Pronouns

- He/Him

Regarding DRS + DLSS, I found a reference to DLSS Programming guide on beyond3D: https://forum.beyond3d.com/posts/2190260/

> To use DLSS with dynamic resolution, initialize NGX and DLSS as detailed in section 5.3. During the DLSS Optimal Settings calls for each DLSS mode and display resolution, the DLSS library returns the “optimal” render resolution as pOutRenderOptimalWidth and pOutRenderOptimalHeight. Those values must then be passed exactly as given to the next NGX_API_CREATE_DLSS_EXT() call.
>
> DLSS Optimal Settings also returns four additional parameters that specify the permittable rendering resolution range that can be used during the DLSS Evaluate call. The pOutRenderMaxWidth, pOutRenderMaxHeight and pOutRenderMinWidth, pOutRenderMinHeight values returned are inclusive: passing values between as well as exactly the Min or exactly the Max dimensions is allowed.

My guess: since version 2.1, the underlying DLSS mode (quality/balanced/etc.) is still fixed based on the optimal input res and the output res, but some layers have been added on top, compared to version 2.0, to accommodate DRS.
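In practice, the inclusive range means a dynamic-resolution controller only has to clamp whatever render size it picks into [Min, Max] before each evaluate call. This is a minimal sketch of that clamping step; the function and the example sizes are hypothetical, and it is not the NGX API itself, only the rule the quoted guide describes.

```python
def clamp_render_size(desired, min_size, max_size):
    """Clamp a DRS-chosen (width, height) into the inclusive [min, max] range per axis."""
    (w, h), (min_w, min_h), (max_w, max_h) = desired, min_size, max_size
    return min(max(w, min_w), max_w), min(max(h, min_h), max_h)


if __name__ == "__main__":
    # Hypothetical range, as if returned for one mode/output pairing.
    lo, hi = (1280, 720), (2560, 1440)
    for wanted in [(1920, 1080), (1000, 600), (3000, 1600), (1280, 720)]:
        print(wanted, "->", clamp_render_size(wanted, lo, hi))
```

Because the bounds are inclusive, a request exactly at Min or Max passes through unchanged.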

Regarding the upscaling cost between modes when output res is fixed, I found an in-depth review here and tried to extrapolate average upscaling cost on my own as below:

The number in the right-most column is the average upscaling cost, and it suggests that there is a very small difference between 1080p and 1440p input res when upscaled to 2160p. If I average further across graphics settings, 1440p -> 4K upscaling takes around 0.8ms more than 1080p -> 4K upscaling on the RTX 2060, and 0.8ms less on the RTX 2080. (However, the RTX 2080 figures may be a bit unreliable, as the CPU used in the test is only a meager Intel i7-3770K, which may cause a bottleneck in high-framerate cases.)
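For anyone wanting to repeat this kind of extrapolation: one common approach (an assumption here, not necessarily the exact method used above) is to take the frame time with DLSS and subtract the frame time of rendering natively at the input resolution, then average across settings. The fps values in the usage below are placeholders, not figures from the review. The subtraction only isolates the upscaling cost when the GPU, not the CPU, is the bottleneck, which is why the 3770K caveat matters.

```python
def frame_time_ms(fps):
    """Convert frames per second to milliseconds per frame."""
    return 1000.0 / fps


def upscale_cost_ms(native_input_fps, dlss_fps):
    """Estimated DLSS overhead: DLSS frame time minus native frame time at the input resolution."""
    return frame_time_ms(dlss_fps) - frame_time_ms(native_input_fps)


def average_cost(pairs):
    """Average overhead over (native_input_fps, dlss_fps) pairs, e.g. one per graphics preset."""
    costs = [upscale_cost_ms(native, dlss) for native, dlss in pairs]
    return sum(costs) / len(costs)


if __name__ == "__main__":
    # Placeholder fps pairs for two hypothetical graphics presets.
    print(f"{average_cost([(100, 80), (50, 40)]):.2f} ms average upscaling cost")
```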

So I do think that input res matters much less on upscaling cost than the output res. @ILikeFeet also shared some data on this front on Era:

Regarding hypothetical upscaling time on Dane, I did some napkin math on Era as well, and the results seem nice enough for Dane at 4K if 30fps is the target, or if NV manages to utilize structural sparsity and sets 60fps as the target (assuming it did not already at the time DLSS 2.0 was introduced). I think Thraktor also conducted a similar analysis, but I can't find the post now; I will update it here later.

(Image: upscale times for performance mode)


> No Switch Ultra Nintendo, Switch Nintendo Ultra, or Ultra Switch Nintendo? I personally like the acronym SNU.

Nintendo Snu Snu. I mean, have you seen how tall Rosalina is?

> Basically. No one checks the original source to properly cite the information. It was clear that we referenced the concerns about BC due to the architecture change and presented means of achieving it, and somehow that was taken as "Switch 4K currently has no BC but it is being worked on".
>
> In retrospect... maybe it wasn't even worth discussing.

I agree that it wasn't worth discussing. [/snark]

With the exception of the Switch itself, every Nintendo console of this century has had backwards compatibility to the point where I'm surprised that the Switch didn't include a 3DS cart slot itself.

I can't imagine that Nintendo and NV would've gone into all this without a BC plan already in place.

That said, the discussion was pretty good at laying out all the arguments for them not having BC. I disagreed with all of them.

The best argument against BC will be that moment (seconds to weeks) where they've announced the new system, but haven't talked about BC yet.

Instro

Like Like

> Outside of beefier hardware, what else should Nintendo add to the new Switch?
>
> They already have gyro/IR, HD rumble, OLED screen and amiibo/NFC tech. What else can they add to be an upgrade over the current Switch?

A headphone jack/audio on the Pro Controller, please.

> They somewhat did "leak" that it is using Lovelace, but implicitly. They didn’t name it, only saying that it uses a "brand new Nvidia GPU" among other things. I think @fwd-bwd found that Zhiji has some direct connection to NV? I don’t remember whether they're official partners or a subsidiary of NV, so I'm tagging them to see if they can correct it.

Yes, the "brand new Nvidia GPU" mention is peculiar and open to interpretation. The "3.5 times that of Tesla's HW3.0" mention is also a flex. I wonder if this is Nvidia's response to the late-September leak of Tesla HW 4.0 using Samsung's 7nm process. Zhiji/IM Motors is co-owned by Alibaba, which is an Nvidia partner in China.

> I wonder if this is Nvidia's response to the late-September leak of Tesla HW 4.0 using Samsung's 7nm process.

In terms of announcing which process node is being used to fabricate Orin X, that's a possibility. In terms of choosing which process node to use for fabricating Orin X, probably not, since I imagine Nvidia would have needed to make such a decision at least 6 months in advance.

Hyrulean

Tektite

- Pronouns

- He/Him

> There's literally no way it's not called Nintendo Switch something or something Nintendo Switch.
>
> Don't worry.

Or Nintendo something Switch.

Alovon11

Like Like

- Pronouns

- He/Them

Honestly, if they were to go for an iterative release model (à la iPhones), I feel Super Switch or Switch Advanced would be a bit... not scalable.


I would rather like

- Switch 2 (Easy)

- Switch S (Short for Super)

  - This would imply that it sort of sits between a pro model and a successor as well, like the iPhone S phones of old

  - It would also let them tick-tock between Switch S models and numbered Switch models, with the next number likely being a more refined version of the previous S.

  - So Switch 2 in this case would be a highly refined/boosted version of Switch S.

If they call it the Super Nintendo Switch, we can pretty much tell they will be making this a quicker generation cutoff and that there may not be a "Switch 3", at least in the iterative sense.

Hyrulean

Tektite

- Pronouns

- He/Him

> the only good options. anything else and the system will fail
>
> - Super Nintendo Switch
> - Nintendo Switch Advance

I really like Nintendo Switch Advance.

Anatole

Octorok

- Pronouns

- He/Him/His

> Regarding DRS + DLSS, I found a reference to DLSS Programming guide on beyond3D: https://forum.beyond3d.com/posts/2190260/
>
> My guess: since version 2.1, the underlying DLSS mode (quality/balanced/etc.) is still fixed based on the optimal input res and the output res, but some layers have been added on top, compared to version 2.0, to accommodate DRS.

Mmm, this is very interesting! I don't think that extra layers would be required to accomplish DRS, since the convolution operation is independent of resolution, as I wrote above. But to me, this does indicate that each of the performance/quality modes may indeed be configured differently from each other. I don't think we could confirm that those differences involve network architectural differences without seeing under the hood, but it's good to know.

> The number in the right-most column is the average upscaling cost, and it suggests that there is a very small difference between 1080p and 1440p input res when upscaled to 2160p. If I average further across graphics settings, 1440p -> 4K upscaling takes around 0.8ms more than 1080p -> 4K upscaling on the RTX 2060, and 0.8ms less on the RTX 2080. (However, the RTX 2080 figures may be a bit unreliable, as the CPU used in the test is only a meager Intel i7-3770K, which may cause a bottleneck in high-framerate cases.)
>
> So I do think that input res matters much less on upscaling cost than the output res.

I agree; it seems like all sources concur that output resolution is the most important factor for the network cost. Thanks for sharing. These results about the effect of input resolution are more in line with what I would expect than Thraktor's short test.

> Regarding hypothetical upscaling time on Dane, I did some napkin math on Era as well, and the results seem nice enough for Dane at 4K if 30fps is the target, or if NV manages to utilize structural sparsity and sets 60fps as the target (assuming it did not already at the time DLSS 2.0 was introduced). I think Thraktor also conducted a similar analysis, but I can't find the post now; I will update it here later.

Right, this may have been one of the posts I was thinking of, since it looks like you extrapolated the RTX 2060S time from 34 SMs to 8 SMs, as I vaguely described remembering this morning.
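The napkin math described here amounts to scaling a measured DLSS time by relative tensor throughput. This minimal sketch assumes cost scales inversely with SM count times clock, and it ignores architectural differences between Turing and Ampere tensor cores. The 34 and 8 SM counts come from the posts above; the reference time and clocks are placeholders to fill in. The optional sparsity speedup is a hypothetical knob: Nvidia's structured-sparsity claim is up to 2x on tensor math, which should be read as a best case.

```python
def scale_dlss_time(ref_ms, ref_sms, ref_clock_ghz, target_sms, target_clock_ghz,
                    sparsity_speedup=1.0):
    """Scale a reference DLSS time to another GPU, assuming time ~ 1 / (SMs x clock)."""
    throughput_ratio = (target_sms * target_clock_ghz) / (ref_sms * ref_clock_ghz)
    return ref_ms / (throughput_ratio * sparsity_speedup)


def frame_budget_share(dlss_ms, target_fps):
    """Fraction of the per-frame time budget consumed by DLSS."""
    return dlss_ms / (1000.0 / target_fps)


if __name__ == "__main__":
    REF_MS = 1.0  # placeholder: measured 4K DLSS time on the 34-SM reference GPU
    dane_ms = scale_dlss_time(REF_MS, ref_sms=34, ref_clock_ghz=1.0,  # placeholder clocks
                              target_sms=8, target_clock_ghz=1.0)
    for fps in (30, 60):
        print(f"{dane_ms:.2f} ms = {frame_budget_share(dane_ms, fps):.0%} of a {fps} fps frame")
```

At equal clocks, going from 34 to 8 SMs multiplies the reference time by 4.25, which is why the 30fps versus 60fps frame budget ends up being the deciding factor.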

I am keeping my expectations low with respect to sparsity. My thought on this is that large, offline neural networks in the last decade are often overparametrized by design, so a lot of the excess, near-zero trained parameters can be culled without significantly reducing accuracy. By comparison, DLSS has to be relatively small to run in real time, so I don't necessarily expect as much redundancy in the trained parameters. This rationalization is really just a heuristic, though, so it's certainly possible that we could see significant gains from sparsity.
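For context on what "structural sparsity" means on Ampere: its sparse tensor cores expect a 2:4 pattern, where at most two of every four consecutive weights are non-zero. This minimal sketch shows the simplest way to reach that pattern, magnitude pruning (zero the two smallest of every four), plus a helper showing how much weight magnitude survives. It illustrates the pattern only; it is not Nvidia's pruning and fine-tuning workflow, and the example weights are made up.

```python
def prune_2_of_4(weights):
    """Magnitude pruning to the 2:4 pattern: keep the 2 largest |w| in each group of 4."""
    if len(weights) % 4:
        raise ValueError("number of weights must be a multiple of 4")
    pruned = [float(w) for w in weights]
    for start in range(0, len(weights), 4):
        group = list(range(start, start + 4))
        keep = sorted(group, key=lambda i: abs(weights[i]), reverse=True)[:2]
        for i in group:
            if i not in keep:
                pruned[i] = 0.0
    return pruned


def retained_magnitude(original, pruned):
    """Fraction of total |weight| that survives pruning (a crude proxy for lost information)."""
    total = sum(abs(w) for w in original)
    return sum(abs(w) for w in pruned) / total if total else 1.0


if __name__ == "__main__":
    redundant = [0.8, 0.01, -0.02, 0.6, -0.9, 0.03, 0.7, -0.01]  # many near-zero weights
    dense = [0.5, -0.4, 0.45, -0.5, 0.6, 0.55, -0.5, 0.45]      # every weight matters
    for name, w in [("redundant", redundant), ("dense", dense)]:
        print(name, f"{retained_magnitude(w, prune_2_of_4(w)):.0%} of |w| retained")
```

The "redundant" vector loses almost nothing while the "dense" one loses close to half its magnitude, which mirrors the heuristic above: a small real-time network may have less slack to give up.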

I think a numerical name is the most straightforward and hardest-to-confuse way to go about it.

That said, there are two others I really like:

Super Nintendo Switch

And

Nintendo Super Switch

They should use both names, with the choice depending on region.

SNS in the US, but NSS in Japan.


ShadowFox08


I'm guessing the power draw for Orin X hasn't been released here, right? In 2018, 64 watts was confirmed, but it might have changed.

They somewhat did "leak" that it is using Lovelace, but only implicitly. They didn't name it, just said that it is using a "brand new Nvidia GPU", among other things. I think @fwd-bwd found that Zhiji has some direct connection to NV, but I can't remember whether it is an official partner or a subsidiary of NV, so I'm tagging them to see if they can correct it.

Anyway...

This is what we read from official press release:

"...Nvidia's Orin X chip is made of a brand-new NVIDIA GPU and 12-core ARM CPU with a 7nm process, with a single-chip computing capacity of up to 254 TOPS per second. Among the current mass-produced automotive-grade AI chips, the Nvidia Orin X chip is at the top of the pyramid. The single-chip computing power is about 10 times that of Mobileye's latest EyeQ5 and 3.5 times that of Tesla's HW3.0. "The strongest intelligent driving AI chip on the surface."

Wouldn’t they just name it Ampere if it were using that, or just say it "is made of the latest NVIDIA GPU and 12-core ARM CPU"? They specified that it is brand new. To play devil's advocate, though, Ampere is also technically still a brand-new GPU uArch until its successor is out, so this doesn't necessarily mean Lovelace either, but we know it's Lovelace based on leaks, so whatever.
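The compute comparisons in the press release can be turned around to see what figures they imply for the other chips. This sketch only divides the quoted 254 TOPS by the quoted multipliers ("about 10 times", "3.5 times"), so the results are as approximate as those ratios and are not independent specs.

```python
ORIN_X_TOPS = 254.0  # single-chip figure claimed in the quoted press release

# Multipliers claimed in the same press release.
CLAIMED_RATIOS = {
    "Mobileye EyeQ5": 10.0,
    "Tesla HW3.0": 3.5,
}


def implied_tops(ratio: float, reference_tops: float = ORIN_X_TOPS) -> float:
    """Back out the competitor figure implied by 'X times that of ...'."""
    return reference_tops / ratio


if __name__ == "__main__":
    for chip, ratio in CLAIMED_RATIOS.items():
        print(f"{chip}: ~{implied_tops(ratio):.1f} TOPS implied")
```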

I see. When it comes to the nitty-gritty of neural networks, it's way above what I know; I get the general idea, but not at a very technical level. I assumed that DLA would be more related to what you were referring to. I do thank you, however, for clarifying what you meant here.

I wonder how Orin S will play out. Maybe it's half an Orin X chip. If they can just split the chip down the middle and give us 6 A78 cores, half the GPU cores (or fewer), and a 128-bit bus with around 100 GB/s, with lower GPU and CPU clock speeds (1-1.75 GHz), that would be nice.
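For the memory side of that wish list, peak bandwidth is just bus width in bytes times the transfer rate. A minimal sketch, assuming LPDDR5-class data rates (the 5500 and 6400 MT/s values are hypothetical examples, not known specs for any Orin S or Dane part):

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_mt_s: float) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (1 GB = 10^9 bytes).

    bytes per transfer = bus width / 8; transfers per second = data rate.
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_mt_s * 1e6 / 1e9


if __name__ == "__main__":
    # HYPOTHETICAL memory speeds for a 128-bit bus.
    for rate in (5500, 6400):
        print(f"128-bit @ {rate} MT/s: {peak_bandwidth_gb_s(128, rate):.1f} GB/s")
```

At 6400 MT/s a 128-bit bus works out to 102.4 GB/s, in line with the ~100 GB/s figure above. Real, sustained bandwidth would be lower than this theoretical peak.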


Switch 2. Final answer. Or Nintendo Super Switch.

No Switch Ultra Nintendo, Switch Nintendo Ultra, or Ultra Switch Nintendo? I personally like the acronym SNU.


Honestly, I think the subject kind of isn't worth discussing with an audience that isn't explicitly tech-oriented, at least not without some knowledge of Nintendo's plans in the area. Without the counterbalance of "Nintendo/Nvidia is doing X to address the problem", rather than just "Nintendo/Nvidia could do X to address the problem", it makes for very easy dooming fodder.

Basically. No one checks the original source to properly cite the information. It was clear that we referenced the concerns about BC due to the architecture change and presented ways of achieving it, and somehow that was taken as "Switch 4K currently has no BC but it is being worked on".

In retrospect... maybe it wasn't even worth discussing.

YolkFolk


Honestly, if they were to go for an iterative release model (à la iPhones), I feel Super Switch or Switch Advanced would be a bit... not scalable.

I would rather like:

- Switch 2 (easy)

- Switch S (short for Super)

  - This would imply that it sort of sits between a pro model and a successor, like the iPhone S phones of old.

  - It would also let them tick-tock between Switch S and Switch # models, with the next # likely being a more refined version of the previous S.

  - So Switch 2 in this case would be a highly refined/boosted version of Switch S.

Either way, I don't super see Super Switch working out with an iterative release model, tbh.

If they call it the Super Nintendo Switch, we can pretty much tell they will be making this a quicker generation cutoff and that there may not be a "Switch 3", at least in the iterative sense.

The only name worse than Switch S is Switch U.

NineTailSage


I kinda think the transition will be quick. There will be a big backlog of games that third parties can make available on the Dane Switch, making it immediately more desirable on day one than other Nintendo systems were. Imagine launching with Red Dead 3, Cyberpunk, Suicide Squad, and Final Fantasy 7, all in addition to Breath of the Wild 2.

This is what I was thinking: the timing of this system could line up perfectly with the release of many big, highly anticipated games, and getting dev kits out there now gives us a chance to see a good software schedule the moment it comes to market.

I think they're basically going to do the same as Xbox this generation.

Same OS, same digital store, slightly tweaked Joy-Cons, an extended cross-gen period, enhanced BC.

If a third party wants to release an exclusive game, go ahead. But for first party, expect years of cross-gen.

Definitely think the Xbox game plan is the way for them to go, especially as a unified platform front.

Is that something customizable? I was under the impression that the number of tensor cores per SM is fixed for a given architecture, i.e. that Dane would be composed of Lovelace SMs. If that were true, then Nintendo couldn’t scale tensor performance without increasing the number of SMs and/or the clock speed, which would affect the power budget.

Here's the chart I've been searching for. It compares Tensor performance between A100, GA10x and TU102 at the SM level. On Era we dissected this backwards and forwards, and the only reason we could come up with for A100's Tensor cores being 2x as performant as GA102's at the SM level was A100 having access to more cache.

How long is it since devkits for Dane have been out? 8 months? Is it unusual to not have any leaks on specs at this point? Comparing to Sony and MS? I know NDAs are in place and professional devs aren’t looking to jeopardize their careers for internet clout. But I figured we’d get something at this point, even if it’s not final.

My guess is the leaky ship that is Ubisoft doesn't have any or many dev kits yet, or we would probably know everything imaginable about the hardware already /s...

Mmm, this is very interesting! I don't think that extra layers would be required to accomplish DRS, since the convolution operation is independent of resolution like I wrote above. But to me, this does indicate that each of the performance/quality modes may indeed be configured differently from each other. I don't think we could confirm that those differences involve network architectural differences without seeing under the hood, but it's good to know.
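The point that convolution doesn't care about resolution can be shown with a toy single-channel layer: the same 3x3 weights are applied to two input sizes, and only the output size and the amount of arithmetic change. This is a plain-Python sketch with a made-up averaging kernel and no padding; it says nothing about DLSS's actual, unpublished architecture.

```python
def conv2d_valid(image: list[list[float]],
                 kernel: list[list[float]]) -> list[list[float]]:
    """2D cross-correlation with no padding (what deep-learning 'conv'
    layers compute). The kernel is fixed; the image can be any size."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def multiply_accumulates(out_h: int, out_w: int, kh: int, kw: int) -> int:
    """MACs for one single-channel conv layer: one kernel pass per output pixel."""
    return out_h * out_w * kh * kw


if __name__ == "__main__":
    # One set of 3x3 weights (made-up averaging values), reused for every size.
    kernel = [[1.0 / 9.0] * 3 for _ in range(3)]
    for h, w in ((4, 4), (6, 8)):
        image = [[float(r * w + c) for c in range(w)] for r in range(h)]
        out = conv2d_valid(image, kernel)
        macs = multiply_accumulates(len(out), len(out[0]), 3, 3)
        print(f"{h}x{w} input -> {len(out)}x{len(out[0])} output, "
              f"{macs} MACs, 9 weights")
```

The weight count stays at 9 for both inputs, while the work grows with the number of output positions, which is why a fully convolutional network can accept changing input sizes without extra layers.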



I believe the Ampere whitepaper confirmed that the ultra performance mode was made possible by using sparsity (I will try to find the article for clarification on this, though).

NVIDIA Details Its GeForce RTX 30 Series Graphics Cards During Reddit Q&A - New SM Design, RTX IO, DLSS 2.1, PCIe Gen 4 & More

NVIDIA has revealed more details regarding its GeForce RTX 30 series graphics cards during a Q&A session over at the NVIDIA subreddit.

"EeK09 – What kind of advancements can we expect from DLSS? Most people were expecting a DLSS 3.0, or, at the very least, something like DLSS 2.1. Are you going to keep improving DLSS and offer support for more games while maintaining the same version?

[NV-Randy] DLSS SDK 2.1 is out and it includes three updates:

– New ultra performance mode for 8K gaming. Delivers 8K gaming on GeForce RTX 3090 with a new 9x scaling option.

– VR support. DLSS is now supported for VR titles.

– Dynamic resolution support. The input buffer can change dimensions from frame to frame while the output size remains fixed. If the rendering engine supports dynamic resolution, DLSS can be used to perform the required upscale to the display resolution."

In the GA102 whitepaper (p. 28), they describe this 9x scaling as a result of sparsity.
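The 9x figure and the DRS behaviour described in the Q&A are easy to check numerically. This sketch assumes "9x" counts pixels, i.e. 3x per axis, which matches an 8K (7680x4320) output from a 1440p input; the per-frame DRS input sizes are hypothetical.

```python
def upscale_factors(input_res: tuple[int, int],
                    output_res: tuple[int, int]) -> tuple[float, float, float]:
    """Return (horizontal factor, vertical factor, pixel-count factor)."""
    (iw, ih), (ow, oh) = input_res, output_res
    return ow / iw, oh / ih, (ow * oh) / (iw * ih)


if __name__ == "__main__":
    # 8K output from a 1440p input: 3x per axis, 9x the pixels.
    print(upscale_factors((2560, 1440), (7680, 4320)))

    # DRS: the input changes frame to frame while the output stays fixed.
    # These per-frame input sizes are HYPOTHETICAL.
    output_4k = (3840, 2160)
    for frame_input in ((1920, 1080), (1600, 900), (2560, 1440)):
        _, _, pixel_factor = upscale_factors(frame_input, output_4k)
        print(frame_input, "->", output_4k, f"{pixel_factor:.2f}x pixels")
```

This only covers the scaling ratios; whether sparsity is what makes the 9x mode affordable is the whitepaper claim under discussion and is not something the arithmetic can show.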


Alovon11


The only name worse than Switch S is Switch U.

Really?

People know what "S" means.

We've been conditioned on it since the iPhone 3GS, the Galaxy S series, etc.

"S" usually has a premium connotation that is sort of easy to get.

It's only really Microsoft that uses "S" as a budget option iirc.

Also again, it would be short for Nintendo Switch S(uper). Sort of like the NVIDIA Super GPUs and the SNES.

EDIT:

And again, this is if they go for an iterative release cycle à la iPhone and don't just go "Switch 2".

ReddDreadtheLead


Thanks for further clarifying it! @ArchedThunder, this should clarify it for you as well. So there's possibly a chance that they "leaked" it intentionally.

Yes, the "brand new Nvidia GPU" mention is peculiar and open to interpretation. The "3.5 times of Tesla HW 3.0" mention is also a flex. I wonder if this is Nvidia's response to the late September leak of Tesla HW 4.0 using Samsung's 7nm process. Zhiji/IM Motors is co-owned by Alibaba, which is an Nvidia partner in China.

Personally, I think they should favor CPU cores over GPU cores: rather than 8 SMs and 6 CPU cores, I think 6 SMs and 8 CPU cores would be the best move.


On top of that, a good amount of SLC shared by the whole system would help, similar to how the A15 Bionic has 32 MB of SLC that really helps its performance.

I mentioned it already, but this thing might have a surprising amount of cache for what it is.

God, I keep mentioning cache! lol

ReddDreadtheLead


Separate post, sorry for the DP, but I think Nintendo Switch X would have been a cool one. Super Nintendo Switch is also good.

Nintendo Switch S where S is short for Super.

Or, stay with me, Nintendo Switch 4090Ti

Ready for that


Please read this new, consolidated staff post before posting.

Furthermore, according to this follow-up post, all off-topic chat will be moderated.
