> Latest DF video on FSR 2.0 is very good, and FSR 2.0 looks great. Worth checking out for this thread because it (incidentally) makes a case for why DLSS is such a good win for Nintendo. To sum up:
>
> - DLSS performance is generally better, and likely always will be because of tensor cores.
> - FSR runs on any card, but that doesn't matter in the console space.
> - In comparison, current DLSS seems consistently better when working in performance mode.

FSR 2 could be almost a game changer for current AMD consoles though.


StarTopic Future Nintendo Hardware & Technology Speculation & Discussion |ST| (New Staff Post, Please read)

- Thread starter Dakhil

IamPeacock

Piranha Plant

- Pronouns

- Him / His

> FSR 2 could be almost a game changer for current AMD consoles though.

DLSS 2.3 is also much better than DLSS 2.0, so AMD still has room for improvement.

ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

> This is admittedly all second hand info - my understanding was that the SDK was very mature. Lots of stuff - like documentation written in English - that doesn't usually come day 1 was present and correct. Getting a game up and running was apparently easy. Getting anywhere resembling sane performance out of it was not. The Switch's flat architecture means you never have to go around the SDK to get good performance, the weird Wii (or much worse, Wii U) design meant that going around it was a requirement.

I suppose so, though for everything else it seems it was just weirdly a pain.

> Unless games blow out the CPU budget on larger consoles, porting was always gonna be viable. DLSS and FSR won't change anything. On consoles, FSR 2.0 isn't a big leap because temporal upscaling has been a thing in the console space already.

DF literally says it's a big leap from previous techniques, and almost as good as DLSS. If DLSS was a power equaliser before between Drake and more powerful consoles, it isn't as much anymore.

It would be amazing if Nintendo patched a few games to take advantage of FSR 2.0, and then we could compare

> DF literally says it's a big leap from previous techniques, and almost as good as DLSS. If DLSS was a power equaliser before between Drake and more powerful consoles, it isn't as much anymore.

They also said that they didn't expect FSR to do much in the console space, as it's a more PC-oriented solution.


Deleted member 887

Guest

> DF literally says it's a big leap from previous techniques, and almost as good as DLSS. If DLSS was a power equaliser before between Drake and more powerful consoles, it isn't as much anymore.

It's more complicated than that. There is this narrative that DLSS was magic sauce that other consoles could never have. Now there is this narrative that FSR 2.0 is an identical magic sauce. Neither of these things is actually true.

The DLSS/FSR war just solidifies that 2nd generation temporal upscaling is an absolutely essential technique that is going to be everywhere. Because it's essential, AAA games on MS/Sony consoles will need to use FSR, which is slower and uses some of their shader budget. On Drake, it will be faster, and won't use shader budget, leaving more power for other effects. Drake will be the only console with hardware acceleration for a thing that every game needs, just like how ray tracing has become universal and the Switch doesn't have it, leading ports to spend general purpose GPU power on a lesser solution.

DLSS remains a powerful gap closer, it just isn't a Special Feature That Only Nintendo has. That's probably a good thing for 3rd party support, as the decision to port won't be "man, we should rig up a TU solution for Switch" it will be "We replace our TU solution for much faster DLSS on Switch"

I should also note that DLSS currently seems much better at upscaling low res images, and I suspect that is a natural advantage of AI trained models over FSR's hand tuned ones, so it is likely to persist in future versions of both. If so, that means that games which need to run at 1440p internal on Xbox in order to look good there will be able to run at 1080p on Switch and look better, which will be a huge win for frame rates.

Dekuman

Starman

- Pronouns

- He/Him/His

> I feel like games that need FSR 2.0 on PS5 and XSX to run were never likely candidates to be ported to Drake tbh

Those platforms are trying to push 2K and 4K resolutions; the limitation will be CPU and/or RAM. If those can be managed, the resolution issue can be solved.

But again, who knows. Series S might be Nintendo's saving grace in all this, it might not. I think it's too early to say


Deleted member 887

Guest

I thought it might be fun to work through a totally hypothetical example of how DLSS vs FSR works on consoles and why DLSS is still an advantage for Drake. If this is something you already get, or don't care about please ignore me I just happen to like explaining things (and the sound of my own typing, probably)

First, let's make some assumptions about DLSS and FSR based on the recent DF video.

DLSS is twice as fast as FSR. This is the advantage of hardware acceleration.

FSR and DLSS both look great when working with a 1440p image. In general DLSS seems slightly superior, but that might be Deathloop specific. Let's call it a wash.

DLSS looks much much better when working with a 1080p image. This is likely an advantage of an AI solution, and how fast tensor cores let it operate.

Second, let's make some imaginary hardware.

The SteamBoat: A high end gaming PC, with an NVidia GPU running at 2GHz.

The XRocks: A gaming console built like a PC, it uses an identical GPU to the SteamBoat, but only clocked at 1GHz.

The Blake: A portable console using a low power version of the same GPU, clocked at a measly 500MHz. However, the Blake includes tensor cores that the XRocks and SteamBoat don't have.

Third, let us imagine a game, developed by Jane Q. Bamco, called Pac-Ma'am Effect. It has the exact same gameplay as the classic Pac-Ma'am but adds an incredible stream of trippy effects around it, turning the game into an almost spiritual experience. The game demands nothing of the CPU and memory - it's just Pac-Ma'am after all - but it is extremely demanding of the GPU, and it completely depends on those visuals for its experience.

On Jane's Steamboat, the game runs at 4k60fps. This is native, no upscaling involved. Jane is a very impressive programmer. Her engine scales linearly with the power of the GPU. The reviews are great

On the XRocks, the game runs at 4k30fps. Half as much power, she just takes twice as long to render each 4k frame, and it cruises along at half speed of the Steamboat version. The reviews are meh, "If it's the only way you can experience it, it's better than experiencing it at all, but the 30fps cap really brings down the experience"

On the Blake, the game runs at 4k15fps. They don't even bother to ship this version of the game, the graphics are too compromised.

6 months later, Jane is working on a patch for the game to try to punch up console sales. She sees that Temporal Upscaling might be a way to bring the frame rates up without compromising the visuals too much.

On XRocks Jane can knock the game down to 1440p, and get ~75fps. FSR costs her 10% of her frame budget, meaning she can get over 60fps, and uprez the 1440p image to a 4k image that looks as good as the SteamBoat version. Ship it!

Now that she's got a TU solution, Jane takes a look at that Blake port. On the slower hardware, FSR costs her 20% of her frame budget. At 1440p she can get ~37fps. After FSR uprez, she can get 4k30fps. These are the OG XRocks numbers! That's shippable! But can she do better?

Jane tries knocking down the resolution to 1080p. That gets her 60fps! But then FSR's 20% hit takes that down to 48fps, and on top of that the FSR'd image looks terrible. But Blake has this DLSS thing, a hardware accelerated version of FSR. She tries that out. It actually looks good at 1080p. Basically as good as the XRocks version. But also DLSS is twice as fast - it only costs 10% of the frame budget, despite the hardware drop. That's 54fps. That's spitting distance of 60fps! Jane takes some particularly taxing particle effects, and cuts them in half on Blake - that gets her 60fps. Ship it!

On XRocks, the FSR version of the game runs at 4k60fps. Some reviewers note tiny visual artifacts, a few others actually think the level of detail is better than PC. It's a huge win

On Blake, the DLSS version also runs at 4k60fps, but particle effects run at 30fps. Reviewers say things like "you would only notice if you played the games side by side" and follow it up with "technically, this is a slight knock on Blake, but being able to take this incredible game with you on the go makes this the definitive version of the game"

This is how DLSS closes the gap even in a world where FSR exists. Going by this very artificial example, if FSR didn't exist, DLSS would actually let Blake overcome the XRocks, which is bonkers, because the XRocks has twice the power. But even with FSR, the specific advantages of DLSS let Blake stay within spitting distance.

The actual world will be more complicated, both on the hardware side and the degree to which anyone takes advantage in the software. But this narrative that FSR eliminates any advantages that DLSS gives Drake doesn't actually hold up.
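The arithmetic in the Pac-Ma'am example can be sketched in a few lines of Python. This is only a toy model under the post's own assumptions: frame rate scales linearly with GPU power and inversely with pixel count, and an upscaler takes a flat fraction of the frame rate. The function names and the dictionary layout are made up for illustration. Note that strict pixel-count scaling puts the 1440p figures slightly below the post's rounded ~75 and ~37, while the 1080p figures (60, 48, 54) match.

```python
# Toy model of the hypothetical above. All names are illustrative;
# the cost fractions (10% / 20%) are the post's assumptions, not measurements.

PIXELS = {
    "4k": 3840 * 2160,
    "1440p": 2560 * 1440,
    "1080p": 1920 * 1080,
}

# Native 4K frame rate for each imaginary machine, as given in the post.
NATIVE_4K_FPS = {"SteamBoat": 60.0, "XRocks": 30.0, "Blake": 15.0}


def render_fps(machine: str, resolution: str) -> float:
    """Frame rate before upscaling, assuming cost scales with pixel count."""
    return NATIVE_4K_FPS[machine] * PIXELS["4k"] / PIXELS[resolution]


def upscaled_fps(machine: str, resolution: str, upscaler_cost: float) -> float:
    """Frame rate after the upscaler takes a flat fraction of the budget."""
    return render_fps(machine, resolution) * (1.0 - upscaler_cost)


if __name__ == "__main__":
    cases = [
        ("XRocks", "1440p", 0.10, "FSR"),
        ("Blake", "1440p", 0.20, "FSR"),
        ("Blake", "1080p", 0.20, "FSR"),
        ("Blake", "1080p", 0.10, "DLSS"),
    ]
    for machine, res, cost, name in cases:
        fps = upscaled_fps(machine, res, cost)
        print(f"{machine} {res} + {name}: {fps:.1f} fps")
```

Changing the cost fractions or the native frame rates shows how sensitive the "Blake keeps up with XRocks" conclusion is to those two assumptions.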


Oldpuck, I just want you to know I mashed that "yeah" button so dang hard that the counter updated from 2 => 4. That's how much butt your Pac-Ma'am post just kicked

I had not clearly understood that FSR and DLSS largely performed the same operations, but that the latter basically offloads to the added hardware.

Is it right to assume the downside to DLSS/"tensor co-processing" is cost both monetarily and battery? Does it run cooler or hotter than FSR to achieve the same target?


> I don't think the next Switch will close the gap, if anything I think it will get bigger as the PS5 and Xbox Series are not bottlenecked by Jaguar CPUs.

I don't agree. Hitting CPU limitations is something I still think Series and PS5 games will struggle with. Hell, the only "game" that does hit the limit is The Matrix Awakens, and that's only because it blows its ass out for RT effects.

davec00ke

Octorok

> I don't agree. Hitting CPU limitations is something I still think Series and PS5 games will struggle with. Hell, the only "game" that does hit the limit is The Matrix Awakens, and that's only because it blows its ass out for RT effects.

Both Xbox Series and PS5 CPUs will be significantly more powerful than the next Switch.


Deleted member 887

Guest

> Oldpuck, I just want you to know I mashed that "yeah" button so dang hard that the counter updated from 2 => 4. That's how much butt your Pac-Ma'am post just kicked

Glad I wasn't doing it just for myself!

> I had not clearly understood that FSR and DLSS largely performed the same operations, but that the latter basically offloads to the added hardware.

The inputs and the outputs are basically the same, the middle step is very different. FSR is all human-tuned and runs on any GPU. DLSS uses AI, and needs AI acceleration.

> Is it right to assume the downside to DLSS/"tensor co-processing" is cost both monetarily and battery? Does it run cooler or hotter than FSR to achieve the same target?

This kind of analysis is hard in ordinary times, but FSR 2.0 is literally days old, so there is no real data on how much power the tensor cores are costing you running DLSS, versus how much the shader cores are costing you running FSR.

> Both Xbox Series and PS5 CPUs will be significantly more powerful than the next Switch.

So? If the games don't often hit the limit of the CPUs, it doesn't matter how much more powerful they are. I don't think there will be so many games where "CPU" is the reason they're not on Switch.

FSR being as good as it is is great news for everyone. Hardware agnostic and open source solutions are awesome. NVIDIA won't rest on their laurels with DLSS, though, and I wouldn't be surprised if the gap between both technologies increased again in the future. Having dedicated hardware to perform a task is usually a good bet.

ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

> I don't think the next Switch will close the gap, if anything I think it will get bigger as the PS5 and Xbox Series are not bottlenecked by Jaguar CPUs.

> Both Xbox Series and PS5 CPUs will be significantly more powerful than the next Switch.

Considering how low they’re clocked, I doubt this.

The only major saving grace they have is SMT being enabled; besides that, the Zen 2 CPUs are not “significantly” more performant than the A78 CPU cores at the clocks the other consoles are running at. SMT scales differently depending on the application: some make better use of it while others do not.

If it was Zen 2 vs the A57, I’d agree. But do you actually think they’ll go with A57? And please do not use the tired “because Nintendo” logic you apply in your posts, it’s not constructive at all and is more console warring than an actual tech discussion.

ShadowFox08

Paratroopa

> Both Xbox Series and PS5 CPUs will be significantly more powerful than the next Switch.

Worst case scenario, the gap will be similar to Switch vs Xbox One/PS4 in CPU power. I don't think it's the most likely scenario. I do think it will be better than the 3-3.5x gap. Maybe 2-2.5x. We'll see.

I'm thinking Switch 2 will be 8 A78 CPU cores, but not expecting 2GHz for every core. In contrast, Series S is 3.4 to 3.6GHz per core (8 cores).

If Drake really ends up going to 5nm, they could theoretically exceed the 2GHz CPU speed... which I expect they won't do.

--------------------------------------------------------

This is more related to Steam Deck but.. could be relevant to how Orin could perform on a 5nm node.

Creators of AyaNeo2 claim the GPU will be as powerful as GTX 1050 Ti.

2x faster than Steam Deck (3.3 vs 1.6 TFLOPs) while running 15-28 watts on the APU on 5nm.

50% more GPU cores, with higher clocks for each core vs Steam Deck

AYANEO2 announced with Ryzen 7 6800U "Rembrandt" APU featuring RDNA2 graphics twice as fast as SteamDeck - VideoCardz.com


> This is more related to Steam Deck but.. could be relevant to how Orin could perform on a 5nm node.
>
> Creators of AyaNeo2 claim the GPU will be as powerful as GTX 1050 Ti.
>
> 2x faster than Steam Deck (3.3 vs 1.6 TFLOPs) while running 15-28 watts on the APU on 5nm.
>
> 50% more GPU cores, with higher clocks for each core vs Steam Deck

I think Drake could theoretically have more performance than the Ryzen 6800U if fabricated using TSMC's N5 process node, considering the Ryzen 6800U is fabricated using TSMC's N6 process node, which has the same performance and power efficiency as TSMC's N7 process node, the only improvement over N7 being 18% higher logic density.

Maybe Drake could have comparable performance with the Ryzen 6800U if fabricated using Samsung's 5LPE process node, since Samsung's 5LPE process node is at best on par with TSMC's N7P process node.

davec00ke

Octorok

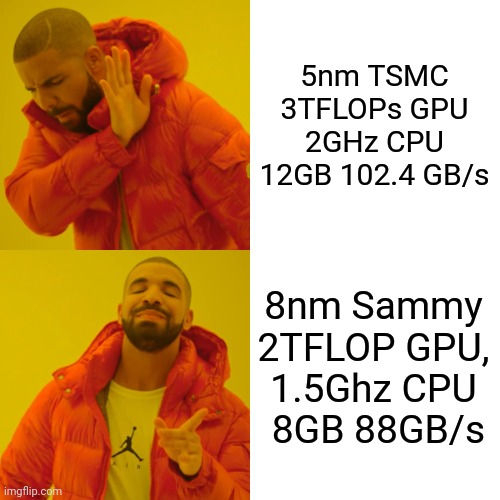

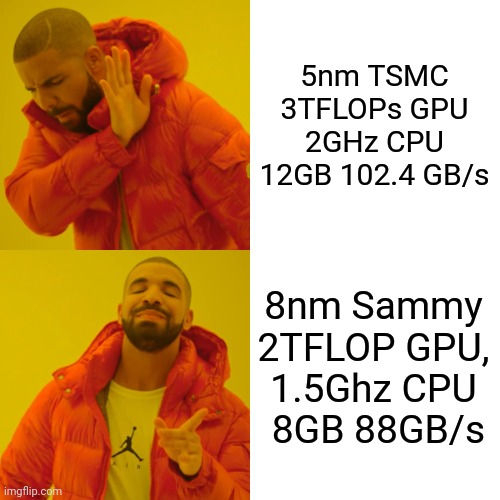

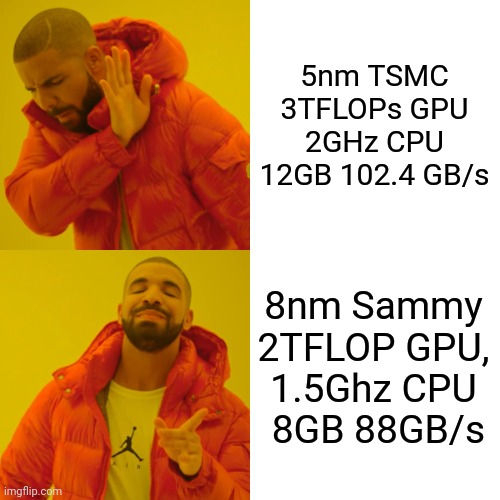

> I think Drake could theoretically have more performance than the Ryzen 6800U if fabricated using TSMC's N5 process node, considering the Ryzen 6800U is fabricated using TSMC's N6 process node, which has the same performance and power efficiency as TSMC's N7 process node, the only improvement over N7 being 18% higher logic density.
>
> Maybe Drake could have comparable performance with the Ryzen 6800U if fabricated using Samsung's 5LPE process node, since Samsung's 5LPE process node is at best on par with TSMC's N7P process node.

What if it uses Samsung 8nm, which is worse than TSMC N7?

ShadowFox08

Paratroopa

> What if it uses Samsung 8nm which is worse than TSMC N7

Then the whole nintendoverse is going to die

probably not

> I think Drake could theoretically have more performance than the Ryzen 6800U if fabricated using TSMC's N5 process node, considering the Ryzen 6800U is fabricated using TSMC's N6 process node, which has the same performance and power efficiency as TSMC's N7 process node, the only improvement over N7 being 18% higher logic density.
>
> Maybe Drake could have comparable performance with the Ryzen 6800U if fabricated using Samsung's 5LPE process node, since Samsung's 5LPE process node is at best on par with TSMC's N7P process node.

So somehow it can achieve double the GPU performance from newer AMD architecture alone vs Steam Deck's 7nm process... while having slightly higher power draw <_<

> Then the whole nintendoverse is going to die
>
> probably not

Keep in mind RDNA 2 CUs aren't directly comparable to Ampere SMs, since RDNA 2 has 64 stream processors per CU and Ampere has 128 CUDA cores per SM. Van Gogh has 8 CUs, or 512 stream processors. Ryzen 7 6800U has 12 CUs, or 768 stream processors. Drake has 12 SMs, or 1536 CUDA cores.
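The shader-core counts above follow directly from the per-unit figures, which a short Python sketch makes explicit. The helper names are hypothetical, and the 1.0 GHz clock in the last line is an arbitrary assumption used only to show how the usual peak FP32 formula (2 FLOPs per core per clock) scales. Equal core counts or equal peak TFLOPS across two architectures do not imply equal real-world performance.

```python
# Shader-core counts from the per-unit figures quoted above.
# The clock in the usage example is an assumption for illustration only.

STREAM_PROCESSORS_PER_CU = 64   # RDNA 2
CUDA_CORES_PER_SM = 128         # Ampere


def shader_cores(units: int, cores_per_unit: int) -> int:
    """Total shader cores for a GPU with the given number of CUs or SMs."""
    return units * cores_per_unit


def peak_fp32_tflops(cores: int, clock_ghz: float) -> float:
    """Peak FP32 throughput: one fused multiply-add (2 FLOPs) per core per clock."""
    return cores * 2 * clock_ghz / 1000.0


gpus = {
    "Van Gogh (Steam Deck)": shader_cores(8, STREAM_PROCESSORS_PER_CU),
    "Ryzen 7 6800U": shader_cores(12, STREAM_PROCESSORS_PER_CU),
    "Drake": shader_cores(12, CUDA_CORES_PER_SM),
}

if __name__ == "__main__":
    for name, cores in gpus.items():
        print(f"{name}: {cores} shader cores")
    # Hypothetical 1.0 GHz clock, chosen only to show how the formula scales.
    tflops = peak_fp32_tflops(gpus["Drake"], 1.0)
    print(f"Drake at an assumed 1.0 GHz: {tflops:.2f} TFLOPS")
```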

Å

> I don't agree. Hitting CPU limitations is something I still think Series and PS5 games will struggle with. Hell, the only "game" that does hit the limit is The Matrix Awakens, and that's only because it blows its ass out for RT effects.

That's probably because most of them still run on Jaguars.

> Keep in mind RDNA 2 CUs aren't directly comparable to Ampere SMs, since RDNA 2 has 64 stream processors per CU and Ampere has 128 CUDA cores per SM. Van Gogh has 8 CUs, or 512 stream processors. Ryzen 7 6800U has 12 CUs, or 768 stream processors. Drake has 12 SMs, or 1536 CUDA cores.

How does a stream processor compare to a CUDA core?

ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

> Then the whole nintendoverse is going to die
>
> probably not

But the amount of RAM doesn’t determine that speed in this case, or the node.

> So somehow it can achieve double the GPU performance from newer AMD architecture alone vs Steam Deck's 7nm process... while having slightly higher power draw <_<

Are you referring to Drake here or the 6800U APU?

Because if it’s Drake, the Steam Deck draws almost 30W.

Drake’s chances of being that high are so low.

ShadowFox08

Paratroopa

> I think Drake could theoretically have more performance than the Ryzen 6800U if fabricated using TSMC's N5 process node, considering the Ryzen 6800U is fabricated using TSMC's N6 process node, which has the same performance and power efficiency as TSMC's N7 process node, the only improvement over N7 being 18% higher logic density.
>
> Maybe Drake could have comparable performance with the Ryzen 6800U if fabricated using Samsung's 5LPE process node, since Samsung's 5LPE process node is at best on par with TSMC's N7P process node.

So somehow it has double the performance from newer architecture alone with a slight node upgrade vs Steam Deck's 7nm process... while having near identical power draw.

> But the amount of RAM doesn’t determine that speed in this case or the node
>
> Are you referring to Drake here or the 6800U apu?
>
> Because if it’s to Drake, the Steam deck draws like 30W almost
>
> Drake’s chance of being that high are so low.

Oh, I know the RAM I listed has no effect on total bandwidth..

Talking about the 6800U APU. 15 to 28 watts is pretty even with Steam Deck now, actually.

ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

> oh I know the RAM i listed has no effect on total bandwidth..
>
> Talking about the 6800 apu. 15 to 28 watts is pretty even with steam deck now actually.

The Steam Deck APU only draws up to 15W and can be as low as 4W; the whole device draws like 27-30W.

If the 6800U APU draws 15-28W for the APU alone, the whole device would not draw at most 28W; it would probably draw closer to 37-45W.

So it’s not really “slightly”
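A back-of-the-envelope version of that estimate, as a rough Python sketch: take the Steam Deck figures quoted above to get the non-APU overhead, then add that overhead to the 6800U's APU range. Treating the overhead (screen, RAM, storage, conversion losses) as the same on both devices is an assumption made here for illustration, and the function names are hypothetical. Under that assumption a 28W APU lands at roughly 40-43W for the whole device, inside the 37-45W range suggested above.

```python
# Rough whole-device power estimate. Figures are the ones quoted in the post;
# treating the non-APU overhead as constant across devices is an assumption.


def non_apu_overhead(device_watts: float, apu_watts: float) -> float:
    """Power drawn by everything except the APU (screen, RAM, storage, losses)."""
    return device_watts - apu_watts


def estimate_device_watts(apu_watts: float, overhead_watts: float) -> float:
    """Whole-device draw for a given APU draw and fixed overhead."""
    return apu_watts + overhead_watts


# Steam Deck: APU up to 15 W, whole device roughly 27-30 W.
overhead_low = non_apu_overhead(27.0, 15.0)
overhead_high = non_apu_overhead(30.0, 15.0)

if __name__ == "__main__":
    for apu in (15.0, 28.0):
        low = estimate_device_watts(apu, overhead_low)
        high = estimate_device_watts(apu, overhead_high)
        print(f"6800U APU at {apu:.0f} W -> device roughly {low:.0f}-{high:.0f} W")
```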

> Thats probably because most of them still run on jaguars.

Even once devs drop last gen, I'm not sure if there will be too much of a push on the CPU side. The Jaguar cores were shit, but from a game design perspective, they weren't that limiting.

Outside of heavy physics simulators, massive numbers of AI-driven actors, or boundary pushing RT from Lumen, I still struggle to see what kind of game a 6-8 core A78 can't do.

> Even once devs drop last gen, I'm not sure if there will be too much of a push on the cpu side. The jaguar cores were shit, but from a game design perspective, they weren't that limiting.
>
> Outside of heavy physics simulators, massive number of ai driven actors, or boundary pushing RT from Lumen, I still struggle to see what kind of game a 6-8 core A78 can't do

In a shooter, for example, there is plenty of room for more realistic physics and destruction mayhem. If you shoot a building with an RPG in real life, it doesn’t just barely leave a mark.

- Pronouns

- He/Him

> In a shooter for example, there is plenty of room for more realistic physics and destruction mayhem. If you shoot a building with an rpg in real life, it doesn’t barely leave a mark.

The main issue is that it requires a ton more work from developers too. Not everyone is going to want to get even crazier with their budgets and development time.

ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

> In a shooter for example, there is plenty of room for more realistic physics and destruction mayhem. If you shoot a building with an rpg in real life, it doesn’t barely leave a mark.

They have the GPU for that.

Nvidia GPUs aren’t a slouch when it comes to physics simulations, and the RT cores are essentially physics accelerators (I’m overly simplifying this).

It does require more work if they have the CPU doing this though, but it isn’t so far out of the realm.

ShaunSwitch

Moblin

Just thinking about CPU grunt, if Nintendo reserves one core for the OS, we are talking about 2-3 times improvement in terms of CPU headroom for the OS. Do we think Nintendo will use it?

I was going to say maybe they offload voice chat back to the console, but that was never a CPU issue as voice chat's footprint is tiny.

With how simple the switch OS is, can you think of any features they might add that will utilise that extra CPU headroom without making the OS feel less snappy?

The only one I can think of right now is game previews for owned titles. So if you hover over an icon in the switch OS and you have screenshot or video saved to your device from that game it will cycle them in the thumbnail area.

It would require a lot of development, but the tensor cores' inference performance could be used to have a very snappy voice assistant built into the OS. Would be great for a digital library. "Hey Mario, launch Dead Cells." "I'mma sorry, you don't own that title, would you like me to take you to its eShop page?" "Hey Mario, how much time have I spent playing The Binding of Isaac?" "Mama Mia! You have spent approximately 1200 hours playing The Binding of Isaac."

Damn it, now I want that voice assistant.

Oh and themes. It's maddening that I have such an awesome BOTW theme on my 3DS, opening the 3DS and hearing that Sheikah Slate sound, then on my Switch, where the title is playable, it's just a grey or white theme.


> They have the GPU for that.
>
> Nvidia GPUs aren’t a slouch when it comes to physics simulations, and the RT cores are essentially physics accelerators (I’m overly simplifying this).
>
> It does require more work if they have the CPU doing this though, but it isn’t so far out of the realm.

Speaking of that, are Switch games actually using GPU physics to a significant degree?

> Speaking of that, are Switch games actually using gpu physics to a significant degree?

WarioWare: Get It Together! uses Nvidia's PhysX, but I'm not sure if it makes use of the GPU.

l

> WarioWare Get It Together use Nvidia's PhysX, but not sure if it makes use of the GPU.

It probably does.

ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

> Speaking of that, are Switch games actually using gpu physics to a significant degree?

That I know of, no. But I don’t think many do.

> WarioWare Get It Together use Nvidia's PhysX, but not sure if it makes use of the GPU.

It is the GPU if it is using PhysX.

"How does a stream processor compare to a CUDA core?"

Stream processors and CUDA cores are comparable in the sense that both are shader cores. Although shader cores share the same fundamental function, and therefore have similarities, they also differ in design depending on the architecture.

Source about WarioWare using PhysX for anyone curious: (list extracted from the game executable)

SDK MW+Nintendo+NintendoWare_Atk-10_4_1-Release

SDK MW+Nintendo+NintendoWare_G3d-10_4_1-Release

SDK MW+Nintendo+NintendoWare_Ui2d-10_4_1-Release

SDK MW+Nintendo+NintendoWare_Font-10_4_1-Release

SDK MW+Nintendo+NintendoWare_Vfx-10_4_1-Release

SDK MW+Nintendo+NintendoSDK_gfx-10_4_1-Release

SDK MW+Nintendo+NintendoWare_Bezel_Engine-1_50_0-Release

SDK MW+Nintendo+NintendoWare_Fxt-1_50_0-Release

SDK MW+Nintendo+NintendoSDK_libcurl-10_4_1-Release

SDK MW+Nintendo+NintendoSDK_libz-10_4_1-Release

SDK MW+Nintendo+NEX_RK-4_6_4

SDK MW+Nintendo+NEX_R2-4_6_4

SDK MW+Nintendo+NEX_DS-4_6_4

SDK MW+Nintendo+NEX_UT-4_6_4

SDK MW+Nintendo+NEX-4_6_4-

SDK MW+Nintendo+PiaClone-5_29_0

SDK MW+Nintendo+PiaCommon-5_29_0

SDK MW+Nintendo+Pia-5_29_0

SDK MW+Nintendo+PiaFramework-5_29_0

SDK MW+Nintendo+PiaLan-5_29_0

SDK MW+Nintendo+PiaLocal-5_29_0

SDK MW+Nintendo+PiaReckoning-5_29_0

SDK MW+Nintendo+PiaSession-5_29_0

SDK MW+Nintendo+PiaSync-5_29_0

SDK MW+Nintendo+PiaTransport-5_29_0

SDK MW+Nintendo+PiaNex-5_29_0-forNEX-4_6_4

SDK MW+Nintendo+NEX_MM-4_6_4

SDK MW+Nintendo+NEX_SS-4_6_4

SDK MW+NVIDIA+PhysX-3_4_0-release

"In a shooter, for example, there is plenty of room for more realistic physics and destruction mayhem. If you shoot a building with an RPG in real life, it doesn’t just barely leave a mark."

Everyone likes to bring that up, but we had those games even back on the PS2 with Red Faction. Then Battlefield attempted to make widespread destruction more of a mainstream feature, but it never took. Maybe in a single-player, level-based game. Or Just Cause 5; Just Cause 4 really suffered under those Jaguar cores.

But Avalanche has to want to come back.

Teal'c

Shriekbat

It would be amazing if Nintendo patched a few games to take advantage of FSR 2.0, and then we could compare

Would this be so difficult/expensive for developers?

I wonder if some of the upcoming games (No Man's Sky, Hogwarts Legacy and the ARK revamp) will make use of it.

Worst case scenario, the gap will be similar to Switch vs Xbox One/PS4 in CPU power. I don't think it's the most likely scenario. I do think it will be better than the 3-3.5x gap. Maybe 2-2.5x. We'll see.

I'm thinking Switch 2 will have 8 A78 CPU cores, but I'm not expecting 2 GHz for every core. In contrast, Series S is 3.4 to 3.6 GHz per core (8 cores).

If Drake really ends up going to 5nm, they could theoretically exceed the 2 GHz CPU speed... which I expect they won't do.

--------------------------------------------------------

This is more related to Steam Deck, but it could be relevant to how Orin could perform on a 5nm node.

The creators of the AYANEO 2 claim the GPU will be as powerful as a GTX 1050 Ti.

2x faster than Steam Deck (3.3 vs 1.6 TFLOPs) while running at 15-28 watts on the APU on 5nm.

50% more GPU cores, with higher clocks for each core vs Steam Deck.

AYANEO2 announced with Ryzen 7 6800U "Rembrandt" APU featuring RDNA2 graphics twice as fast as SteamDeck - VideoCardz.com

The Steam Deck is really huge.

With better distribution and a tempting price I'd like to try an AYANEO 2, even though it looks really thick.

I wonder what Drake's size will be.

"Everyone likes to bring that up, but we had those games even back on the PS2 with Red Faction. Then Battlefield attempted to make widespread destruction more of a mainstream feature, but it never took. Maybe in a single-player, level-based game. Or Just Cause 5; Just Cause 4 really suffered under those Jaguar cores.

"But Avalanche has to want to come back."

Just saying, there has never been a console gen where devs didn't make the most of the computational resources they had to work with. I do think games will be more CPU-heavy when cross-gen ends.

"So somehow it can achieve double the GPU performance from newer AMD architecture alone vs Steam Deck's 7nm process... while having slightly higher power draw <_<"

The GPU on Van Gogh and the GPU on the Ryzen 6800U are both based on the RDNA 2 architecture.

And remember when I said the only improvement TSMC's N6 process node has over TSMC's N7 process node is 18% higher logic density? AMD increased the number of CUs by 1.5x (8 CUs vs 12 CUs) and the GPU frequency by 1.5-2.4x (1-1.6 GHz vs 2.4 GHz) when comparing the GPU on Van Gogh with the GPU on the Ryzen 6800U.
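The CU and clock figures above can be turned into theoretical FP32 throughput with the usual back-of-the-envelope formula. This is only a rough sketch, assuming 64 shaders per RDNA 2 CU and 2 FLOPs (one fused multiply-add) per shader per clock; the function name is made up for illustration, and the clocks are the peak values quoted in this post rather than sustained clocks.

```python
def fp32_tflops(compute_units, clock_ghz, shaders_per_cu=64, flops_per_clock=2):
    """Theoretical peak FP32 throughput in TFLOPS (not real-world performance)."""
    return compute_units * shaders_per_cu * flops_per_clock * clock_ghz / 1000.0

# Figures quoted in the post: 8 CUs at up to 1.6 GHz vs 12 CUs at 2.4 GHz.
van_gogh = fp32_tflops(8, 1.6)
rembrandt = fp32_tflops(12, 2.4)

print(f"Van Gogh (Steam Deck): {van_gogh:.2f} TFLOPS")
print(f"Ryzen 6800U GPU:       {rembrandt:.2f} TFLOPS")
print(f"Ratio:                 {rembrandt / van_gogh:.2f}x")
```

At those peak clocks the ratio works out to 2.25x (1.5x the CUs times 1.5x the clock), so the claimed doubling is accounted for by a wider, faster-clocked GPU rather than by the process node.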

10k

Nintendo Switch RTX

- Pronouns

- He/Him

"I don't think the next Switch will close the gap; if anything, I think it will get bigger, as the PS5 and Xbox Series are not bottlenecked by Jaguar CPUs."

So long as the CPU can get close to the Series S CPU, Switch should be fine with ports.

If Drake really is a 12 SM machine with 6-8 A78 CPU cores and has 6GB+ of RAM, games won't be skipping it due to technical reasons.

"So long as the CPU can get close to the Series S CPU, Switch should be fine with ports.

"If Drake really is a 12 SM machine with 6-8 A78 CPU cores and has 6GB+ of RAM, games won't be skipping it due to technical reasons."

We've had no leaks indicating anything about the CPU, other than that it’s likely to be A78. For all we know it’s quad core.

Nothing about RAM either, for that matter. The only solid info we have is about the GPU, and what we can extrapolate from Orin.

Aether

Kremling

"Literally nothing keeps them from doing that.

"They wouldn't have even lied, they'll say their current forecast was for currently announced products."

Yeah... ok. So essentially: this says nothing, and it could still come in winter/spring. Oh well.

"Nothing about RAM either, for that matter."

Actually, LiC (and Thraktor) found from the illegal Nvidia leaks that Drake has a maximum RAM bus width of 128-bit, compared with Orin's maximum of 256-bit.
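For a sense of what the bus width means in practice, peak memory bandwidth is just the bus width in bytes times the transfer rate. A rough sketch, assuming LPDDR5 at 6400 MT/s on both chips; Drake's actual memory type and speed are not known from the leaks, so that rate is purely an assumption, and the function name is only illustrative.

```python
def peak_bandwidth_gbs(bus_width_bits, transfer_rate_mts):
    """Theoretical peak bandwidth in GB/s: bytes per transfer x transfers per second."""
    return bus_width_bits / 8 * transfer_rate_mts / 1000.0

ASSUMED_RATE_MTS = 6400  # assumption: LPDDR5-6400, not confirmed for Drake

wide_bus = peak_bandwidth_gbs(256, ASSUMED_RATE_MTS)    # Orin's maximum bus width
narrow_bus = peak_bandwidth_gbs(128, ASSUMED_RATE_MTS)  # Drake's maximum bus width

print(f"256-bit: {wide_bus:.1f} GB/s")
print(f"128-bit: {narrow_bus:.1f} GB/s")
```

Whatever the real memory speed turns out to be, halving the bus width halves the peak bandwidth. With a 128-bit bus at 5500 MT/s the same formula gives 88 GB/s, the figure quoted for the Steam Deck's RAM elsewhere in this thread.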

ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

I think the chance of it being 4 cores again is pretty low. Not zero, just low.

They were willing to upgrade the Wii CPU core count from 1 to 3 when devs kept complaining about the CPU of the Wii. On top of also increasing the clock frequency and adding a third level of memory that acted like a System Level Cache (32MB embedded DRAM). And with the Switch, devs are also complaining about the CPU.

If it has 6 CPU cores, I assume they would have 2-4 little cores for the OS as well. Or just the 6, and 5 available for games.

Likewise if it has 8 cores, 7 for games and 1 for the OS.

I understand that Nintendo does their own thing, and does not aim for the bleeding edge or the very top level of performance, nor do they have to. They do, however, listen to developer feedback from first and third parties, and have done so numerous times before. They don’t do a “fuck you haha” to developers, like people seem to think. If you want the closest thing to a “FU”, look at the CELL or the Emotion Engine; the latter was far worse than the CELL, and Sony barely provided help at the time.

"Yeah... ok. So essentially: this says nothing, and it could still come in winter/spring. Oh well."

Nintendo has updated their forecast before, FWIW, but I don’t remember if they gave any grand explanation for it. I think the most recent example was “due to the semiconductor shortage, we had to adjust our forecast down to 23M units for the fiscal year”.

Deleted member 1324

Guest

So I ended up buying my mate's Steam Deck, as mentioned earlier in the thread.

Some thoughts, if anyone cares, since it's an up-to-date handheld device seen from the view of someone who's waited on the next Switch for 3 years and decided to break and buy a Steam Deck lol...

CPU -

For third-party games, 60fps is extremely taxing on the CPU: it ramps up to 100% utilisation and produces a lot of heat, and then, as you'd expect, the fan ramps up to 100%, meaning significant noise even after the latest patch to make it quieter. You also get an hour less battery life playing at 60fps versus using the 30fps cap. Keep in mind this CPU is basically the PS5/Series console CPU cut in half, with 4 cores / 8 threads, and can boost up to 3.5 GHz.

GPU -

Image quality-wise, the colours obviously aren't as good as on the OLED screen, but even after lowering the resolution to 720p and then using the AMD trick (FSR upscaling), it still looks crisp. DLSS is going to be great, as it's much better by all accounts.

Memory -

16GB at 88GB/s seems phenomenal and plenty for most games. This could also be why games load so fast? For future-proofing I'd love to see this exact RAM from Nintendo, considering some will have to be used for the OS and background recording. 12GB dedicated to games would be great!

Storage -

Although I only have the standard eMMC storage and not an SSD, it loads really, really fast compared to a PS4 Pro, and even faster than PS5 in Path of Exile, for instance. I'm also using a 128GB SD card, so maybe that makes it much faster than the PS4 Pro? Overall, after using what is essentially a PC handheld with nowhere near as much per-game optimisation, I'm no longer too worried if the next Switch doesn't use an SSD.

Build -

Although quite a bit bigger than the OLED Switch it's much, much more comfortable to hold as someone with large hands. Feels comparable to the OLED with the Hori Split Pad Pro Joycons. It was a LOT lighter than I anticipated.

Personal Conclusion -

Overall it's an extremely impressive piece of kit especially as I was lucky enough to bag it for the same price I paid for my OLED Switch (£330).

It has worried me slightly for future third-party games, though, because I don't feel Nintendo will want to push this amount of RAM and these clock speeds, given the heat, fan noise and shorter battery life that come with them, even if it's on a smaller die. It's more than enough for a lot of PS360 + PS4/XBO games at 720p/60fps.

If Nintendo can hit this CPU + GPU + RAM performance with DLSS on top, then I shall be delighted, though!

I’m sure you know, but I just need to point out that the next Switch doesn’t actually need specs as good for similar real-world performance, as natively optimized games run a lot more efficiently than the Windows versions do through Proton.
