The specs for the PI3USB30532 chip mention the following options:

- USB 3.2 Gen 1 signal only

- 1 lane of USB 3.2 Gen 1 signal and 2 DisplayPort 1.2 channels

- 4 DisplayPort 1.2 channels

Only the second and third options apply to the Nintendo Switch and the OLED model, since the DisplayPort 1.2 signal is converted to an HDMI 1.4b signal for the Nintendo Switch, or an HDMI 2.0b signal for the OLED model, for TV mode, although HDMI 2.0b should be backwards compatible with HDMI 1.4b.

And DisplayPort 1.2 has a max data transfer rate of 21.6 Gbps.

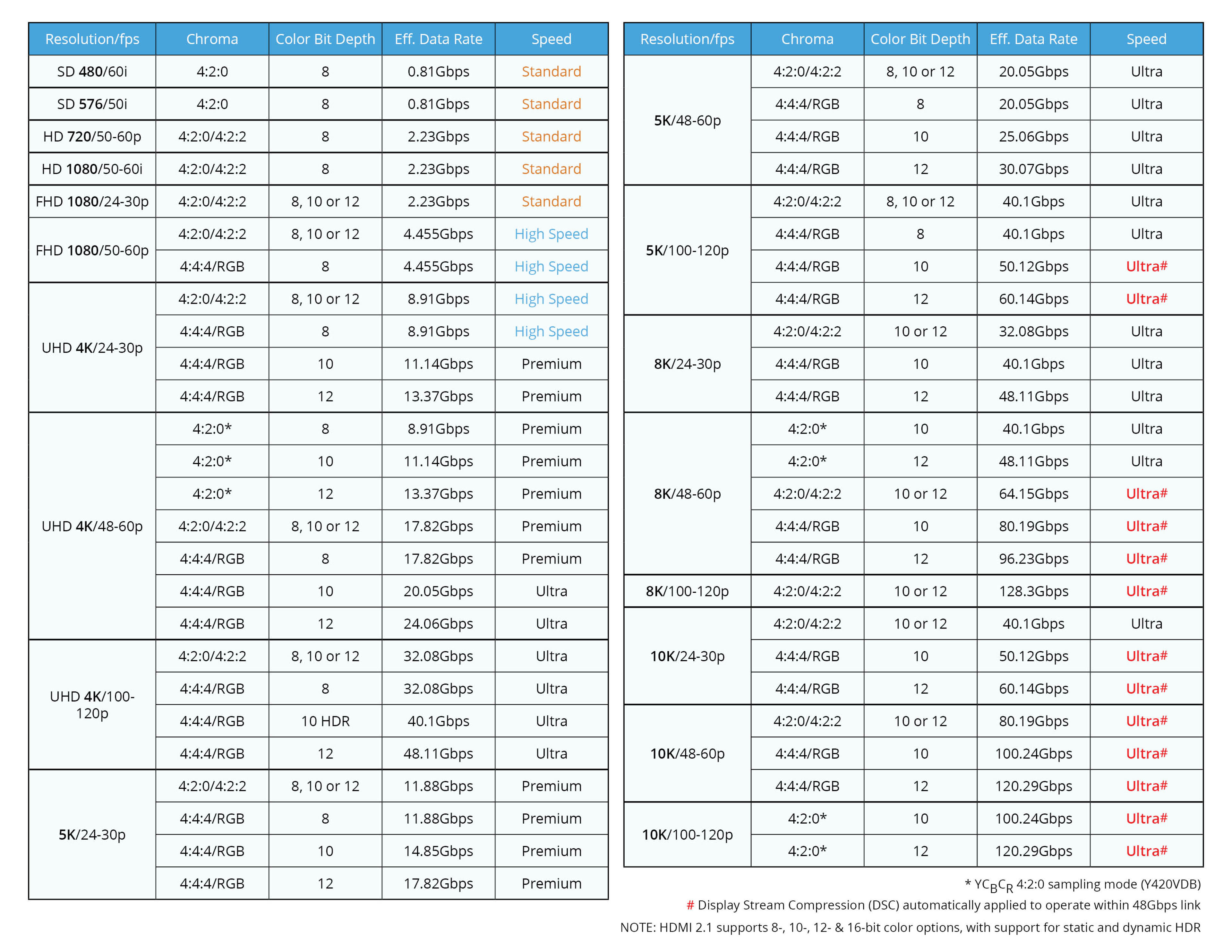

So assuming 4 DisplayPort 1.2 channels have a max data transfer rate of 21.6 Gbps, and 2 DisplayPort 1.2 channels have a max data transfer rate of 10.8 Gbps, the PI3USB30532 chip should provide a sufficient amount of bandwidth required for 4K 60 Hz for the second and third options, going by the chart provided by the HDMI Forum below.

Just don't expect full chroma support.
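To put rough numbers on that, here's a quick back-of-the-envelope sketch in Python. The assumptions here are mine, not from the chip specs: 8b/10b line coding on the DisplayPort 1.2 link (so only 80% of the raw rate carries video), the standard 4K 60 Hz timing with a 594 MHz pixel clock (blanking included), and 8 bits per component. It only checks raw bandwidth; whether a given chroma format is actually supported end to end is a separate question.

```python
# Rough bandwidth check: does 4K 60 Hz fit in 2 or 4 DisplayPort 1.2 lanes?
# Assumptions (not from the chip specs): 8b/10b coding, 594 MHz pixel clock,
# 8 bits per component.

RAW_GBPS_PER_LANE = 21.6 / 4       # 5.4 Gbps raw per DisplayPort 1.2 lane
ENCODING_EFFICIENCY = 8 / 10       # 8b/10b line coding overhead
PIXEL_CLOCK_HZ = 594_000_000       # 4400 x 2250 total pixels x 60 Hz

# Average bits per pixel for each chroma format at 8 bits per component.
BITS_PER_PIXEL = {"RGB / 4:4:4": 24, "4:2:2": 16, "4:2:0": 12}


def effective_gbps(lanes):
    """Usable video bandwidth of the link after encoding overhead."""
    return lanes * RAW_GBPS_PER_LANE * ENCODING_EFFICIENCY


def required_gbps(bits_per_pixel):
    """Data rate needed to carry 4K 60 Hz at the given bits per pixel."""
    return PIXEL_CLOCK_HZ * bits_per_pixel / 1e9


def fits(lanes, bits_per_pixel):
    """True if the format's data rate fits in the link's usable bandwidth."""
    return required_gbps(bits_per_pixel) <= effective_gbps(lanes)


if __name__ == "__main__":
    for lanes in (2, 4):
        print(f"{lanes} lanes: {effective_gbps(lanes):.2f} Gbps usable")
        for name, bpp in BITS_PER_PIXEL.items():
            verdict = "fits" if fits(lanes, bpp) else "does not fit"
            print(f"  4K60 {name}: {required_gbps(bpp):.3f} Gbps, {verdict}")
```

Under those assumptions, 2 lanes only have room for 4K 60 Hz with 4:2:0 chroma subsampling, while 4 lanes have room for full 4:4:4.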

And dataminers noticed that Nintendo added "4kdp_preferred_over_usb30" when Nintendo released system update 12.0.0 around last year. The OLED model's dock features the RTL8154B chip for the LAN port, which Realtek says uses USB 2.0.

So Nintendo does have the option to use 4 DisplayPort 1.2 channels for TV mode, which should allow for better chroma support at 4K 60 Hz.

Of course, theoretically speaking, Nintendo could replace the PI3USB30532 chip in the console's motherboard with the PI3USB31532 chip, and replace the RTD2172N chip in the dock's motherboard with the RTD2173 chip, if Nintendo wants to support a refresh rate higher than 120 Hz, and VRR via HDMI 2.1 instead of HDMI 2.0b (via Nvidia G-Sync).

One potential problem with also putting RAM chips on the back of the motherboard is that it could necessitate additional cooling solutions (e.g. another heat sink, another fan, another copper heat pipe, another heat spreader) on the back of the motherboard. That could require the console to be thicker and heavier, which could be problematic if Nintendo wants the form factor of the new console to be very similar to the OLED model's form factor.

The reason I mention that additional cooling solutions could be required if there are RAM chips on the back of the motherboard is that when Steve Burke from Gamers Nexus measured the temperature of the RAM chips and the Tegra X1, he noticed that the temperature of the RAM chips is very similar to the temperature of the Tegra X1, which is in the range of 55°C - 60°C. And that temperature range is definitely possible if Nintendo plans on having the LPDDR5 chips run at a max I/O rate of 6400 MT/s.

Nvidia's only responsible for providing Nintendo the SoC and the API for the Nintendo Switch, the Nintendo Switch Lite, and the OLED model. Nintendo's fully responsible for choosing which chip is used for the LAN port for the OLED model.

And going by iFixit's picture of the OLED model's dock's motherboard above, Nintendo used the RTL8154B chip for the LAN port. And Realtek mentioned that the RTL8154B chip uses USB 2.0, which does suggest that the LAN port on the OLED model's dock is limited to USB 2.0's max data transfer speed of 480 Mbps.