
Future Nintendo Hardware & Technology Speculation & Discussion |ST| (Read the staff posts before commenting!)

- Thread starter Dakhil


Threadmarks


- Poll #3: When do you think is the launch window for Nintendo's new hardware?

- Announcement regarding links to news and rumours from 2022 and 2021

- Rough summary of the 2 August 2023 episode of Nate the Hate

- Rough summary of the 11 September 2023 episode of Nate the Hate

- Anatole's deep dive into a convolutional autoencoder

- Differences between T234 and T239

- Rough summary of the 19 October 2023 episode of Nate the Hate

- necrolipe's Twitter (X) post on why a 2025 launch doesn't automatically make T239 outdated based on one of the leaked slides from Microsoft

Staff Communication


- Regarding Hogwarts

- Staff Communication 05/26/23

- Staff Post about Citing Leaks and Rumors

- Staff Post about reading Hidden Content

- Staff Post - Please read

- Keeping on topic

- New Switch 2 ST Made - Nontechnical Talk Has A New Home

- Staff Post Regarding Dubious Insiders And Better Sourcing

Raccoon


FTC is suing

Did something recently kill the Arm merger?

It's not dead yet, but the FTC suit is a pretty big addition to the "reasons this is unlikely to go through" pile. This was always a pretty likely outcome, it's just taken a while to get to this point.

Did something recently kill the Arm merger?

ReddDreadtheLead


Orin only has 6MB of L3 Cache btw =P

I think we'll be fine, at least when compared to Xbone and base PS4. Nvidia architecture since Maxwell has been known to be really efficient with bandwidth; 64-bit LPDDR5 will put us at double the bandwidth of Switch's 25.6 GB/s (51.2 GB/s), and that should put us at Xbone level at least. But there's a very good chance we'll get 88-102 GB/s bandwidth if we get a 128-bit bus width, which I believe shouldn't be a problem when it comes head to head with PS4 games.

The absolute best-case scenario is 102 GB/s bandwidth with Orin NX's L2 and L3 (4-8 MB) cache, which will help tremendously to free up and speed up bandwidth... Not to mention DLSS.

and that’s for the CPU

GPU only has up to L2, but there is system cache which may function as the L3 for the gpu portion.

iirc
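The bandwidth figures above follow from data rate × bus width. A minimal sketch, assuming LPDDR4-3200 for the current Switch and LPDDR5 data rates of 5500-6400 MT/s for a hypothetical new device (the 5500 MT/s figure is an assumption picked to reproduce the 88 GB/s low end, not a leaked spec):

```python
def peak_bandwidth_gbs(data_rate_mts: float, bus_width_bits: int) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (1 GB = 10**9 bytes).

    data_rate_mts: transfers per second per pin, in MT/s.
    bus_width_bits: total width of the memory bus in bits.
    """
    bytes_per_transfer = bus_width_bits / 8
    return data_rate_mts * 1e6 * bytes_per_transfer / 1e9


# Hypothetical configurations; the data rates are assumptions, not leaked specs.
configs = {
    "Switch (LPDDR4-3200, 64-bit)": (3200, 64),
    "LPDDR5-6400, 64-bit": (6400, 64),
    "LPDDR5-5500, 128-bit": (5500, 128),
    "LPDDR5-6400, 128-bit": (6400, 128),
}

if __name__ == "__main__":
    for name, (rate, width) in configs.items():
        print(f"{name}: {peak_bandwidth_gbs(rate, width):.1f} GB/s")
```

This prints 25.6, 51.2, 88.0 and 102.4 GB/s. These are theoretical peaks; real-world effective bandwidth is lower, which is why the cache sizes discussed above matter.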

japtor


Yeah Nvidia's value to Nintendo is more the software and dev support side than anything else. Any of the other rumored or speculated partners have been non-starters in my eyes until anyone can show they can compete with the software and support side of things.

One of Swi

I've always felt like Nintendo benefits a lot more from working with Nvidia, as their GPU tech is scaled down from their desktop designs and benefits from Nvidia's drivers and breakthroughs like DLSS. I feel like it's what partly made Switch so powerful at launch in 2017, given the Tegra X1 was already in a retail product prior to its launch and was rather mediocre as an Android device without the benefit of the more dedicated NVN APIs Switch has access to.

Working with a purely mobile GPU company that doesn't have the software prowess of Nvidia, and having to build an API on their own, wouldn't have as much value to them. It's why I feel the Nvidia partnership is sticky for them.

Granted it's not like Imagination is some random upstart, they powered Apple's mobile devices forever up until recently (even then, might still be some Imagination based IP mixed in there based on their agreements). Course that's Apple running the software show there, only other notable uses I can think of were Dreamcast and Vita. There's been other mobile devices too but no clue which. They also tried the PC space ages ago, twice actually iirc, one with PC cards way back and later I think Intel used them for some IGP cores.

They could buy them as a hedge for if/when RISC-V becomes the next big thing, and still do ARM stuff. If you don't want Nvidia/SiFive there'll be Imagination and who knows how many other vendors making stuff. It'll be interesting if that ever happens, but god knows how long it'd take to see fruit from it as far as mainstream uses.

Ah, I see.

Nvidia buying SiFive is probably less of a threat to RISC-V as an ISA than buying ARM would be, since I think SiFive doesn't actually own the core IP and the license allows for fork threats, but I have some doubts Nvidia doing Nvidia things would be healthy for what is supposed to be a very open architecture.

At the very least, this would probably indicate a "going all in on RISC-V" scenario, as that is SiFive's focus. The chances of that trickling down to Nintendo in that scenario are high, but it is probably a manageable switch with a good enough emulator in place.

PedroNavajas


Apple nearly bankrupted PowerVR, though. The current company is a shell of its former self, after being drained almost dry by the tech giant...

Yeah Nvidia's value to Nintendo is more the software and dev support side than anything else. Any of the other rumored or speculated partners have been non-starters in my eyes until anyone can show they can compete with the software and support side of things.

Granted it's not like Imagination is some random upstart, they powered Apple's mobile devices forever up until recently (even then, might still be some Imagination based IP mixed in there based on their agreements). Course that's Apple running the software show there, only other notable uses I can think of were Dreamcast and Vita. There's been other mobile devices too but no clue which. They also tried the PC space ages ago, twice actually iirc, one with PC cards way back and later I think Intel used them for some IGP cores.

They could buy them as a hedge for if/when RISC-V becomes the next big thing, and still do ARM stuff. If you don't want Nvidia/SiFive there'll be Imagination and who knows how many other vendors making stuff. It'll be interesting if that ever happens, but god knows how long it'd take to see fruit from it as far as mainstream uses.

I don't know about the Apple A15's GPU since Apple hasn't updated the Metal Feature Set Tables since 21 October 2020, but the Apple A14's GPU does support PowerVR Texture Compression (PVRTC) pixel formats.

Granted it's not like Imagination is some random upstart, they powered Apple's mobile devices forever up until recently (even then, might still be some Imagination based IP mixed in there based on their agreements). Course that's Apple running the software show there, only other notable uses I can think of were Dreamcast and Vita. There's been other mobile devices too but no clue which. They also tried the PC space ages ago, twice actually iirc, one with PC cards way back and later I think Intel used them for some IGP cores.

And Imagination Technologies is trying the PC market again with the partnership with Innosilicon (here and here).

~

Jraphic Horse from ResetEra made a good point that Orin's GPU having 50% more L1 cache than GA102 isn't necessarily an indication that Orin's GPU is borrowing a hardware feature from Lovelace (having more cache), since GA100 also has 192 KB of L1 cache.

However, I do think that one hardware feature Orin's definitely borrowing from Lovelace is AV1 encoding support, since consumer Ampere GPUs only have AV1 decoding support. And since @ILikeFeet mentioned that Nvidia has made no mention of which generation the RT cores on Orin belong to in the Jetson AGX Orin Module Data Sheet, the RT cores on Orin could be based on the RT cores on Lovelace, especially since Nvidia mentioned that there's one RT core per two SMs in Orin.

~

And here are benchmark scores for the Snapdragon 8 Gen 1.


those were straight ARM CPUs licensed by nintendo with no modification. as for who fabbed them, probably tsmc. the 3DS's gpu (which is on a separate chip) is by Digital Media Professionals and was on 65nm. probably fabbed by TSMC

Who made the 3ds and ds soc

Feel free to ignore what I'm saying below if 'on a separate chip' is referring to separate chips inside of a SoC.

those were straight ARM CPUs licensed by nintendo with no modification. as for who fabbed them, probably tsmc. the 3DS's gpu (which is on a separate chip) is by Digital Media Professionals and was on 65nm. probably fabbed by TSMC

I believe the PICA200 GPU's not on a separate chip, but is part of the CPU CTR SoC and CPU CTR A/B SoCs in the Nintendo 3DS/Nintendo 2DS & Nintendo 3DS XL, and the CPU LGR A SoC in the New Nintendo 3DS/New Nintendo 3DS XL/New Nintendo 2DS XL.

~

Fun fact: the Nintendo 3DS was originally supposed to run on a Tegra SoC from Nvidia. In fact, there were mentions of Nintendo working with Nvidia on the Nintendo 3DS since 2006! But I guess whatever Tegra SoC the Nintendo 3DS was supposed to be running on (Tegra 2?) was consuming too much power and producing too much heat, which forced Nintendo to switch to the PICA200, at least for the GPU.

Based on the benchmark scores provided by Qualcomm, the Snapdragon 8 Gen 1's CPU performance seems to practically be the same as the Snapdragon 888's CPU performance, which seems to vindicate a rumour from FrontTron that Qualcomm's fabricating the Snapdragon 8 Gen 1 using Samsung's 4LPX process node, which is a custom variant of Samsung's 5LPP process node. And the Snapdragon 8 Gen 1's Adreno GPU seems to beat the Apple A14 Bionic's GPU, but be very close to the Apple A15 Bionic's GPU, in terms of GPU performance.

Either way, 8cx3 and G3x will be a good indication of what to expect from a 5nm chip intended for a gaming console. Moreover, the Razer dev platform has the particularity to use switch-like form factor as opposed to deck/aya/gpd that are targeting a 15W TDP in handheld mode.

Assuming the Snapdragon G3x Gen 1 is based on the Snapdragon 8 Gen 1, the Snapdragon 8 Gen 1 should give a rough impression of how the Snapdragon G3x Gen 1 would perform.

I don't expect the DLSS model*'s CPU to be close to the Snapdragon G3x Gen 1's CPU since the DLSS model*'s CPU frequency's probably going to be lower than the Snapdragon G3x Gen 1's CPU frequency.

However, I expect the DLSS model*'s GPU to be extremely competitive against the Snapdragon G3x Gen 1's GPU overall, with the DLSS model*'s GPU at least beating the Snapdragon G3x Gen 1's GPU in certain scenarios.

~


I think around May 2022 at the absolute latest.

When do we realistically expect to find out more about this device (leaks or otherwise) if it’s due out in September-November 2022?

After the new year. Probably feb or march

When do we realistically expect to find out more about this device (leaks or otherwise) if it’s due out in September-November 2022?

ShadowFox08


Tegra 2 had a tdp of 20 watts too. Must really gut it...

Feel free to ignore what I'm saying below if 'on a separate chip' is referring to separate chips inside of a SoC.

I believe the PICA200 GPU's not on a separate chip, but is part of the CPU CTR SoC and CPU CTR A/B SoCs in the Nintendo 3DS/Nintendo 2DS & Nintendo 3DS XL, and the CPU LGR A SoC in the New Nintendo 3DS/New Nintendo 3DS XL/New Nintendo 2DS XL.

~

Fun fact: the Nintendo 3DS was originally supposed to run on a Tegra SoC from Nvidia. In fact, there were mentions of Nintendo working with Nvidia on the Nintendo 3DS since 2006! But I guess whatever Tegra SoC the Nintendo 3DS was supposed to be running on (Tegra 2?) was consuming too much power and producing too much heat, which forced Nintendo to switch to the PICA200, at least for the GPU.

What are Tegra 2's GPU specs anyway? I just know it's 300-400 MHz, but that doesn't say much about GFLOPS performance. Guessing it would have been more than PICA?

NineTailSage


Tegra 2 is probably similar in spec to the Pica 200 @ 4.8-6.4Gflops, with the Pica GPU just better on power usage.

Tegra 2 had a tdp of 20 watts too. Must really gut it...

What are Tegra 2's GPU specs anyway? I just know it's 300-400 MHz, but that doesn't say much about GFLOPS performance. Guessing it would have been more than PICA?
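For context on where a 4.8-6.4 GFLOPS range comes from, peak shader throughput is usually estimated as ALUs × FLOPs per ALU per clock × clock speed. A rough sketch, assuming the commonly cited 8 shader ALUs (4 pixel + 4 vertex) for Tegra 2's GeForce ULP, each doing one multiply-add (counted as 2 FLOPs) per clock; these are theoretical peaks, not measured performance:

```python
def peak_gflops(alus: int, flops_per_alu_per_clock: int, clock_mhz: float) -> float:
    """Theoretical peak throughput in GFLOPS.

    A multiply-add (MAD) is counted as 2 floating-point operations.
    """
    return alus * flops_per_alu_per_clock * clock_mhz / 1000


# Assumption: Tegra 2's GeForce ULP has 8 shader ALUs (4 pixel + 4 vertex),
# each issuing one MAD per clock.
TEGRA2_ALUS = 8
MAD_FLOPS = 2

if __name__ == "__main__":
    for clock in (300, 400):
        print(f"{clock} MHz: {peak_gflops(TEGRA2_ALUS, MAD_FLOPS, clock):.1f} GFLOPS")
```

Under those assumptions, 300 MHz gives 4.8 GFLOPS and 400 MHz gives 6.4 GFLOPS. Note this says nothing about a fixed-function part like the PICA200, whose "GFLOPS" figure isn't directly comparable.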

ReddDreadtheLead


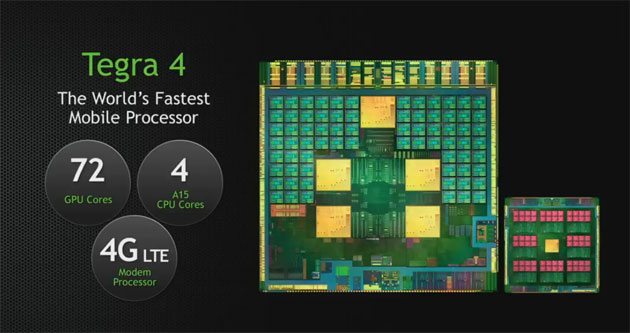

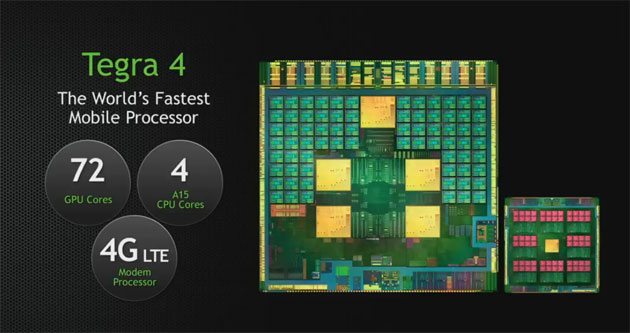

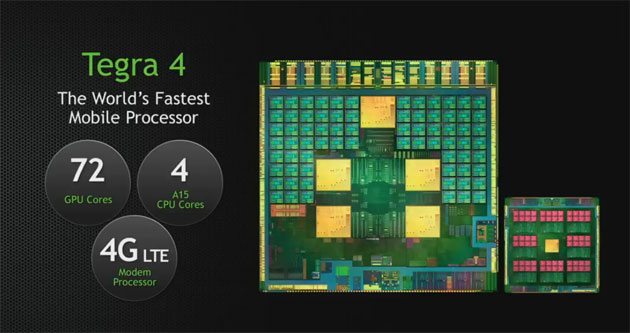

Yeah, at least according to this:

Tegra 2 is probably similar in spec to the Pica 200 @ 4.8-6.4Gflops, with the Pica GPU just better on power usage.

Nvidia Tegra 5 To Be More Powerful Than Xbox 360 and PlayStation 3 | eTeknix


www.eteknix.com

Nvidia’s Tegra 2 had 4.8 GFLOPS, Nvidia’s Tegra 3 had 12 GFLOPS and Nvidia’s current generation Tegra 4 has 80 GFLOPS. Current generation consoles, the PS3 and Xbox 360, both boast about 200 GFLOPS so Nvidia would have to more than double the next generation to achieve this. However, given Tegra 4 was just under 7 times more powerful than Tegra 3, seeing the 2.5 times rise in Tegra 5 over Tegra 4 doesn’t look that unrealistic at all. To put all these GFLOPS figures into context, Nvidia’s GTX Titan produces 4500 GFLOPS while the PlayStation 4 is expected to produce 1800 GFLOPS.

I also find the increase across the Tegra chips interesting. Tegra 8 or 9 (Orin) is also another large jump.

What could have been
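The generational jumps in the quoted article can be checked with simple ratios. A small sketch using only the GFLOPS figures the article gives (the ~200 GFLOPS PS3/360 number is the article's own approximation):

```python
# Peak GPU figures quoted in the eTeknix article (GFLOPS).
tegra_gflops = {"Tegra 2": 4.8, "Tegra 3": 12.0, "Tegra 4": 80.0}
PS3_X360_GFLOPS = 200.0  # "about 200 GFLOPS", per the same article


def generational_ratios(figures: dict[str, float]) -> list[tuple[str, float]]:
    """Ratio of each generation's GFLOPS to the previous one (dict order)."""
    names = list(figures)
    return [
        (f"{prev} -> {cur}", figures[cur] / figures[prev])
        for prev, cur in zip(names, names[1:])
    ]


if __name__ == "__main__":
    for step, ratio in generational_ratios(tegra_gflops):
        print(f"{step}: {ratio:.2f}x")
    # Uplift Tegra 5 would need over Tegra 4 to reach PS3/360 level.
    print(f"Needed for Tegra 5: {PS3_X360_GFLOPS / tegra_gflops['Tegra 4']:.2f}x")
```

This gives 2.50x for Tegra 2 to 3, 6.67x ("just under 7 times") for Tegra 3 to 4, and the 2.50x uplift the article says Tegra 5 would need.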

After 4, nvidia aligned their mobile tech with their desktop tech. Doing what AMD is doing now, actually

Yeah, at least according to this:

Nvidia Tegra 5 To Be More Powerful Than Xbox 360 and PlayStation 3 | eTeknix

www.eteknix.com

I also find the increase across the Tegra chips interesting. Tegra 8 or 9 (Orin) is also another large jump.

NineTailSage


Yeah, at least according to this:

Nvidia Tegra 5 To Be More Powerful Than Xbox 360 and PlayStation 3 | eTeknix

www.eteknix.com

I also find the increase across the Tegra chips interesting. Tegra 8 or 9 (Orin) is also another large jump.

Yeah Orin is a big jump, but the Tegra line has never been able to benefit from being manufactured on a cutting-edge process; every time, something more efficient has been available to the mobile market, and the Tegra line usually looks pretty power hungry in comparison.

We are definitely hitting that diminishing-returns wall where 8nm, 7nm, 5nm and 4nm are considered decent to great nodes for mobile hardware, and soon it will only get harder to improve upon what is possible.

PedroNavajas


Tegra 2 had programmable shaders and full support for OpenGL ES 2.0, so it's kinda hard to compare directly with a fixed-function GPU like the PICA200. I would say that the PICA200 had considerably higher performance for some effects than the Tegra 2. RE:R's per-pixel lighting was superior to even the PS3/360/Wii U version. It had significantly worse texturing than the PowerVR GPU in the Vita and iPhones at the time, though.

PedroNavajas


Nintendo could have been the indie darling sooner if Tegra 2 was up to snuff. imagine all the Unity games that could have been on the 3DS

hell, maybe a port of this tech demo!

And the full game only got an iOS release... It is ironic how Epic was one of the biggest promoters of iOS gaming early on.

Tim Sweeney got high on his own supply

And the full game only got an iOS release... It is ironic how Epic was one of the biggest promoters of iOS gaming early on.

in other news, I found out the Ryzen 4800U is around 180 mm². it got me thinking what Dane could be like if it was 150 mm² to 170 mm²

Dekuman


I don't think Dane needs to be the same size as the X1. The only limiting factor here is probably Nintendo. They may want a smaller chip to contain costs, but I think it's much easier to get the perf and feature set they need with a bigger chip. I hope Nvidia guides them to the right balance and doesn't purely concentrate on launch costs

Tim Sweeney got high on his own supply

in other news, I found out the Ryzen 4800U is around 180 mm². it got me thinking what Dane could be like if it was 150 mm² to 170 mm²

Alovon11


anyway, I do feel the idea that Nintendo would never consider selling at least one SKU at a minor loss at launch... well... isn't considering all the factors?

I don't think Dane needs to be the same size as the X1. The only limiting factor here is probably Nintendo. They may want a smaller chip to contain costs, but I think it's much easier to get the perf and feature set they need with a bigger chip. I hope Nvidia guides them to the right balance and doesn't purely concentrate on launch costs

The Switch brand is a massive one and has made Nintendo a lot of money, and I feel if they wanted to push the idea of an iterative successor full-on, they would need to hit a specific price range for one SKU, and they would likely be willing to take a minor loss on that SKU to get the idea across so they can recoup the costs later on.

Also, they would be making a return off higher-end SKUs if they go with a multi-SKU model, and they also sell old Switches, software, etc.

If Nintendo were to sell a system at a loss, it would be now, as they are in the best position to do so at the moment (also because the PS5 DE and the Series S exist, making it so they would sort of need to target the $300-400 price range anyway to stay competitive price-wise)

Anandtech mentioned that the GeForce ULP on the Tegra 2 runs at 4.8 GFLOPS at a frequency of 300 MHz.

What are Tegra 2's GPU specs anyway? I just know it's 300-400 MHz, but that doesn't say much about GFLOPS performance. Guessing it would have been more than PICA?

I couldn't find power consumption measurements for the GeForce ULP on the Tegra 2. But I was able to find power consumption measurements for the Cortex-A9 CPU on the Tegra 2 courtesy of the Colibri T20 Datasheet.

The Cortex-A9 CPU consumes 1.0956 W (3.3 V * 332 mA) [256 MB] and 1.3662 W (3.3 V * 414 mA) [512 MB] when idle; and the Cortex-A9 CPU consumes 2.6796 W (3.3 V * 812 mA) [256 MB] and 2.7126 W (3.3 V * 822 mA) [512 MB] when doing high load applications. And just to put that into context, based on the AC adapter used for the Nintendo 3DS family, the Nintendo 3DS family has a max TDP of 4.14 W (4.6 V * 900 mA).

The power consumption of the Cortex-A9 CPU on the Tegra 2 definitely seems way too high!
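The wattages above are just P = V × I. A minimal sketch reproducing them from the quoted Colibri T20 currents (3.3 V supply) and the 3DS AC adapter rating (4.6 V, 900 mA); keep in mind the adapter rating is an upper bound on what the charger can deliver, not a measured draw of the console:

```python
def power_w(volts: float, milliamps: float) -> float:
    """DC power in watts from voltage and current (P = V * I)."""
    return volts * milliamps / 1000


# Currents quoted above from the Colibri T20 datasheet (3.3 V supply).
colibri_t20_ma = {
    "idle, 256 MB": 332,
    "idle, 512 MB": 414,
    "high load, 256 MB": 812,
    "high load, 512 MB": 822,
}
# Nintendo 3DS AC adapter rating quoted above: 4.6 V, 900 mA.
ADAPTER_W = power_w(4.6, 900)

if __name__ == "__main__":
    for state, ma in colibri_t20_ma.items():
        watts = power_w(3.3, ma)
        print(f"{state}: {watts:.4f} W ({watts / ADAPTER_W:.0%} of the 3DS adapter rating)")
    print(f"3DS adapter: {ADAPTER_W:.2f} W")
```

The high-load figures land at roughly two thirds of the adapter's entire 4.14 W budget before counting the GPU, screens, or anything else.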

I think it depends on how Nintendo ultimately decides to price the DLSS model*. That said, I don't think it's impossible for Nintendo to sell the DLSS model* at a very small profit overall.

anyway, I do feel the idea that Nintendo would never consider selling at least one SKU at a minor loss at launch... well... isn't considering all the factors?

The Switch brand is a massive one and has made Nintendo a lot of money, and I feel if they wanted to push the idea of an iterative successor full-on, they would need to hit a specific price range for one SKU, and they would likely be willing to take a minor loss on that SKU to get the idea across so they can recoup the costs later on.

Also, they would be making a return off higher-end SKUs if they go with a multi-SKU model, and they also sell old Switches, software, etc.

If Nintendo were to sell a system at a loss, it would be now, as they are in the best position to do so at the moment (also because the PS5 DE and the Series S exist, making it so they would sort of need to target the $300-400 price range anyway to stay competitive price-wise)

Dekuman


Yeah, I think the $350 Switch OLED is a signal of where they are going price-wise with the Switch 2. Given this report notes the SWOLED allegedly only costs $10 more to manufacture per unit than the standard model, which I assume has had its cost reduced already after 4 years on the market, the only reason for the $450 pricing in my mind is to position a future full upgrade in that price range, if not higher.

anyway, I do feel the idea that Nintendo would never consider selling at least one SKU at a minor loss at launch... well... isn't considering all the factors?

The Switch brand is a massive one and has made Nintendo a lot of money, and I feel if they wanted to push the idea of an iterative successor full-on, they would need to hit a specific price range for one SKU, and they would likely be willing to take a minor loss on that SKU to get the idea across so they can recoup the costs later on.

Also, they would be making a return off higher-end SKUs if they go with a multi-SKU model, and they also sell old Switches, software, etc.

If Nintendo were to sell a system at a loss, it would be now, as they are in the best position to do so at the moment (also because the PS5 DE and the Series S exist, making it so they would sort of need to target the $300-400 price range anyway to stay competitive price-wise)

If they do cut back I could see a really barebones basic SKU with the same 64GB memory module at $350 and the more appealing model at $399 maybe with 128 or even 256 GB

NineTailSage


I don't think Dane needs to be the same size as the X1. The only limiting factor here is probably Nintendo. They may want a smaller chip to contain costs, but I think it's much easier to get the perf and feature set they need with a bigger chip. I hope Nvidia guides them to the right balance and doesn't purely concentrate on launch costs

Orin/Orin NX as a whole is pretty interesting in that it caps out at much lower clocks than the architecture is natively capable of on the desktop side of things. It will be great to know how this all affects thermal design and yields.

Yeah, I think the $350 Switch OLED is a signal of where they are going price-wise with the Switch 2. Given this report notes the SWOLED allegedly only costs $10 more to manufacture per unit than the standard model, which I assume has had its cost reduced already after 4 years on the market, the only reason for the $450 pricing in my mind is to position a future full upgrade in that price range, if not higher.

If they do cut back I could see a really barebones basic SKU with the same 64GB memory module at $350 and the more appealing model at $399 maybe with 128 or even 256 GB

The SwOLED is definitely a determining factor in where Nintendo will gauge future hardware pricing, but I have to say that in hindsight (having seen thorough breakdowns) there's no way the SwOLED only costs $10 more than the original Switch model. So seeing everything they changed, I'm fully leaning into them going for a $400 price, especially if the specs can clearly outclass both PS4/Xbox One on paper without trying hard. I think it will be an easier selling point for consumers to see games that look better than PS4 but on the go.


Out of curiosity, has there been any more news about teardowns of the OLED Dock since the revelation it has an HDMI 2.0 compatible port that's currently turned off? A quick cursory Google search shows that was the last bit of news about it since October, but I'm curious if anything else under the hood has been uncovered, either in the computer chip inside or in any recent firmware updates for the console or the dock itself.

no major update since. it's just an updated dock. probably because they can't get any non-2.0 compatible chips

Out of curiosity, has there been any more news about teardowns of the OLED Dock since the revelation it has an HDMI 2.0 compatible port that's currently turned off? A quick cursory Google search shows that was the last bit of news about it since October, but I'm curious if anything else under the hood has been uncovered, either in the computer chip inside or in any recent firmware updates for the console or the dock itself.


Damn, really wanted to believe it meant Netflix was around the corner and could stream 4K content to TVs with compatible docks

no major update since. it's just an updated dock. probably because they can't get any non-2.0 compatible chips

Guess I'll hold off on buying a new dock until there's some kind of official announcement, then

that ain't what Netflix is waiting for. or if they're even waiting at all

Damn, really wanted to believe it meant Netflix was around the corner and could stream 4K content to TVs with compatible docks

Guess I'll hold off on buying a new dock until there's some kind of official announcement, then


Yeah, I figured Netflix is waiting to have their palms greased and Nintendo ain't budging, but it still feels silly that it makes the Switch appear older than it is by lacking software most mobile devices come with installed by default

that ain't what Netflix is waiting for. or if they're even waiting at all

I feel like the fact that the max CPU and GPU frequencies of the Jetson AGX Orin and the Jetson Orin NX are practically the same as the max CPU and GPU frequencies of the Tegra X1 is a pretty strong indication that Nvidia's probably using Samsung's 8N process node for fabricating the Jetson AGX Orin and the Jetson Orin NX.

Orin/Orin NX as a whole is pretty interesting in that it caps out at much lower clocks than the architecture is natively capable of on the desktop side of things. It will be great to know how this all affects thermal design and yields.

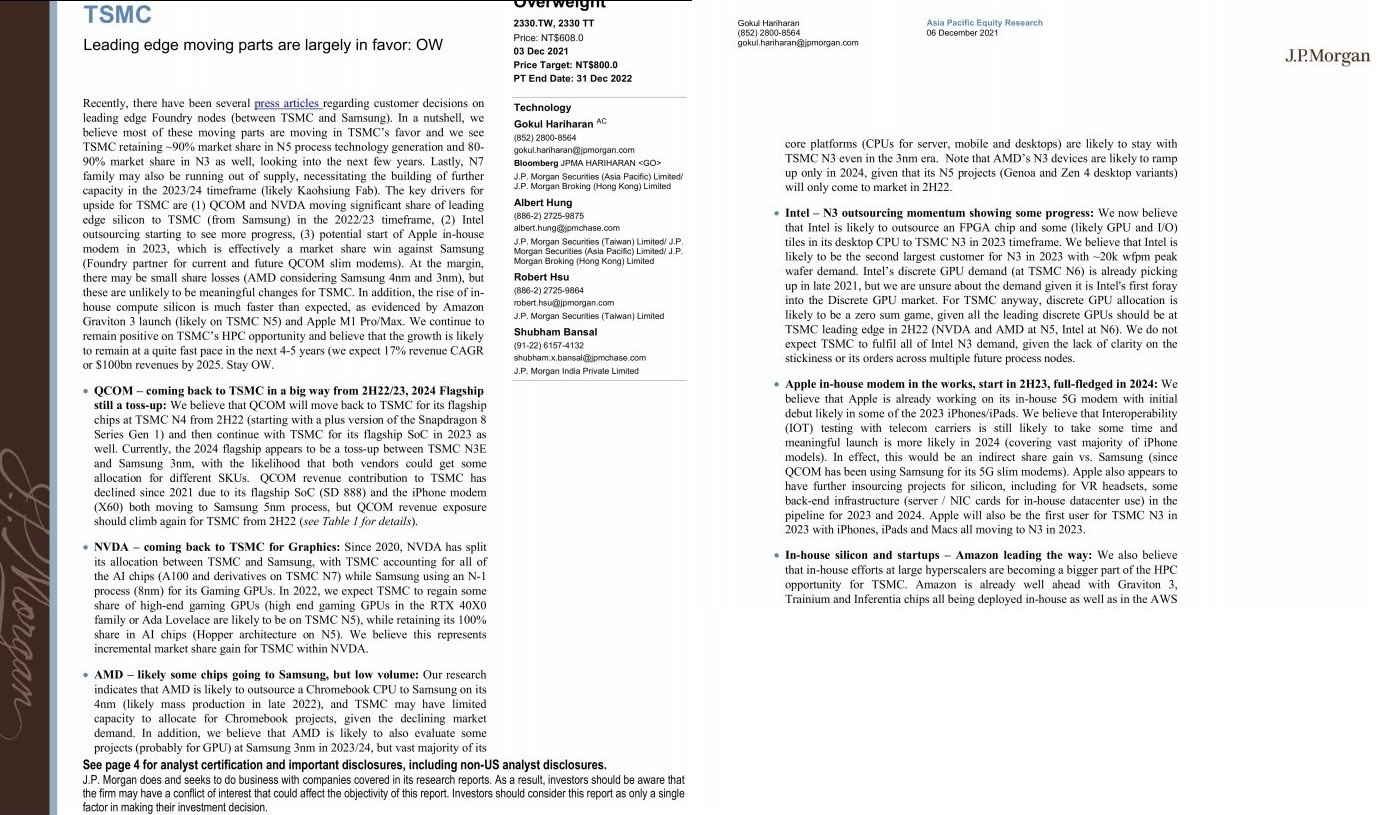

So JPMorgan released a research report discussing which leading-edge process nodes some companies are likely to use for fabricating chips to be used from 2022 to 2024.

I still think Nintendo and Nvidia are likely to use Samsung's 5LPP process node for the fabrication of the Dane refresh, assuming Nintendo plans on releasing the DLSS model* refresh in holiday 2024, assuming that Nintendo does release the DLSS model* in holiday 2022; and assuming Nvidia's planning on using Samsung's 5LPP process node for the fabrication of entry-level and/or mid-range Lovelace GPUs.

- Qualcomm's likely to use TSMC's N4 process node for the fabrication of the Snapdragon 8 Gen 1+, the 2022 flagship SoC, and the 2023 flagship SoC. Qualcomm's 2024 flagship SoC's a tossup between TSMC's N3E process node and Samsung's 3GAP process node for fabrication.

- Nvidia's likely to use TSMC's N5 process node for the fabrication of Hopper GPUs and high-end Lovelace GPUs.

- AMD's likely to continue using TSMC's N3 process node for server, desktop, and laptop CPUs. However, AMD's likely to use Samsung's 4 nm** process node for a Chromebook, as well as evaluating using Samsung's 3GAP process node for the fabrication of some projects, which is probably GPUs.

- Intel's likely to use TSMC's N3 process node for the fabrication of an FPGA chip and the GPU and I/O tiles for the desktop CPUs in 2023.

- Apple's likely going to be the first customer for TSMC's N3 process node, which is likely going to be used for iPhones, iPads, and Macs in 2023.


how can Nintendo even compete? they should just pull out of the hardware space completely!

also, really read the article, as it goes over some metrics

The Matrix Awakens is an unmissable next-gen showcase

After teasing us with a pre-load earlier in the week, The Matrix Awakens: An Unreal Engine 5 experience has finally unl…

this is very much a "fuck performance" demo and looks amazing because of it. especially the lighting. Lumen is a game changer here. Nanite is nice, but the lighting is the biggest deal to me and I hope Dane can do similar (sun/sky/emissives as sole lighting)

ShadowFox08


Nintendo could have been the indie darling sooner if Tegra 2 was up to snuff. imagine all the Unity games that could have been on the 3DS

hell, maybe a port of this tech demo!

honestly looks like a tech demo for a wii u game

Hopefully for Switch 2, they make two SKUs, with the difference being storage size. They can make up their money that way. 64 to 128GB would be pathetic for Switch 2 though. If they can't get up to 512GB-1TB, I'm just going to buy the lowest SKU and get a 1TB card. By the time Switch 2 comes out, 1TB cards will cost like $50 anyway.

anyway, I do feel the idea that Nintendo would never consider selling at least one SKU at a minor loss at launch... well... isn't considering all the factors?

The Switch brand is a massive one and has made Nintendo a lot of money, and I feel if they wanted to push the idea of an iterative successor full-on, they would need to hit a specific price range for one SKU, and they would likely be willing to take a minor loss on that SKU to get the idea across so they can recoup the costs later on.

Also, they would be making a return off higher-end SKUs if they go with a multi-SKU model, and they also sell old Switches, software, etc.

If Nintendo were to sell a system at a loss, it would be now, as they are in the best position to do so at the moment (also because the PS5 DE and the Series S exist, making it so they would sort of need to target the $300-400 price range anyway to stay competitive price-wise)

how can Nintendo even compete? they should just pull out of the hardware space completely!

also, really read the article, as it goes over some metrics

The Matrix Awakens is an unmissable next-gen showcase

After teasing us with a pre-load earlier in the week, The Matrix Awakens: An Unreal Engine 5 experience has finally unl…

www.eurogamer.net

this is very much a "fuck performance" demo and looks amazing because of it. especially the lighting. Lumen is a game changer here. Nanite is nice, but the lighting is the biggest deal to me and I hope Dane can do similar (sun/sky/emissives as sole lighting)

Some of the character models (particularly Neo and Trinity at the beginning in the city) look rough, but man, the actual city and lighting look amazing as hell. Imagine a portable experience on Switch 3 in 2030! 5 TFLOPS on a 1080p screen XD


SiG


Wow, I can't believe I'm back in NeoGAF...or beyond3d forums for that matter!how can Nintendo even compete? they should just pull out of the hardware space completely!

On a more serious note, I do wonder what would the minimum hardware requirements would be to get UE5 running on a small form factor. I still recall Tim Sweeny's "fish meets water" comment regarding UE4 support coming to the then announced Switch, which means it again is a matter of company politics (and a whole lot of money), which means Nintendo has to court the major players of all popular multiplatform gaming engines (again) to support the successor.

UE5 already runs, but I guess you are talking about Lumen and Nanite, which are current gen and pc only right now. Lumen is RT accelerated, but that's not available in the current public build, so we don't know the exact speed up. And I haven't seen anyone run the Valley of the Ancients demo on low end hardware unfortunatelyWow, I can't believe I'm back in NeoGAF...or beyond3d forums for that matter!


SiG

Chain Chomp

it's absolutely crazy how good night mode looks for the Matrix demo. ALL EMISSIVES

holy shit

Err, how exactly does this relate to Future Nintendo technology?

Do we have confirmation that Dane can run UE5/Lumen/Nanite as its baseline? I could see Nvidia touting it as "The first SoC to be fully compatible with Unreal Engine 5 with ray tracing and global illumination features enabled".

There's no official confirmation that Dane can run Unreal Engine 5 as the baseline. But I don't see why not, especially with Unreal Engine 4 being forward-compatible with Unreal Engine 5.


- Pronouns

- He/Him

Even Switch can run UE5; it's the fancier features within UE5 that are very much in question (like the ones shown off in that Matrix demo: Nanite, Lumen, Niagara, etc.).


SiG

Chain Chomp


That's what I was asking. I suppose it depends more on Nvidia making the SoC Nanite/Lumen/Niagara compliant, but we really don't have anything to go by in terms of a fancy graphical feature set, apart from suspecting DLSS and that it might feature RT cores in some capacity.

Right now, the theory is that mobile devices lack the power. I'm almost certain the PS4 and Xbox One could support Nanite, but support was left out due to their age. Hell, mobile probably could too.

Lumen is the big one, however, and I can see why that's cut from mobile. Now, whether it can run on last-gen machines? I think it can, but you'd be pretty limited in what you can do beyond that. Dane being in the same performance range, but with RT acceleration to assist, should help it. But we just don't completely know yet.

So the cloud version of Edge of Eternity is coming to Nintendo Switch on 23 February 2022.

Assuming that Edge of Eternity's coming to the DLSS model*, I'm curious to see how close the DLSS model* version gets to the PlayStation 5 and Xbox Series X|S versions. But what a shame that Unity rules out any possibility of implementing ray tracing in the legacy render pipeline used by Edge of Eternity.


SiG

Chain Chomp

I think it will all come down to Nvidia figuring out how to nullify the performance penalties incurred by RT without needing DLSS to compensate. That said, Tensor cores have been used primarily as denoisers, but it seems rather inefficient for the ray-tracing implementation to go through two stages in the rendering pipeline before being output.


If mobile RT is going to be a thing, it would have to consolidate the steps into one process while limiting power draw.

Please read this staff post before posting.

Furthermore, according to this follow-up post, all off-topic chat will be moderated.

