The illegal Nvidia leaks mention that Drake (GA10F) has 12 SMs, and Ampere has 128 CUDA cores per SM. So Drake has a total of 1536 CUDA cores.

I'm not aware of any info from the NVIDIA leak that explicitly shows that 1536 CUDA core count. What I saw was a line referencing the new chip name and a reference to an updated NVN version that supports DLSS.

StarTopic Future Nintendo Hardware & Technology Speculation & Discussion |ST| (Read the staff posts before commenting!)

- Thread starter Dakhil

ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

I think one of the Orin modules has 12GB, and it's two 6GB modules. GDDR memory is different, as that's fit for a different use case. LPDDR has come in larger sizes (but it's slower than GDDR).

I am aware of that already... I'm just saying that, as far as I'm aware, 6GB modules aren't as widely manufactured as modules in powers of 2 (2, 4, 8, 16...).

I know they are common in the GPU scene, as many NVIDIA GPUs have 6GB of on-board memory (VRAM), but iirc those are made up of 1 or 2GB modules that total 6GB.

Not a single 6GB module (and that's GDDR6 anyway, not LPDDR4 or LPDDR5, which is what Ninty will most likely use).

Now, 6GB phones are indeed common nowadays, but I believe they use multiple smaller LPDDR4/LPDDR5 modules (2+4)? Correct me if I'm wrong.

For your concern about using different batches of memory modules: I think it's actually more expensive to do so, because you're ordering two different capacities rather than ordering just one capacity and filling both spots with it.

Deleted member 887

Guest

I think “seriously” might be a bridge too far, but “treating an established community member whose story is consistent as if they are not lying by default” is about right.

Why are y'all taking Polygon's words so seriously?

There are a few reasons to believe they're not lying if you read their post history, but even if they aren't lying, I'm not assuming they are 100% reliable.

In other words: what else are we going to do? We can't just sit here.

6GB modules are common in phones. The current iPhone has one.

12GB of system RAM on a Nintendo handheld? Coming from just 4 on the previous gen, no less? By the way, as far as I know, memory manufacturers generally ship modules in powers of 2; doesn't anyone agree that it makes more sense for them to ship out two 4GB modules, essentially cutting costs, instead of shipping a 4GB + another 8GB module?

In fact, in 2017 the then-current iPhone had 2GB of RAM, also half the Switch's. So if you compare the rumored device to the current mobile landscape (from which non-SoC parts are going to be sourced, as it is a mobile device with similar power/heat/size demands), 12GB is about right. Many of us assumed 8, but 12 isn't out of the question.

I think that is very reasonable.

Also, what's been said so far by the user is very... vague. Anyone who's been following the latest info could say the same.

Just keep your expectations in check;

Less than 8 would be wild. Remember, this console will likely use emulation for backwards compat. JIT ain't cheap and loves memory.

I still think the new console could come with not only 8GB of RAM (or even less)

I get that you think the onus is on Polygon to justify their reliability, but the onus is similarly on you to justify doubting the NVN2 numbers.

but also < 1536 CUDA cores.

None of the Jetson Orin NX modules have 128-bit 12 GB LPDDR5 for RAM. The Jetson Orin NX modules have either 128-bit 8 GB LPDDR5 or 128-bit 16 GB LPDDR5.

I think one of the Orin modules has 12GB, and it's two 6GB modules. GDDR memory is different, as that's fit for a different use case. LPDDR has come in larger sizes (but it's slower than GDDR).

ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

I didn't read it right, but it can't be less than 8GB. I'm sorry. If the 128-bit number is accurate, then the memory has to line up with that.

There is no configuration that can give you less than 8GB of LPDDR5 memory.

The total memory this thing can have would be 8GB, 12GB and 16GB.

unless I missed something…
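The claim above can be checked by brute force. A minimal Python sketch, assuming a 128-bit bus is filled by two 64-bit packages and that those packages come in 4, 6 or 8 GB (the sizes mentioned in this thread, not a verified vendor list). `possible_totals` is a hypothetical helper for illustration only.

```python
from itertools import combinations_with_replacement

# Assumption: a 128-bit LPDDR5 bus is built from two 64-bit packages, and
# 64-bit packages come in 4, 6 and 8 GB (sizes from this thread, not a
# verified vendor catalogue).
BUS_WIDTH_BITS = 128
PACKAGE_WIDTH_BITS = 64
PACKAGE_SIZES_GB = [4, 6, 8]


def possible_totals(sizes, matched_only=True):
    """Return the sorted total capacities (GB) that fill the whole bus."""
    packages = BUS_WIDTH_BITS // PACKAGE_WIDTH_BITS  # 2 packages
    totals = set()
    for combo in combinations_with_replacement(sizes, packages):
        if matched_only and len(set(combo)) > 1:
            continue  # skip mixed capacities, e.g. 4 GB + 8 GB
        totals.add(sum(combo))
    return sorted(totals)


print(possible_totals(PACKAGE_SIZES_GB))                      # [8, 12, 16]
print(possible_totals(PACKAGE_SIZES_GB, matched_only=False))  # [8, 10, 12, 14, 16]
print(possible_totals([2] + PACKAGE_SIZES_GB))                # [4, 8, 12, 16]
```

Only adding 2 GB packages to the list, which were reported in this thread as sampling rather than in production, brings a sub-8GB total into play.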

Polygon, if it's not too much trouble, can you ask him whether the cowboy game has been canceled for Switch and Switch Drake, or is still happening?

Since rumors emerged that PS5 and Series X versions existed but have been cancelled, the possible Drake version is a bit up in the air.

Edit: I just read your message and I hope your situation improves in the future.

A big hug; you are an important member of the Famiboards community.

64-bit 16 Gb (2 GB) LPDDR5 modules technically do exist, but they are currently sampling rather than in production. (This is assuming Micron's LPDDR5 catalogue is up to date.)

I didn't read it right, but it can't be less than 8GB. I'm sorry. If the 128-bit number is accurate, then the memory has to line up with that.

There is no configuration that can give you less than 8GB of LPDDR5 memory.

The total memory this thing can have would be 8GB, 12GB and 16GB.

unless I missed something…

ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

Great, Nintendo will go with 4GB of memory. Something so historically unprecedented for them: zero RAM upgrade.

64-bit 16 Gb (2 GB) LPDDR5 modules technically do exist, but they are currently sampling rather than in production. (This is assuming Micron's LPDDR5 catalog is up to date.)

The show's over, folks. Drake is DEAD

robertman2

Chain Chomp

- Pronouns

- He/him

Have there been any rumored games beyond, like, BOTW2, Prime 4 and RDR2?

ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

Prime 4? When was that rumored?

Have there been any rumored games beyond, like, BOTW2, Prime 4 and RDR2?

Anatole

Octorok

- Pronouns

- He/Him/His

Others have already said that Ampere SMs have 128 CUDA cores, but I would direct you to this post to confirm that it is also true for Drake.

I'm not aware of any info from the NVIDIA leak that explicitly shows that 1536 CUDA core count. What I saw was a line referencing the new chip name and a reference to an updated NVN version that supports DLSS.

10k

Nintendo Switch RTX

- Pronouns

- He/Him

Damn, fatty liver.

Going out to the rodeo tonight for a few beers. This will be the last time for a while due to a health issue (don't worry, it's nothing serious). Is there anything more I can ask that a developer would know?

I'll have a look back if y'all have any questions. If not, have a great weekend everyone!

kvetcha

hoopy frood

- Pronouns

- he/him

I see what you're saying. I figure there are a bunch of potential levers Nintendo can pull to bring the design into equilibrium here: battery size, cooling, chassis size, clocks, battery life, node. Assuming chassis size, target temperatures and battery life remain broadly the same as the OLED's, node, clocks, and battery size are the most likely attributes left to adjust. How Nintendo will ultimately choose to prioritize is a mystery, but I certainly hope they'll extract as much power from the system as they can, knowing that more battery life will come in time.

When I said that node doesn't matter, I was referring to knowledge of the node not mattering if you have the other two. Let's assume it's as speculated and it runs at decently high clocks: will people actually conclude that this is 8nm? They'll conclude it's another node based on the information given to them about the product. They won't know if it's 7, 6, 5 or 4N. They'll just conclude it is not 8nm and has pretty good clock speeds.

And conversely, if it runs at these high clocks and we find out it is 8nm, would people even care? It's still operating at high numbers, thus giving you the high performance that people want, even at 8nm.

I appreciate you taking my responses in stride - it's nice to have a discussion that's not inherently confrontational.

10k

Nintendo Switch RTX

- Pronouns

- He/Him

It would be two 6GB modules, like many cell phones use.

Why are y'all taking Polygon's words so seriously? 12GB of system RAM on a Nintendo handheld? Coming from just 4 on the previous gen, no less?

By the way, as far as I know, memory manufacturers generally ship modules in powers of 2; doesn't anyone agree that it makes more sense for them to ship out two 4GB modules, essentially cutting costs, instead of shipping a 4GB + another 8GB module?

Also, what's been said so far by the user is very... vague. Anyone who's been following the latest info could say the same.

Just keep your expectations in check; I still think the new console could come with not only 8GB of RAM (or even less) but also < 1536 CUDA cores.

Concernt

Optimism is non-negotiable

- Pronouns

- She/Her

Nah, I'd say 720p internal render in TV mode for 9th gen games, with a 4K output (using either DLSS Ultra Performance mode or DLSS Performance mode + spatial upscaling).

You are right to make that assumption. 4K 30-60fps for Switch games that already run natively at 1080p, and 1080p 30-60fps for native Drake/PS4-quality games (2K with DLSS), sounds like something reasonable to expect.

Ports from PS5/XSS? 720p handheld with DLSS and 1080p docked with DLSS is my guess.

daase ko

Octorok

Is the porting of their game going smoothly?

Going out to the rodeo tonight for a few beers. This will be the last time for a while due to a health issue (don't worry, it's nothing serious). Is there anything more I can ask that a developer would know?

I'll have a look back if y'all have any questions. If not, have a great weekend everyone!

How will it compare to other versions?

Genshin Impact and Star Wars Hunters.

Have there been any rumored games beyond, like, BOTW2, Prime 4 and RDR2?

- Pronouns

- She/They

Roughly how easy is it to develop for Drake, and how accommodating has Nintendo been? Did the Drake version of RDR2 become a victim of the cancellations a while back?

Going out to the rodeo tonight for a few beers. This will be the last time for a while due to a health issue (don't worry, it's nothing serious). Is there anything more I can ask that a developer would know?

I'll have a look back if y'all have any questions. If not, have a great weekend everyone!

EDIT: Saw your personal message while looking back in the thread. I'm very sorry to hear that.

I agree, but I don't necessarily think the past is indicative of the future. PS typically increases by 16x; this time it was a mere 2x. The slowing decline in memory prices is kind of one of the reasons Sony and MS went so hard on fast storage.

Nintendo typically increases RAM by several times (like 2-4x) for most upgraded devices.

Simba1

Bob-omb

Thinking about it, if I had a say, I would push for 16GB of RAM instead of 12GB. Although 12GB would be fine, 16GB would give the system significantly more breathing room, wouldn't increase power consumption much, would score easy points with marketing (as much RAM as PS5/XSX!) and would make the device harder to emulate, for not much extra cost.

With 12GB you are stuck with the "well, sure, RAM is lower, but..." argument we all know from tech discussions. I think it would be in Nintendo's best interest to have a device that is unambiguously stronger than the Steam Deck.

More is always better (even if it raises costs), but I would say that even 12GB of RAM would be more than enough.

- Pronouns

- He/Him

Yeah, absolutely. I didn't think 12GB was a given either, but RAM is not something they've historically skimped on, even if the increases will obviously have to get smaller as we go on.

I agree, but I don't necessarily think the past is indicative of the future. PS typically increases by 16x; this time it was a mere 2x. The slowing decline in memory prices is kind of one of the reasons Sony and MS went so hard on fast storage.

SiG

Chain Chomp

I don't know if this is incredibly bad sarcasm or an actual troll post.

Great, Nintendo will go with 4GB of memory. Something so historically unprecedented for them: zero RAM upgrade.

The show's over, folks. Drake is DEAD

This thread needs to be reined in.

Edit: So far, the rumors have precedent and seem to line up. I would still treat them as rumors, but there's no need for incredible cynicism and people doing NintenDOOMED posting. I've had enough of those posts on the previous forum, and I'd rather not relive it.

Knowing ReddDreadtheLead, probably the former.

I don't know if this is incredibly bad sarcasm or an actual troll post.

This thread needs to be reined in.

- Pronouns

- He/Him

It's pretty clearly sarcasm, I thought.

I don't know if this is incredibly bad sarcasm or an actual troll post.

This thread needs to be reined in.

Edit: So far, the rumors have precedent and seem to line up. I would still treat them as rumors, but there's no need for incredible cynicism and people doing NintenDOOMED posting. I've had enough of those posts on the previous forum, and I'd rather not relive it.

ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

I don't know if this is incredibly bad sarcasm or an actual troll post.

Dude, it’s a joke, lighten up.

It is, because of course they won't go for 4GB.

It's pretty clearly sarcasm, I thought.

ShadowFox08

Paratroopa

I didn't mean 1080p native. 720p native or close to it, and then upscaled with DLSS to 1080p in docked mode, or whatever gives the best balance of performance and IQ.

Nah, I'd say 720p internal render in TV mode for 9th gen games, with a 4K output (using either DLSS Ultra Performance mode or DLSS Performance mode + spatial upscaling).

I agree, but I don't necessarily think the past is indicative of the future. PS typically increases by 16x; this time it was a mere 2x. The slowing decline in memory prices is kind of one of the reasons Sony and MS went so hard on fast storage.

Over a year ago I was really adamant that Drake would not get 12 GB of RAM. I thought it would be a bit overkill, especially if they still ended up allocating 0.5 to 2 GB for the OS and still had a lot more for games than the PS4. But it seems more plausible now that we could get it, given the latest phones and portable gaming PCs (which have 16GB).

But of course, Nintendo doesn't usually skimp on RAM when matching last-gen consoles. It would benefit them and consumers if they spent 2-3 GB on the OS and media playback, with the rest for games and native 1440p to 4K resolution (on par with or surpassing PS4 Pro performance, though DLSS would be needed to ease that). 8-10 GB of RAM for games sounds wild when you think about it, though, and 12GB is proportionally more, relative to the PS5/Series S, than the Switch's RAM was relative to the base Xbone/PS4. It's crazy.
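The proportional comparison in the last paragraph, and the "16x then 2x" PlayStation jump quoted above, can be checked in a few lines. A Python sketch; the console figures are the commonly cited total RAM amounts, and the 12 GB Drake entry is the rumour under discussion, not a confirmed spec.

```python
# Total RAM in GB. Retail consoles use their commonly cited totals; the
# Drake entry is the rumored 12 GB being debated, not a confirmed figure.
RAM_GB = {
    "PS3": 0.5,
    "PS4": 8,
    "Xbox One": 8,
    "Switch": 4,
    "PS5": 16,
    "Xbox Series S": 10,
    "Drake (rumored)": 12,
}


def ratio(device, reference):
    """How much RAM `device` has relative to `reference`."""
    return RAM_GB[device] / RAM_GB[reference]


print(ratio("PS4", "PS3"))                         # 16.0
print(ratio("PS5", "PS4"))                         # 2.0
print(ratio("Switch", "PS4"))                      # 0.5
print(ratio("Drake (rumored)", "PS5"))             # 0.75
print(ratio("Drake (rumored)", "Xbox Series S"))   # 1.2
```

So the Switch launched with half of a base PS4's RAM, while a 12 GB Drake would sit at three quarters of a PS5 and above a Series S.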

@Dakhil Do 64-bit 6GB modules with max clock speeds exist on the market right now?

Concernt

Optimism is non-negotiable

- Pronouns

- She/Her

I didn't think you meant 1080p native, but I think 720p DLSS-upscaled to 1080p is lowballing it; that is to say, I think you might be underestimating DLSS. A target resolution of 1080p would only need an internal resolution of 360-540p, which would drastically lower the processing load, but I doubt they'll go with that unless they absolutely have to. If they can get it to render at 720p internally, they'll try to push for a 4K output. 720p-to-4K DLSS upscaling gives better image quality than 720p-to-1080p, but the question, as you said, is whether DLSS hurts performance enough for that to be a concern. My thoughts are it probably won't, but I suppose we'll see eventually.

I didn't mean 1080p native. 720p native or close to it, and then upscaled with DLSS to 1080p in docked mode, or whatever gives the best balance of performance and IQ.
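The resolutions in this exchange map onto DLSS's per-axis render scales. A Python sketch, assuming the DLSS 2 presets as commonly documented for PC (Quality 2/3, Performance 1/2, Ultra Performance 1/3 per axis); whether Drake exposes the same presets is an assumption, and `internal_resolution` is a hypothetical helper.

```python
from fractions import Fraction

# Per-axis render scale of the DLSS 2 presets as commonly documented for PC.
# Assumption: Drake would use the same presets.
DLSS_SCALE = {
    "Quality": Fraction(2, 3),
    "Performance": Fraction(1, 2),
    "Ultra Performance": Fraction(1, 3),
}


def internal_resolution(out_w, out_h, mode):
    """Internal render resolution DLSS upscales from for a given output."""
    scale = DLSS_SCALE[mode]
    return round(out_w * scale), round(out_h * scale)


for mode in DLSS_SCALE:
    print(f"{mode:>17}: 1080p from {internal_resolution(1920, 1080, mode)}, "
          f"4K from {internal_resolution(3840, 2160, mode)}")
```

Read this way, a 720p internal render is Quality mode for a 1080p output but Ultra Performance for a 4K output, and the 360-540p range for a 1080p target spans Ultra Performance to Performance.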

One of the Micron 64-bit 6 GB LPDDR5 modules I've linked here does have a max I/O rate of 6400 MT/s, which is the max I/O rate of LPDDR5. So yes, assuming Micron's LPDDR5 catalogue is up to date.

@Dakhil Do 64-bit 6GB modules with max clock speeds exist on the market right now?
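For a sense of what 6400 MT/s means, theoretical peak bandwidth is just bytes per transfer times transfer rate. A Python sketch; the 128-bit bus is the figure discussed in this thread, and real sustained bandwidth will be lower than these peaks.

```python
def peak_bandwidth_gb_s(bus_width_bits, transfer_rate_mt_s):
    """Theoretical peak bandwidth in GB/s: bytes per transfer x transfers/s."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfer_rate_mt_s / 1000  # MB/s -> GB/s


print(peak_bandwidth_gb_s(64, 6400))   # one 64-bit LPDDR5 package: 51.2
print(peak_bandwidth_gb_s(128, 6400))  # two packages on a 128-bit bus: 102.4
print(peak_bandwidth_gb_s(64, 3200))   # original Switch, 64-bit LPDDR4: 25.6
```

Two maxed-out packages on a 128-bit bus would give four times the original Switch's peak bandwidth.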

ShadowFox08

Paratroopa

I dunno, man. This has been mentioned before, but we don't know how powerful DLSS is on Drake. We can't say it's comparable to PC Ampere cards, especially when the GPU is going to be 3 TFLOPS max in the best-case scenario. 720p to 1440p with DLSS may be a possibility, but 1080p native to 4K from DLSS alone? I dunno... It will need a lot of power to keep up with los screen refresh especially.

I didn't think you meant 1080p native, but I think 720p DLSS-upscaled to 1080p is lowballing it; that is to say, I think you might be underestimating DLSS. A target resolution of 1080p would only need an internal resolution of 360-540p, which would drastically lower the processing load, but I doubt they'll go with that unless they absolutely have to. If they can get it to render at 720p internally, they'll try to push for a 4K output. 720p-to-4K DLSS upscaling gives better image quality than 720p-to-1080p, but the question, as you said, is whether DLSS hurts performance enough for that to be a concern. My thoughts are it probably won't, but I suppose we'll see eventually.

I agree that 720p to 1080p is lowballing. Maybe 900p is more realistic, but I think 1080p (or 2K) will be very common for current-gen third-party ports on Drake, assuming said games aren't CPU-heavy. It's not just the resolution upscale; having an acceptable framerate and retaining IQ outside of resolution is an important balance to strike as well. And DLSS isn't entirely free.

Assuming 60fps isn't possible on Drake, if a 2K-4K 30-60fps game on PS5 runs at 720p-900p 15-30fps on Drake, I would gladly take a stable 1080p 30fps image with high settings with DLSS. It's hard to fathom right now since Drake isn't released yet and we don't actually have any ports to compare. And then there's the potential widespread implementation of FSR 2.0 on current-gen AMD consoles to factor in as well.

MP!

Like Like

This is lowballing it imo.

You are right to make that assumption. 4K 30-60fps for Switch games that already run natively at 1080p, and 1080p 30-60fps for native Drake/PS4-quality games (2K with DLSS), sounds like something reasonable to expect.

Ports from PS5/XSS? 720p handheld with DLSS and 1080p docked with DLSS is my guess.

Maybe for demanding games.

I wish we knew the CPU specs; that would help.

Yeah, I suppose this is right; we really don't know how it's going to perform.

I dunno, man. This has been mentioned before, but we don't know how powerful DLSS is on Drake. We can't say it's comparable to PC Ampere cards, especially when the GPU is going to be 3 TFLOPS max in the best-case scenario. 720p to 1440p with DLSS may be a possibility, but 1080p native to 4K from DLSS alone? I dunno... It will need a lot of power to keep up with los screen refresh especially.

This would mean base hardware could only run games at 540p… which seems low

SiG

Chain Chomp

Sorry, I know there are people on the internet who genuinely believe they will go for 4GB.

Dude, it's a joke, lighten up.

It is, because of course they won’t go for 4GB.

Deleted member 887

Guest

I have a sick day and I have to look at Nvidia docs for a work project. TL;DR: do we have any reason to believe that Nintendo will go with an 8-core CPU config other than "that would be nice"?

I ask because the NVN2 hack has obviously made Drake seem beefier than expected, and a lot of people started assuming that Samsung 8nm was no longer on the table. This isn't my area of expertise, but while I had the docs up I started to see how far you could pare Orin AGX down to get Drake.

Looking at the existing perf profiles for AGX and NX, you can actually make a pretty good estimate of the TDP cost of the DLA: about 2.5W per core (running at 614MHz/207MHz for the Falcon). Cutting the two DLA cores from the 15W profile seems to give us a "half Drake": 4 cores instead of 8 and 3 TPCs instead of 6, with clocks in the rough ballpark of the current Switch, at a TDP in the ballpark of handheld Erista.

The CPUs are AEs, of course, so we can actually get an 8-core Drake config without increasing the power draw from what's listed. The issue is the TPCs: there just doesn't seem to be enough power for 6 more of them. The GPU clock speeds in this config are not far above the OG Switch's, so there isn't much room to clock them lower.

The PVA has a wattage cost, but it is itself a customized Cortex-A processor running at lowish clock speeds, so I don't think it's huge. And the CPU clocks in this config are even closer to the OG Switch's than the GPU clocks are, so the only other lever I can see is the number of CPU cores itself. Cut them down to 4 and you get significant power savings over the 8 cores present here. Possibly enough, combined with cutting the PVA, to get us to 12 SMs?
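The power argument so far is simple budget arithmetic. A Python sketch using this post's own rough estimates; none of these wattages are NVIDIA-published figures, and `CPU_W_PER_CORE` is a hypothetical value standing in for the "about a watt apiece" guess the post asks readers to sanity-check.

```python
# Back-of-envelope power budget using this post's own estimates.
# None of these are NVIDIA-published figures.
ORIN_PROFILE_W = 15.0   # starting power profile
DLA_CORES = 2
DLA_W_PER_CORE = 2.5    # estimated DLA cost per core
CPU_CORES_BEFORE = 8
CPU_CORES_AFTER = 4
CPU_W_PER_CORE = 1.0    # hypothetical per-core A78 cost at these clocks

half_drake_w = ORIN_PROFILE_W - DLA_CORES * DLA_W_PER_CORE
freed_by_cpu_cut_w = (CPU_CORES_BEFORE - CPU_CORES_AFTER) * CPU_W_PER_CORE

print(f"'half Drake' after dropping the DLA: ~{half_drake_w:.1f} W")
print(f"freed by cutting {CPU_CORES_BEFORE} CPU cores to {CPU_CORES_AFTER}: "
      f"~{freed_by_cpu_cut_w:.1f} W (plus whatever the PVA costs)")
```

The sketch stops there because the post gives no per-TPC power figure; whether roughly 4 W plus the PVA savings pays for the extra TPCs is exactly the open question.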

This suggests a strategy for the device: improve GPU perf through a huge win in parallelism with only tiny clock improvements, but improve CPU perf in the opposite direction, retaining the same number of CPU cores while bumping up the clocks by a significant margin. That leaves us with a config something like this:

CPU: 4 Core Cortex A78 @ 1.7 GHz

GPU: 12 SM custom Ampere @ 420 MHz/1GHz

RAM: 12GB LPDDR5

(CPU speed pulled from the Orin config as something that seemed like a comfortable place on the power curve to bump to; docked GPU speed maintains the existing handheld/docked ratio.)

This... is a very Nintendo machine. Compared to the PS4/Xbone, it beats them handily on RAM, barely outperforms their GPUs at the margins, and skimps on the CPU. That's exactly how you would describe the Switch relative to the PS3/360, or the Wii relative to the OG Xbox. I think lots of folks would look at those handheld GPU clocks and scream "because Nintendo!"

On the other hand, the GPU performance is actually about where we expect it to be. 420MHz clocks on Drake's giant 12-SM design give you 1.3 TFLOPS, right at the Xbone's level, but running on Ampere's more modern architecture and feature set. This neatly aligns with the "Last Gen/PS4 in Handheld Mode + DLSS" expectation that Nate and others have mentioned.
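The 1.3 TFLOPS figure follows from the usual FP32 peak formula: CUDA cores × 2 FLOPs per clock (one fused multiply-add) × clock. A Python sketch using the 12 SM × 128 cores-per-SM count discussed earlier in the thread and the hypothetical 420 MHz / 1 GHz clocks above; `fp32_tflops` is an illustrative helper, and real-world throughput is lower than this theoretical peak.

```python
# FP32 peak: CUDA cores x 2 FLOPs per clock (one FMA) x clock.
# 12 SMs x 128 cores per Ampere SM is the count discussed in this thread;
# the 420 MHz handheld / 1 GHz docked clocks are the hypothetical ones above.
SMS = 12
CORES_PER_SM = 128


def fp32_tflops(sms, clock_mhz, cores_per_sm=CORES_PER_SM):
    """Theoretical FP32 TFLOPS for a GPU with `sms` SMs at `clock_mhz`."""
    cores = sms * cores_per_sm
    return cores * 2 * clock_mhz * 1e6 / 1e12


print(SMS * CORES_PER_SM)                # 1536
print(round(fp32_tflops(SMS, 420), 2))   # handheld: 1.29
print(round(fp32_tflops(SMS, 1000), 2))  # docked: 3.07
```

For scale, `fp32_tflops(2, 768)` gives about 0.39 TFLOPS, which is the original Switch's 256-core Maxwell GPU at its 768 MHz docked clock.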

Are these estimates out of hand to anyone smarter than me? Does a quad-core A78 seem reasonable, and do those cores run at about a watt apiece at these clocks? If so, this seems to line up with everything we know and lots of things we expect. It doesn't require a die shrink or getting Ampere to run on a totally new process, it keeps battery life right in line with the first Switch release, and it squares the circle of "because Nintendo" and "new Nintendo" pretty nicely.

It also puts Nintendo in a nice place for a Next NEXT Switch, especially if the 4 CPUs are achieved via hardware errata, down from 8: Nintendo can take the die shrink/process change that some have assumed has already happened and ride it to the next phase of Switch evolution, rather than rebuilding on Nvidia Next and re-re-solving the BC problem.

On the other hand, if a whole ARM Cortex-A cluster is simply so efficient that it can't account for ~4W, then I think I'll have to concede that the 8nm process is dead, which opens up a lot of power options in terms of clocks and number of CPU cores.

- Pronouns

- He/Him

I'd be curious to hear if there's a data access rate target in dev kits. Basically, if there's an indication of how fast game data is read from internal or external media in the hardware, we can take a guess at what kind of storage solutions they're planning to use and whether they've been able to improve Game Card speeds.

Going out to the rodeo tonight for a few beers. This will be the last time for a while due to a health issue (don't worry, it's nothing serious). Is there anything more I can ask that a developer would know?

I'll have a look back if y'all have any questions. If not, have a great weekend everyone!

- Pronouns

- He/Him

Here's a weird question that I could probably answer myself if I dug for it, but I thought I might as well ask. What does the codename Drake reference? I recall reading that NVIDIA codenames all refer to comic book characters; does that hold true here?

Tim Drake - Wikipedia

Raccoon

Fox Brigade

- Pronouns

- He/Him

- Pronouns

- He/Him

This is how most of my interpersonal interactions end

well that's lame

thank you though

SiG

Chain Chomp

How many Robins are there?

Tim Drake - Wikipedia

ReddDreadtheLead

#TeamLate2025WithAPotentialForEarly2026

- Pronouns

- He/Him

Here's a weird question that I could probably answer myself if I dug for it, but I thought I might as well ask. What does the codename Drake reference? I recall reading that NVIDIA codenames all refer to comic book characters; does that hold true here?

Robin (Teen Titans)

“That's what friends do.” (Robin to Raven)

Robin (real name Richard John "Dick" Grayson, also known as Nightwing in an alternate future) is the main protagonist of the Teen Titans franchise and one of the main characters in Teen Titans Go! vs Teen Titans, released at least thirteen years after the...

But I'm stretching it here; I just chose a recognizable Robin.

Nvidia's naming scheme for Tegra SoCs is based on superheroes.

One of the Tegras was called Wayne, after Bruce Wayne. Another was Xavier. Others are Mariko and Erista (the TX1), and you also have Orin, which is Aquaman.

These are all DC Comics superheroes. I think.

If they believe it, then just let them; they'll be proven wrong anyway.

Sorry, I know there are people on the internet who genuinely believe they will go for 4GB.

There's legitimately a larger number of people who think their next system will be at XB1 performance when docked. But you can't correct everyone on the internet or expect them all to listen. It's just wasting time.

This setup would be severely lopsided, I fear.

CPU: 4 Core Cortex A78 @ 1.7 GHz

GPU: 12 SM custom Ampere @ 420 MHz/1GHz

RAM: 12GB LPDDR5

You'd only have 3 for games, and one of the dev complaints was the lack of cores, along with requests for more CPU cores.

(On top of more memory, but that’s always a given)

robertman2

Chain Chomp

- Pronouns

- He/him

How many Robins are there?

5

One of the Tegras was called Wayne, after Bruce Wayne. Another was Xavier. Another is Mariko and Erista (the TX1) and you also have Orin, which is Aquaman.

These are all DC Comics superheroes.

Logan, Parker, Xavier, Erista, and Mariko are all Marvel (and X-Men related, save for Spider-Man).

does quad core A78 seem reasonable

No. You'd be running as limited as the PS4/XBO, and in a world where the competition is running unfettered Zen 2s, you can almost absolutely rule out ports. Even 6 cores could be stretching it, but at least you can clock higher.

ShadowFox08

Paratroopa

I have a sick day and I have to look at Nvidia docs for a work project. TL;DR: do we have any reason to believe that Nintendo will go with an 8-core CPU config other than "that would be nice"?

I ask because the NVN2 hack has obviously made Drake seem beefier than expected, and a lot of people started assuming that Samsung 8nm was no longer on the table. This isn't my area of expertise, but while I had the docs up I started to see how far you could pare Orin AGX down to get Drake.

Looking at the existing perf profiles for AGX and NX, you can actually make a pretty good estimate of the TDP cost of the DLA: about 2.5 W per core (running at 614 MHz/207 MHz for the Falcon). Cutting the two DLA cores from the 15 W profile seems to give us a "half Drake" (4 CPU cores instead of 8, and 3 TPCs instead of 6), with clocks in the rough ballpark of the current Switch, at a TDP in the ballpark of handheld Erista.
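The DLA subtraction above is simple arithmetic. A minimal sketch, using only the post's own estimates (2.5 W per DLA core, two DLA cores, the 15 W Orin profile); the helper name is hypothetical and the figures are forum estimates, not Nvidia specs:

```python
# Hypothetical helper; all figures are the post's estimates, not official Nvidia specs.
ORIN_15W_PROFILE_W = 15.0  # the Orin 15 W power profile referenced in the post
DLA_W_PER_CORE = 2.5       # estimated DLA draw per core (614 MHz / 207 MHz Falcon)
DLA_CORES = 2              # Orin carries two DLA cores


def profile_without_dla(profile_w: float, dla_cores: int, w_per_core: float) -> float:
    """Subtract the estimated DLA draw from a module power profile."""
    return profile_w - dla_cores * w_per_core


half_drake_w = profile_without_dla(ORIN_15W_PROFILE_W, DLA_CORES, DLA_W_PER_CORE)
print(f"'Half Drake' (4 CPU cores, 3 TPCs) estimate: ~{half_drake_w:.1f} W")
```

That leaves roughly 10 W, which is the "ballpark of handheld Erista" comparison the post is drawing.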

The CPUs are A78AEs, of course, so we can actually get an 8-core Drake config without increasing the power draw from what's listed. The issue is the TPCs: there just doesn't seem to be enough power for the 3 extra TPCs (6 more SMs). The GPU clock speeds in this config are not far above the OG Switch, so there isn't much room to clock them lower.

The PVA has a power cost, but it is itself a customized Cortex-A processor running at lowish clock speeds. I don't think it's huge. And the CPU clocks in this config are even closer to the OG Switch than the GPU clocks, so the only other place to move that I can see is the number of CPU cores themselves. Cut them down to 4 and you get significant power savings over the 8 cores present here. Possibly enough, combined with cutting the PVA, to get us to 12 SMs?

This suggests a strategy for the device: improve GPU perf with tiny clock improvements but a huge win in parallelism, and improve CPU perf in the opposite direction by retaining the same number of CPU cores but bumping up the clocks by a significant margin. This leaves us with a config something like this:

CPU: 4 Core Cortex A78 @ 1.7 GHz

GPU: 12 SM custom Ampere @ 420 MHz/1GHz

RAM: 12GB LPDDR5

(CPU speed pulled from the Orin config as a comfortable place on the power curve to bump to; docked GPU speed roughly maintains the existing handheld/docked ratio)

This... is a very Nintendo machine. Compared to the PS4/Xbone it beats them handily on RAM, barely outperforms the GPUs at the margins, and skimps on the CPU. Which is exactly how you would describe the Switch relative to the PS3/360, or the Wii to the OG Xbox. I think lots of folks would look at those GPU clocks in handheld and scream "because Nintendo!"

On the other hand, the GPU performance is actually about where we expect it to be. 420 MHz clocks on Drake's giant 12 SM design give you 1.3 TFLOPS, right at the Xbone, but running on Ampere's more modern architecture and feature set. This neatly aligns with the "Last Gen/PS4 In Handheld Mode + DLSS" description that Nate and others have mentioned.
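The 1.3 TFLOPS figure follows from the usual FP32 peak formula: SMs × FP32 cores per SM × 2 FLOPs per clock (one fused multiply-add) × clock. A minimal sketch, assuming Drake keeps the desktop Ampere layout of 128 FP32 CUDA cores per SM (the thread's assumption, not a confirmed spec):

```python
CORES_PER_SM = 128            # assumed: desktop-Ampere (GA10x) FP32 cores per SM
FLOPS_PER_CORE_PER_CLOCK = 2  # one fused multiply-add counts as 2 FLOPs


def fp32_tflops(sms: int, clock_mhz: float) -> float:
    """Peak FP32 throughput in TFLOPS for a given SM count and GPU clock."""
    return sms * CORES_PER_SM * FLOPS_PER_CORE_PER_CLOCK * clock_mhz * 1e6 / 1e12


for mode, mhz in [("handheld", 420), ("docked", 1000)]:
    print(f"12 SM @ {mhz} MHz ({mode}): {fp32_tflops(12, mhz):.2f} TFLOPS")
```

At 420 MHz this gives ~1.29 TFLOPS, in line with the Xbox One's ~1.31 TFLOPS, and the 1 GHz docked clock gives ~3.07 TFLOPS. Peak FLOPS says nothing about architectural efficiency, which is why the post stresses Ampere's newer feature set.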

Are these estimates out of hand to anyone smarter than me? Does quad-core A78 seem reasonable, and do those cores run at about a watt apiece at these clocks? If so, this seems to line up with everything we know and lots of things we expect. It doesn't require a die shrink or getting Ampere to run on a totally new process, it keeps the battery life right in line with the first Switch release, and it squares the circle of "because Nintendo" and "new Nintendo" pretty nicely.

It also puts Nintendo in a nice place for a Next NEXT Switch, especially if the 4 cpus are achieved via a hardware errata down from 8 - Nintendo can take the die shrink/process change that some have assumed has already happened, and ride it to the next phase of Switch evolution, rather than rebuilding on Nvidia Next and re-re-solving the BC problem.

On the other hand if a whole ARM Cortex-A cluster is simply so efficient that it can't account for ~4W then I think I'll have to concede that the 8nm process is dead - which opens up a lot of power options in terms of clocks, and number of CPU cores.

1.7 GHz is nice for the CPU... but with only three cores for games vs. seven 3.5 GHz cores dedicated to gaming on PS5/Series S, we're looking at an even wider CPU gap than last gen's Switch vs. PS4. If we had seven A78 cores at, say, 1.4 GHz each, the gap would be significantly narrower and would allow for more CPU-heavy ports, which there will be in due time. Even 7 cores at 1.0 GHz each would roughly match last gen's gap of ~3.5x. This of course doesn't account for multithreading (SMT), which Zen 2 has and the A78 doesn't.
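The comparison above is essentially cores × clock. A crude sketch of that proxy, using the post's own numbers (seven game-available 3.5 GHz cores on PS5/Series S); it deliberately ignores IPC and SMT, so treat the ratios as rough, and the helper name is hypothetical:

```python
def core_ghz(cores: int, ghz: float) -> float:
    """Crude CPU throughput proxy: game-available cores x clock (ignores IPC and SMT)."""
    return cores * ghz


current_gen = core_ghz(7, 3.5)  # PS5/Series S figure used in the post

drake_options = {
    "3 x A78 @ 1.7 GHz": core_ghz(3, 1.7),
    "7 x A78 @ 1.4 GHz": core_ghz(7, 1.4),
    "7 x A78 @ 1.0 GHz": core_ghz(7, 1.0),
}

# How many times more aggregate core-GHz the current-gen consoles have.
gaps = {name: current_gen / value for name, value in drake_options.items()}
for name, gap in gaps.items():
    print(f"{name}: current gen leads by ~{gap:.1f}x")
```

The three-core option comes out near 4.8x, the seven-core 1.4 GHz option near 2.5x, and the seven-core 1.0 GHz option at the ~3.5x the post attributes to last gen.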

I would gladly match the Switch's current GPU clock speeds (307 MHz and 768 MHz) if it meant we got 8 A78 CPU cores at above 1 GHz each. The GPU is easier to scale in games, but the CPU not so much...

It's also important to note that, besides higher clock speeds, the 32 GB AGX Orin module has:

- 256-bit RAM at 204.8 GB/s vs. 102.4 GB/s on Drake and the NX models

- 1,792 CUDA cores vs. 1,024 on NX and ~1,500 on Drake

- 6 cameras vs. 4 on the Orin NX models (likely won't be used at all)

- more AI TOPS than the NX models (200 vs. 70-100), which I don't know will be on Drake

Drake, at ~1,500 CUDA cores or fewer, will have more in common with the 8 and 16 GB NX modules that run at 10-25 watts than with the AGX models (32 GB running up to 40 watts).

I've suggested before that a Drake module could have a theoretical power draw of ~30 watts (in between the 32 GB AGX and the 16 GB NX), and that's before removing some of the AI hardware that won't be needed on Switch. So maybe 20-25 watts on 8nm? I'm seriously reaching here and haven't taken the power draw of the rest of the Switch's components into account, but it should be minimal compared to the SoC.

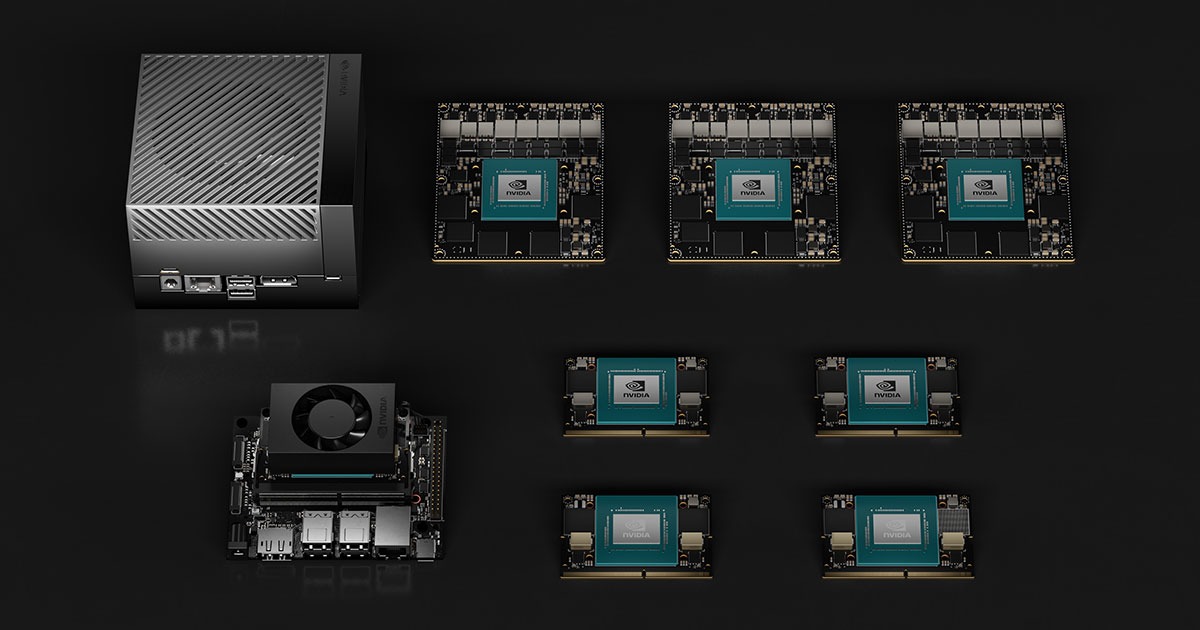

Looking at the picture you posted... with ~15% fewer CUDA cores, less RAM and bandwidth, and the AI junk that doesn't need to be there removed, maybe it's possible to get Drake to run eight A78s at 1.4 GHz and a 600-800 MHz GPU at 20 watts or less on an 8nm node? I'm seriously reaching with these estimates, of course.
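The "~15% fewer CUDA cores" estimate can be checked directly. A minimal sketch, assuming Drake has 12 SMs × 128 cores as discussed in this thread (not an official figure):

```python
AGX_ORIN_32GB_CUDA = 1792   # AGX Orin 32GB module
ORIN_NX_CUDA = 1024         # Orin NX 8GB/16GB modules
DRAKE_CUDA = 12 * 128       # assumed: 12 SMs x 128 cores, per the thread's discussion

fewer_than_agx = 1 - DRAKE_CUDA / AGX_ORIN_32GB_CUDA
more_than_nx = DRAKE_CUDA / ORIN_NX_CUDA

print(f"Drake vs AGX Orin 32GB: {fewer_than_agx:.0%} fewer CUDA cores")
print(f"Drake vs Orin NX: {more_than_nx:.1f}x the CUDA cores")
```

The result is about 14% fewer than the AGX module, consistent with the post's rounding, and 1.5x the NX modules' count.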

Look over there

Bob-omb

@oldpuck

Hmm, here's my stab at the A78 power usage on Samsung 8nm question.

First off, I'm assuming that Samsung's 4LPP is comparable to TSMC N5, so I'm subbing in 3 GHz @ 1 watt for 4LPP. I actually don't think that's the case, but I need a number to start with, and hey, maybe I was wrong and 4LPP actually is comparable to N5. So consider this an optimistic/best-case estimation.

Then, I take what Samsung claims in this slide from July 2022 at face value. Like some others here, that's not necessarily something I prefer to do, but for this exercise, fine.

Working backwards from 4LPP to get to 8LPP, take the 3 GHz @ 1 watt, then go 3/(1.11*1.11*1.09) ~= 2.23 GHz @ 1 watt.

Given that, my guess for how much power 1.7 GHz would require would then be...

2.23/1.7 ~= 1.31 (ratio of the two frequencies)

1.31^2 ~= 1.72 (the relative increase in power to go from 1.7 GHz to ~2.23 GHz, assuming power scales with the square of frequency)

1/1.72 ~= 0.58

So, at best, 0.58 watts to get a single A78 to 1.7 GHz on Samsung's 8nm node. It's more likely to be a bit higher than that. Probably fair to round it up to 0.6 watts?
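The chain of estimates above can be reproduced in a few lines. A minimal sketch that takes the same assumptions as the post (ARM's 3 GHz @ 1 W A78 figure carries over to 4LPP, Samsung's per-step gains of 11%, 11% and 9% hold at iso-power, and power scales with the square of frequency); the function names are hypothetical:

```python
REF_GHZ_AT_1W = 3.0              # ARM's A78 @ 1 W figure, assumed to apply to 4LPP
NODE_GAINS = (1.11, 1.11, 1.09)  # Samsung's claimed per-step gains, taken at face value


def ghz_at_1w_on_8lpp(ref_ghz: float = REF_GHZ_AT_1W) -> float:
    """Walk back from 4LPP to 8LPP by undoing each node step's frequency gain."""
    ghz = ref_ghz
    for gain in NODE_GAINS:
        ghz /= gain
    return ghz


def watts_at(target_ghz: float, ref_ghz: float, ref_watts: float = 1.0,
             exponent: float = 2.0) -> float:
    """Scale power from a reference point, assuming P is proportional to f**exponent."""
    return ref_watts * (target_ghz / ref_ghz) ** exponent


ref = ghz_at_1w_on_8lpp()
a78_1p7_w = watts_at(1.7, ref)
print(f"8LPP reference: ~{ref:.2f} GHz @ 1 W")
print(f"One A78 @ 1.7 GHz: ~{a78_1p7_w:.2f} W")
```

This gives roughly 2.23 GHz and 0.58 W. The exponent is the weakest assumption: near the bottom of the voltage/frequency curve, where voltage can't drop much further, power falls closer to linearly with frequency, and `watts_at(1.7, ref, exponent=1.0)` gives ~0.76 W instead.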

Alovon11

Like Like

- Pronouns

- He/Them

CPU: 4 Core Cortex A78 @ 1.7 GHz

GPU: 12 SM custom Ampere @ 420 MHz/1GHz

RAM: 12GB LPDDR5

(CPU speed pulled from the Orin config as a comfortable place on the power curve to bump to; docked GPU speed roughly maintains the existing handheld/docked ratio)

This... is a very Nintendo machine. Compared to the PS4/Xbone it beats them handily on RAM, barely outperforms the GPUs at the margins, and skimps on the CPU. Which is exactly how you would describe the Switch relative to the PS3/360, or the Wii to the OG Xbox. I think lots of folks would look at those GPU clocks in handheld and scream "because Nintendo!"

On the other hand, the GPU performance is actually about where we expect it to be. 420 MHz clocks on Drake's giant 12 SM design give you 1.3 TFLOPS, right at the Xbone, but running on Ampere's more modern architecture and feature set. This neatly aligns with the "Last Gen/PS4 In Handheld Mode + DLSS" description that Nate and others have mentioned.

Are these estimates out of hand to anyone smarter than me? Does quad-core A78 seem reasonable, and do those cores run at about a watt apiece at these clocks? If so, this seems to line up with everything we know and lots of things we expect. It doesn't require a die shrink or getting Ampere to run on a totally new process, it keeps the battery life right in line with the first Switch release, and it squares the circle of "because Nintendo" and "new Nintendo" pretty nicely.

It also puts Nintendo in a nice place for a Next NEXT Switch, especially if the 4 cpus are achieved via a hardware errata down from 8 - Nintendo can take the die shrink/process change that some have assumed has already happened, and ride it to the next phase of Switch evolution, rather than rebuilding on Nvidia Next and re-re-solving the BC problem.

On the other hand if a whole ARM Cortex-A cluster is simply so efficient that it can't account for ~4W then I think I'll have to concede that the 8nm process is dead - which opens up a lot of power options in terms of clocks, and number of CPU cores.

A critical problem with 420 MHz on Drake's GPU being the "high end", as you seemingly imply: Ampere's power curve bottoms out at 300 MHz.

The system as depicted in that context would have pretty much no change between Portable and Docked modes...

Also, there's a reasonability problem with the 4× A78 config: you'd be wasting one of those precious CPU cores (cores that, at that clock, would be pushing closer to Steam Deck CPU performance) on the very minimal OS, so they'd be better off doing 4× A78 + 4× A55 or 6+ A78Cs.

Also, Orin's power characteristics are just strange in general, and that may have something to do with the PVA or with Ampere's power curve in an SoC design as is.

Codename_Steam

Cappy

- Pronouns

- He/Him

Could 2.0 GHz+ be possible on the CPU? (TSMC 5nm)

Look over there

Bob-omb

Possible? Sure.

Taking ARM's claim of 3 GHz @ 1 watt on TSMC N5 at face value, it's about 4/9ths of a watt for an A78 to hit 2 GHz (again assuming power scales with the square of frequency: (2/3)^2 = 4/9). Then multiply that by the number of cores and see if it fits within the power budget you have in mind.

For example, with the budget I have in mind, going above 2 GHz per core means settling for a quad A78 setup. If I want a hexa-core setup, I'd have to stop at exactly 2 GHz, and I'd still need either N5P (-10% power) or N4 (-9% power) to cut it just enough to get within the upper bound of what I'm willing to tolerate (2-2.5 watts, with a preference for the lower end*).

But everyone has different targets in mind.

*The OG Switch's CPU power budget can be estimated at ~1.83 watts, or close enough to it. So when I speculate for fun, I like to at least try to stick to 2 watts. 2.5 is my concession for "ehhh, maybe there's a bit more leeway given battery density improvements".
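The same square-law assumption drives these N5 numbers. A minimal sketch, taking ARM's 3 GHz @ 1 W claim and the post's -10%/-9% power figures for N5P/N4 at face value (helper names are hypothetical):

```python
N5_GHZ_AT_1W = 3.0  # ARM's A78-on-TSMC-N5 claim, taken at face value
NODE_POWER_SCALE = {"N5": 1.00, "N5P": 0.90, "N4": 0.91}  # relative power, per the post
BUDGET_W = (2.0, 2.5)  # the poster's tolerable CPU budget range


def a78_watts(ghz: float) -> float:
    """Per-core power on N5, assuming P scales with f**2 from 3 GHz @ 1 W."""
    return (ghz / N5_GHZ_AT_1W) ** 2


def cluster_watts(cores: int, ghz: float, node: str = "N5") -> float:
    """Total CPU cluster power for identical cores on the given node."""
    return cores * a78_watts(ghz) * NODE_POWER_SCALE[node]


for node in NODE_POWER_SCALE:
    watts = cluster_watts(6, 2.0, node)
    verdict = "within" if watts <= BUDGET_W[1] else "over"
    print(f"6 x A78 @ 2.0 GHz on {node}: {watts:.2f} W ({verdict} the {BUDGET_W[1]} W ceiling)")
```

One core at 2 GHz is 4/9 W (~0.44 W); six of them on plain N5 come to ~2.67 W, just over the 2.5 W ceiling, while N5P or N4 bring the cluster to ~2.40 W or ~2.43 W. For comparison, a quad cluster at an illustrative 2.2 GHz lands around 2.15 W on plain N5 under the same assumption.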

ShadowFox08

Paratroopa

Could 2.00Ghz+ be possible on the CPU? (TSMC 5NM)

It should be able to. But it's hard to say what the GPU clocks would be.

Looking at @oldpuck's picture, I would have expected the AGX 32 GB model to run at its max clocks of 2+ GHz CPU and 920 MHz GPU at 40 watts on 8nm. But it's only at 75% of CPU performance and 90% of GPU, and that's without accounting for the AI hardware that might not be needed. Jumping from Samsung 8nm to TSMC 5nm should allow a 2 GHz CPU and 900 MHz GPU with reduced power draw.

I think the highest Mariko Switch CPU and GPU settings that ran safely and consistently were 1.7-1.9 GHz CPU and 921 MHz GPU.

A critical problem with 420 MHz on Drake's GPU being the "high end", as you seemingly imply: Ampere's power curve bottoms out at 300 MHz.

The system as depicted in that context would have pretty much no change between Portable and Docked modes...

Also, there's a reasonability problem with the 4× A78 config: you'd be wasting one of those precious CPU cores (cores that, at that clock, would be pushing closer to Steam Deck CPU performance) on the very minimal OS, so they'd be better off doing 4× A78 + 4× A55 or 6+ A78Cs.

Also, Orin's power characteristics are just strange in general, and that may have something to do with the PVA or with Ampere's power curve in an SoC design as is.

If Drake runs at just 1.5 GHz on 7 cores for games, it would match the Steam Deck in single-core performance...

Z0m3le

Bob-omb

Possible? Sure.

Taking ARM's claim of 3 GHz @ 1 watt on TSMC N5 at face value, it's about 4/9ths of a watt for an A78 to hit 2 GHz (again assuming power scales with the square of frequency: (2/3)^2 = 4/9). Then multiply that by the number of cores and see if it fits within the power budget you have in mind.

For example, with the budget I have in mind, going above 2 GHz per core means settling for a quad A78 setup. If I want a hexa-core setup, I'd have to stop at exactly 2 GHz, and I'd still need either N5P (-10% power) or N4 (-9% power) to cut it just enough to get within the upper bound of what I'm willing to tolerate (2-2.5 watts, with a preference for the lower end*).

But everyone has different targets in mind.

*The OG Switch's CPU power budget can be estimated at ~1.83 watts, or close enough to it. So when I speculate for fun, I like to at least try to stick to 2 watts. 2.5 is my concession for "ehhh, maybe there's a bit more leeway given battery density improvements".

Not only battery density improvements, but also the heavy optimization that Nvidia's upscaling technology offers, mean that the CPU/GPU budget, compared with the TX1, will lean towards the CPU more than it did before. I'd confidently say the CPU power budget should be at least 50% higher, and that doesn't include battery improvements. Drake's CPU should draw 3 watts IMO; in fact, I think portable mode should target 10 watts on the low end (Erista was 7.1 to 9 watts depending on brightness and wireless).

The battery should be ~35% bigger, so power draw can increase by about that much, which puts the low end at around 9.6 watts (7.1 W × 1.35).
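The 9.6 W figure is the low end of Erista's handheld draw scaled by the assumed battery growth, and the 3 W CPU figure is roughly the ~1.83 W OG Switch CPU estimate plus the "50% more minimum". A minimal sketch of both, using only numbers from these posts (the helper name is hypothetical):

```python
ERISTA_HANDHELD_W = (7.1, 9.0)  # Erista handheld draw range quoted in the post
OG_SWITCH_CPU_W = 1.83          # estimated OG Switch CPU budget, from earlier in the thread
BATTERY_GROWTH = 0.35           # assumed ~35% larger battery
CPU_BUDGET_GROWTH = 0.50        # "50% more minimum" for the CPU budget


def scaled(watts: float, growth: float) -> float:
    """Scale a power figure by a fractional increase."""
    return watts * (1 + growth)


portable_low_w = scaled(ERISTA_HANDHELD_W[0], BATTERY_GROWTH)
cpu_w = scaled(OG_SWITCH_CPU_W, CPU_BUDGET_GROWTH)
print(f"Portable low end: ~{portable_low_w:.1f} W")
print(f"CPU budget: ~{cpu_w:.1f} W")
```

The CPU line comes out near 2.7 W as a minimum, which the post rounds up to a 3 W target.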

Please read this staff post before posting.

Furthermore, according to this follow-up post, all off-topic chat will be moderated.
